Creating a sentiment analysis model with Scrapy and MonkeyLearn

Data is everywhere. And in massive quantities. We are currently in an era of data explosion, where millions of tweets, articles, comments, reviews and the like are being published every day.

Developers are taking advantage of the abundance of data and using things like web scraping to do all kinds of cool things. Sometimes web scraping is not enough; digging deeper and analyzing the data is often needed to unlock the true meaning behind the data and discover valuable insights.

In this tutorial we will cover how you can use MonkeyLearn and Scrapy to build a machine learning model that will help you analyze vast amounts of web scraped data in a cost-effective way.

Getting started

We will use Scrapy to extract hotel reviews from TripAdvisor and use those reviews as training samples to create a machine learning model with MonkeyLearn. This model will learn to detect if a hotel review is positive or negative and will be able to understand the sentiment of new and unseen hotel reviews.

1. Create a Scrapy spider

The first step is to scrape hotel reviews from TripAdvisor by creating a spider:

New to Scrapy? If you have never used Scrapy before, visit this article. It's very powerful yet easy to use, and will allow you to start building web scrapers in no time.

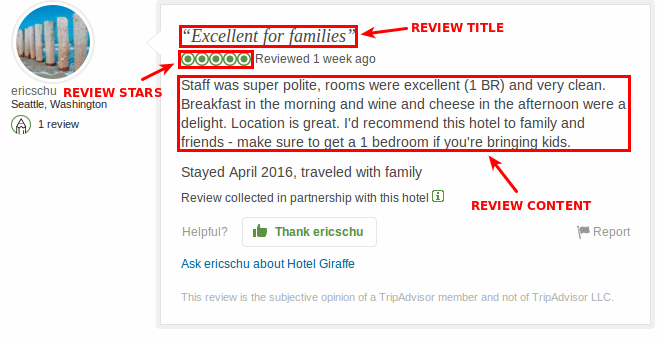

Choose the data you want to scrape with Scrapy In this tutorial we will use New York City hotel reviews to create our hotel sentiment analysis classifier. In our case we will extract the review title, the review content and the stars:

A TripAdvisor hotel review breakdown

Why the stars? In order to train MonkeyLearn models, we need data that is already tagged, so the algorithm knows what a positive or a negative review actually looks like. Luckily the reviewers were kind enough to provide us with this information, in the form of stars.

To save the data, we will define a Scrapy item with three fields: "title", "content" and "stars":

import scrapy

class HotelSentimentItem(scrapy.Item):
    title = scrapy.Field()
    content = scrapy.Field()
    stars = scrapy.Field()

We also create a spider for filling in these items. We give it the start URL of the New York Hotels page.

import scrapy

from hotel_sentiment.items import HotelSentimentItem

class TripadvisorSpider(scrapy.Spider):
    name = "tripadvisor"
    start_urls = [
        "https://www.tripadvisor.com/Hotels-g60763-New_York_City_New_York-Hotels.html"
    ]

Then, we define a function for parsing a single review and saving its data:

    def parse_review(self, response):
        item = HotelSentimentItem()
        # Strip the quotes (first and last char) from the title
        item['title'] = response.xpath('//div[@class="quote"]/text()').extract()[0][1:-1]
        item['content'] = response.xpath('//div[@class="entry"]/p/text()').extract()[0]
        item['stars'] = response.xpath('//span[@class="rate sprite-rating_s rating_s"]/img/@alt').extract()[0]
        return item
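The `[1:-1]` slice above always drops the first and last character, even when the title arrives without surrounding quotes. A minimal sketch of a more forgiving cleanup is shown below; `clean_title` and `QUOTE_CHARS` are hypothetical names that are not part of the original spider, and the set of quote characters is an assumption about what the page might contain.

```python
# Hypothetical helper: straight and curly quote characters we are willing to strip.
QUOTE_CHARS = '"\'\u201c\u201d\u2018\u2019'

def clean_title(raw):
    """Trim whitespace, then remove any run of quote characters from both ends."""
    return raw.strip().strip(QUOTE_CHARS).strip()

print(clean_title('\u201cGreat location, tiny room\u201d'))
print(clean_title('No quotes here'))
```

Inside `parse_review`, the extracted title string could be passed through a helper like this instead of being sliced.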

Afterwards, we define a function for parsing a page of reviews and then moving on to the next page. You'll notice that on the reviews page we can't see the whole review content, just the beginning. We will work around this by following the link to the full review and scraping the data from that page using parse_review:

    def parse_hotel(self, response):
        # Follow the link to the full text of each review on this page
        for href in response.xpath('//div[@class="quote"]/a/@href'):
            url = response.urljoin(href.extract())
            yield scrapy.Request(url, callback=self.parse_review)

        # Then move on to the next page of reviews, if there is one
        next_page = response.xpath('//div[@class="unified pagination "]/child::*[2][self::a]/@href')
        if next_page:
            url = response.urljoin(next_page[0].extract())
            yield scrapy.Request(url, self.parse_hotel)

Finally, we define the main parse function, which will start at the New York hotels main page, and for each hotel it will parse all its reviews:

    def parse(self, response):
        # Follow the link to each hotel listed on this page
        for href in response.xpath('//div[@class="listing_title"]/a/@href'):
            url = response.urljoin(href.extract())
            yield scrapy.Request(url, callback=self.parse_hotel)

        # Then move on to the next page of hotels, if there is one
        next_page = response.xpath('//div[@class="unified pagination standard_pagination"]/child::*[2][self::a]/@href')
        if next_page:
            url = response.urljoin(next_page[0].extract())
            yield scrapy.Request(url, self.parse)

So, to review: we told our spider to start at the New York hotels main page, follow the links to each hotel, follow the links to each review, and scrape the data. After it is done with each page it will get the next one, so it will be able to crawl as many reviews as we need.
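Every link the spider follows is first passed through `response.urljoin`, because the `href` values on a page are often relative. The standard library's `urljoin` resolves links in a similar way, so it works as a stand-in to see what happens. The relative path below is a made-up example, not a real TripAdvisor URL.

```python
from urllib.parse import urljoin

# The page the spider is currently on (the start URL from the tutorial).
page_url = "https://www.tripadvisor.com/Hotels-g60763-New_York_City_New_York-Hotels.html"

# Hypothetical relative link, as it might appear in an href attribute.
relative_href = "/Hotel_Review-example.html"

# A relative link is resolved against the current page.
absolute = urljoin(page_url, relative_href)
print(absolute)

# A link that is already absolute is returned unchanged.
print(urljoin(page_url, absolute))
```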

You can view the full code for the spider here.

2. Getting the data

Now that our Scrapy spider is created, we are ready to start crawling and gathering the data.

We tell it to crawl with:

scrapy crawl tripadvisor -o scrapyData.csv -s CLOSESPIDER_ITEMCOUNT=10000

This will scrape about 10,000 TripAdvisor New York City hotel reviews and save them in a CSV file named scrapyData.csv. The spider closes once the item count is reached, so requests already in progress may add a few extra reviews. With that many reviews, it may take a while to finish. Feel free to change the amount if you need.
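Before moving on, it can help to check that the crawl produced what we expect. The sketch below uses only the standard library; `summarize_reviews` is a hypothetical helper, and the sample rows are invented for illustration. It assumes the CSV has a header row with the three item fields.

```python
import csv
import io

EXPECTED_FIELDS = ('title', 'content', 'stars')

def summarize_reviews(csv_file):
    """Return (total rows, rows with an empty expected field, column names)."""
    reader = csv.DictReader(csv_file)
    total = 0
    incomplete = 0
    for row in reader:
        total += 1
        # A field counts as empty when it is missing or only whitespace.
        if any(not (row.get(name) or '').strip() for name in EXPECTED_FIELDS):
            incomplete += 1
    return total, incomplete, reader.fieldnames

# Invented sample standing in for scrapyData.csv.
sample = io.StringIO(
    'content,stars,title\r\n'
    'Lovely stay,5 of 5 stars,Great\r\n'
    ',1 of 5 stars,Awful\r\n'
)
print(summarize_reviews(sample))
```

For the real file, open it with `open('scrapyData.csv', newline='', encoding='utf-8')` and pass the file object to the same function.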

3. Preparing the data

Now that we have generated our scrapyData.csv file, it's time to preprocess the data. We'll do that with Python and the Pandas library.

First, we import the CSV file into a data frame, remove duplicates, and drop the reviews that are neutral (3 of 5 stars):

import pandas as pd

# We use the Pandas library to read the contents of the scraped data
# obtained by Scrapy
df = pd.read_csv('scrapyData.csv', encoding='utf-8')

# Now we remove duplicate rows (reviews)
df.drop_duplicates(inplace=True)

# Drop the reviews with 3 stars, since we're doing Positive/Negative
# sentiment analysis.
df = df[df['stars'] != '3 of 5 stars']

Then we create a new column that concatenates the title and the content:

# We want to use both the title and content of the review to
# classify, so we merge them both into a new column.
df['full_content'] = df['title'] + '. ' + df['content']

Then we create a new column with the value we want to predict: Good or Bad. We transform reviews with more than 3 stars into Good, and reviews with fewer than 3 stars into Bad:

def get_class(stars):
    # The rating looks like '4 of 5 stars', so the first character is the score
    score = int(stars[0])
    if score > 3:
        return 'Good'
    else:
        return 'Bad'

# Transform the number of stars into Good and Bad tags.
df['true_category'] = df['stars'].apply(get_class)
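Note that `get_class` relies on two things: the rating string starts with a single digit, and the 3-star reviews were already dropped (otherwise they would silently become Bad). A stricter variant might look like the sketch below; `parse_stars` and `get_class_strict` are hypothetical names, and the assumed label format is the '4 of 5 stars' style shown earlier.

```python
import re

def parse_stars(label):
    """Return the leading integer of a rating label such as '4 of 5 stars'."""
    match = re.match(r'\s*(\d+)', label)
    if match is None:
        raise ValueError(f'unrecognized rating label: {label!r}')
    return int(match.group(1))

def get_class_strict(label):
    """Map a rating label to 'Good' or 'Bad', refusing neutral ratings."""
    score = parse_stars(label)
    if score == 3:
        raise ValueError('neutral reviews should be dropped before tagging')
    return 'Good' if score > 3 else 'Bad'

print(get_class_strict('5 of 5 stars'))
print(get_class_strict('1 of 5 stars'))
```

It can be applied in the same way, with `df['stars'].apply(get_class_strict)`.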

We'll keep only the full_content and true_category columns:

df = df[['full_content', 'true_category']]

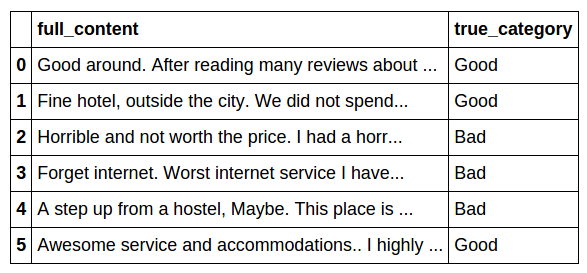

If we take a look at the data frame we created it may look something like this:

For a quick overview of the data, we can count the reviews per tag. We have 4,913 Good reviews and 4,501 Bad reviews:

# Count the reviews in each sentiment category
df['true_category'].value_counts()

Good    4913
Bad     4501
dtype: int64

This looks about right. If you have too few reviews for a particular tag (for instance, 9,000 Good and 1,000 Bad), it could have a negative impact on the training of your model. To fix this, scrape more bad reviews: run the spider again, for a longer time, then get only the bad reviews and mix them with the data you already have. Or you could find hotels with mostly bad reviews and scrape those.
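One rough way to put a number on "about right" is the ratio between the smallest and the largest tag. The sketch below is only an illustration: `balance_ratio` is a hypothetical helper, and what ratio counts as too low is a judgment call that this tutorial does not define.

```python
from collections import Counter

def balance_ratio(labels):
    """Return (counts per tag, smallest tag size divided by largest tag size)."""
    counts = Counter(labels)
    if not counts:
        raise ValueError('no labels given')
    sizes = counts.values()
    return counts, min(sizes) / max(sizes)

# The counts from our data frame.
labels = ['Good'] * 4913 + ['Bad'] * 4501
counts, ratio = balance_ratio(labels)
print(counts, round(ratio, 2))

# The unbalanced example from the text: 9,000 Good and 1,000 Bad.
_, skewed = balance_ratio(['Good'] * 9000 + ['Bad'] * 1000)
print(round(skewed, 2))
```

With a data frame, the same function can be called as `balance_ratio(df['true_category'])`.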

Finally, we have to save our dataset as a CSV or Excel file so we can upload it to MonkeyLearn to train our classifier. To train our model we only need the content of the reviews and the corresponding tags, so we remove the headers and the index column. We also encode the file in UTF-8:

# Write the data into a CSV file
df.to_csv('scrapyData_MonkeyLearn.csv', header=False, index=False, encoding='utf-8')
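Since the upload expects only the text and its tag, it is worth checking the file before sending it. The following is a sketch using the standard library; `find_bad_rows` is a hypothetical helper, and the sample rows (including a stray header line) are invented to show what it flags.

```python
import csv
import io

ALLOWED_TAGS = {'Good', 'Bad'}

def find_bad_rows(csv_file):
    """Return the 1-based numbers of rows that are not (text, Good|Bad) pairs."""
    bad = []
    for number, row in enumerate(csv.reader(csv_file), start=1):
        if len(row) != 2 or not row[0].strip() or row[1] not in ALLOWED_TAGS:
            bad.append(number)
    return bad

# Invented sample: the second row is a header that should not be there.
sample = io.StringIO(
    '"Great stay. Loved the room, and the staff.",Good\r\n'
    'full_content,true_category\r\n'
    '"Never again. Dirty and loud.",Bad\r\n'
)
print(find_bad_rows(sample))
```

For the real file, open scrapyData_MonkeyLearn.csv with `newline=''` and `encoding='utf-8'` and pass the file object in. An empty list means every row has the expected shape.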

4. Creating a text classifier

OK, now it's time to move to MonkeyLearn. We want to create a text classifier that classifies reviews into two possible tags: Good or Bad. This process is known as sentiment analysis, that is, identifying the mood of a piece of text.

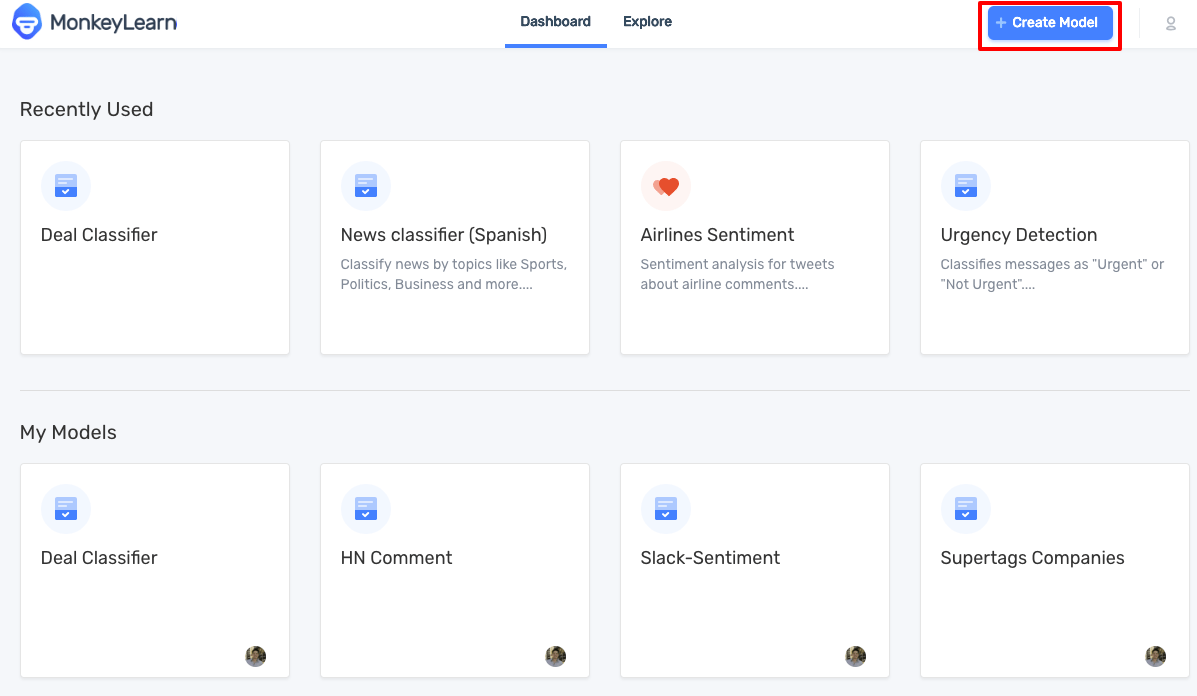

First, you have to sign up for Monkeylearn, and after you log in you will see the main dashboard. MonkeyLearn has public models created by the MonkeyLearn team trained for specific tasks, but it also allows you to create your own custom model to fit your needs. In this case, you'll build a custom text classifier, so click the Create Model button:

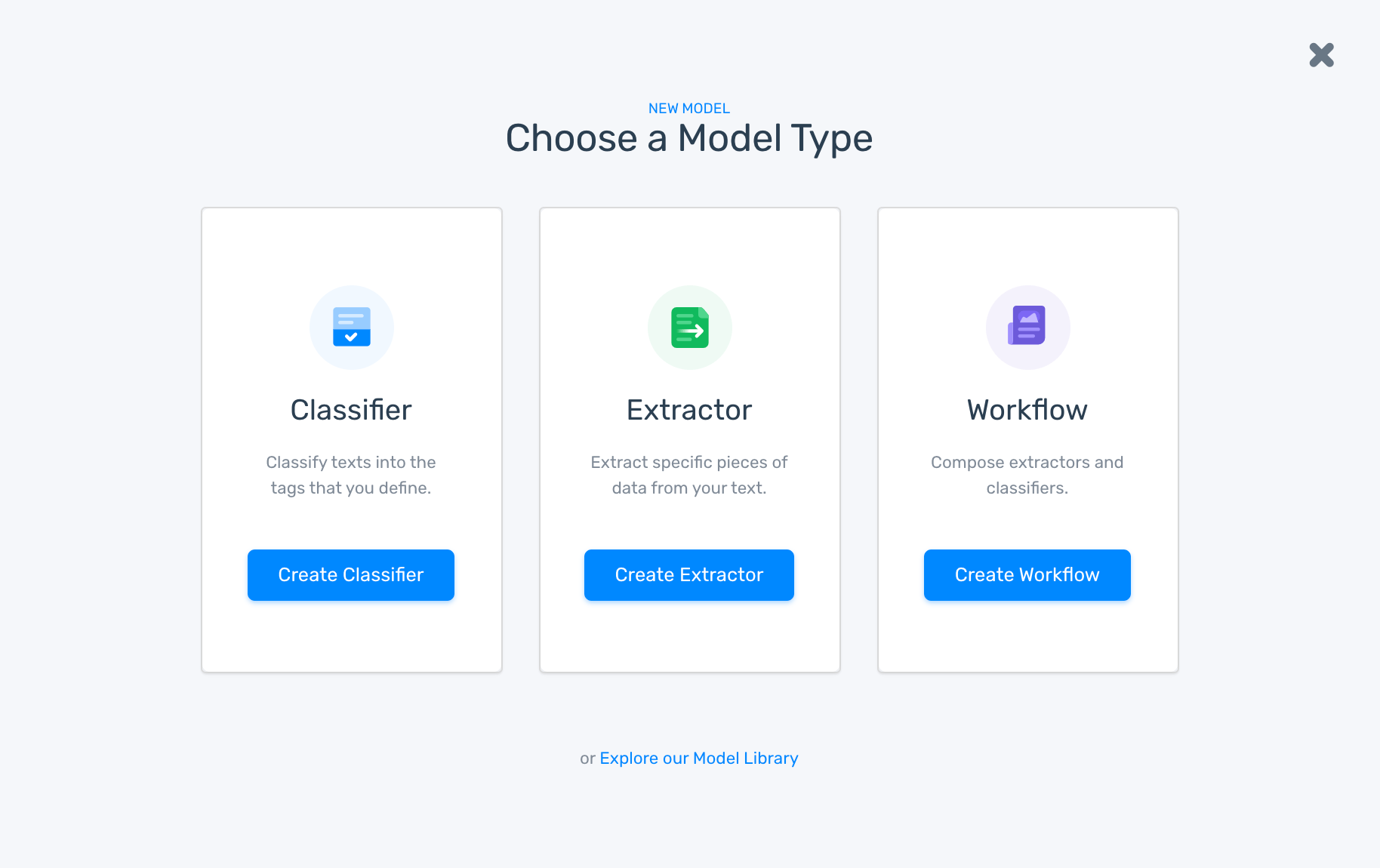

Now you'll be prompted to choose a Model Type. Choose Classifier since we want to classify texts using 'Good' or 'Bad' tags:

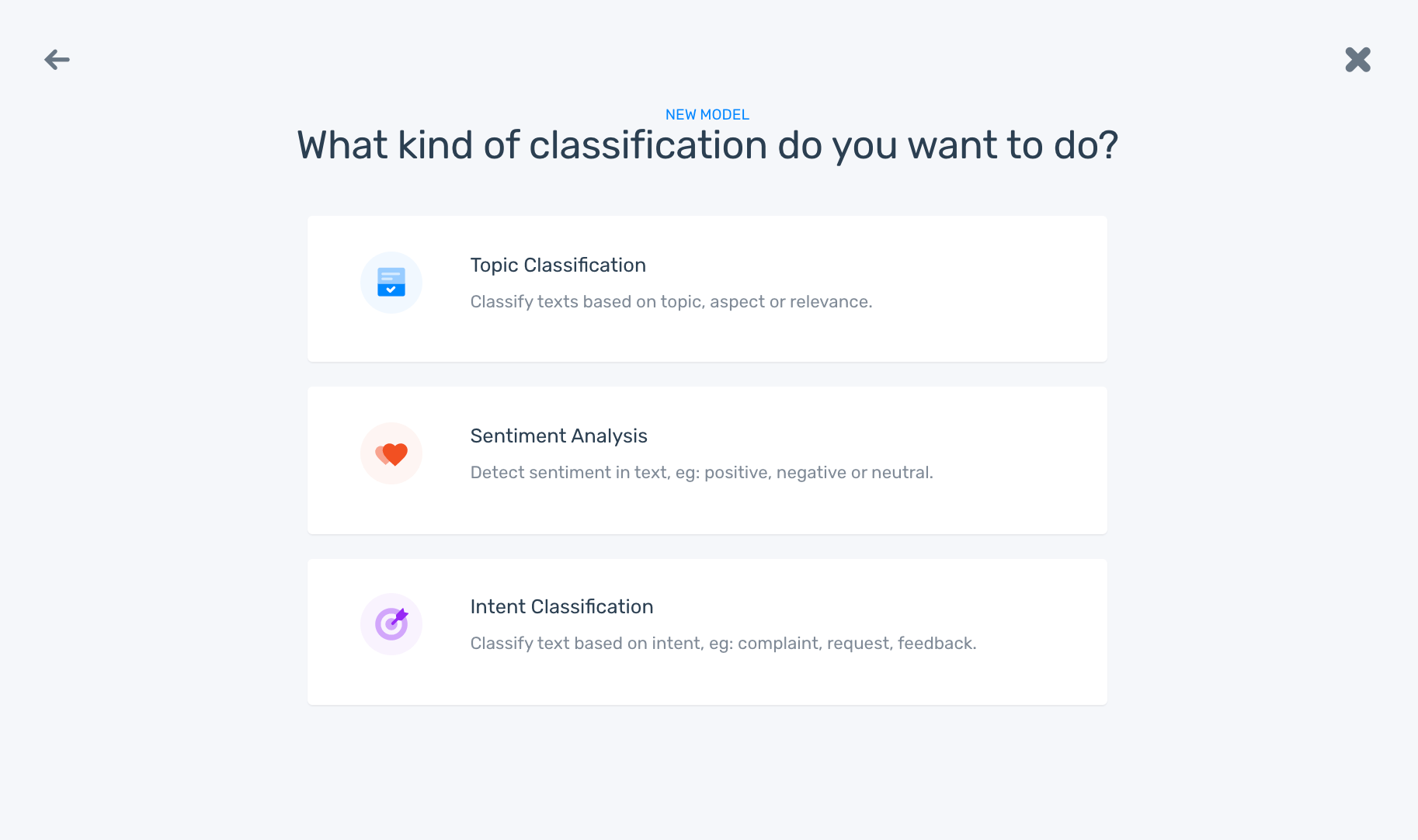

Now you'll be asked to define what type of classification you want to do. Choose Sentiment analysis:

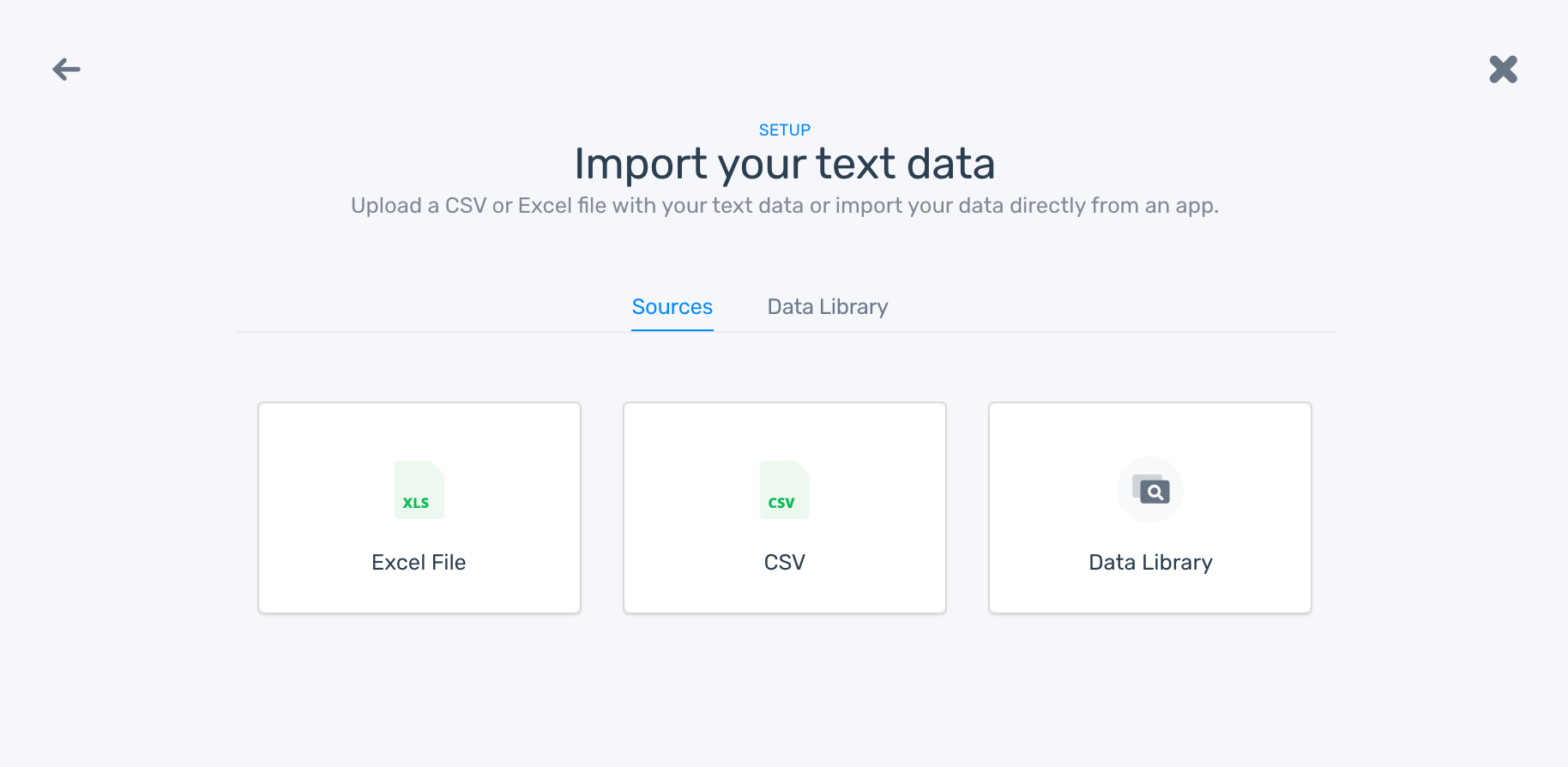

Now it's time to feed the model with the data you just scraped. As your text data is already tagged as 'Good' or 'Bad, you have to click on Upload already tagged data:

Finally, you are prompted to name your new model. And voilà, MonkeyLearn will create the model and train it for the first time. You can test it out right away or keep training the model in order to make it more accurate.

5. Testing your classifier

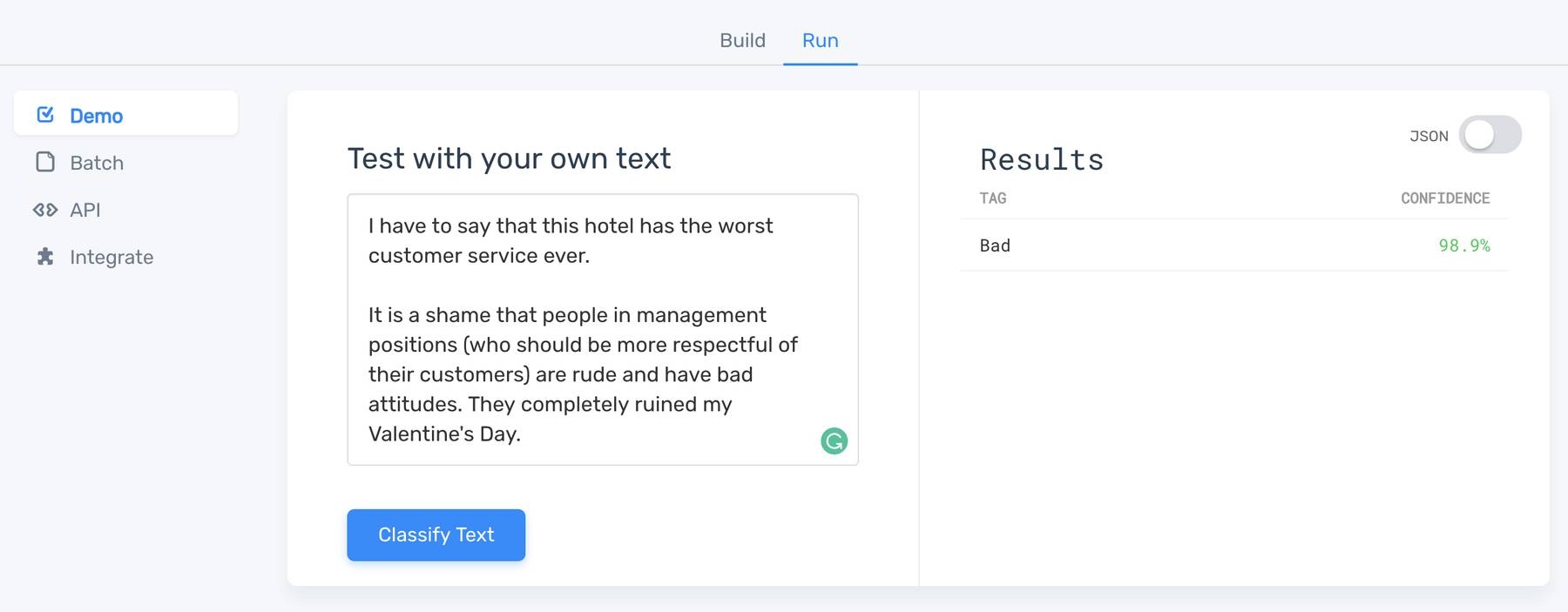

Click on Test it so you can test the model directly from the user interface. You can write or paste a text, click Classify Text and you'll get the prediction:

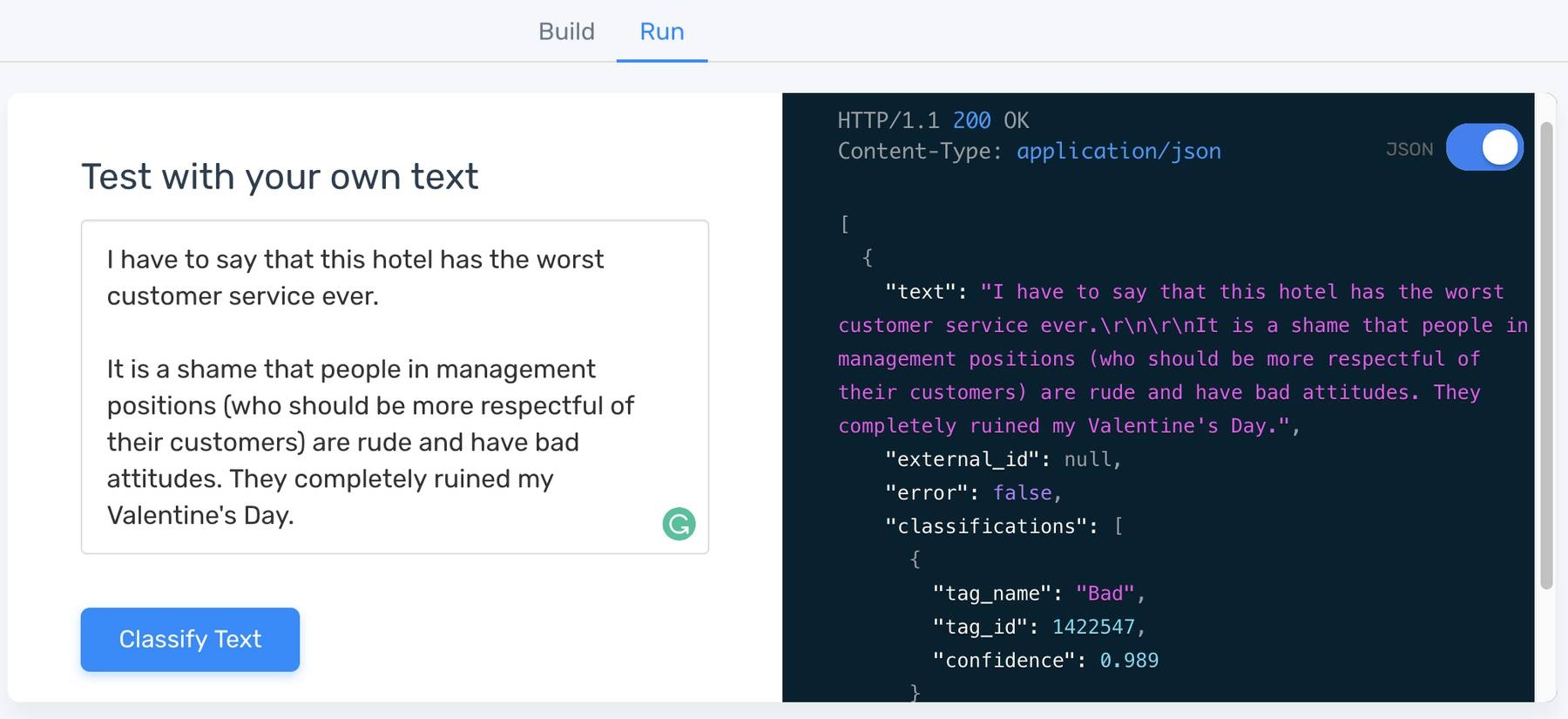

For this example, the model made a prediction and tagged the text as 'Bad' with a 98% confidence. Click on Json to see how the MonkeyLearn API would respond:

Your classifier may still make some errors, that is, classify good reviews as bad and vice versa. The good thing is that you can keep improving it: if you gather more training samples with tools like Scrapy (in our example, by getting reviews from more cities), you can upload them to the classifier, retrain and improve the results. You can also try different configurations in the Settings of your classifier and retrain the algorithm.

If you want to look at the finished classifier, we created a public model for hotel sentiment analysis.

6. Integrating the model using the MonkeyLearn API

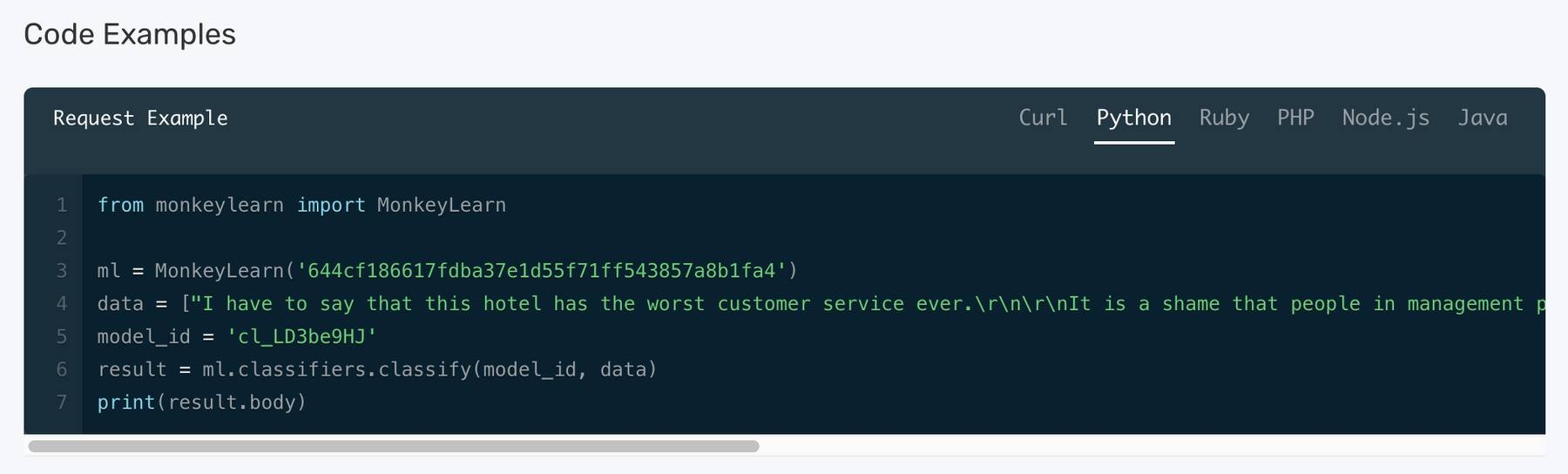

You can easily integrate any MonkeyLearn model with your projects using any programming language. For example, if you're working with Python, you can go to Run, then click on API, select the corresponding programming language and copy and paste the code snippet:
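Whatever snippet the dashboard gives you, with a large number of reviews you will probably want to send them in batches rather than one at a time. The sketch below shows only that batching logic. `classify_batch` stands in for the copied API snippet, `fake_classify_batch` is a toy replacement so the example runs offline, and the default batch size of 200 is an arbitrary assumption, not a documented MonkeyLearn limit.

```python
def batches(items, size):
    """Yield consecutive slices of `items`, each with at most `size` elements."""
    if size < 1:
        raise ValueError('size must be at least 1')
    for start in range(0, len(items), size):
        yield items[start:start + size]

def classify_all(texts, classify_batch, size=200):
    """Run `classify_batch` over `texts` in chunks and return results in order."""
    results = []
    for chunk in batches(texts, size):
        results.extend(classify_batch(chunk))
    return results

def fake_classify_batch(chunk):
    """Toy stand-in for the real API call: tags a text 'Bad' if it mentions dirt."""
    return ['Bad' if 'dirty' in text.lower() else 'Good' for text in chunk]

reviews = ['Dirty room', 'Great view', 'Dirty sheets']
print(classify_all(reviews, fake_classify_batch, size=2))
```

To use it for real, replace `fake_classify_batch` with a function that wraps the snippet from the API tab and returns one result per input text.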

Conclusion

In this tutorial, we learned how to use Scrapy and MonkeyLearn for training a machine learning model that can analyze millions of reviews and predict their sentiment. With just a few lines of code, we can easily understand how customers feel about hotels in NY. Do they like the rooms? Do they hate the service? How do they compare to hotels in San Francisco?

Got any cool ideas on how to use Scrapy and MonkeyLearn? Share them with us in the comments.

Bruno Stecanella

May 3rd, 2016