How GlassDollar is using MonkeyLearn to better connect founders & investors

Guest post by Jan Hoekman, co-founder of GlassDollar.

Fundraising is an important step when building a startup. While the money certainly supports the business, the month-long process of obtaining it can be detrimental to the development of the company and in some cases even threaten its survival.

After having gone through this arduous experience ourselves, we were inspired to create GlassDollar to allow founders to find relevant investors in seconds, and for free.

Our core challenge of matching investors with founders is essentially solving a relevancy problem: given specific information about a founder's startup, which investors would be most suitable as well as interested in the startup?

This post explains how we have been using MonkeyLearn to help us save time preparing our data and improve the quality of matches we make between investors and founders. Let’s get started!

Finding relevance between startups and investors

We obtain data from various sources (APIs, scraping, etc.) and then clean and aggregate it in our database. Afterward, we analyze the portfolio of each investor (i.e. what industries Investor X invests in, at what stage, etc.). Finally, we compare that data to broader trends in investment activity that we see across the entire dataset.

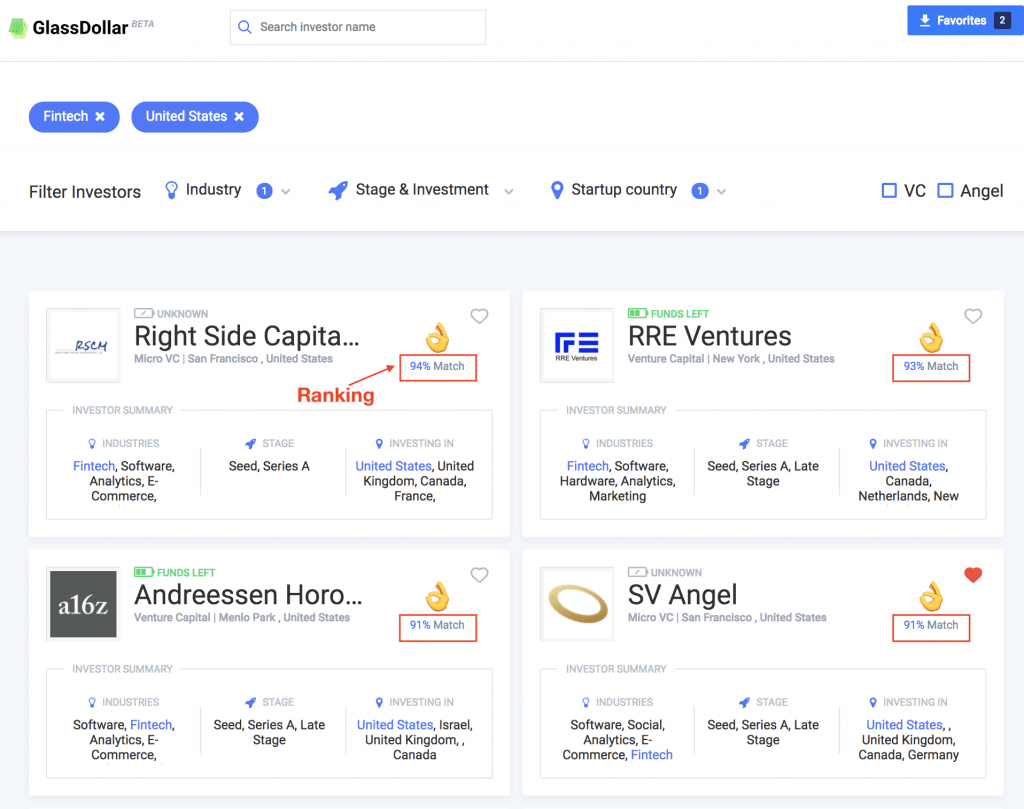
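As an illustration only (a simplified sketch, not the actual GlassDollar pipeline), comparing one investor's portfolio to the wider dataset could look like the snippet below. The field names, the toy data, and the "lift" measure are all assumptions made for this example.

```python
from collections import Counter

def industry_shares(companies):
    """Return each industry's share of all industry tags in `companies`."""
    counts = Counter(tag for company in companies for tag in company["industries"])
    total = sum(counts.values())
    return {industry: n / total for industry, n in counts.items()} if total else {}

def industry_lift(portfolio, all_companies):
    """Compare an investor's industry mix to the dataset-wide mix.

    A lift above 1.0 means the investor backs that industry more often
    than the dataset as a whole does.
    """
    baseline = industry_shares(all_companies)
    own = industry_shares(portfolio)
    return {
        industry: share / baseline[industry]
        for industry, share in own.items()
        if baseline.get(industry)  # skip industries unseen in the baseline
    }

# Hypothetical toy data: four companies, one investor holding two of them.
all_companies = [
    {"name": "A", "industries": ["Fintech"]},
    {"name": "B", "industries": ["Fintech"]},
    {"name": "C", "industries": ["Education"]},
    {"name": "D", "industries": ["Travel"]},
]
portfolio = [all_companies[0], all_companies[2]]

lift = industry_lift(portfolio, all_companies)
print(lift)  # {'Fintech': 1.0, 'Education': 2.0}
```

Here the investor holds Fintech companies at the same rate as the dataset overall, but Education companies at twice that rate, which is the kind of pattern a ranking could build on.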

Each time a founder makes use of the GlassDollar search, a ranking of the most relevant investors given the criteria is calculated and displayed in the search results, just like below. This is our solution for enabling founders to find suitable investors:

A GlassDollar search

Normalizing data from different sources

Since we draw on many different data sources to feed our program, we often encounter gaps or differing terminology across these datasets. Our solution had previously been to jump in and fix these issues manually, thinking we could ‘grind’ through it in one night and get it over with. This usually took the form of mapping input datasets to our formats or filling in gaps row by row. Unsurprisingly, it routinely took much longer than we had expected.

However, every time we improved the quality of our data, even by just a little, we brought hundreds of founders closer to finding the right investors faster, which fueled our motivation. The trade-off was labor-intensive data cleaning in exchange for better matches. Cleaning data became a core problem and a bottleneck to achieving our mission.

To illustrate, imagine you are looking for the most interesting investors in the Fintech space. We ran exactly this search in the screenshot above for the United States. To show that Right Side Capital is likely interested in such an investment opportunity right now, we need to first figure out their historical investment activity as a preparatory step. The same needs to be repeated for every investor in our database, roughly thirty thousand of them.

Evaluating an investor's investment activity means analyzing every company within its portfolio, then finding patterns that form the basis for the user’s search later. This quickly becomes an issue when we are analyzing deals involving more than 100k portfolio companies. As we mentioned earlier, whenever the data shows gaps, is poorly translated, or is in the wrong format, we need to step in and manually search Google for news articles and mentions to fill in the data and maintain quality.
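The mapping step described in this section (translating each source's terminology into one format and flagging the gaps) can be sketched roughly as follows. The alias table, the field names, and the sample rows are hypothetical and exist only for this example.

```python
# Hypothetical alias table: source label (lowercased) -> canonical industry.
INDUSTRY_ALIASES = {
    "fintech": "Fintech",
    "fin-tech": "Fintech",
    "financial technology": "Fintech",
    "edtech": "Education",
    "education": "Education",
}

def normalize_industry(raw):
    """Map a source label to its canonical name, or None if missing or unknown."""
    if raw is None:
        return None
    key = " ".join(raw.strip().lower().split())  # trim and collapse whitespace
    return INDUSTRY_ALIASES.get(key)

def normalize_rows(rows):
    """Split rows into normalized rows and rows that still need attention."""
    clean, gaps = [], []
    for row in rows:
        industry = normalize_industry(row.get("industry"))
        if industry is None:
            gaps.append(row)  # missing or unrecognized label
        else:
            clean.append({**row, "industry": industry})
    return clean, gaps

rows = [
    {"name": "A", "industry": " Financial  Technology "},
    {"name": "B", "industry": None},
    {"name": "C", "industry": "Biotech"},
]
clean, gaps = normalize_rows(rows)
print(clean)                      # [{'name': 'A', 'industry': 'Fintech'}]
print([r["name"] for r in gaps])  # ['B', 'C']
```

Everything that lands in `gaps` is the part that used to require manual work.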

A multi-label problem

Another problem arose when we tried to classify startups into industries. A given startup is often best described by more than one industry. For example, a startup might be revolutionizing the Education sector using Artificial Intelligence. We can’t simply assign it one exclusive tag; instead, we need multi-label tags, here both Education and Artificial Intelligence.

Nevertheless, there remains a degree of artificiality associated with any classification. We reduce the individuality of a company’s business model to an overarching industry with the purpose of segmenting reality. This necessarily produces noise: for example, Airbnb is in the travel industry, but it certainly differs from Booking.com or from actual hotels. No classification will be able to reflect that fully, but we can try to get the quality as high as possible.

Using MonkeyLearn for classifying companies

Enter MonkeyLearn, tackling industry classification gaps in our source data.

For most companies, we already have a text description of their activities in our source data which usually makes it clear what industry the business is in. If we do not have a description, it can generally be obtained with relative ease using the API of Clearbit or FullContact.

For source data where no industry is given, we can make use of that description and automatically classify the company into the corresponding industries using MonkeyLearn. The aim is essentially to replace our manual Google searches.

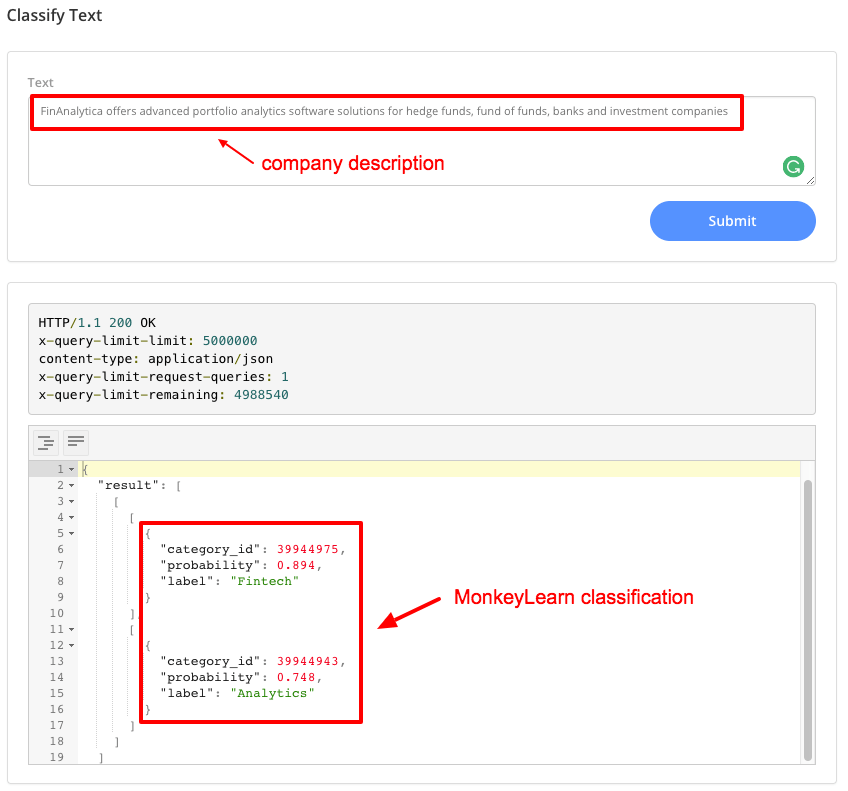

To get started, we built and trained a text classifier on MonkeyLearn with the tens of thousands of industry and company-description pairs we already had in our database. We were now ready to test it:

Testing the classifier with a company description

Results

Now we were solving the data gap problem by automatically classifying those ‘orphan’ descriptions with our classifier.

The results were impressive. Initially, 75% of cases were full-on hits with accurate predictions, saving us hours of manually going through the data top to bottom. Once we had trained the model with more text data, this percentage went up to around 90%.

Running this on our 100k+ companies, of which around 8k have missing data, is saving us days of work. Clearly, this already justifies making MonkeyLearn part of our workflow.
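The gap-filling step described in this section can be sketched as follows. This is a simplified illustration under stated assumptions: `keyword_stub` is a hypothetical stand-in for a trained classifier (it does not reflect how MonkeyLearn works internally or what its API looks like), and the field names, confidence values, and threshold are invented for the example.

```python
from typing import Callable, Dict, List, Tuple

# A classifier takes a description and returns (industry, confidence) pairs.
Classifier = Callable[[str], List[Tuple[str, float]]]

def keyword_stub(description: str) -> List[Tuple[str, float]]:
    """Hypothetical stand-in for a trained model, used only for this demo."""
    keywords = {
        "payments": ("Fintech", 0.9),
        "tutoring": ("Education", 0.8),
        "machine learning": ("Artificial Intelligence", 0.7),
    }
    text = description.lower()
    return [label for word, label in keywords.items() if word in text]

def fill_industry_gaps(
    companies: List[Dict], classify: Classifier, min_confidence: float = 0.6
) -> List[Dict]:
    """Assign industries to companies that have a description but no industry."""
    filled = []
    for company in companies:
        if company.get("industries") or not company.get("description"):
            filled.append(company)  # already tagged, or nothing to classify
            continue
        predicted = [
            industry
            for industry, confidence in classify(company["description"])
            if confidence >= min_confidence
        ]
        filled.append({**company, "industries": predicted})
    return filled

companies = [
    {"name": "PayCo", "description": "Mobile payments for small shops", "industries": []},
    {"name": "TutorAI", "description": "Online tutoring powered by machine learning", "industries": []},
    {"name": "Known", "description": "Holiday rentals", "industries": ["Travel"]},
]
result = fill_industry_gaps(companies, keyword_stub)
print([c["industries"] for c in result])
# [['Fintech'], ['Education', 'Artificial Intelligence'], ['Travel']]
```

Passing the classifier in as a function keeps the gap-filling logic independent of whichever service produces the predictions, and returning a list of industries per company keeps the multi-label case intact.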

And then...

It did not initially dawn on us that we could take this a step further, but when it did, we certainly experienced an ‘aha’ moment. After using MonkeyLearn to help automate the analysis of new data, we realized that we could also analyze existing data to improve its quality. That way we could improve the classification accuracy of everything we had already analyzed, and it meant no more long nights manually correcting things. The existing data might have been “good enough” already, since founders or teams themselves often generated it, but it didn’t have a consistent quality standard. Besides, we knew that slight improvements in accuracy meant substantial benefits for our users, so this became a game changer.

MonkeyLearn was able to process the existing descriptions and suggest other industries that a startup might belong to. For example, instead of Airbnb being classified solely under a “Travel” tag, it may fall under “Hospitality” as well. This essentially supplemented our current data, and while it may still not be a perfect representation of a company’s strategy or a founder’s vision, it increases the accuracy with which we can classify investors' portfolio companies.
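One way to picture this supplementing step is a merge that keeps the existing tags and adds only confident suggestions. The sketch below is illustrative: the confidence values and the threshold are made up, and the function is hypothetical rather than part of any library.

```python
def merge_suggestions(existing, suggestions, min_confidence=0.7):
    """Add confident suggested industries to the existing tags.

    Existing tags are never removed, order is preserved, and duplicates
    are skipped.
    """
    merged = list(existing)
    for industry, confidence in suggestions:
        if confidence >= min_confidence and industry not in merged:
            merged.append(industry)
    return merged

# Hypothetical suggestions for a company currently tagged only "Travel".
suggestions = [("Travel", 0.95), ("Hospitality", 0.8), ("Real Estate", 0.3)]
tags = merge_suggestions(["Travel"], suggestions)
print(tags)  # ['Travel', 'Hospitality']
```

Keeping the original tags untouched means the existing, founder-generated data is only ever extended, never overwritten, by the suggestions.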

Once done for our entire dataset, the MonkeyLearn model we created resulted in dramatically better investor matches for founders. Do not believe us? Try it yourself: https://www.glassdollar.com

Thank you Dominik Vacicar for recommending MonkeyLearn to us.

Jan Hoekman

January 11th, 2018