Go-to Guide for Text Classification with Machine Learning

Text classification is a machine learning technique that automatically assigns tags or categories to text. Using natural language processing (NLP), text classifiers can analyze and sort text by sentiment, topic, and customer intent – faster and more accurately than humans.

With data pouring in from various channels, including emails, chats, web pages, social media, online reviews, support tickets, and survey responses, it’s hard for humans to keep up.

On Facebook Messenger alone, a mind-blowing 20 billion messages are exchanged between businesses and users every month. Can you imagine having to process even a fraction of that manually? Not only would it take forever, it would also be very expensive.

All channels of communication are incredibly rich sources of information for businesses, but it’s challenging for them to process all the data they receive, let alone extract valuable insights that could help them make informed decisions. Because of this, companies are turning to automated solutions like text classification.

Powered by machine learning, text classification enables you to classify text in a reliable, scalable, accurate, and cost-effective way.

How Does Text Classification Work?

To begin training a classifier with machine learning, you need to transform text into something a machine can understand. This is often done with a bag-of-words model, where each text is represented as a vector that records how often each word from a predefined list of words appears in it.

Once data is vectorized, the text classifier model is fed training data that consists of pairs: a feature vector for each text sample, and the tag that belongs to it. With enough training samples, the model will be able to make accurate predictions.
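
To make the vectorization step concrete, here is a minimal sketch of a bag-of-words transform in plain Python. The helper names (`build_vocabulary`, `bag_of_words`) and the two sample texts are made up for illustration, and splitting on whitespace is a simplifying assumption; real tokenizers handle punctuation and much more.

```python
from collections import Counter

def build_vocabulary(texts):
    """Collect the sorted list of distinct lowercase words across all texts."""
    return sorted({token for text in texts for token in text.lower().split()})

def bag_of_words(text, vocabulary):
    """Return one count per vocabulary word, in vocabulary order."""
    counts = Counter(text.lower().split())
    return [counts[word] for word in vocabulary]

# Hypothetical sample texts, not taken from any real dataset.
texts = ["great product great price", "terrible product"]
vocabulary = build_vocabulary(texts)
vectors = [bag_of_words(text, vocabulary) for text in texts]

print(vocabulary)
print(vectors)
```

Each text becomes a list of numbers with the same length as the vocabulary, which is the kind of input a classifier can be trained on.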

Let’s explore some of the most widely-used algorithms for text classification:

Naive Bayes

The Naive Bayes algorithm is a probabilistic classifier that makes use of Bayes' Theorem – a rule that uses probability to predict the tag of a text based on prior knowledge of conditions that might be related. It calculates the probability of each tag for a given text, and then predicts the tag with the highest probability.
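
As a rough illustration of "calculate the probability of each tag, then pick the highest", the sketch below implements a small multinomial Naive Bayes classifier in plain Python. The function names and training samples are hypothetical, and two choices are assumptions rather than anything stated above: add-one (Laplace) smoothing, so unseen words don't zero out a tag, and summing log probabilities instead of multiplying raw ones.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(samples):
    """samples: list of (text, tag) pairs. Returns the counts needed to predict."""
    tag_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, tag in samples:
        tokens = text.lower().split()
        tag_counts[tag] += 1
        word_counts[tag].update(tokens)
        vocabulary.update(tokens)
    return tag_counts, word_counts, vocabulary

def predict(text, model):
    """Return the tag with the highest (log) probability for the text."""
    tag_counts, word_counts, vocabulary = model
    total_samples = sum(tag_counts.values())
    scores = {}
    for tag in tag_counts:
        # Prior: how common the tag is in the training data.
        score = math.log(tag_counts[tag] / total_samples)
        total_words = sum(word_counts[tag].values())
        for token in text.lower().split():
            # Likelihood of the word given the tag, with add-one smoothing (an assumption).
            likelihood = (word_counts[tag][token] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        scores[tag] = score
    return max(scores, key=scores.get)

# Hypothetical training samples for illustration only.
samples = [
    ("great service and friendly staff", "Positive"),
    ("love this great product", "Positive"),
    ("terrible service and rude staff", "Negative"),
    ("hate this awful product", "Negative"),
]
model = train_naive_bayes(samples)
print(predict("great staff", model))
print(predict("awful rude", model))
```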

You can also improve Naive Bayes’ performance by applying various techniques:

- Removing stopwords: Filtering out common words that don’t add value, for example “such as”, “able to”, “either”, “else”, and “ever”.

- Lemmatizing words: Grouping different inflections of the same word. For example, draft, drafted, drafts, drafting, etc.

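- N-grams: An n-gram is a sequence of ‘n’ consecutive words within a text. Using n-grams as features lets the classifier take into account how likely a sequence of words is to appear, rather than looking at single words only.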

- TF-IDF: Short for term frequency-inverse document frequency, TF-IDF is a metric that quantifies how important a word is to a document in a document set. A word’s score increases proportionally to the number of times it appears in a document, but is offset by the number of documents in the set that contain it.
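
Three of the techniques above can be sketched in a few lines of plain Python. Everything named here is illustrative: the `STOPWORDS` set is a tiny made-up list, the documents are hypothetical, and the TF-IDF formula used (term frequency times the log of total documents over documents containing the term) is only one of several common variants. Lemmatization is left out because it needs a dictionary or a dedicated NLP library.

```python
import math
from collections import Counter

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "is", "a", "and", "to"}

def tokenize(text):
    """Lowercase, split on whitespace, and drop stopwords."""
    return [token for token in text.lower().split() if token not in STOPWORDS]

def ngrams(tokens, n):
    """Return every run of n consecutive tokens, joined with a space."""
    return [" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def tf_idf(documents):
    """Return one {term: score} dict per document."""
    tokenized = [tokenize(document) for document in documents]
    n_docs = len(tokenized)
    # Number of documents each term appears in.
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            term: (count / len(tokens)) * math.log(n_docs / doc_freq[term])
            for term, count in counts.items()
        })
    return scores

# Hypothetical documents for illustration only.
documents = [
    "the delivery is fast",
    "the delivery is late",
    "fast and friendly support",
]
bigrams = ngrams(tokenize(documents[2]), 2)
scores = tf_idf(documents)
print(bigrams)
print(scores[1])
```

In the second document, "late" scores higher than "delivery" because "delivery" also appears in another document, which is the offsetting effect described above.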

Support Vector Machines

Support Vector Machines (SVM) is a classification algorithm that performs at its best when handling a limited amount of data. It determines the best boundary between vectors that belong to a given group or category and vectors that don’t belong to it.

For example, let’s say you have two tags, expensive and cheap, and the data has two features, x and y. For each pair of coordinates (x, y), the classifier needs to decide whether it is expensive or cheap. To do this, SVM draws a dividing line between the data points, known as the decision boundary, and classifies everything that falls on one side as expensive and everything that falls on the other side as cheap.

The decision boundary divides a space into two subspaces, one for vectors that belong to a group and another for vectors that don’t belong to that group. Here, vectors represent training text and a group represents the tag you use to tag your texts. A perk of using SVM is that it doesn’t require a lot of training data to produce accurate results, although it does require more computational resources than Naive Bayes to yield more accurate results.
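
The expensive/cheap example can be sketched as a small linear SVM in plain Python. This is an illustration under stated assumptions, not how any particular library does it: the boundary is fitted by sub-gradient descent on the hinge loss with a small L2 penalty, and the data points, learning rate, regularization strength, and epoch count are all made-up values.

```python
def train_linear_svm(points, labels, learning_rate=0.01, regularization=0.01, epochs=1000):
    """Fit weights and bias by sub-gradient descent on the hinge loss.

    labels must be +1 (expensive) or -1 (cheap).
    """
    weights = [0.0] * len(points[0])
    bias = 0.0
    for _ in range(epochs):
        for point, label in zip(points, labels):
            score = sum(w * x for w, x in zip(weights, point)) + bias
            if label * score < 1:
                # Inside the margin or misclassified: move the boundary away from the point.
                weights = [w + learning_rate * (label * x - regularization * w)
                           for w, x in zip(weights, point)]
                bias += learning_rate * label
            else:
                # Safely classified: only apply the regularization shrinkage.
                weights = [w * (1 - learning_rate * regularization) for w in weights]
    return weights, bias

def predict_tag(point, weights, bias):
    """Classify a point by which side of the decision boundary it falls on."""
    score = sum(w * x for w, x in zip(weights, point)) + bias
    return "expensive" if score >= 0 else "cheap"

# Hypothetical (x, y) feature pairs: the first three are cheap, the last three expensive.
points = [(1, 1), (2, 1), (1, 2), (5, 5), (6, 5), (5, 6)]
labels = [-1, -1, -1, 1, 1, 1]
weights, bias = train_linear_svm(points, labels)

print(predict_tag((1, 1), weights, bias))
print(predict_tag((6, 6), weights, bias))
```

The decision boundary is the set of points where the score is exactly zero. In practice you would likely reach for an existing SVM implementation rather than writing the training loop yourself.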

Deep Learning

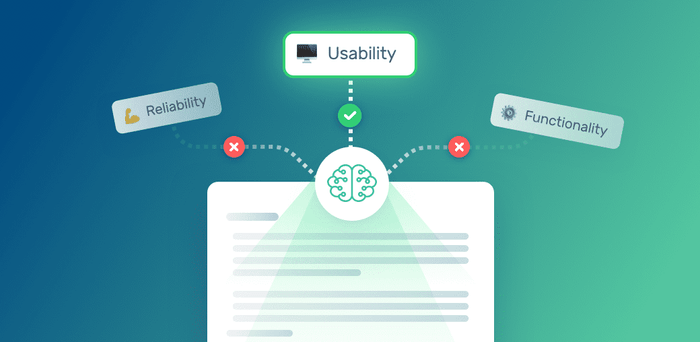

Deep learning comprises algorithms and techniques that are loosely modeled on the way the human brain works. For text classification, two deep learning models are widely used: Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN).

CNN is a type of neural network that consists of an input layer, an output layer, and multiple hidden layers that are made of convolutional layers. Convolutional layers are the major building blocks used in CNN, while a convolution is the linear operation that automatically applies filters to inputs – resulting in activations. These complex layers are key ingredients in a convolutional neural network as they assign importance to various inputs and differentiate one from the other.

Within the context of text classification, a CNN applies filters to words or n-grams to extract high-level features.
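
To show what "applying a filter" means, here is a toy one-dimensional convolution in plain Python. It is a deliberately simplified sketch: each word is represented by a single made-up number instead of a learned embedding vector, the filter weights are fixed by hand rather than learned, and the ReLU and max-pooling steps are common companions of convolution that are assumed here.

```python
def conv1d(sequence, kernel):
    """Slide the kernel over the sequence and return one activation per window."""
    width = len(kernel)
    return [
        sum(k * x for k, x in zip(kernel, sequence[i:i + width]))
        for i in range(len(sequence) - width + 1)
    ]

def relu(values):
    """Keep positive activations and zero out the rest."""
    return [max(0.0, value) for value in values]

# Hypothetical one-number-per-word scores for a five-word text.
word_scores = [0.1, 0.9, 0.8, -0.2, 0.0]
# Hand-picked filter that responds to two adjacent high-scoring words (a bigram).
bigram_filter = [1.0, 1.0]

activations = relu(conv1d(word_scores, bigram_filter))
feature = max(activations)  # max pooling: keep the strongest response

print(activations)
print(feature)
```

A real CNN learns many such filters of different widths, and the pooled responses become the high-level features passed to the next layer.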

RNNs are specialized neural networks that process sequential information. For each element of an input sequence, the RNN performs calculations that are conditioned on the previously computed outputs. The key advantage of using an RNN is its ability to memorize the results of previous computations and use them in current ones.
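
The idea of conditioning each step on earlier results can be sketched with a single recurrent unit in plain Python. This is a toy under stated assumptions: inputs and the hidden state are single numbers rather than vectors, the weights are hand-picked instead of learned, and `rnn_forward` is a hypothetical helper name.

```python
import math

def rnn_forward(inputs, w_input=1.0, w_hidden=0.5, bias=0.0):
    """Run one recurrent unit over the inputs and return the hidden state after each step."""
    hidden = 0.0
    states = []
    for x in inputs:
        # The new state depends on the current input and on the previous state.
        hidden = math.tanh(w_input * x + w_hidden * hidden + bias)
        states.append(hidden)
    return states

# The same two values in a different order produce different final states.
states_a = rnn_forward([1.0, 0.0])
states_b = rnn_forward([0.0, 1.0])

print(states_a)
print(states_b)
```

Because the hidden state carries information forward, the order of the inputs matters, which is what makes this family of models suited to sequences such as sentences.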

It’s important to remember that deep learning algorithms typically require far more tagged examples than traditional algorithms, often millions, as they work best when fed more data.

Metrics and Evaluation

One of the most common ways to evaluate the performance of a text classifier is cross-validation. In this method, the training dataset is randomly divided into equal-sized subsets. Then, for each subset, the text classifier is trained on the remaining subsets and used to make predictions for the subset that was held out. These predictions are compared with the human-tagged data to see where the classifier produced false positives or false negatives.
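
The splitting step of cross-validation can be sketched as follows. The generator name `k_fold_splits`, the fixed random seed, and the stand-in samples are assumptions for illustration; the classifier training and prediction that would happen inside the loop are left out.

```python
import random

def k_fold_splits(samples, k, seed=0):
    """Yield (train, test) pairs, holding out a different subset each time."""
    shuffled = samples[:]
    random.Random(seed).shuffle(shuffled)
    # Deal the shuffled samples into k subsets of (nearly) equal size.
    folds = [shuffled[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [sample for j, fold in enumerate(folds) if j != i for sample in fold]
        yield train, test

# Ten stand-in samples; in practice each would be a (text, tag) pair.
samples = list(range(10))
splits = list(k_fold_splits(samples, k=5))

for train, test in splits:
    # A classifier would be trained on `train` and evaluated on `test` here.
    print(len(train), len(test))
```

Every sample ends up in the held-out subset exactly once, so each prediction used for evaluation comes from a model that never saw that sample during training.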

These results lead to valuable metrics that demonstrate how effective a classifier is:

- Accuracy: percentage of texts that were predicted with the correct tag.

- Precision: percentage of texts the classifier got right out of the total number of examples it predicted for a specific tag.

- Recall: percentage of examples the classifier predicted for a specific tag out of the total number of examples it should have predicted for that tag.

- F1 Score: harmonic mean between precision and recall.
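
The four metrics above can be computed directly from a list of true tags and a list of predicted tags. The sketch below uses a hypothetical `evaluate` helper and made-up tags; returning 0.0 when a denominator is zero is a convention assumed here, not something defined above.

```python
def evaluate(true_tags, predicted_tags, tag):
    """Return accuracy overall, plus precision, recall, and F1 for one tag."""
    pairs = list(zip(true_tags, predicted_tags))
    true_positives = sum(1 for t, p in pairs if t == tag and p == tag)
    false_positives = sum(1 for t, p in pairs if t != tag and p == tag)
    false_negatives = sum(1 for t, p in pairs if t == tag and p != tag)

    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    predicted_total = true_positives + false_positives
    actual_total = true_positives + false_negatives
    precision = true_positives / predicted_total if predicted_total else 0.0
    recall = true_positives / actual_total if actual_total else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical human-tagged data and classifier predictions.
true_tags = ["Positive", "Positive", "Positive", "Negative", "Negative"]
predicted_tags = ["Positive", "Positive", "Negative", "Positive", "Negative"]
metrics = evaluate(true_tags, predicted_tags, tag="Positive")

print(metrics)
```

Here the classifier predicted Positive three times and was right twice, and it found two of the three texts that really were Positive, so precision and recall for that tag are both two thirds.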

Supervised Vs Unsupervised Machine Learning

There are three widely adopted methods within machine learning: supervised learning, unsupervised learning, and hybrid learning.

Supervised Machine Learning

Supervised machine learning algorithms are trained with labeled datasets that are created by humans. Raw datasets are transformed into numerical vectors that are the input variables (X) which are easily understood by machine learning models and the output variables (Y). The supervised algorithm learns the mapping function from the input to the output. When you have new data, this technique should be able to predict the variables for that data.

Text classification uses supervised machine learning and has various applications, including ticket routing. In this example, incoming messages would be automatically tagged by topic, language, sentiment, intent, and more, and routed to the right customer support team based on their expertise.

Another great example is email filtering. Emails are sorted based on content, which is the result of algorithms learning to detect content that is considered spam, social, or inbox-worthy!

Two of the most common supervised algorithms are Naive Bayes and support vector machines (SVM), both of which are covered earlier in this guide.

Unsupervised Machine Learning

In contrast, unsupervised machine learning algorithms learn independently from raw datasets. In other words, they learn from the distributions of words and syntactic patterns in vast amounts of data, which are transformed into a huge vector.

In a nutshell, unsupervised machine learning is not given any labeled outputs or a “correct” outcome. It automatically understands the underlying structure of data and groups words with similar attributes. One of the most popular techniques of unsupervised learning is clustering, which segments topics in text.

Semi-Supervised Machine Learning

Finally, semi-supervised machine learning, otherwise known as hybrid machine learning, is a combination of supervised and unsupervised machine learning methods, trained with both labeled data (typically a small amount) and unlabeled data (typically a large amount).

Semi-supervised machine learning is helpful in scenarios where businesses have huge amounts of data to label. For example, it can be used for webpage classification, automatically categorizing webpages based on labeled input and on the similarities between webpages, and in this way identifying and labeling ‘unstructured’ webpages.

How to Build A Text Classifier with Machine Learning

Now, you may be wondering how to get started with text classification. Well, MonkeyLearn makes it super easy to classify text data with machine learning.

First you’ll need to sign up to MonkeyLearn for free, then you’ll have access to our pre-trained classifiers.

However, if you want even more accurate insights from your data, we recommend you build a custom-made classifier that is tailored to your needs and trained with your unique data.

Follow these easy steps to build your custom text classifier:

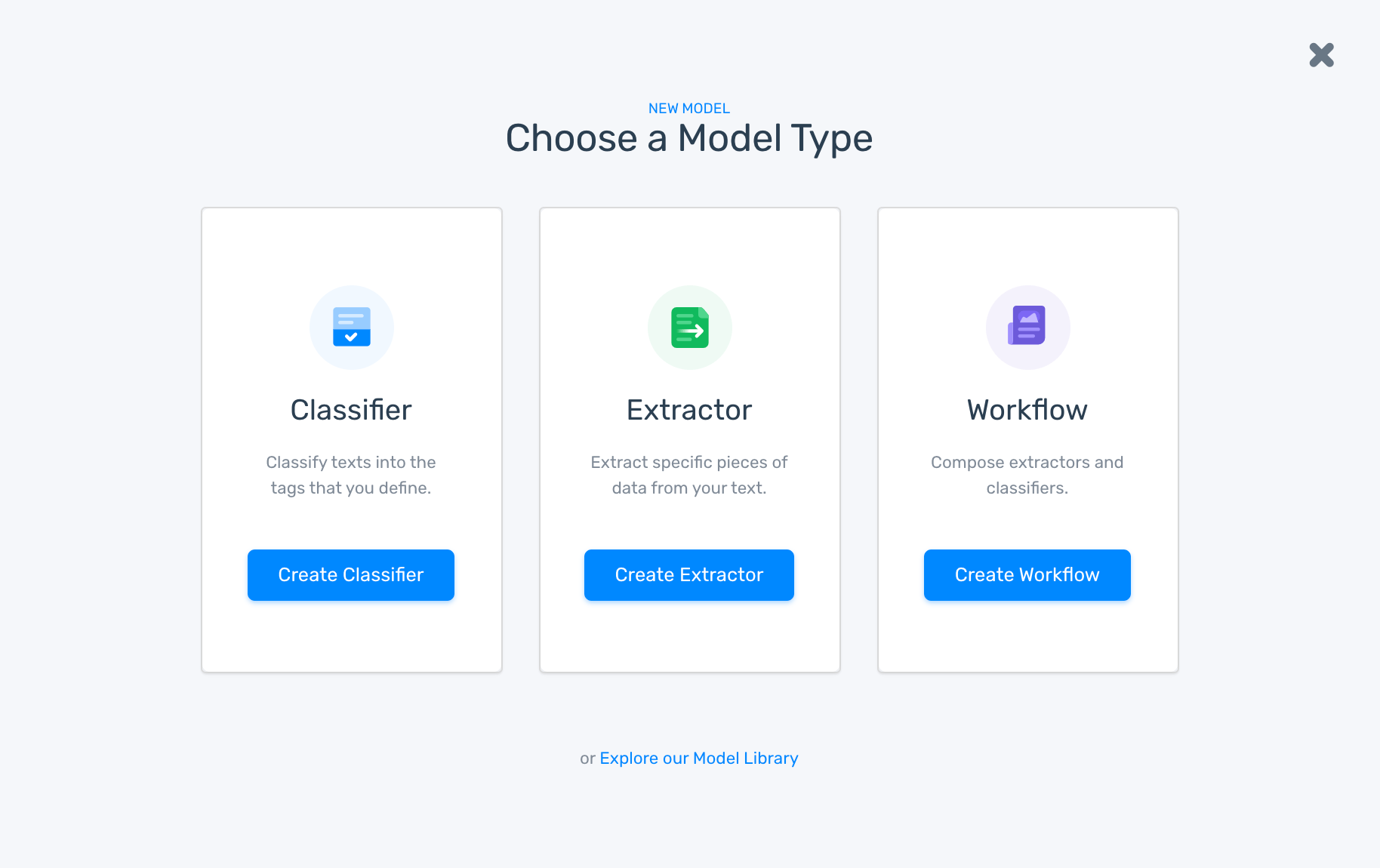

1. Choose A Model Type

Go to MonkeyLearn’s dashboard and click on ‘create model’. This action will prompt you to choose a model type. Select ‘classifier’:

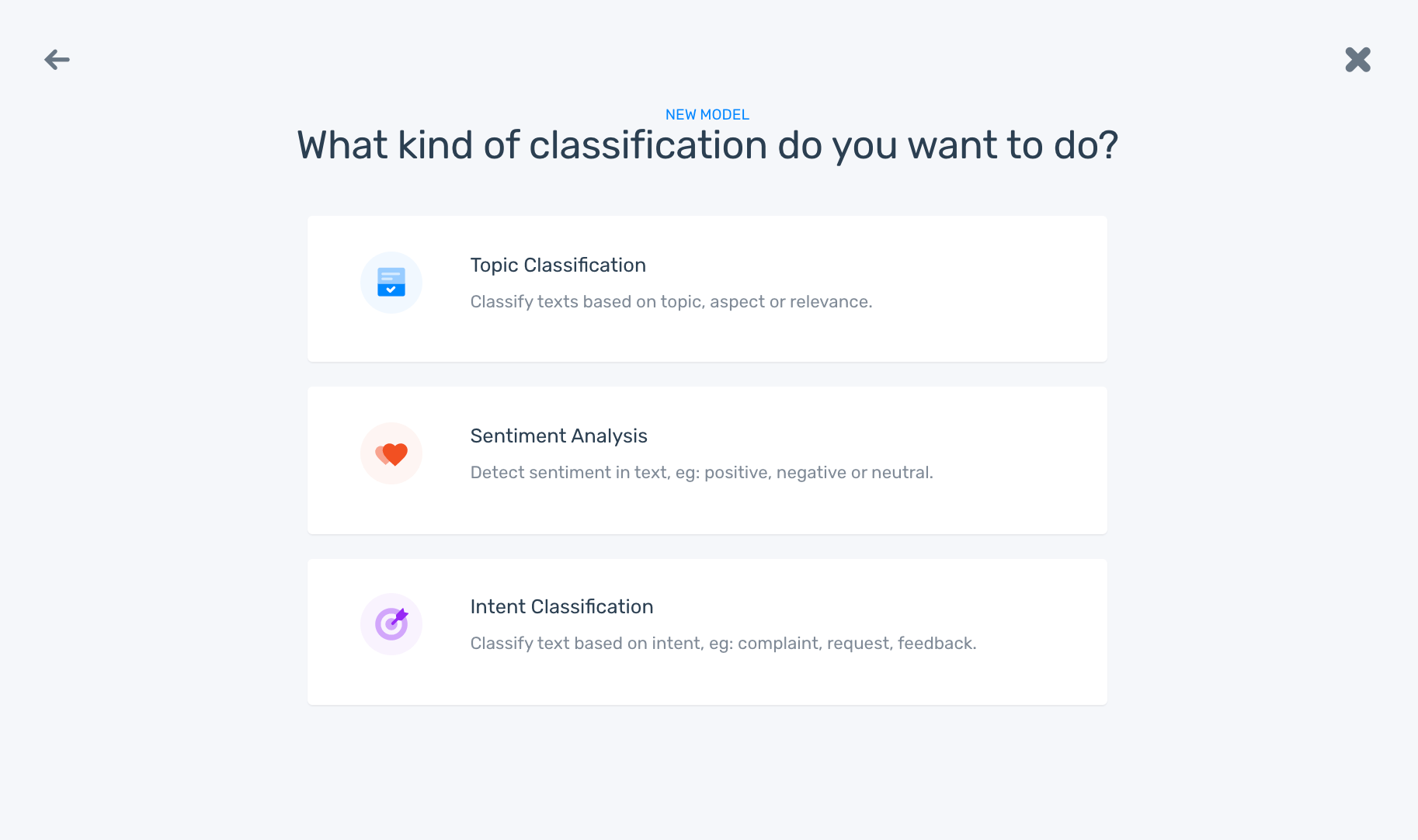

2. Select The Classification Type

Now, you’ll see different classification options. Choose the one you need: topic classification, sentiment analysis, or intent classification. For this example, we’re going to use sentiment analysis.

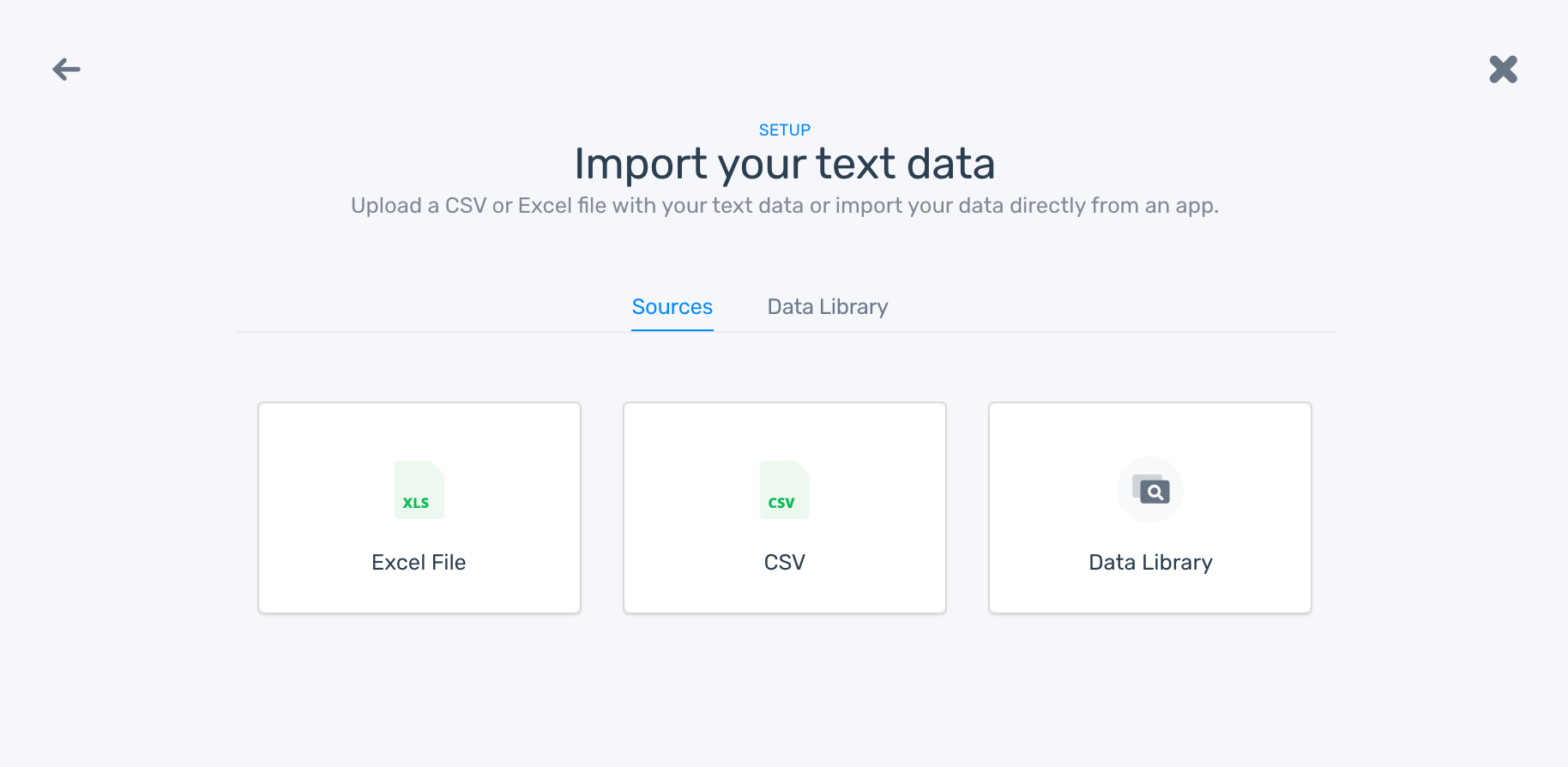

3. Upload Your Data

Upload the data you want to use to train your model. MonkeyLearn offers different sources from which you can upload data. You can upload data in either an Excel or a CSV file:

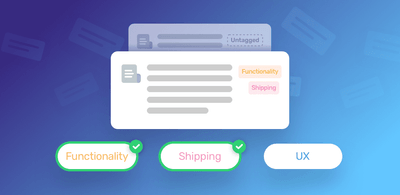

4. Train Your Model

We chose Sentiment Analysis, so we’ll need to train the model by assigning each example the expected tag (Positive, Negative, or Neutral). Once you’ve tagged a few samples manually, the model will make predictions on its own:

5. Test Your Model

Make sure the model is up to scratch by testing it! Just type your own review into the text box and see if it classifies your text correctly:

You can always continue to tag new data to provide more examples for your model. Remember, you’ll need to classify at least 100 samples of text for each tag so it can make more accurate predictions.

6. Analyze Your Data

Now you’re ready to analyze your data. Just choose from the following to import your data:

- Process data in a batch by importing an Excel or CSV file

- Use one of the available integrations

- Integrate MonkeyLearn's API to analyze data programmatically. You can call a model API with Python, Ruby, PHP, Javascript, and Java.

By following these easy steps, you are well on your way to classifying your text data in next to no time. From its user-friendly interface to the many app integrations it offers, MonkeyLearn provides a centralized location where you can take care of all your text classification needs by training your own text classifier model.

Wrapping Up

At MonkeyLearn, our text classification models use a combination of machine learning algorithms – supervised, unsupervised, and hybrid – each with their own unique natural language processing techniques, to deliver the best possible results.

Our easy-to-use interface means you can start building your own customized classification model right away, and train it until it’s able to deliver quality metrics on its own.

Just sign up to MonkeyLearn for free or request a demo, and see how easy it is to perform text classification with the right tool by your side.

Inés Roldós

March 2nd, 2020