Training a sentiment analysis classifier using a web scraping visual tool

Contributed by Quentin Simms from ParseHub.

In a previous blog post, you learned how to make quick work of training and deploying your own custom sentiment analysis model. This kind of project once would have taken a team of machine learning experts a long time to finish. Now it can be done in a few minutes, using nothing but MonkeyLearn to create the machine learning model and a web scraping tool like Scrapy to collect training data from the web.

There are other ways to get the data you need to train your MonkeyLearn classifier. Experienced data miners know when to use Scrapy and when to use one of the visual web scraping tools in their arsenal, depending on the website. Since one of my favorite things about MonkeyLearn is that it requires no coding, it seems fitting to show how to get text from the web using a web scraping tool that doesn't require coding either. Follow along with this walkthrough and decide which method you prefer.

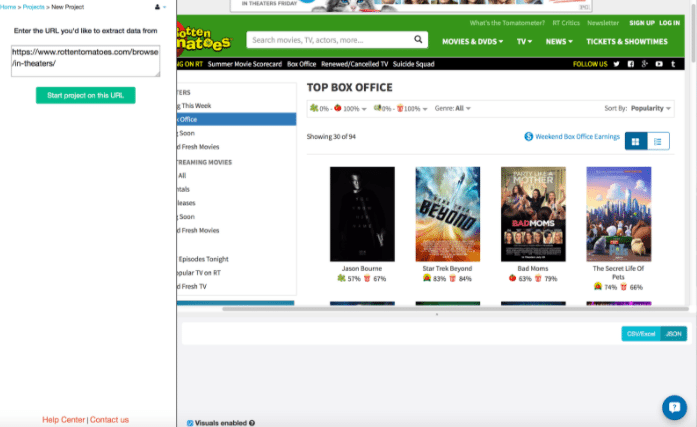

In this tutorial, you will scrape reviews of the movies in Rotten Tomatoes' top box office list using the visual tool ParseHub, and then use this data to train a sentiment analysis model for movie reviews.

Getting Started

Rotten Tomatoes aggregates movie and TV show reviews from critics across the web. It's perfect for training a MonkeyLearn classifier, because each review is labeled as "Fresh" or "Rotten" depending on the critic's opinion of the film.

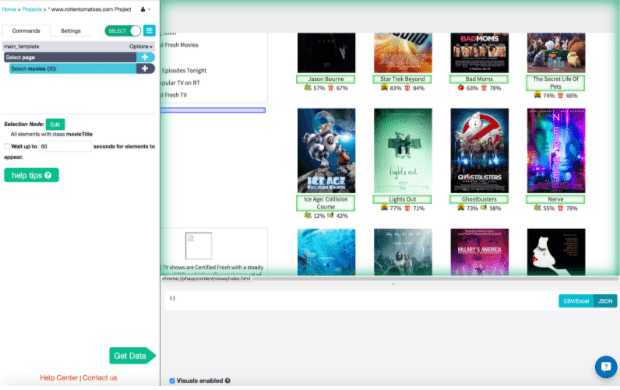

1. Creating a new ParseHub project

As a first step, download and open ParseHub, create a ParseHub account, and start a new project with this URL: https://www.rottentomatoes.com/browse/in-theaters/

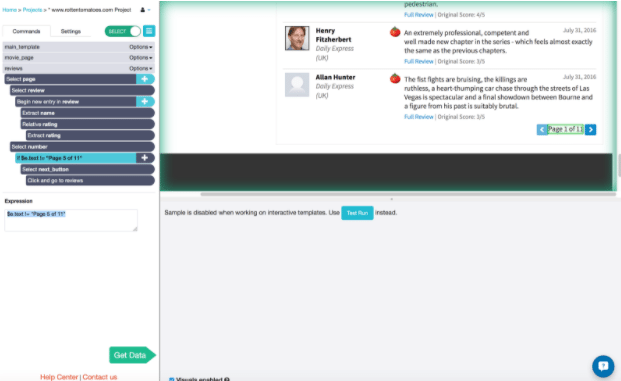

Adding the URL to the project.

This project acts as a set of instructions that tells ParseHub what data to extract from Rotten Tomatoes. Commands are added in the left-hand sidebar. Two commands are added as soon as the project is created, so you can start selecting elements right away.

With the new project open, select the 30 titles on the page by clicking on two of them.

Change the name of this selection from “Select selection1” to “Select movies” by clicking on the command.

ParseHub will add three new commands that extract the text and URL of each selected link into columns in your spreadsheet. They are added automatically because this information is useful for most projects. For this project, however, you won't need the name and URL of each movie, only the reviews, so click the "x" button to the right of the "Begin new entry in movies" command to delete these three commands. The picture below shows how this looks.

Commands for this new project.

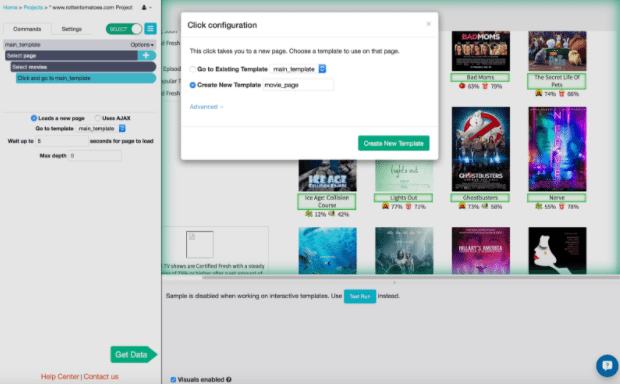

Next, tell ParseHub to visit the page of each movie you've selected. Click on the "plus" button next to the "Select movies" command. This opens the command menu, where you can choose the Click command.

A pop-up window will open. Tell ParseHub to Create New Template and name it movie_page. Think of a template as a list of instructions that ParseHub will execute on a page. To create this template and enter its instructions, you will be taken to the first movie on the page. Although you build the template on that one page, you've told ParseHub to click on every movie, so when the project runs it will execute the template on each movie's page.

Creating a new template.

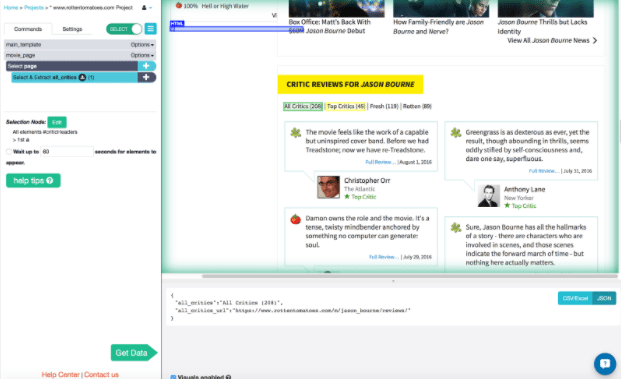

Scroll down until you find the reviews. Click on the tab called "All Critics" to select it. Rename the selection all_critics by clicking on the command.

Creating the 'All Critics' selection.

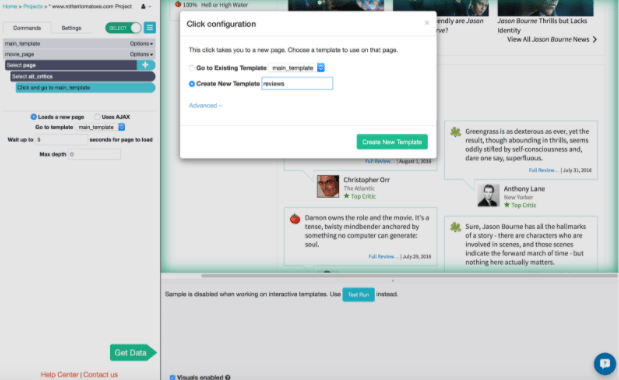

Next to the “Select & Extract all_critics” command, click on the “plus” button and add a Click command. In the pop-up window, once again choose Create New Template and call it reviews.

Creating the new template for the 'Click' command.

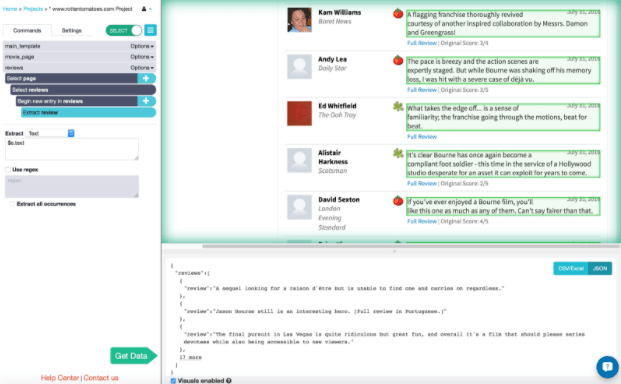

Select the excerpt of every review on the page by clicking on the first two. Name the selection review.

Selecting the reviews from the page.

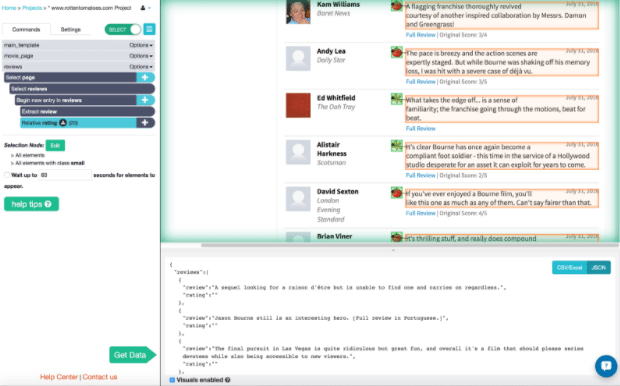

You have the text, but you also want the associated 'freshness' rating so that MonkeyLearn can learn to tell fresh and rotten reviews apart. Click on the “plus” icon within the “Begin new entry” command to add a Relative Select command. Relative Select ensures that each rating is extracted into the same row as its review in your spreadsheet.

Click on one of the "Fresh" reviews and then click on the tomato icon beside it. Notice that all the other tomatoes will be selected.

Select the "Rotten" reviews the same way: click on a review, then click on the splatter icon next to it. Rename the Relative selection rating.

Creating the relative selection.

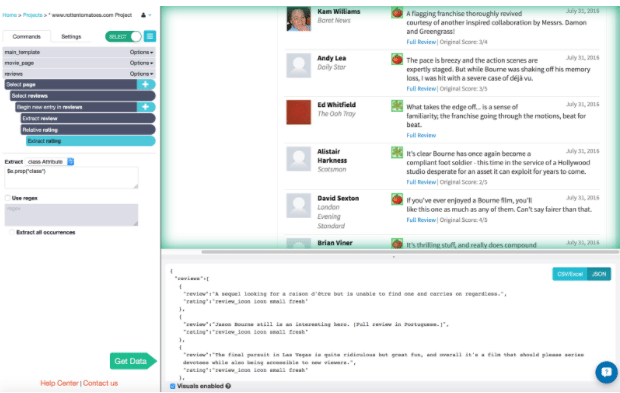

Add an Extract command by clicking on the “plus” button next to “Relative rating” and opening the Advanced menu.

In the drop-down menu in the left-hand toolbar, choose "class Attribute". Text will now be extracted; take a look at the sample results below.

Adding the extract command.

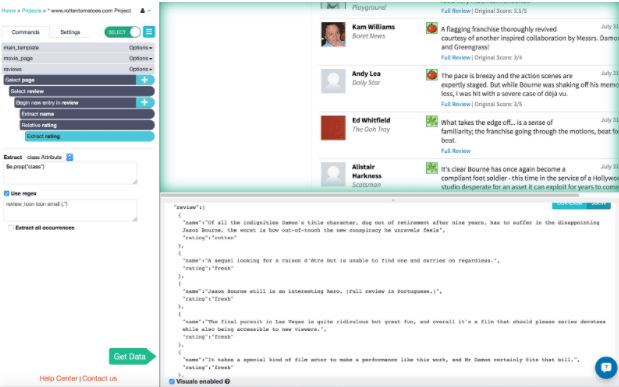

Use a regular expression to get rid of the words before "fresh" or "rotten". If you are unfamiliar with regular expressions, don't worry: just copy and paste this into the regex text box:

review_icon icon small (.*)

Using regex to get rid of words before "fresh" or "rotten".
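To see what the expression does, here is the same pattern applied with Python's `re` module. This is a sketch assuming the extracted class attribute looks like `review_icon icon small fresh`, which is what the pattern implies; `extract_rating` is a hypothetical helper, not part of ParseHub. The text captured inside the parentheses is the part that remains.

```python
import re
from typing import Optional

# Assumption: the class attribute has the form "review_icon icon small <rating>".
RATING_PATTERN = re.compile(r"review_icon icon small (.*)")


def extract_rating(class_attribute: str) -> Optional[str]:
    """Return the text captured by (.*), e.g. "fresh" or "rotten"."""
    match = RATING_PATTERN.search(class_attribute)
    # No match means the attribute did not have the expected prefix.
    return match.group(1) if match else None


print(extract_rating("review_icon icon small fresh"))   # fresh
print(extract_rating("review_icon icon small rotten"))  # rotten
```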

Now you have all of the reviews on the first page. To get enough text to train your classifier, you will extract the first 5 pages of reviews for each movie. ParseHub handles pagination by traveling to each new page: it clicks the "next" button and runs the reviews template again. Other visual web scraping tools may handle pagination differently.

Add a Select command using the “plus” button next to “Select page” at the top of your template. Click on the text between the navigation arrows ("Page 1 of 11") and rename the selection number.

Click on the newly added “plus” button on 'Select & Extract number' and open the Advanced menu. Choose the Conditional command. Developers can think of this command as an “if” statement.

I entered the expression:

$e.text != "Page 5 of 11"

This means the commands that follow are skipped once the text found is "Page 5 of 11". From the newly added “plus” button, I then added a Select command and selected the next page button. From the “plus” button next to that new selection, choose a Click command and tell ParseHub to Go to Existing Template called reviews.

Telling ParseHub to go to existing template called reviews.
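One caveat: the expression compares the whole string, so it only stops pagination for movies with exactly 11 pages of reviews. A movie with 20 pages shows "Page 5 of 20" on its fifth page, which is not equal to "Page 5 of 11", so ParseHub would keep clicking. The Python sketch below (not ParseHub's expression syntax) shows the intended rule: compare only the page numbers and stop at page 5 or at the last page, whichever comes first. `should_click_next` and `max_pages` are hypothetical names used for illustration.

```python
import re


def should_click_next(page_text: str, max_pages: int = 5) -> bool:
    """Hypothetical helper: decide whether to follow the next page button.

    Compares only the page numbers in text like "Page 3 of 11", so it
    stops at page `max_pages` or at the last page, whichever comes first.
    """
    match = re.search(r"Page (\d+) of (\d+)", page_text)
    if match is None:
        return False  # unexpected text: stop rather than paginate blindly
    current, total = int(match.group(1)), int(match.group(2))
    return current < min(max_pages, total)


for text in ["Page 4 of 11", "Page 5 of 11", "Page 5 of 20", "Page 2 of 2"]:
    print(text, should_click_next(text))
```

Applied on each page, the helper returns True on pages 1 to 4 and False from page 5 on, so at most five pages per movie are scraped regardless of how many pages of reviews a movie has.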

This completes building your project! You can now run the project to get your data as a spreadsheet.

2. Running the ParseHub project

Now that the project is complete, you have two options to get your data:

- If you have no coding experience, you can download a spreadsheet of the data from the ParseHub app to your computer, and then upload it through the MonkeyLearn website.

- If you do have coding experience, you can connect ParseHub to MonkeyLearn via their APIs.

To download a spreadsheet of the scraped data to your computer, click the big green 'Get Data' button, press 'Run', and then click 'Save and Run'. Your project will scrape 200 pages and collect just under 3,000 reviews, which takes between 10 and 30 minutes depending on your ParseHub plan. The run continues even if you leave the project or close the application. Once it is complete, click 'Download CSV'.

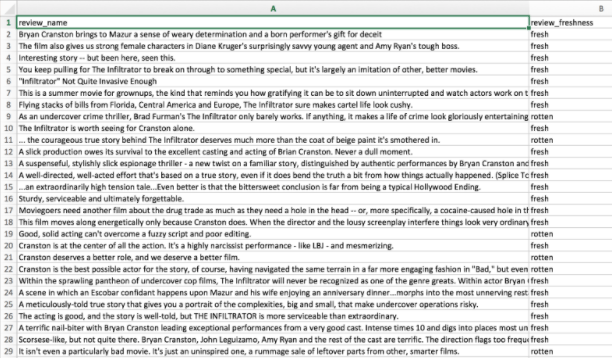

You can see a sample of the results below:

Getting the training data on a CSV.

If you downloaded the CSV, skip ahead to section 3 of this tutorial, 'Training a MonkeyLearn Classifier without Coding', to see how to upload this spreadsheet as training samples.

If you have programming experience and would like to connect the two tools directly, most visual web scraping tools, ParseHub included, also let you access your data through an API. See the MonkeyLearn API and ParseHub API documentation for details.

This is an example of how data can be exchanged between the two tools using a Python script:

```python
import time

import requests
from monkeylearn import MonkeyLearn

# Fill out these four fields before running the script
api_key = "ParseHub API KEY"
project_token = "ParseHub Project Token"
ml_token = "MonkeyLearn Token"
module_id = "MonkeyLearn module ID"

# Tell ParseHub to run the project. The response describes the newly created run.
params = {"api_key": api_key}
print("\nwaiting for data to be extracted...")
r = requests.post(
    "https://www.parsehub.com/api/v2/projects/{}/run".format(project_token),
    data=params,
)

# The run token identifies the run that was just created
run_token = r.json()["run_token"]

# Poll the ParseHub API for the run's status until the data is ready
r = requests.get("https://www.parsehub.com/api/v2/runs/{}".format(run_token), params=params)
r_json = r.json()
while r_json["data_ready"] == 0:
    time.sleep(185)
    r = requests.get("https://www.parsehub.com/api/v2/runs/{}".format(run_token), params=params)
    r_json = r.json()
print("\ndata has been extracted")

# Download the extracted data as JSON using the run token
params = {"api_key": api_key, "format": "json"}
r = requests.get("https://www.parsehub.com/api/v2/runs/{}/data".format(run_token), params=params)
reviews = r.json()["review"]

# The MonkeyLearn API accepts a list of tuples as samples.
# Each tuple holds the text ("name") and freshness ("rating") of one review.
print("\nwaiting for samples to be uploaded...")
samples = []
for item in reviews:
    samples.append((item["name"], "/{}".format(item["rating"])))

# Upload the samples to the MonkeyLearn classifier
ml = MonkeyLearn(ml_token)
res = ml.classifiers.upload_samples(module_id, samples)
print("\nsamples have been uploaded")

# Start training the classifier
res = ml.classifiers.train(module_id)
print("\nclassifier is being trained!")
```

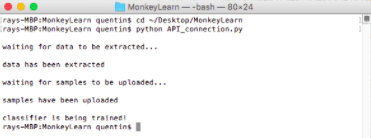

Enter your ParseHub and MonkeyLearn API keys and tokens at the start of the code, and then run the script from your terminal. The ParseHub API documentation describes where to find your API key and project token. Depending on the amount of data, the script will take about 15 to 45 minutes to complete:

Running the code.
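The sample-building step in the script can be tried offline. The sketch below assumes, as the script does, that the ParseHub JSON has a top-level "review" list whose items carry "name" (the review text) and "rating" (fresh or rotten) fields, and it mirrors the script's "/" prefix on the rating. The input is made-up illustration data, and `build_samples` is a hypothetical helper. Unlike the script, it skips entries missing either field, so no unlabeled text is uploaded.

```python
from typing import Any, Dict, List, Tuple


def build_samples(parsehub_data: Dict[str, Any]) -> List[Tuple[str, str]]:
    """Turn ParseHub JSON into (text, "/rating") tuples like the script does."""
    samples = []
    for item in parsehub_data.get("review", []):
        text = item.get("name")
        rating = item.get("rating")
        if not text or not rating:
            continue  # skip reviews where the text or the rating is missing
        samples.append((text, "/{}".format(rating)))
    return samples


# Made-up example data in the assumed ParseHub shape
example = {
    "review": [
        {"name": "A sharp, funny script.", "rating": "fresh"},
        {"name": "Two hours I will never get back.", "rating": "rotten"},
        {"name": "Review with no rating extracted"},
    ]
}
print(build_samples(example))
```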

Now you can use the classifier to predict whether a text is a positive or a negative review. You can also classify text programmatically through the API, which means you can integrate this model directly into your app. To improve the model, open the classifier in MonkeyLearn and provide additional training data.

3. Training a MonkeyLearn Classifier without Coding

Now it's time to train our machine learning model within MonkeyLearn with the data we just scraped. With this classifier, we will be able to predict if a movie review is positive or negative using machine learning.

As a first step, click on "Create Model" within the MonkeyLearn dashboard and give it a name and description. Remember that you are creating a classifier and not a pipeline.

In the model creation wizard, tell MonkeyLearn that you are working with 'Web Scraping' data for 'Sentiment Analysis' from 'Comments or Reviews':

Creating my text classifier on MonkeyLearn.

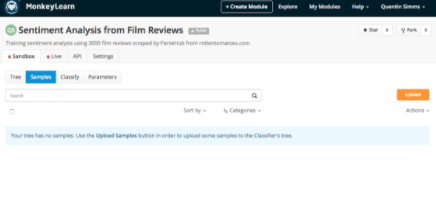

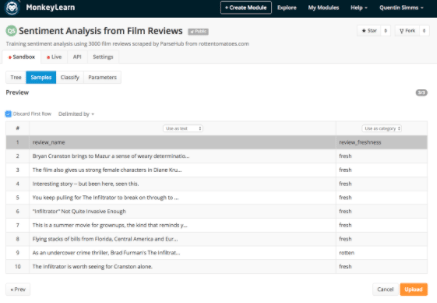

Now, we have created our model and it's time to upload the training data. To do this, go to the 'Samples' tab of the 'Sandbox' of your classifier, click the 'Upload' button and choose the CSV file you saved from ParseHub.

Remember to select "Discard First Row", then choose 'use as text' for the column with the text of the review and 'use as tag' for the column with the "review freshness":

Uploading my training data to MonkeyLearn.

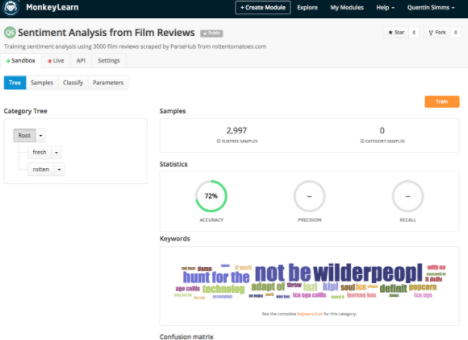
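If you want to check the spreadsheet before uploading it, a few lines of Python will show which rows have both a review and a rating and how many samples of each tag you have. This is a sketch using the standard `csv` module; the column names `review_name` and `review_rating` are assumptions, so replace them with the headers in your own ParseHub CSV. Reading with `csv.DictReader` consumes the header row, the code equivalent of "Discard First Row".

```python
import csv
import io
from collections import Counter
from typing import List, Tuple

# Assumed column headers; check the first row of your ParseHub CSV.
TEXT_COLUMN = "review_name"
TAG_COLUMN = "review_rating"


def load_training_rows(csv_text: str) -> List[Tuple[str, str]]:
    """Return (text, tag) pairs, skipping rows without both values."""
    reader = csv.DictReader(io.StringIO(csv_text))  # first row is the header
    rows = []
    for record in reader:
        text = (record.get(TEXT_COLUMN) or "").strip()
        tag = (record.get(TAG_COLUMN) or "").strip()
        if text and tag:
            rows.append((text, tag))
    return rows


# Made-up example data; the last row has no review text and is skipped
sample_csv = (
    "review_name,review_rating\n"
    "A delight from start to finish.,fresh\n"
    "Two hours I will never get back.,rotten\n"
    ",fresh\n"
)
rows = load_training_rows(sample_csv)
print(Counter(tag for _, tag in rows))
```

For a real export, read the file with `open(path, newline="", encoding="utf-8")` and pass its contents to `load_training_rows`. A strongly lopsided count of fresh versus rotten reviews is worth knowing about before you train.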

Now go back to the 'Tree' tab and click "Train". And voila, you have a working sentiment analysis model. Isn't machine learning easy?

My machine learning model, trained without writing a single line of code.

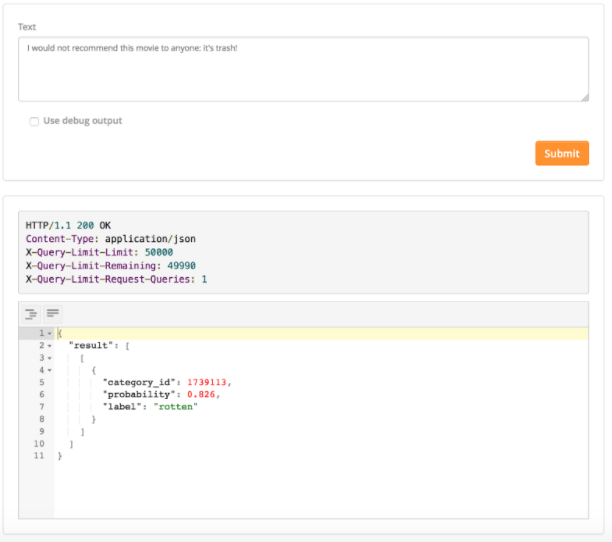

You can test the classifier within the 'Classify' tab and see how it predicts the sentiment of a new review:

Testing the classifier.

Or you can use the MonkeyLearn API to integrate the machine learning model into your app and make predictions programmatically.

Final words

With MonkeyLearn, natural language processing has never been as accessible, and the same goes for data extraction. Visual web scraping tools not only make it possible for people without any coding experience to get the data they need, but also cut down the time experienced developers spend on data extraction. Less time spent getting the data means more time to analyze and visualize it.

How do you plan to use the power of combining machine learning and web scraping?

If you are a developer, let us know how you are integrating natural language processing into your app or website, and your favourite methods for extracting data from the web. And if you are a non-programmer, we hope that this opened your eyes to some awesome tools that you can use without writing a single line of code.

Finally, we would like to know what you think of MonkeyLearn, ParseHub and other visual web scraping applications! Please share your thoughts in the comment section of this post.

Federico Pascual

September 22nd, 2016