Analyzing news headlines across the globe with Kimono and MonkeyLearn

Update May 2016: Kimono has been acquired by Palantir and its cloud service has been discontinued.

Contributed by Pratap Ranade, co-founder at Kimono Labs.

The news is probably one of the first things you check in the morning, but how much does what you know and understand about the world depend on your news source? Will you view the world differently if you head over to CNN instead of the BBC? Tools like MonkeyLearn and Kimono let us gather and analyze the text we encounter in our daily lives and see what lies behind the words. In this case, it turns out that different news sources cover different stories and give them different weight.

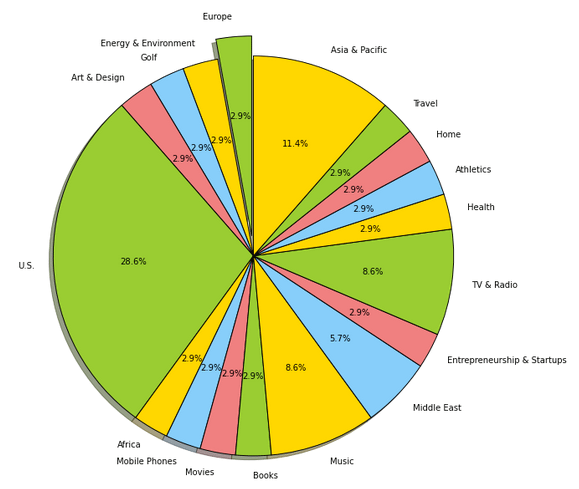

We analyzed the front-page articles of five major global news outlets: Fox, CNN, China Daily, Al Jazeera (English), and the BBC. We collected the data and then ran it through MonkeyLearn's text analysis, which combines news classification, keyword extraction, and entity extraction (a process that recognizes text corresponding to important people, places, and organizations).

In this post, we share a few insights and explain how to replicate our process so that you can analyze any text on the web. This analysis is by no means conclusive, as it is based on just one day of data; instead, it demonstrates the types of insights you can get when you use smart tools like Kimono and MonkeyLearn.

We did this in three steps:

- Set up Kimono APIs for top news sites.

- Format our Kimono API output.

- Upload data to MonkeyLearn and run it through the appropriate classifiers.

1. Set up Kimono APIs for top news sites

For each news source, we created one 'Headlines API' to scrape the links to the articles on the front page, and fed those links into a secondary 'Articles API' to crawl the full article text behind each link. You can read more about how to do this in this blog post, or you can watch this video tutorial. Setting up this two-step crawl lets you quickly scrape a broad set of content.
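Kimono's hosted service is no longer available, but the two-step pattern itself is easy to sketch in Python. The example below only illustrates the idea: `two_step_crawl` and the two callables it takes are hypothetical stand-ins for the 'Headlines API' and the 'Articles API', and the stubs return canned data instead of making network requests.

```python
def two_step_crawl(fetch_links, fetch_article):
    """Fetch front-page links, then fetch the article behind each unique link."""
    articles = {}
    for link in fetch_links():
        if link in articles:
            continue  # skip links that appear more than once on the front page
        articles[link] = fetch_article(link)
    return articles


# Stub fetchers standing in for real scrapers (hypothetical URLs).
def stub_links():
    return ["https://example.com/a", "https://example.com/b", "https://example.com/a"]


def stub_article(link):
    return [{"article_text": "Text of " + link}]


crawled = two_step_crawl(stub_links, stub_article)
```

Passing the fetchers in as functions keeps the crawl logic separate from whichever scraping tool supplies the links and the article text.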

Here’s a sample of our unprocessed output from our BBC Articles API (this is the content behind one of the BBC front page articles):

    {
      "article_text": "Police in Haiti have clashed with anti-government protesters angry about the high cost of fuel."
    },
    {
      "article_text": "Several people were injured as police moved in to clear roadblocks set up in the capital, Port-au-Prince."
    },
    {
      "article_text": "Protest organisers said that drivers who ventured into the city centre during the two-day protest would be putting their lives at risk."
    },
    {
      "article_text": "Haiti has seen months of protests against President Michel Martelly over delayed elections."
    },
    {
      "article_text": "\"Because of the price of fuel, the cost of living is going up,\" said Ralph La Croix, a Port-au-Prince resident."
    },

To make this data compatible with MonkeyLearn, we need to join these 'article_text' properties into a single string.

2. Format the data

To format the data, you can use your language of choice (or you can write a JavaScript function directly in the Kimono web interface).

In this scenario, we downloaded the data, stitched the article_text properties for each API into one string and pushed it to MonkeyLearn. Here is the code repository along with an IPython Notebook - we suggest you clone the repository and follow the example to see how we formatted the data and called the MonkeyLearn classifiers to analyze the text.
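The stitching step can be sketched in a few lines of Python. This is a minimal illustration rather than the code from the repository: the function name `join_article_text` is hypothetical, and it assumes each record is a dict with an `article_text` key, as in the BBC sample above.

```python
def join_article_text(records, separator=" "):
    """Join the 'article_text' fields of scraped records into one string."""
    parts = []
    for record in records:
        text = record.get("article_text", "")
        # Collapse runs of whitespace and skip records with no text.
        text = " ".join(text.split())
        if text:
            parts.append(text)
    return separator.join(parts)


sample = [
    {"article_text": "Police in Haiti have clashed with anti-government protesters angry about the high cost of fuel."},
    {"article_text": "Several people were injured as police moved in to clear roadblocks set up in the capital, Port-au-Prince."},
]
article = join_article_text(sample)
```

Each article then becomes one string, which is the unit the classifiers and extractors operate on.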

3. Upload data to MonkeyLearn and run it through the appropriate classifiers

Here are links to each of the Kimono APIs we used, if you would like to get the data manually:

Here is an excerpt of code from the IPython notebook that shows you how to run the MonkeyLearn classifiers on the formatted data:

    import json

    import requests

    # API_KEY, BATCH_SIZE, the three endpoint URLs, src_data and objects are
    # assumed to be defined earlier in the notebook.

    # We will store all of our results here. The first list will contain news
    # classification, the second extracted entities, and the third will hold
    # any extracted keywords. Positions will correspond to the position of the
    # original piece of news in the objects variable.
    partials = [[], [], []]

    while src_data:
        # We will classify data in chunks of BATCH_SIZE.
        items = src_data[:BATCH_SIZE]

        # Prepare the data and headers for our request.
        data = {'text_list': items}
        headers = {'Authorization': 'Token {0}'.format(API_KEY),
                   'Content-Type': 'application/json'}

        # We will call the classifiers and extractors here, and then add the
        # result to the partials list.
        for i, url in enumerate([NEWS_CLASSIFIER, ENTITY_EXTRACTOR, KEYWORD_EXTRACTOR]):
            response = requests.post(url,
                                     data=json.dumps(data),
                                     headers=headers)
            result = json.loads(response.text)['result']
            partials[i] += result

        # Remove the classified items from the list of items.
        src_data = src_data[BATCH_SIZE:]

    # Let's zip the original news with the data MonkeyLearn returned. We will
    # end up with a list containing tuples that look like:
    # (news text, news categories, extracted entities, extracted keywords)
    data = list(zip(objects, *partials))
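The loop above consumes `src_data` as it goes. If you would rather keep the original list intact, the batching can be factored into a small generator. This is a sketch of that alternative, not code from the notebook; `batched` is a hypothetical helper name.

```python
def batched(items, batch_size):
    """Yield consecutive slices of `items`, each at most `batch_size` long."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


texts = ["article one", "article two", "article three"]
batches = list(batched(texts, 2))
# `texts` is left unchanged, so it can still be zipped with the results later.
```

Each batch can then be posted exactly as in the excerpt, with the results appended to `partials` in the same order.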

Visualization

By running the scraped data through MonkeyLearn's classifiers and extractors, we can analyze and graph it to generate different insights. See a few samples below, or view more in the Notebook.

We can see which were the most popular topics:

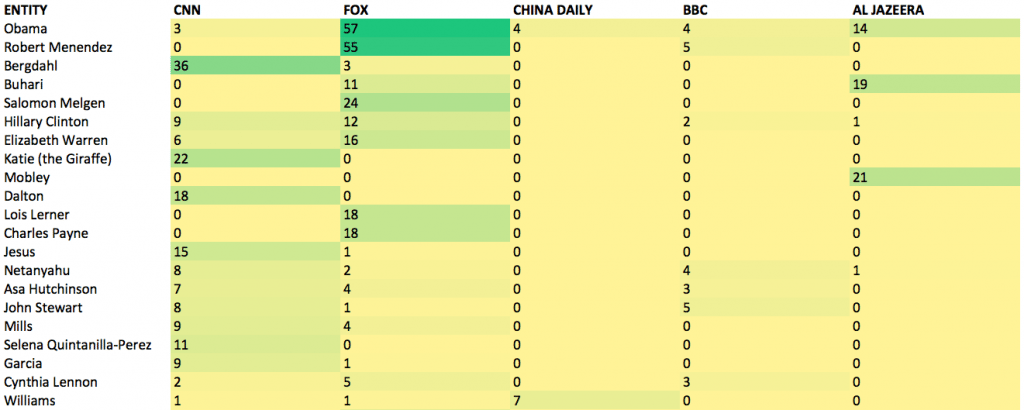
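A tally like this can be computed with the standard library. The sketch below assumes the classifier output has already been reduced to one list of category labels per article; the actual shape of MonkeyLearn's response is not shown in this post, and the sample labels are made up for illustration.

```python
from collections import Counter


def top_labels(labels_per_article, n=3):
    """Count how many articles carry each label and return the n most common."""
    counts = Counter()
    for labels in labels_per_article:
        # Use a set so that a label repeated within one article counts once.
        counts.update(set(labels))
    return counts.most_common(n)


# Hypothetical labels, one list per article.
labels_per_article = [
    ["Politics", "World"],
    ["Politics"],
    ["Sports"],
    ["Politics", "Sports"],
]
ranking = top_labels(labels_per_article, n=2)
```

The same function would work for people or organizations, given one list of extracted entities per article.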

We can also see who the most talked about people were:

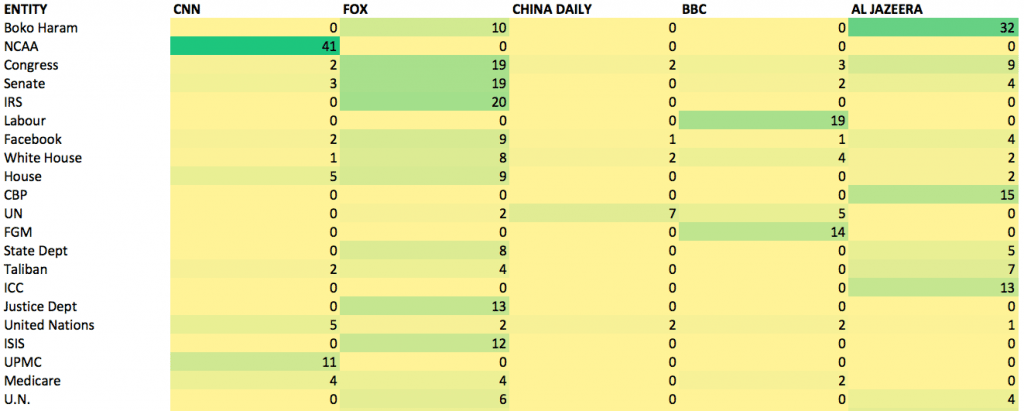

Or check out which were the most talked about organizations:

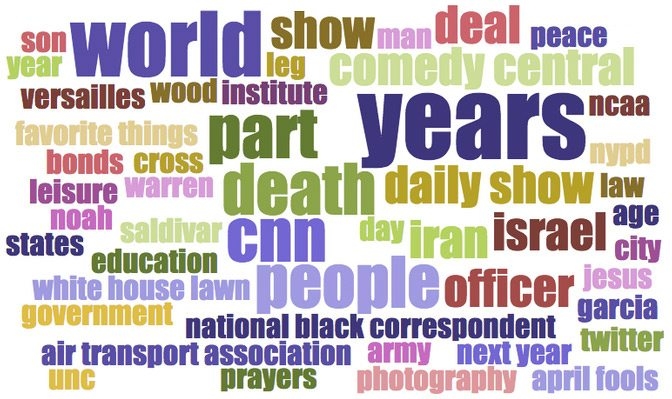

Or create a word cloud with the keywords in the articles:

Insights

Using this process, we garnered some preliminary insights on these news outlets, such as:

- Only 1 person and 4 organizations were consistently mentioned across all global outlets - Barack Obama, Congress, the White House, Facebook and the United Nations.

- Al Jazeera and Fox had the highest overlap (98 terms), while Al Jazeera and the BBC had the lowest (44 terms).

- Mike Pence, the infamous Indiana Governor who signed a controversial 'religious freedom law' in Indiana, made the news in all outlets except China Daily.

- NCAA mentions appeared only on CNN, with March Madness entering its final week.

- Boko Haram appears consistently only on Fox and Al Jazeera (we ran this analysis for longer, and this is consistent across months).

- Bowe Bergdahl, the once-missing American soldier charged with deserting his unit, appeared significantly more often (10x) on CNN than on Fox, and was not mentioned outside the US.

- The corruption charges against Senator Menendez of New Jersey (D) had a high number of mentions but appeared exclusively on Fox and the BBC, not CNN.
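Overlap figures like these come down to set operations on the terms extracted for each outlet. The following is a minimal sketch under that assumption; the function names and the tiny term sets are hypothetical and are not the data behind the numbers reported here.

```python
from itertools import combinations


def pairwise_overlap(terms_by_outlet):
    """Return {(outlet_a, outlet_b): number of shared terms} for every pair."""
    return {
        (a, b): len(terms_by_outlet[a] & terms_by_outlet[b])
        for a, b in combinations(sorted(terms_by_outlet), 2)
    }


def common_to_all(terms_by_outlet):
    """Return the terms mentioned by every outlet."""
    term_sets = list(terms_by_outlet.values())
    return set.intersection(*term_sets) if term_sets else set()


# Toy term sets, for illustration only.
terms_by_outlet = {
    "BBC": {"Barack Obama", "United Nations", "Haiti"},
    "CNN": {"Barack Obama", "United Nations", "NCAA"},
    "Fox": {"Barack Obama", "Boko Haram"},
}
overlaps = pairwise_overlap(terms_by_outlet)
shared = common_to_all(terms_by_outlet)
```

Sorting the outlet names before pairing them gives each pair a stable key, so results from different days can be compared directly.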

We'd need to run a longer analysis to draw conclusions, but it is interesting to note that in this analysis, as in our past news analyses, negativity seems to win in reporting: CNN and Fox spend more time on negative content about the Democratic Party than they do on positive content about the Republicans.

Have any cool ideas on how to combine Kimono and MonkeyLearn? Share them with us in the comments.

Federico Pascual

April 17th, 2015