Aspect Analysis from reviews using Machine Learning

Recently we walked you through how to train a sentiment analysis classifier for hotel reviews using Scrapy and MonkeyLearn. That tutorial is a perfect example of how web-scraped data and machine learning can be combined to discover valuable insights about a particular industry.

With this model, we were able to analyze millions of reviews and understand whether guests love or hate different hotels. But besides understanding the sentiment of a review, wouldn't it be interesting to understand which particular aspects of a hotel guests love or hate?

This post will cover how you can create a machine learning classifier to understand the different aspects of hotel reviews. We will combine this model with the sentiment analysis classifier to answer questions such as: are guests loving the location of a particular hotel but complaining about its cleanliness?

Getting the data

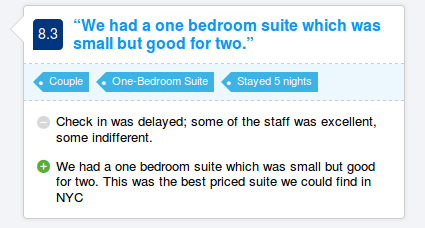

We decided to scrape reviews from Booking.com to get the data for training our aspect classifier. The format of these reviews, divided into positive and negative parts, makes guests more straightforward about the different aspects of the hotel, such as cleanliness, location, or comfort. In contrast, reviews on other sites such as TripAdvisor are less structured and tend to mix various opinions, with the writer telling the story of their experience with the establishment. In addition, because the reviews are divided into positive and negative parts, they could be useful later on if we want to train a new sentiment analysis classifier.

A Booking.com hotel review

We wrote a Scrapy spider that scrapes six pages of reviews from every hotel in New York City. Since every page has 75 reviews (up to 450 reviews per hotel), this came to around 75,000 items. For each item, we collect the title, the tags, the positive content, the negative content, and the total score. Sadly, even though guests give a score for each aspect, only the totals are visible.
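The full spider isn't reproduced here, but a minimal sketch of the item it yields might look like the following. The class name `ReviewItem`, its field names, the helper functions, and the `"positive"`/`"negative"` labels are all hypothetical, not taken from the original code. The `labeled_texts` helper shows why the positive/negative split is handy: each half of a review already carries a sentiment label.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Figures from the post: six pages per hotel, 75 reviews per page.
PAGES_PER_HOTEL = 6
REVIEWS_PER_PAGE = 75


@dataclass
class ReviewItem:
    """One scraped review. Field names are illustrative, not the original spider's."""
    title: str
    tags: List[str] = field(default_factory=list)
    positive: str = ""             # text from the positive part of the review
    negative: str = ""             # text from the negative part of the review
    score: Optional[float] = None  # only the total score is visible on the page


def max_reviews_per_hotel(pages: int = PAGES_PER_HOTEL,
                          per_page: int = REVIEWS_PER_PAGE) -> int:
    """Upper bound on how many reviews the spider collects for one hotel."""
    return pages * per_page


def labeled_texts(item: ReviewItem) -> List[Tuple[str, str]]:
    """Pair each non-empty half of a review with a sentiment label (labels are assumptions)."""
    pairs = []
    if item.positive.strip():
        pairs.append((item.positive.strip(), "positive"))
    if item.negative.strip():
        pairs.append((item.negative.strip(), "negative"))
    return pairs
```

In a real Scrapy project, `ReviewItem` would typically be a `scrapy.Item` (or a dataclass yielded directly from the spider's `parse` method); a plain dataclass keeps the sketch free of dependencies.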

You can view the full code for the spider here.

Opinion Units

Since we wanted a classifier that works by hotel aspect, we needed text data tagged with the specific aspects it mentions (a single opinion can reference more than one aspect). With this in mind, we divided the text content into what we called opinion units: we split the text into sentences using a simple script and further split the sentences wherever the word "but" appeared, because that connector is usually used to express more than one opinion in the same sentence. For example:

“The location was good, but the service was lacking.”

Here we have one opinion unit 'The location was good' where the aspect is the location and the sentiment is positive, followed by another opinion unit 'the service was lacking' where the aspect is the service and the sentiment is negative.

Opinion units are mostly short phrases with an opinion about some aspect of the hotel (usually a single aspect per opinion unit), which is just what we needed to train a machine learning model.
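The post doesn't include the splitting script, so here is a minimal sketch of the same idea, assuming a naive regex sentence splitter (break after `.`, `!` or `?` followed by whitespace) and a split on the standalone word "but". The function name `opinion_units` is hypothetical; a production version might use a proper sentence tokenizer instead.

```python
import re
from typing import List

# Naive sentence boundary: ., ! or ? followed by whitespace (an assumption).
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")
# Standalone word "but" (not "butter"), optionally preceded by a comma.
BUT_SPLIT = re.compile(r",?\s+\bbut\b\s+", flags=re.IGNORECASE)


def opinion_units(text: str) -> List[str]:
    """Split review text into sentences, then split each sentence on "but"."""
    units = []
    for sentence in SENTENCE_END.split(text.strip()):
        for part in BUT_SPLIT.split(sentence):
            part = part.strip(" ,.!?")  # drop leftover punctuation at the edges
            if part:
                units.append(part)
    return units


print(opinion_units("The location was good, but the service was lacking."))
# ['The location was good', 'the service was lacking']
```

Splitting on "but" is a heuristic: it handles the common "X was good, but Y was bad" pattern, but it will occasionally split a single opinion in two.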

Tagging the data and creating the tag list

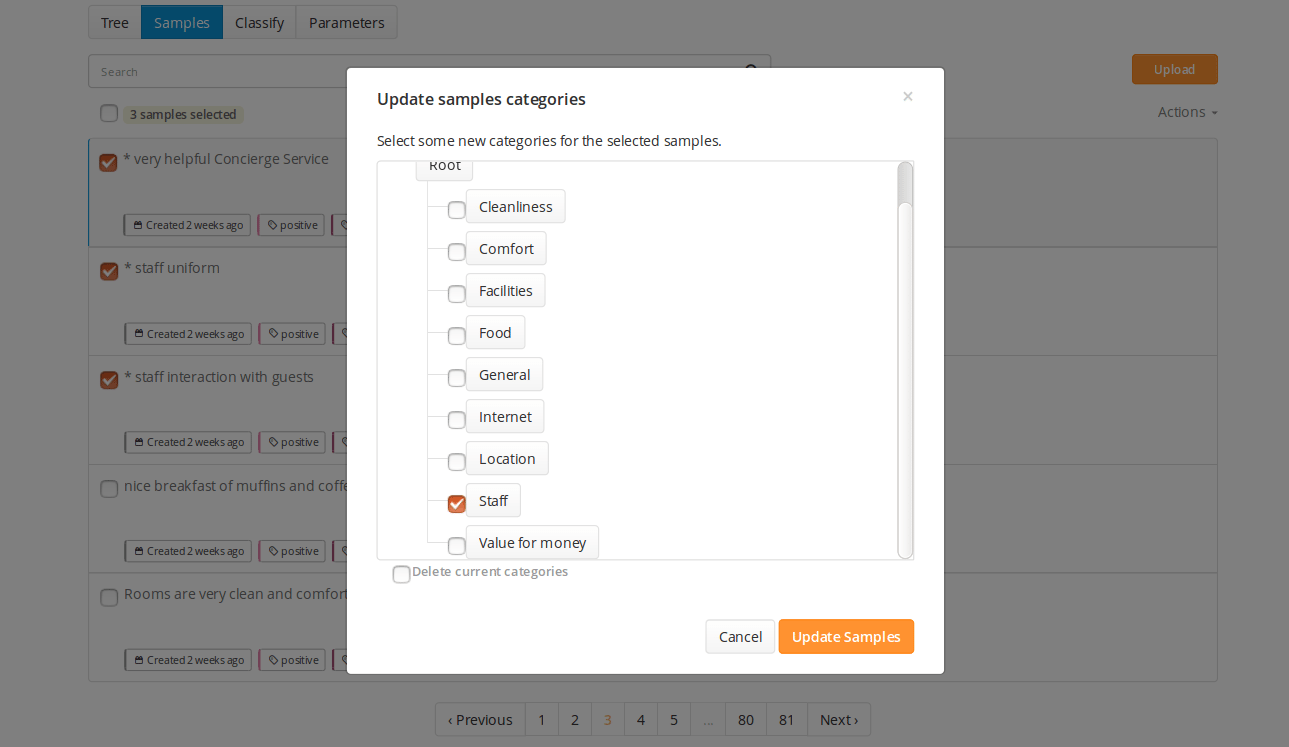

We set aside 400 of these opinion units as our testing dataset. We created a multi-label classifier and tagged these samples by hand using MonkeyLearn's UI:

Tagging the data by hand with MonkeyLearn

This was a time-consuming but necessary process, since it's good practice to have a trusted dataset on which you can test the machine learning model after it's trained.
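The post doesn't say how the 400 units were chosen. Assuming a random selection, a reproducible hold-out split could be sketched like this (the function name `hold_out` and the fixed seed are illustrative choices):

```python
import random
from typing import List, Sequence, Tuple


def hold_out(units: Sequence[str], test_size: int = 400,
             seed: int = 0) -> Tuple[List[str], List[str]]:
    """Randomly set aside `test_size` units for testing; the rest remain for training."""
    if test_size > len(units):
        raise ValueError("test_size is larger than the dataset")
    rng = random.Random(seed)  # fixed seed so the same split can be recreated
    test_idx = set(rng.sample(range(len(units)), test_size))
    test = [u for i, u in enumerate(units) if i in test_idx]
    train = [u for i, u in enumerate(units) if i not in test_idx]
    return train, test
```

Keeping the test units out of the training data is what makes the later accuracy numbers trustworthy: the model is scored on opinions it has never seen.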

It also helps you understand the data and the problem you want to solve. Initially, we weren't 100% sure which aspects we should tag the opinions with. As a starting point, we used the tags shown on Booking.com (Cleanliness, Comfort, Location, Facilities, Staff, Value for Money, and WiFi). But while tagging the testing dataset, we decided to make some modifications to the tags we would use.

Training the classifier

When training a machine learning classifier, you'll usually need a substantial amount of training data (on the order of thousands of texts) for the classifier to work accurately. It was time to get some help from the crowd, so we used Amazon Mechanical Turk, a marketplace for outsourcing micro jobs, to scale up the tagging of the text data.

There are a lot of alternatives out there, but Mechanical Turk is pretty reliable. The most important part of this process is writing the instructions for the workers, detailing what to include and what not to include in each tag (in our case, the aspects). Without good instructions, the workers can't do a good job.

Once the instructions were written, the actual tagging process was pretty quick. We got 2,000 samples tagged in a couple of hours, and the quality of the tags was mostly very good.

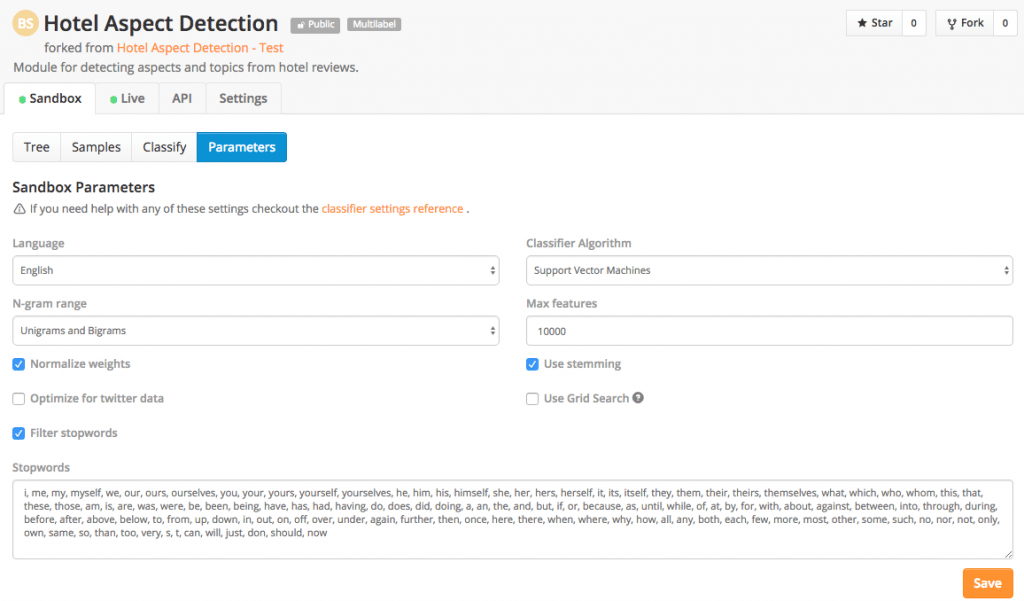

Once we had the tagged training set, we uploaded it to MonkeyLearn and trained a new multi-label classifier. At first, the classifier had low accuracy (50%), so we experimented with the parameters to get better results. Specifically, we changed the algorithm to Support Vector Machines, which, although slower to train than Naive Bayes, usually produce a more accurate classifier.

These changes to the model's parameters raised the accuracy, but it still wasn't at an acceptable level. Looking through the results, we found out why: there was a lot of overlap between the Comfort and Facilities tags.

If a sample is tagged as Facilities, more often than not one could make a strong case for tagging it as Comfort as well. For example, in the opinion “the room's bathroom is too small”, the bathroom is a facility, but a small bathroom is clearly a cause of discomfort. “Room was noisy and cold” is clearly about comfort, but rooms are also facilities.

In other words, the classifier was generally confused by these types of opinions, since the taggers themselves didn't really agree on what counts as an opinion about the hotel's facilities and what counts as one about its comfort.
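One rough way to spot this kind of overlap, assuming each tagged sample is represented as a set of tag names, is to measure how often one tag is assigned together with another. The sketch below (the function name `tag_overlap` is hypothetical) computes, for every ordered pair of tags (a, b), the fraction of samples tagged a that are also tagged b. A high value in both directions suggests two tags with blurry boundaries.

```python
from collections import Counter
from itertools import combinations
from typing import Dict, Iterable, Set, Tuple


def tag_overlap(samples: Iterable[Set[str]]) -> Dict[Tuple[str, str], float]:
    """Fraction of samples tagged `a` that are also tagged `b`, for each co-occurring pair."""
    single: Counter = Counter()  # how many samples carry each tag
    pair: Counter = Counter()    # how many samples carry both tags of a pair
    for tags in samples:
        single.update(tags)
        for a, b in combinations(sorted(tags), 2):
            pair[(a, b)] += 1
            pair[(b, a)] += 1
    return {(a, b): n / single[a] for (a, b), n in pair.items()}
```

This only looks at tags that were assigned together; disagreement between different taggers on the same text would need the individual workers' answers, which this sketch assumes are not available.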

Thus, we decided to combine the two tags into a new tag, Comfort & Facilities, which raised the accuracy because the criterion is less ambiguous. This is a common pattern when creating a classifier: you have to rethink your classification strategy and work on your model before it produces consistent results.
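Merging tags means relabeling the existing training data before retraining. A minimal sketch, assuming each sample's tags are stored as a collection of strings (the names `MERGE`, `merge_tags`, and `relabel` are hypothetical):

```python
from typing import Dict, Iterable, List, Set

# Old tag -> merged tag. Only the Comfort/Facilities merge comes from the post.
MERGE: Dict[str, str] = {
    "Comfort": "Comfort & Facilities",
    "Facilities": "Comfort & Facilities",
}


def merge_tags(tags: Iterable[str], mapping: Dict[str, str] = MERGE) -> Set[str]:
    """Rename tags via `mapping`; tags not in the mapping are kept unchanged."""
    return {mapping.get(tag, tag) for tag in tags}


def relabel(dataset: List[Set[str]]) -> List[Set[str]]:
    """Apply the merge to every sample in a tagged dataset."""
    return [merge_tags(tags) for tags in dataset]
```

Returning a set means a sample that was tagged both Comfort and Facilities ends up with a single Comfort & Facilities tag instead of a duplicate.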

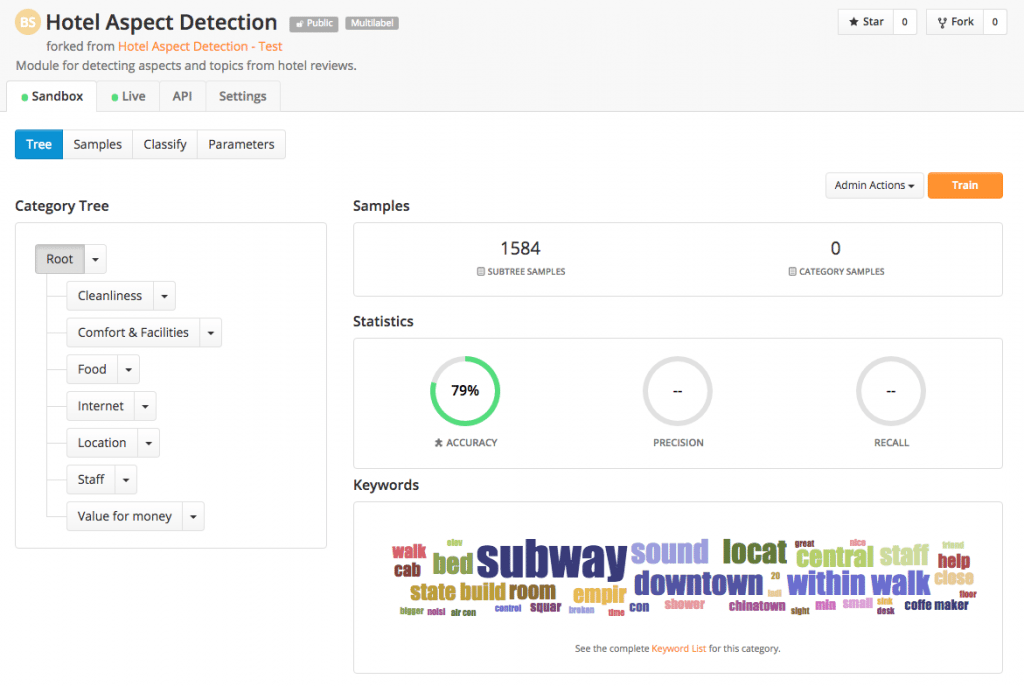

The final classifier reaches 79% accuracy with 7 tags and above 80% precision and recall on almost all tags. This is a very good result, especially considering that we are training a multi-label classifier; these kinds of models usually need more accurately tagged data, which is harder to come by.
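The post doesn't specify how accuracy is defined for this multi-label model. As an illustration only, the sketch below computes per-tag precision and recall on a test set, plus exact-match accuracy (a sample counts as correct only if its predicted tag set equals the gold tag set), which is one common definition; MonkeyLearn may compute its figure differently. Function names are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def per_tag_scores(gold: List[Set[str]], pred: List[Set[str]]) -> Dict[str, Tuple[float, float]]:
    """Return {tag: (precision, recall)} computed independently for each tag."""
    tags = set().union(*gold, *pred)
    scores = {}
    for tag in sorted(tags):
        tp = sum(1 for g, p in zip(gold, pred) if tag in g and tag in p)
        fp = sum(1 for g, p in zip(gold, pred) if tag not in g and tag in p)
        fn = sum(1 for g, p in zip(gold, pred) if tag in g and tag not in p)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        scores[tag] = (precision, recall)
    return scores


def exact_match_accuracy(gold: List[Set[str]], pred: List[Set[str]]) -> float:
    """Share of samples whose predicted tag set matches the gold tag set exactly."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)
```

Exact-match accuracy is strict for multi-label data: a prediction that gets one of two tags right still counts as wrong, which is part of why multi-label results are harder to push up.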

Check out the finished classifier here.

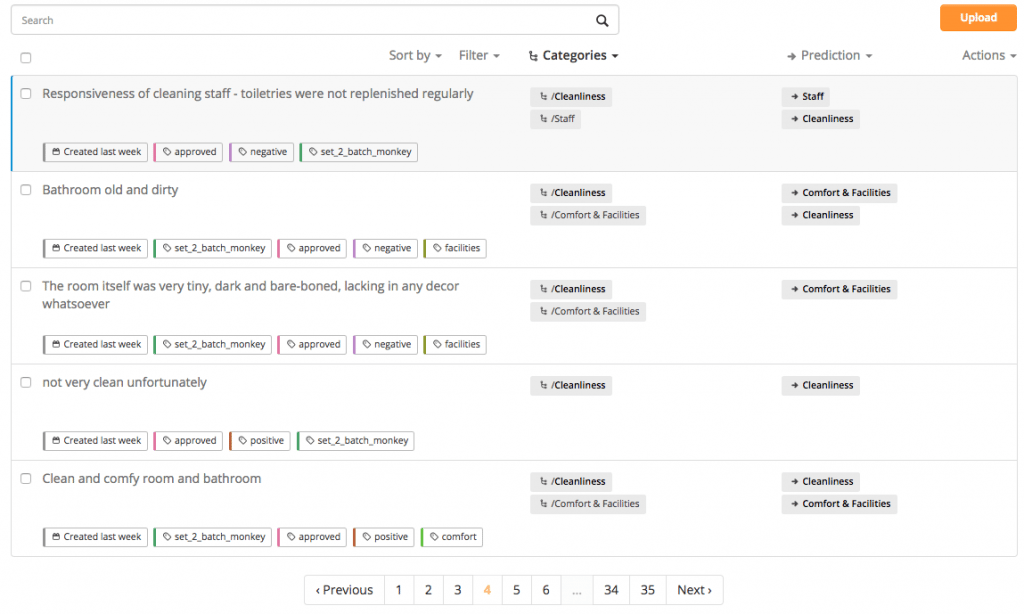

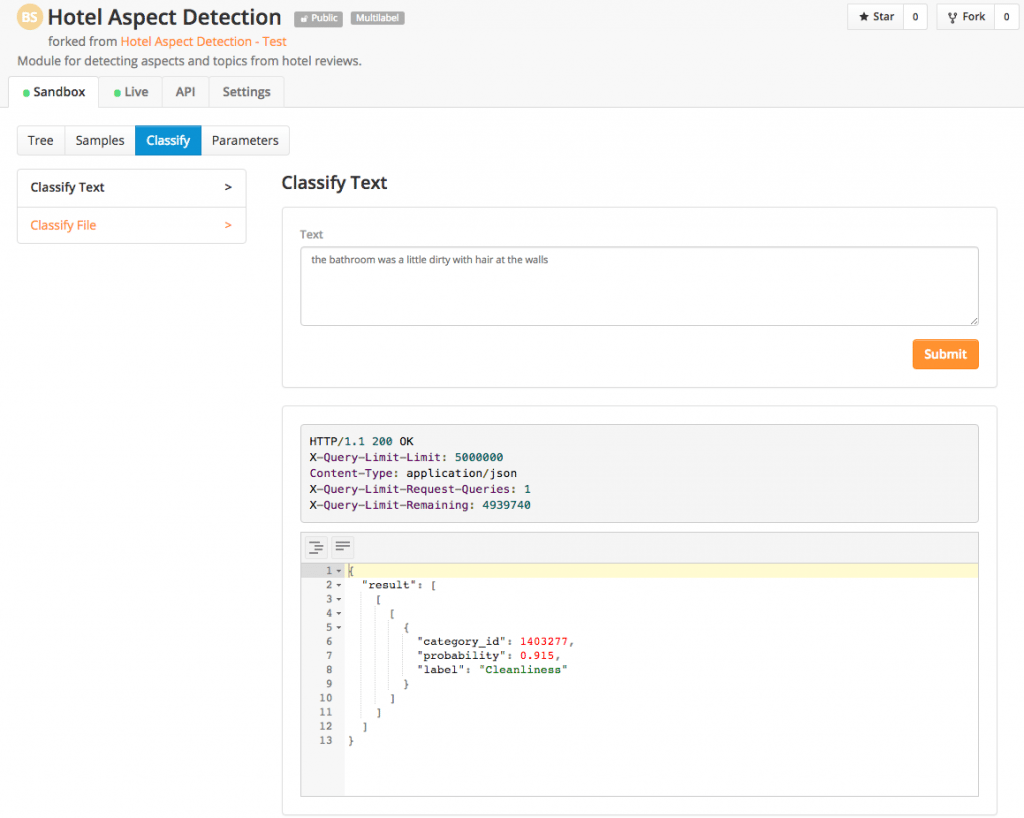

You can easily try the classifier by going to the Classify section within the Sandbox tab and making a prediction:

For "spotless - clean, modern and staff very friendly and really helpful!", the classifier predicts both Cleanliness and Staff, each with a probability of 1.0. This example justifies our decision to use a multi-label classifier.
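To see why this matters, compare a multi-label decision rule with a single-label one. The sketch assumes the prediction has already been turned into a dict mapping tag names to probabilities (the exact response format of MonkeyLearn's API is not shown here), and the 0.5 threshold is an illustrative assumption.

```python
from typing import Dict, List


def multi_label(probabilities: Dict[str, float], threshold: float = 0.5) -> List[str]:
    """Keep every tag whose probability reaches the threshold (threshold is an assumption)."""
    return sorted(tag for tag, p in probabilities.items() if p >= threshold)


def single_label(probabilities: Dict[str, float]) -> str:
    """A single-label classifier would return only the most likely tag."""
    return max(probabilities, key=probabilities.get)


# Probabilities reported in the post for the example review.
prediction = {"Cleanliness": 1.0, "Staff": 1.0}
print(multi_label(prediction))   # ['Cleanliness', 'Staff']
print(single_label(prediction))  # only one of the two tags survives
```

A single-label model is forced to drop one of two aspects the review clearly mentions; the multi-label rule keeps both.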

You can also do bulk classifications with the Classify File option by uploading a CSV or Excel file containing all the texts you want to classify, one text per row. This generates a new CSV with additional columns containing the predictions and probabilities for each row.
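If you'd rather run the same kind of batch job in your own code, the idea can be sketched with Python's `csv` module. This is not MonkeyLearn's implementation: `classify` is a hypothetical callable returning `{tag: probability}`, and the output columns (`text`, `tags`, `probabilities`) and `|` separator are arbitrary choices.

```python
import csv
import io
from typing import Callable, Dict, TextIO

Classifier = Callable[[str], Dict[str, float]]


def classify_file(src: TextIO, dst: TextIO, classify: Classifier,
                  threshold: float = 0.5) -> int:
    """Read one text per row from `src`; write text, tags and probabilities to `dst`."""
    reader = csv.reader(src)
    writer = csv.writer(dst)
    writer.writerow(["text", "tags", "probabilities"])
    count = 0
    for row in reader:
        if not row or not row[0].strip():
            continue  # skip blank lines
        text = row[0]
        probs = classify(text)
        tags = sorted(t for t, p in probs.items() if p >= threshold)
        writer.writerow([text, "|".join(tags), "|".join(f"{probs[t]:.2f}" for t in tags)])
        count += 1
    return count


if __name__ == "__main__":
    # Toy keyword classifier standing in for the real model (hypothetical).
    def toy_classify(text: str) -> Dict[str, float]:
        lower = text.lower()
        return {"Cleanliness": 1.0 if "clean" in lower else 0.0,
                "Staff": 1.0 if "staff" in lower else 0.0}

    out = io.StringIO()
    classify_file(io.StringIO("Very clean room\nFriendly staff\n"), out, toy_classify)
    print(out.getvalue())
```

For real files, pass handles opened with `open(path, newline="", encoding="utf-8")`; taking file-like objects keeps the function easy to test.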

In addition, you can integrate the model into your own project using the API that is published instantly once you create a MonkeyLearn model. If you work with Python, Ruby, PHP, JavaScript, or Java, you can also use the SDKs to make the integration even easier.

Getting insights from reviews

Alright, we have our hotel review classifier by aspect. What now?

A machine learning model that automatically understands what a review is talking about has many uses and applications. For example, let's imagine that we want to know which aspects of each hotel are mostly praised by reviewers and which are mostly criticized.

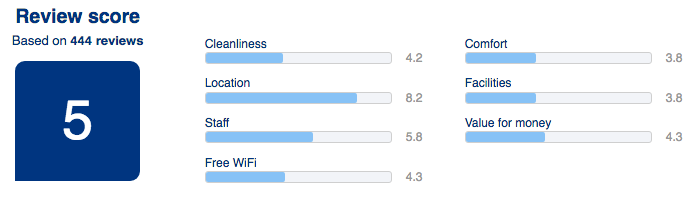

How could we do that? First, using the spider we've built, we can download all the reviews of the New York Inn hotel. You can see from the aspect breakdown that it's not a particularly good hotel, but apparently it's well located:

Hotel review score breakdown from Booking.com.

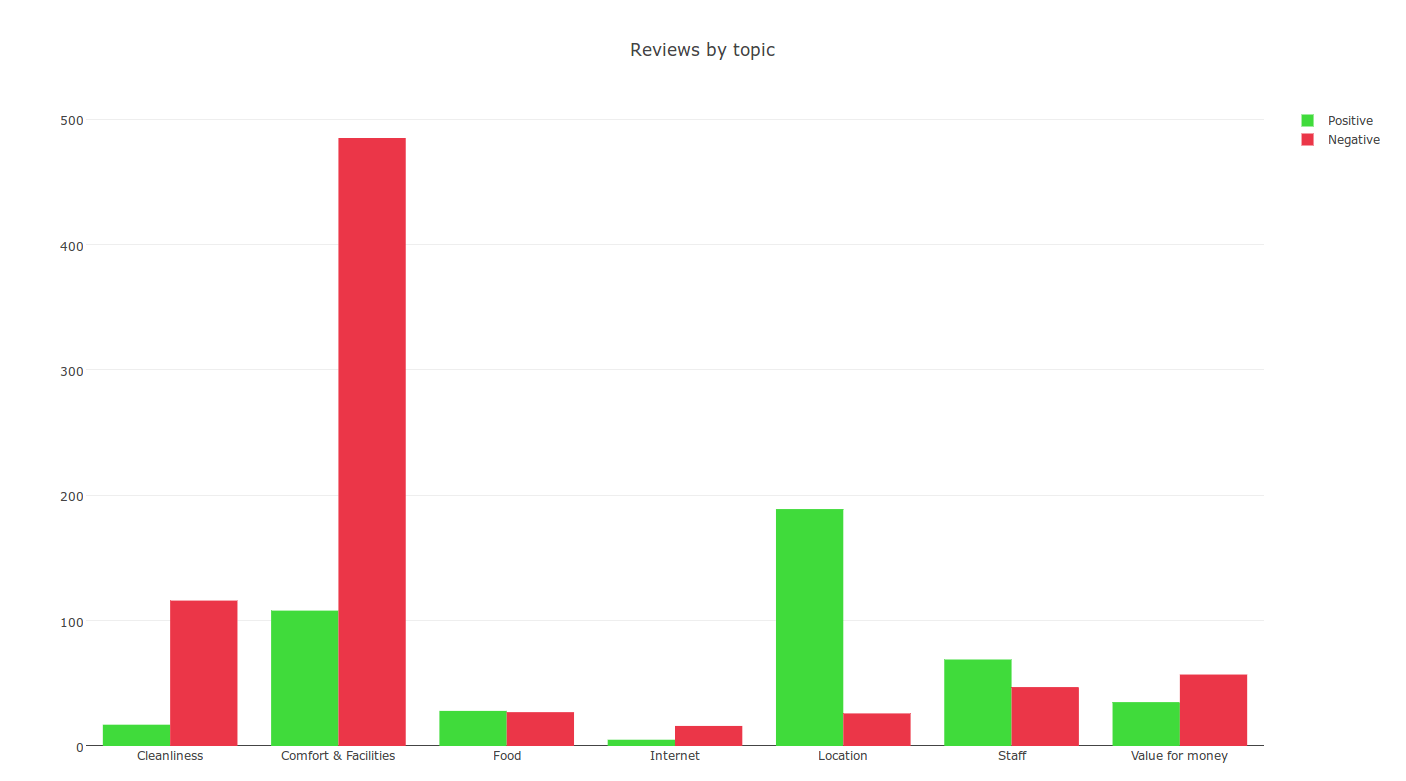

Afterwards, we can divide the reviews into opinion units and classify them by aspect using the API. We also classified these opinion units by sentiment, using the sentiment analysis classifier we built in our last post.

Finally, we can combine these results into this graph:

Using Plotly to plot the results

You can see that while reviews of cleanliness and comfort & facilities are mostly negative, mentions of the location are mostly positive, which mirrors the aspect breakdown on Booking.com for this particular hotel.
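Before plotting, the aspect and sentiment predictions have to be combined into counts. A minimal sketch of that step, assuming each opinion unit has already been reduced to a pair of (predicted aspect tags, sentiment label); the `"positive"`/`"negative"` labels and function names are hypothetical, and the Plotly charting itself is left out.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def aspect_sentiment_counts(units: Iterable[Tuple[List[str], str]]) -> Dict[str, Counter]:
    """Tally sentiment labels per aspect; a unit counts once for each aspect it mentions."""
    table: Dict[str, Counter] = {}
    for aspects, sentiment in units:
        for aspect in aspects:
            table.setdefault(aspect, Counter())[sentiment] += 1
    return table


def share_positive(table: Dict[str, Counter], positive: str = "positive") -> Dict[str, float]:
    """Fraction of mentions of each aspect that carry the positive label."""
    return {aspect: counts[positive] / sum(counts.values()) for aspect, counts in table.items()}
```

The resulting per-aspect counts map directly onto a grouped or stacked bar chart, with one bar group per aspect and one bar per sentiment.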

Conclusion

We used MonkeyLearn to create a machine learning classifier that learns to transform unstructured text reviews into actionable insights. The model learns to predict the aspects mentioned in each opinion unit within hotel reviews.

We can use these models to better understand reviews from sites like TripAdvisor, or from any other source where there is no aspect breakdown of the reviews. And we can use them to process massive amounts of data in real time, something that would be practically impossible to do manually.

This model can also be used to normalize the criteria used on different sites. For example, Priceline reviews only score three aspects of the hotel (location, staff, and cleanliness), while Hotels.com uses other criteria (location, service, bar & beverages, and WiFi). This is a very frequent problem when gathering and comparing data from different sources, each one with its own criteria.

These kinds of tools can provide business intelligence to the owners of products and services, letting them know accurately what people are complaining about or praising so they can take action quickly. Consumers, in turn, can quickly get quantified information about a product or service without having to read tons of reviews.

The same process can be implemented for any product or service, such as hotels, restaurants, airlines, and retail products.

We hope you enjoyed this post! You can take a look at part of the source code we used (the Scrapy spider and scripts).

In our next post, we'll explore more uses for these kinds of machine learning models. Stay tuned!

Bruno Stecanella

May 19th, 2016