Creating machine learning models to analyze startup news

This is the second part in a series where we analyze thousands of articles from tech news sites in order to get insights and trends about startups.

Last time around we scraped all the articles ever published in TechCrunch, VentureBeat and Recode using Scrapy. We then filtered out all the articles that weren’t about startups, so we now have only the publications relevant to our analysis.

Today we are going to continue building our analysis tools by creating two new models:

- A startup event classifier that identifies which event an article describes (a new round of funding, an acquisition, a product launch, etc.).

- A startup industry classifier that can tell which industries the startup belongs to (fintech, machine learning, advertising and so on).

Finally, we'll combine these classifiers to be ready to analyze all of our data.

Let's get started!

The Startup Event Classifier

For the first part of this analysis, it’d be great to know what “event” each piece of startup news is describing. That is, for each article we want to answer questions like:

- What happened that caused the article to be written?

- Did the company announce a new round of fundraising?

- Did it get acquired?

- Did it get sued?

If we had this information for every article ever published, we’d know a lot about historic industry trends!

Defining the category tree

In order to answer these questions, let’s first make an outline of the categories an article can belong to. We are already familiar with the data, since we worked with it when building the startup filter. Reading through the data and understanding it is an important part of building a machine learning model. This is an iterative process: the first category tree you think of usually won’t be the final one.

Let’s give the first tree the following categories:

- Fundraising: the focus of the piece is to report on a new round of fundraising.

- Acquisition: reporting that a company got acquired.

- Product launch: reporting a new product that was launched.

- Lawsuit: reporting on a lawsuit a company is involved in.

In addition, let's add an Other category for all the articles that don’t fall under any of the previous ones.

Tagging articles

Now we are ready to start tagging articles into these categories so we can provide examples to our classifier. However, as soon as we start tagging, we realize that our tree is incomplete.

First, we find many articles in our dataset that simply showcase a product that either already exists or hasn’t launched yet. Others just mention new features for an existing product. These don’t fall under our previous definition of Product Launch, so we will extend the definition of this category so that all these articles fall there.

Second, there are many stories about startups shutting down or going bankrupt. Since these could turn out to be interesting for our analysis, instead of throwing those into Other we will create a new Bankruptcy category to put them in.

These are the kinds of decisions that have to be made when tagging the data. They lead us to modify the tree so that it better fits our reality.

Finding training data

Something that happens when making a classifier like this is that some categories are far more common in the data than others. For example, let’s say that 1% of all tech articles are about lawsuits. If we exclusively used random sampling like we did in the last post, we’d need to manually tag roughly 10,000 articles before getting to 100 lawsuit articles for our classifier. This is obviously an outrageous amount of work, so we have to turn to more directed methods for finding data.
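The arithmetic behind that estimate is easy to check. Here is a minimal sketch; the 1% figure is the illustrative value from the paragraph above rather than a measured one, and `expected_articles_to_tag` is a hypothetical helper name.

```python
def expected_articles_to_tag(target_examples, prevalence):
    """Expected number of randomly sampled articles we would have to tag
    to collect `target_examples` examples of a category that makes up a
    fraction `prevalence` of all articles."""
    if not 0 < prevalence <= 1:
        raise ValueError("prevalence must be in (0, 1]")
    return round(target_examples / prevalence)


# 100 lawsuit articles when only 1% of articles are about lawsuits
print(expected_articles_to_tag(100, 0.01))  # 10000
```

The rarer the category, the faster this number grows, which is why random sampling alone stops being practical.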

A simple but effective approach is searching for articles with specific keywords. In this case, searching for terms such as lawsuit, lawyer, attorney and copyright will surface the articles we want.

However, this is a double-edged sword. The fact that an article contains the keywords you were looking for doesn’t mean that it actually belongs to the category you wanted. A good rule to follow here is to never add new training data blindly just because it matches your keywords; always read each article before adding it.

An additional problem is that if you only add articles that contain these keywords, you’ll be ignoring the ones that don’t. This will bias the classifier, and it might fail to assign the Lawsuit tag to articles that deserve it simply because they don’t contain these keywords. This can be mitigated by also adding texts to this category that weren’t found through keyword search.
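The keyword search and both of its caveats can be sketched in plain Python. This is only an illustration: the example texts are invented, the function names are hypothetical, and the output is a queue of candidates to read by hand, not training data.

```python
import random

LAWSUIT_KEYWORDS = ("lawsuit", "lawyer", "attorney", "copyright")


def split_by_keywords(articles, keywords):
    """Split articles into keyword matches and non-matches
    (case-insensitive substring search)."""
    matches, rest = [], []
    for text in articles:
        lowered = text.lower()
        if any(keyword in lowered for keyword in keywords):
            matches.append(text)
        else:
            rest.append(text)
    return matches, rest


def review_queue(articles, keywords, n_random=2, seed=0):
    """Candidates for manual review: every keyword match, plus a random
    sample of non-matching articles to counter keyword bias."""
    matches, rest = split_by_keywords(articles, keywords)
    rng = random.Random(seed)
    return matches + rng.sample(rest, min(n_random, len(rest)))


# Invented example texts
articles = [
    "Startup X faces a copyright lawsuit over its photo app.",
    "Startup Y raises a $10M Series A.",
    "Startup Z hires a top attorney as general counsel.",
    "Startup W is taken to court by a former partner.",
]

matches, rest = split_by_keywords(articles, LAWSUIT_KEYWORDS)
print(matches)  # includes the Startup Z article, which is not about a lawsuit
print(rest)     # includes the Startup W article, which is
```

The third text matches a keyword without being about a lawsuit, and the fourth is about a lawsuit without matching any keyword, so both problems show up even in this tiny sample.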

Completing the model

After having enough carefully selected data, we proceed to train and test the model as usual.
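With unevenly represented categories, it helps to look at results per category instead of at a single overall accuracy figure. The sketch below is our own minimal illustration of per-category precision and recall, not MonkeyLearn's implementation; the labels and predictions are invented.

```python
def per_category_scores(true_labels, predicted_labels):
    """Return {category: (precision, recall)} for single-label predictions."""
    pairs = list(zip(true_labels, predicted_labels))
    scores = {}
    for category in sorted(set(true_labels) | set(predicted_labels)):
        true_positives = sum(t == category and p == category for t, p in pairs)
        predicted = sum(p == category for p in predicted_labels)
        actual = sum(t == category for t in true_labels)
        precision = true_positives / predicted if predicted else 0.0
        recall = true_positives / actual if actual else 0.0
        scores[category] = (precision, recall)
    return scores


# Invented test set: one Lawsuit article was wrongly predicted as Other
true_labels = ["Fundraising", "Lawsuit", "Acquisition", "Lawsuit", "Other"]
predicted_labels = ["Fundraising", "Other", "Acquisition", "Lawsuit", "Other"]

for category, (precision, recall) in per_category_scores(true_labels, predicted_labels).items():
    print(f"{category}: precision={precision:.2f} recall={recall:.2f}")
```

A low recall on a rare category such as Lawsuit is a hint that it needs more, or more varied, training examples.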

Check out the classifier we made! We ended up using 2,700 training texts for this model, almost triple the number we used for our previous classifier. We needed more texts because we were working with more categories. Skip to the final section if you’d like to see it in action alongside the startup classifier.

The Startup Industry Classifier

Now we know what a piece of startup news is about. But what if we also want to know which industry the startup being discussed belongs to? That is, given an article about a startup company, we want a classifier that outputs tags like Advertising, Fintech or Health. This sounds easy enough until you start looking at the data and realize that a company can belong to several industries at the same time!

For example, if a startup is making Internet-connected pacemakers (hopefully not!) then it should have both the Internet of Things and Health tags. This isn't doable with the single-label classifiers we have been building until now, since they can return only one tag for each text.

Multi-label classifiers

However, we have a solution: multi-label classification. As the name says, this is a classifier that can output not just one tag, but several at the same time.

Each category of a multi-label classifier works like a binary classifier. In our case, this means that for each industry the model asks: “does this article belong to this industry?” If the answer is yes, the article gets that industry tag; if the answer is no, it doesn’t. Simple as that.

So, when a new article is sent to a multi-label classifier it can get two tags, or it can get three, or one, or even zero if it doesn’t belong to any of the categories!
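One way to picture this is as an independent yes/no decision per industry. The sketch below assumes each category produces a score between 0 and 1 and that a tag is kept when its score reaches a threshold; the scores, the 0.5 threshold and the `assign_tags` helper are all made up for illustration and say nothing about how MonkeyLearn works internally.

```python
def assign_tags(scores, threshold=0.5):
    """Ask each category its own yes/no question: keep every tag whose
    score reaches the threshold. The result can have any number of tags."""
    return sorted(tag for tag, score in scores.items() if score >= threshold)


# Hypothetical per-industry scores for two articles
pacemaker_article = {"Internet of Things": 0.91, "Health": 0.87, "Fintech": 0.04}
unrelated_article = {"Internet of Things": 0.10, "Health": 0.08, "Fintech": 0.12}

print(assign_tags(pacemaker_article))  # ['Health', 'Internet of Things']
print(assign_tags(unrelated_article))  # []
```

Because no decision depends on the others, nothing forces the tags to add up to exactly one.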

Things to consider when training a multi-label classifier

When tagging data for a multi-label classifier, each text should be tagged with the multiple categories it belongs to.

So, if a text mentions an IoT pacemaker startup, it should get the IoT tag in addition to the Health tag. If we don’t do this and only tag it as Health, we’ll be telling the classifier that this startup doesn’t belong to the IoT category! We have to avoid this if we don't want to create confusion in the model.
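To see why a missing tag matters, it helps to write each tagged text as one yes/no value per category. This is a simplified sketch with a hypothetical three-category list and a hypothetical `to_indicator_row` helper, not the way MonkeyLearn stores training data.

```python
INDUSTRIES = ["Health", "Internet of Things", "Fintech"]


def to_indicator_row(tags, categories=INDUSTRIES):
    """Turn a set of tags into one 0/1 value per category."""
    unknown = set(tags) - set(categories)
    if unknown:
        raise ValueError(f"unknown categories: {sorted(unknown)}")
    return [1 if category in tags else 0 for category in categories]


# The IoT pacemaker article, tagged completely and incompletely
complete = to_indicator_row({"Health", "Internet of Things"})
incomplete = to_indicator_row({"Health"})

print(complete)    # [1, 1, 0]
print(incomplete)  # [1, 0, 0]
```

The 0 in the Internet of Things column of the incomplete row is not "unknown": it is a negative example telling the classifier that a pacemaker startup is not IoT.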

Building a multi-label classifier

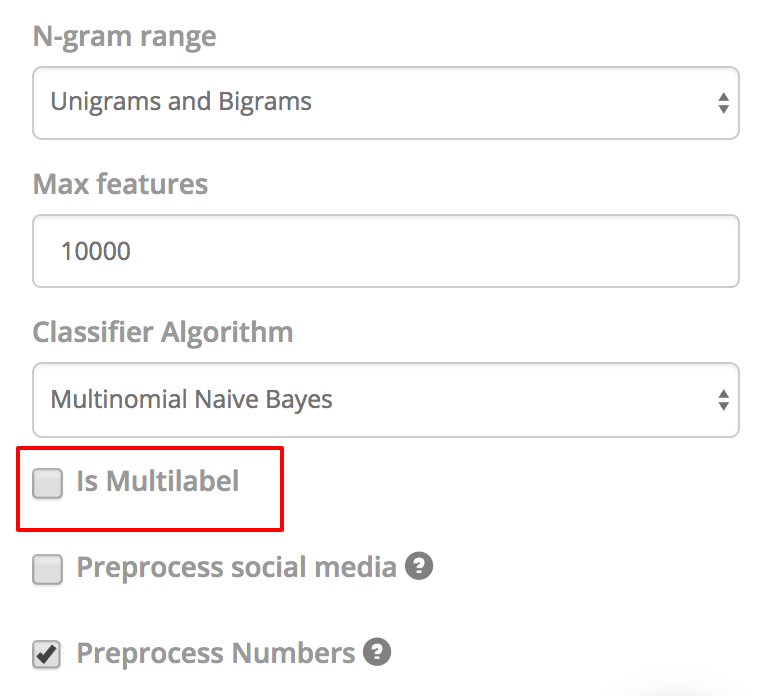

In MonkeyLearn, creating a multi-label classifier is the same as a single-label; you just have to say so during model creation. The option can be found under Advanced options on the final step of the creation wizard:

Creating a multi-label classifier.

We defined the category tree by checking the Crunchbase category list to see which categories might be of interest for our analysis. We included categories like Driverless Cars, Machine Learning, Drones, AR & VR, Fintech, Gaming, Robotics and Education. You can see the finished category tree here.

Tagging the data was a similar process to the previous classifier, except that this time we took special care in tagging every text with all the relevant categories.

Feel free to play around with the completed classifier. It ended up performing really well and will be invaluable for our analysis.

Putting our classifiers to work

We have finished all the classifiers we need for our analysis. Putting them to use is just a matter of going to the API tab for each model and using the code in your language of choice. We have to take two new things into account here. First, only the articles that get the Startup tag have to be sent to the other two classifiers. Second, the industry classifier is multi-label, so we have to keep in mind that an article can get several tags (or even no tag at all).

At this point, we are ready to repeat the same experiment we did in the previous post: classifying 100 articles and seeing what happens. With a couple of additions to the code we can do this:

from monkeylearn import MonkeyLearn

ml = MonkeyLearn('<Your API key here>')
articles = ["<The list of articles>"]

# Classify as "startup" or "not_startup"
module_id = 'cl_Xq6cFpsX'
res = ml.classifiers.classify(module_id, articles)
for i, article in enumerate(articles):
    articles[i] = [article, res.result[i][0]["label"]]

# Send all the "startup" news to the new classifiers
texts_startup = [article[0] for article in articles if article[1] == "startup"]
res_event = ml.classifiers.classify('cl_iju4tFr6', texts_startup)
res_industry = ml.classifiers.classify('cl_nt3NPYem', texts_startup)

articles_startup = []
for i, article in enumerate(texts_startup):
    current_event = res_event.result[i][0]
    # Join the list of industry labels under one semicolon separated line
    industries = ";".join([tag[0]["label"] for tag in res_industry.result[i]])
    articles_startup.append([article, current_event["label"], industries])

# The final results
print(articles_startup)

An example output for a single article would be something like:

['Chinese E-Commerce Site Acquires Ador, Owner Of Heavily Funded Lockerz.Com. The acquisition [...] ',
 'acquisition',
 'Ecommerce & Retail']
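Once the results are in this `[text, event, industries]` shape, a quick tally gives a first look at them. The sketch below runs on hand-written sample rows rather than real API output. Apart from `acquisition` and `Ecommerce & Retail`, the label strings are assumptions, and `tally` is a hypothetical helper.

```python
from collections import Counter


def tally(articles_startup):
    """Count events and industries in a list of [text, event, industries]
    rows, where industries is a semicolon-separated string."""
    event_counts = Counter()
    industry_counts = Counter()
    for _text, event, industries in articles_startup:
        event_counts[event] += 1
        # An article with no industry tags has an empty string here, and
        # "".split(";") returns [""], so empty entries are skipped.
        industry_counts.update(tag for tag in industries.split(";") if tag)
    return event_counts, industry_counts


# Hand-written sample rows
sample = [
    ["Article one [...]", "acquisition", "Ecommerce & Retail"],
    ["Article two [...]", "fundraising", "Fintech;Machine Learning"],
    ["Article three [...]", "fundraising", ""],
]

event_counts, industry_counts = tally(sample)
print(event_counts.most_common())
print(industry_counts.most_common())
```

Note that the third row has an event but no industry at all, which is a perfectly valid result for a multi-label classifier.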

As a side note, a way to make this process more efficient is to use something called a pipeline. A pipeline is a special MonkeyLearn model that combines several other models and can process the data along the way. Pipelines are a more advanced feature of MonkeyLearn, and in this case they can be used to do all three classifications by sending the data to the API just once.

You can find in-depth code for using these classifiers (with and without pipelines) in this notebook.

Final words

Today we created two new classifiers to analyze the content of the startup articles we collected last time. One understands which event the article describes and the other one finds out which industries the startup belongs to.

We also combined last post’s work with this one: given a tech article, we can now first tell whether it’s about startups or not, and then apply the new tags.

Join us in the next post, where we’ll finally put all of our preparations to good use. We will classify all of the articles we collected, plot the results, and do an in-depth analysis. We will be discovering new and interesting insights about the startup world, so stay tuned!

Bruno Stecanella

April 11th, 2017