What Is Training Data in Machine Learning?

Machine learning can perform some pretty amazing feats to automate processes and gather powerful insights from all manner of text data: documents, surveys, emails, customer support tickets, social media posts, and content from all over the web.

But first you need proper training data to ensure that your machine learning models are set up for success.

- What Is Training Data?

- What Is the Difference Between Training Data and Testing Data?

- How Is Training Data Used in Machine Learning?

- What Makes Good Training Data?

- Why Is Quality Training Data Important?

- How Much Training Data Do You Need?

What Is Training Data?

Training data (or a training dataset) is the initial data used to train machine learning models.

Training datasets are fed to machine learning algorithms to teach them how to make predictions or perform a desired task.

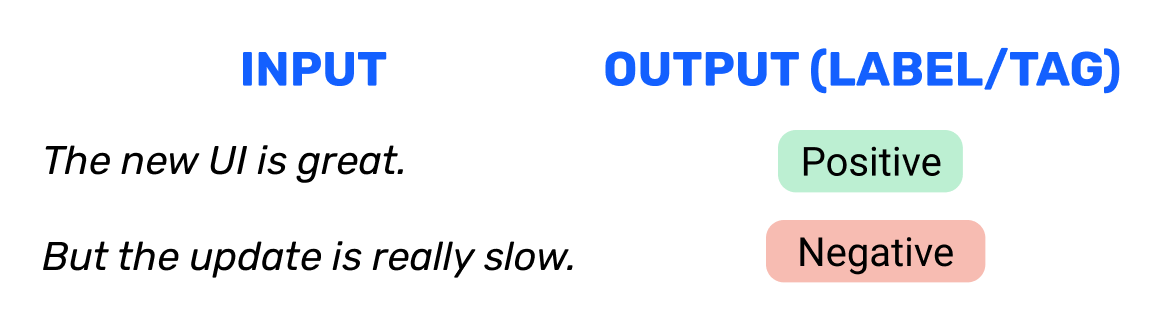

If you’re training a sentiment analysis model (a model that analyzes text for opinion polarity: positive, negative, or neutral), training data examples could be:

AI training data will vary depending on whether you’re using supervised or unsupervised learning.

Unsupervised learning uses unlabeled data. Models are tasked with finding patterns (or similarities and deviations) in the data to make inferences and reach conclusions.

With supervised learning, on the other hand, humans must tag, label, or annotate the data according to their criteria in order to train the model to reach the desired conclusion (output). Labeled data is shown in the examples above, where the desired outputs are predetermined.

There are also hybrid approaches, such as semi-supervised learning, that combine supervised and unsupervised learning.

In the topic analysis (text categorization) example below, we use a supervised machine learning model and train it to automatically analyze and categorize customer support tickets into topics like Shipping, Billing, Account, and Login.

Take a look at how we annotate the input data with a desired output tag to properly train the model.
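As a rough sketch, annotated tickets can be represented as (text, tag) pairs and saved to a CSV file for upload or later use. The ticket texts and the `text`/`tag` column names below are hypothetical; they are not a format MonkeyLearn is known to require.

```python
import csv
import io

# Hypothetical support tickets, each annotated with one desired output tag.
# The tag names come from the article; the ticket texts are invented.
annotated_tickets = [
    ("My package still hasn't arrived after two weeks.", "Shipping"),
    ("I was charged twice for my last order.", "Billing"),
    ("How do I change the email address on my profile?", "Account"),
    ("The password reset link doesn't work.", "Login"),
]

def to_csv(rows):
    """Serialize (text, tag) pairs as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["text", "tag"])  # assumed column names
    writer.writerows(rows)
    return buffer.getvalue()

csv_text = to_csv(annotated_tickets)
print(csv_text)
```

Each row pairs an input with its desired output, which is exactly what makes this supervised training data.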

What Is the Difference Between Training Data and Testing Data?

Training data is the initial dataset you use to teach a machine learning application to recognize patterns or perform according to your criteria, while testing (or validation) data is used to evaluate your model’s accuracy.

You’ll need a new dataset to validate the model because it already “knows” the training data. How it performs on new test data will let you know if it’s working accurately or if it requires more training data to perform to your specifications.

Let’s say you’re training a model to analyze the sentiment of tweets about your brand. You can search Twitter for brand mentions and download the data to a CSV file, then randomly split it into a training set and a testing set. An 80/20 split (80% training, 20% testing) is a commonly accepted practice in data science.
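A random 80/20 split takes only a few lines of standard-library Python. This is a minimal sketch: `split_train_test` is a hypothetical helper (scikit-learn offers a comparable `train_test_split` function with a `test_size` parameter), and the tagged tweets are placeholders.

```python
import random

def split_train_test(samples, train_fraction=0.8, seed=42):
    """Randomly split samples into training and testing lists.

    A fixed seed makes the split reproducible between runs.
    """
    shuffled = list(samples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    cutoff = int(len(shuffled) * train_fraction)
    return shuffled[:cutoff], shuffled[cutoff:]

# Placeholder tweets about a brand, already tagged with sentiment.
tweets = [(f"tweet {i}", "Positive" if i % 2 else "Negative") for i in range(100)]
train_set, test_set = split_train_test(tweets)
print(len(train_set), len(test_set))  # 80 20
```

Shuffling before cutting matters: if the CSV is sorted by date or sentiment, taking the first 80 rows without shuffling would give the model a skewed view of the data.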

MonkeyLearn offers a number of integrations to sync your data. By using similar data for training and testing, you can minimize the effects of data discrepancies and better understand the characteristics of the model.

How Is Training Data Used in Machine Learning?

Traditional programming algorithms follow a set of instructions to transform data into a desired output with no deviations.

Machine learning algorithms, on the other hand, enable machines to solve problems based on past observations. The great thing about machine learning models is that they improve over time, as they’re exposed to relevant training data.

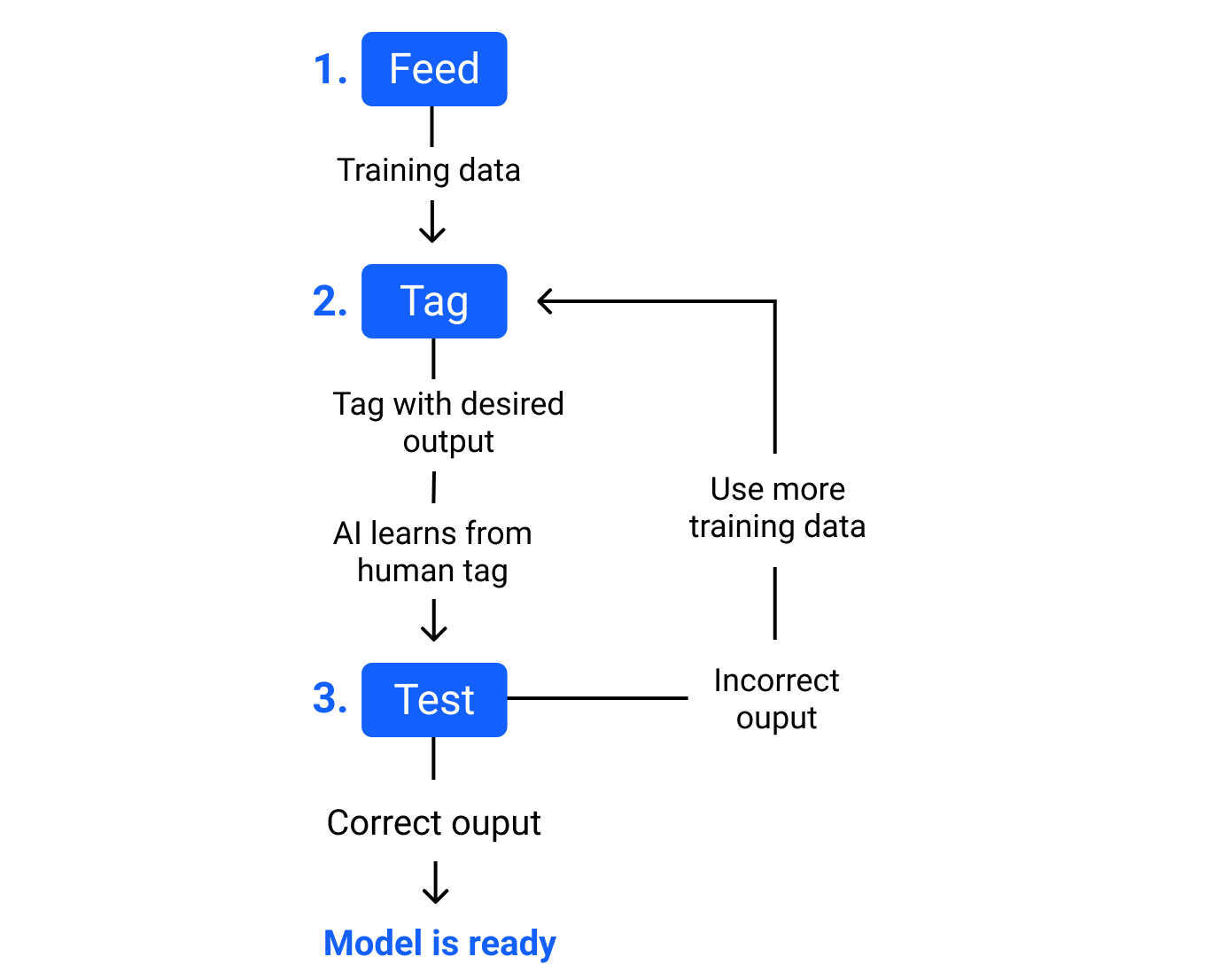

Let’s break the data training process down into three steps:

1. Feed a machine learning model training input data.

2. Tag the training data with a desired output. The model transforms the training data into text vectors: numbers that represent data features.

3. Test your model by feeding it testing (or unseen) data. Algorithms are trained to associate feature vectors with tags based on manually tagged samples, then learn to make predictions when processing unseen data.
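The three steps can be sketched end to end in plain Python. The article doesn’t say which algorithm MonkeyLearn uses; this sketch swaps in a multinomial Naive Bayes classifier over bag-of-words vectors (word counts), a classic baseline for text classification. The tokenizer, the helper names, and the tiny Shipping/Billing dataset are all illustrative assumptions.

```python
import math
from collections import Counter, defaultdict

def tokenize(text):
    """Lowercase and split on whitespace; real pipelines use richer tokenizers."""
    return text.lower().split()

def vectorize(text):
    """Turn text into a sparse bag-of-words feature vector (word -> count)."""
    return Counter(tokenize(text))

class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, samples):
        # Steps 1-2: learn from tagged (text, tag) samples via their vectors.
        self.tag_counts = Counter()
        self.word_counts = defaultdict(Counter)
        self.vocabulary = set()
        for text, tag in samples:
            vector = vectorize(text)
            self.tag_counts[tag] += 1
            self.word_counts[tag].update(vector)
            self.vocabulary.update(vector)
        return self

    def predict(self, text):
        # Step 3: score unseen text against every tag and keep the best one.
        vector = vectorize(text)
        total = sum(self.tag_counts.values())
        best_tag, best_score = None, -math.inf
        for tag, count in self.tag_counts.items():
            # log P(tag) + sum over words of count * log P(word | tag)
            score = math.log(count / total)
            denom = sum(self.word_counts[tag].values()) + len(self.vocabulary)
            for word, n in vector.items():
                score += n * math.log((self.word_counts[tag][word] + 1) / denom)
            if score > best_score:
                best_tag, best_score = tag, score
        return best_tag

def accuracy(model, test_samples):
    """Share of test samples whose predicted tag matches the manual tag."""
    correct = sum(model.predict(text) == tag for text, tag in test_samples)
    return correct / len(test_samples)

train = [
    ("where is my package", "Shipping"),
    ("my order has not shipped yet", "Shipping"),
    ("i was charged twice", "Billing"),
    ("refund the extra charge on my card", "Billing"),
]
test = [("has my package shipped", "Shipping"), ("why was my card charged", "Billing")]

model = NaiveBayesClassifier().fit(train)
print(accuracy(model, test))  # 1.0
```

Naive Bayes is used here only because it fits in a few lines; with real data you’d measure accuracy on a much larger held-out test set, split off as described in the previous section.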

What Makes Good Training Data?

High-quality training data is absolutely necessary to build a high-performing machine learning model, especially in the early stages, but also throughout the training process. The features, tags, and relevance of your training data will be the “textbooks” from which your model learns.

Your training data will be used to train and retrain your model throughout its use because relevant data generally isn’t fixed. Human language, word use, and corresponding definitions change over time, so you’ll likely need to update your model with retraining periodically.

5 Traits of Quality Training Data

To get good training data, use this checklist to make sure your dataset is:

Relevant: You will, of course, need data relevant to the task at hand or the problem you’re trying to solve. If your goal is to automate customer support processes, you should use a dataset of your actual customer support data; otherwise, the results will be skewed. If you’re training a model to analyze social media data, you’ll need a dataset from Twitter, Facebook, Instagram, or whichever site you’ll be analyzing.

Uniform: All data should come from the same source with the same attributes.

Representative: Your training data must have the same data points and factors as the data you’ll be analyzing.

Comprehensive: Your training dataset must be large enough for your needs and have the proper scope and range to encompass all of the model’s desired use cases.

Diverse: The dataset should reflect the full range of your user base, or the results will end up skewed. Make sure the people training the model have no hidden biases, or bring in a third party to audit the criteria.
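Most of these traits require human judgment, but a few warning signs can be spotted automatically. This sketch, assuming (text, tag) pairs, flags empty texts, duplicate texts, and tags that make up less than 10% of the dataset. The 10% threshold (`min_share`), the `quality_report` helper, and the sample data are arbitrary assumptions, not an established standard.

```python
from collections import Counter

def quality_report(samples, min_share=0.1):
    """Flag a few common training-data problems in (text, tag) pairs.

    empty:            samples whose text is blank
    duplicates:       texts that appear more than once (ignoring case and edge spaces)
    underrepresented: tags whose share of the dataset is below min_share
    """
    empty = [text for text, _ in samples if not text.strip()]
    normalized = Counter(text.strip().lower() for text, _ in samples if text.strip())
    duplicates = sorted(text for text, n in normalized.items() if n > 1)
    tag_counts = Counter(tag for _, tag in samples)
    total = len(samples) or 1  # avoid dividing by zero on an empty dataset
    underrepresented = sorted(
        tag for tag, n in tag_counts.items() if n / total < min_share
    )
    return {
        "empty": empty,
        "duplicates": duplicates,
        "tag_counts": dict(tag_counts),
        "underrepresented": underrepresented,
    }

samples = (
    [("Love the new update", "Positive")] * 2  # exact duplicate
    + [(f"great feature {i}", "Positive") for i in range(10)]
    + [(f"app keeps crashing {i}", "Negative") for i in range(8)]
    + [("", "Neutral")]  # empty text
)
report = quality_report(samples)
print(report["duplicates"], report["underrepresented"])
# ['love the new update'] ['Neutral']
```

A check like this can’t tell you whether the data is relevant or unbiased, but it catches mechanical problems cheaply before anyone spends time tagging.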

Why Is Quality Training Data Important?

Quite simply, because the labeled data determines how smart your model can become. Think of a person who has only been exposed to adolescent-level reading: they wouldn’t easily understand complex, university-level texts.

There are, however, three more factors to consider when training your machine learning models: people, processes, and tools.

People

The team that trains your models will have a huge impact on their performance. You need people who are familiar with your business and your goals, all using the same criteria to train the models. Whether you’re analyzing social media data for sentiment or categorizing support tickets by department or degree of urgency, some subjectivity is involved. Regular training and testing are important to keep data tagging consistent.
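One common way to check whether taggers apply the same criteria is to have two people tag the same samples and measure their agreement with Cohen’s kappa, which corrects raw agreement for the agreement expected by chance. This is a minimal sketch; Cohen’s kappa is a standard statistic rather than something this article prescribes, and the two taggers’ labels are invented.

```python
from collections import Counter

def cohens_kappa(tags_a, tags_b):
    """Agreement between two taggers, corrected for chance agreement.

    1.0 means perfect agreement; 0.0 means no better than chance.
    """
    if len(tags_a) != len(tags_b) or not tags_a:
        raise ValueError("both taggers must tag the same, non-empty set of samples")
    n = len(tags_a)
    observed = sum(a == b for a, b in zip(tags_a, tags_b)) / n
    counts_a, counts_b = Counter(tags_a), Counter(tags_b)
    # Chance agreement: how often both would pick tag t if tagging independently.
    expected = sum(counts_a[t] * counts_b[t] for t in counts_a) / (n * n)
    if expected == 1:
        return 1.0  # both taggers used one identical tag for everything
    return (observed - expected) / (1 - expected)

alice = ["Urgent", "Urgent", "Non-Urgent", "Non-Urgent", "Urgent", "Non-Urgent"]
bob = ["Urgent", "Non-Urgent", "Non-Urgent", "Non-Urgent", "Urgent", "Non-Urgent"]
print(round(cohens_kappa(alice, bob), 3))  # 0.667
```

The taggers agree on 5 of 6 tickets (83%), but kappa is lower because half of that agreement would be expected by chance. A falling kappa over time is a signal that the tagging guidelines need a refresh.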

Processes

Similarly, quality controls must be put in place to maintain consistency. Step-by-step guidelines are important to ensure that all models are trained with the same process, and clear communication is key to upholding training criteria.

Tools

None of the above matters if you don’t have the right tools. Flexibility and ease of use are crucial if you don’t want to put whole teams to work on building your own tools. SaaS text analysis tools like MonkeyLearn allow you to train and implement models with little to no code, at any scale.

MonkeyLearn offers simple integrations with tools you already use, like Zapier, Zendesk, SurveyMonkey, Google, Excel, and more, so you can get quality data right from the source. Check out training data best practices to see how easy it is to set up.

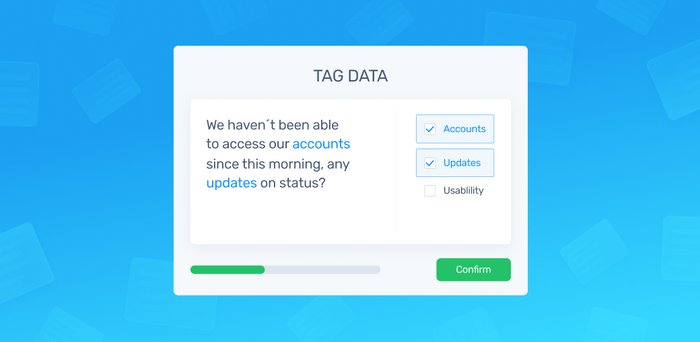

How to Tag Training Data

Think about the results you need. Do you have small amounts of data that can easily be tagged by department (for example, Billing, Legal, or Product) or by product feature (Reliability, Functionality, Usability)? Or do you have large datasets with thousands of pieces of text that may fit multiple, overlapping category tags?

It’s important that your tags don’t overlap, at least at the beginning of training; otherwise, the model won’t be able to distinguish between them and learn from the text.

For example, three common top-level tags for categorizing customer feedback are Product, Customer Service, and Marketing, and within these categories are:

- Product: Bugs and Feature Requests

- Customer Service: Billing, Account, and Usability

- Marketing: Features, Pricing, and Upgrades
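A taxonomy like this can be kept as a simple mapping from top-level tags to subtags, which makes it easy to catch one kind of overlap automatically: the same subtag name filed under two parents. The `overlapping_subtags` helper is a hypothetical sketch; it only compares names, so near-duplicates such as Features and Feature Requests still need a human decision.

```python
from collections import defaultdict

# The customer feedback taxonomy from the list above: top-level tag -> subtags.
taxonomy = {
    "Product": ["Bugs", "Feature Requests"],
    "Customer Service": ["Billing", "Account", "Usability"],
    "Marketing": ["Features", "Pricing", "Upgrades"],
}

def overlapping_subtags(taxonomy):
    """Return subtags that appear under more than one parent, case-insensitively."""
    parents = defaultdict(set)
    for parent, subtags in taxonomy.items():
        for subtag in subtags:
            parents[subtag.strip().lower()].add(parent)
    return {tag: sorted(p) for tag, p in parents.items() if len(p) > 1}

print(overlapping_subtags(taxonomy))  # {}

# Filing Pricing under Customer Service as well would be flagged:
clashing = {**taxonomy, "Customer Service": ["Billing", "Pricing"]}
print(overlapping_subtags(clashing))  # {'pricing': ['Customer Service', 'Marketing']}
```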

It’s important to read through your dataset to narrow it down to the most relevant topics, themes, and issues before you start tagging, to establish a solid tagging taxonomy. Think about how to clearly distinguish one tag from the next, and make sure your tag labels are relevant to the data and the results you need.

Create guidelines with a description for each of your tags, so that everyone on your team knows when to use each one. For example, teammates may want to apply two or more tags to one piece of feedback, but after reading the description of each tag, they may choose just one.
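Guidelines can also be kept in a machine-readable form, so annotations can be checked against them before training. In this hypothetical sketch, each tag maps to its description, and `check_annotations` flags tags that aren’t defined in the guidelines and items that don’t have exactly one tag. The one-tag rule is an assumption that suits single-label models such as the topic classifier described earlier; the descriptions and annotations are invented.

```python
# Hypothetical tagging guidelines: every tag gets a one-line description.
guidelines = {
    "Bugs": "Something in the product is broken or behaves unexpectedly.",
    "Feature Requests": "The customer asks for functionality that doesn't exist yet.",
    "Billing": "Charges, invoices, refunds, or payment methods.",
}

def check_annotations(annotations, guidelines):
    """Return (index, problem) pairs found in (text, tags) annotations.

    Flags tags missing from the guidelines and items without exactly one tag.
    """
    problems = []
    for index, (text, tags) in enumerate(annotations):
        unknown = [t for t in tags if t not in guidelines]
        if unknown:
            problems.append((index, f"undefined tag(s): {', '.join(unknown)}"))
        if len(tags) != 1:
            problems.append((index, f"expected exactly one tag, got {len(tags)}"))
    return problems

annotations = [
    ("The export button crashes the app", ["Bugs"]),
    ("Please add dark mode", ["Feature Requests", "Bugs"]),
    ("I was billed twice", ["Payments"]),
]
for index, message in check_annotations(annotations, guidelines):
    print(index, message)
```

Flagged items are good candidates for a team discussion: either the guideline descriptions need sharpening or a tag is missing from the taxonomy.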

How Much Training Data Do You Need?

There isn’t a hard-and-fast rule, but a task like analyzing the sentiment of brand mentions will require far less data than something that needs an extremely confident model, such as a self-driving car.

With text analysis, it all depends on the use case and the number of tags you need. As a general rule of thumb for training MonkeyLearn models:

- Topic detection: at least 100-300 samples per tag.

- Sentiment analysis: at least 500-1,000 samples per tag.

- Intent classification: at least 100-300 samples per tag.

- Urgency detection: at least 100 samples for the Urgency tag and 200 samples for the Non-Urgent tag.
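These rules of thumb are easy to check against a dataset before training. The sketch below encodes the lower bound of each range as a minimum; the `tags_needing_data` helper and the sample tag counts are hypothetical, and the tag names for urgency detection are taken as written above.

```python
from collections import Counter

# Lower bounds of the MonkeyLearn rules of thumb listed above (samples per tag).
# Urgency detection uses a different minimum for each of its two tags.
MIN_SAMPLES_PER_TAG = {
    "topic": 100,
    "sentiment": 500,
    "intent": 100,
    "urgency": {"Urgency": 100, "Non-Urgent": 200},
}

def tags_needing_data(tags, minimum):
    """Return {tag: samples still needed} for every tag below its minimum.

    `minimum` is either one int applied to every tag, or a dict of per-tag minimums.
    With a dict, tags listed there but absent from `tags` are reported too.
    """
    counts = Counter(tags)
    per_tag = isinstance(minimum, dict)
    names = set(counts) | (set(minimum) if per_tag else set())
    shortfall = {}
    for tag in sorted(names):
        required = minimum.get(tag, 0) if per_tag else minimum
        if counts[tag] < required:
            shortfall[tag] = required - counts[tag]
    return shortfall

sentiment_tags = ["Positive"] * 620 + ["Negative"] * 480 + ["Neutral"] * 150
print(tags_needing_data(sentiment_tags, MIN_SAMPLES_PER_TAG["sentiment"]))
# {'Negative': 20, 'Neutral': 350}

urgency_tags = ["Urgency"] * 120 + ["Non-Urgent"] * 150
print(tags_needing_data(urgency_tags, MIN_SAMPLES_PER_TAG["urgency"]))
# {'Non-Urgent': 50}
```

Meeting these minimums is a starting point, not a guarantee of accuracy; the test-set results are what tell you whether the model needs more data.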

In general, the more quality training data your model learns from, the smarter it will become, so it’s safer to err on the side of “a lot of training data.”

Put Your Training Data to Work

Now that you understand what training data is and why it’s important, you can put your own dataset to work training a MonkeyLearn text analysis model:

- Sign up for MonkeyLearn

- Create a classifier

- Upload your training data

- Tag your data and test

Or schedule a demo and we’ll show you how to get the most from your data.

Rachel Wolff

November 2nd, 2020