Topic Analysis: The Ultimate Guide

Topic analysis is a Natural Language Processing (NLP) technique that allows us to automatically extract meaning from text by identifying recurrent themes or topics.

Automatically detect topics in text data

Businesses deal with large volumes of unstructured text every day, such as emails, support tickets, social media posts, and online reviews.

When it comes to analyzing huge amounts of text data, it’s just too big a task to do manually. It’s also tedious, time-consuming, and expensive. Manually sorting through large amounts of data is more likely to lead to mistakes and inconsistencies. Plus, it doesn’t scale well.

The good news is that AI-guided topic analysis makes it easier, faster, and more accurate to analyze unstructured data.

Read this guide to learn more about topic analysis, its applications, and how to get started with no-code tools like MonkeyLearn.

Let's get started!

Introduction to Topic Analysis

What Is Topic Analysis?

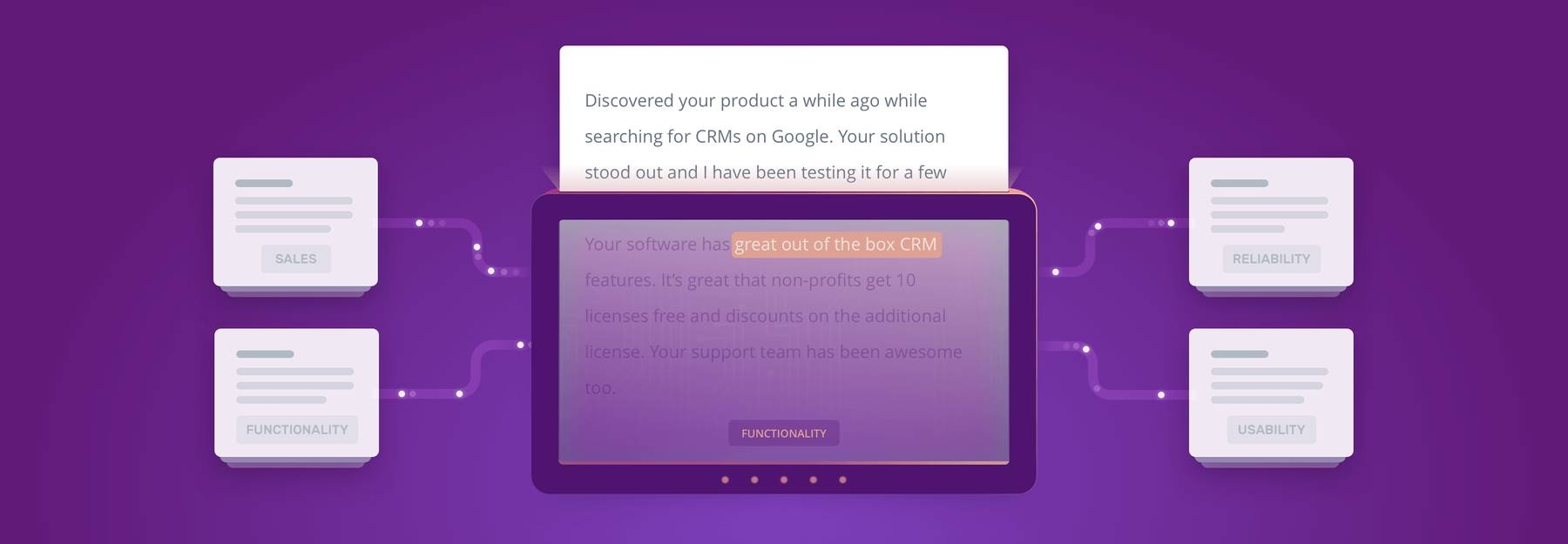

Topic analysis (also called topic detection, topic modeling, or topic extraction) is a machine learning technique that organizes and understands large collections of text data, by assigning “tags” or categories according to each individual text’s topic or theme.

Topic analysis uses natural language processing (NLP) to break down human language so that you can find patterns and unlock semantic structures within texts to extract insights and help make data-driven decisions.

The two most common approaches for topic analysis with machine learning are NLP topic modeling and NLP topic classification.

Topic modeling is an unsupervised machine learning technique. This means it can infer patterns and cluster similar expressions without needing predefined topic tags or labeled training data. This type of algorithm can be applied quickly and easily, but there’s a downside: the results tend to be less accurate than those of a trained classifier.

Topic classification, on the other hand, needs to know the possible topics before starting the analysis, because you need to tag data in order to train a topic classifier. Although there’s an extra step involved, topic classifiers pay off in the long run, and they’re much more precise than clustering techniques.

We’ll look more closely at these two approaches in the section How It Works.

Scope of Topic Analysis

Topic analysis can be applied at different levels of scope:

- Document-level: the topic model obtains the different topics from within a complete text. For example, the topics of an email or a news article.

- Sentence-level: the topic model obtains the topic of a single sentence. For example, the topic of a news article headline.

- Sub-sentence level: the topic model obtains the topic of sub-expressions from within a sentence. For example, different topics within a single sentence of a product review.

When Is Topic Analysis Used?

Topic tagging is particularly useful to analyze huge amounts of text data in a fast and cost-effective way – from internal documents, communications with customers, or all over the web. Yes, you could do it manually but, let’s face it, when there’s too much information to be classified, it will just end up being time-consuming, expensive, and much less accurate.

At MonkeyLearn, we help companies use topic analysis to make their teams more efficient, automate business processes, get valuable insights from data, and save hours of manual data processing.

Imagine you need to analyze a large dataset of reviews to find out what people are saying about your product. You could combine topic labeling with sentiment analysis to discover which aspects or features (topics) of your product are being discussed most often, and determine how people feel about them (are their statements positive, negative or neutral?). This technique is known as aspect-based sentiment analysis.

Besides brand monitoring, there are many other uses for topic analysis, such as social media monitoring, customer service, voice of customer (VoC) analysis, business intelligence, sales and marketing, SEO, product analytics and knowledge management.

Why Is Topic Analysis Important?

Businesses generate and collect massive amounts of data every day. Analyzing and processing this data using automated topic analysis methods will help businesses make better decisions, optimize internal processes, identify trends, and provide all sorts of other advantages to make them more efficient and productive.

When it comes to sorting through all this data, machine learning models are crucial. Topic detection allows us to easily scan large documents and find out what customers are talking about.

Benefits of topic modeling include:

- Data analysis at scale

If you had to manually detect topics by sifting through a huge database, not only would it be very time-consuming, it would also be far too expensive. Automated topic analysis with machine learning makes it possible to scan as much data as you want, providing brand new opportunities to obtain meaningful insights.

- Real-time analysis

By combining topic tagging with other types of natural language processing techniques, like sentiment analysis, you can obtain a real-time picture of what your clients are saying about your product. And most importantly, you can use that information to make data-driven decisions, 24/7 and in real time.

- Consistent Criteria

Automated topic analysis is based on natural language processing (NLP), a combination of statistics, computational linguistics, and computer science. A trained model applies the same criteria to every text, so results stay consistent in a way that is hard to achieve when several people tag data by hand.

Topic Analysis Examples

Here are some examples to help you better understand the potential uses of automatic topic analysis:

- Topic labeling is used to identify the topic of a news headline. What is a news article talking about? Is it Politics, Sport, or Economy? For example:

“iPhone sales drop 20 percent in China as Huawei gains market share”

A topic model would infer the general topic of this headline is Economy by identifying words and expressions related to this topic (sales - drop - percent - China - gains - market share).

- Topic analysis is used to automatically understand which type of issue is being reported on any given customer support ticket. Is this ticket about Billing Issues, Account Issues, or Shipping Issues? For example:

“My order hasn’t arrived yet” will be tagged as a Shipping Issue.

- Topic analysis can be used to analyze open-ended questions in customer satisfaction surveys, to find what aspect of the product or service the customer is referring to. For example:

Question: “What is the one thing that we could do to improve your experience with [product]?” Answer: “Improve the shopping cart experience, it’s super confusing.”

The topic of this answer is UI/UX.

You’ll discover the most frequently discussed topics about your product or service, uncover new trends just as they emerge, and streamline processes by letting machines perform tasks for you.

How Does Topic Analysis Work?

Topic analysis models are able to detect topics in a text with advanced machine learning algorithms that count words and find and group similar word patterns.

Let's imagine you want to find out what customers are saying about various features of a new laptop.

Your topics of interest might be Portability, Design, and Price. Now, instead of going through rows and rows of customer feedback with a fine-tooth comb, in an attempt to separate feedback into topics of interest, you'll be able to run a topic analysis.

For Price, analysis models might detect patterns such as currency symbols followed by numbers, related words (affordability, expensive, cheap), synonyms (cost, price tag, worth) or phrases (worth every penny), and label the corresponding text accordingly.

Now, let's imagine you don't have a list of predetermined topics. Topic analysis models can also detect topics by counting word frequency and the distance between each word use. Sounds simple, right? Well, it's a little more complicated than just counting and spotting patterns.

Topic Modeling vs Topic Classification

There are many approaches and techniques you can use to automatically analyze the topics of a set of texts, and the one you decide to use depends on the problem at hand. To understand the ins and outs of how topic analysis models work, we're going to focus on the two most common approaches.

If you just have a bunch of texts and want to figure out what topics these texts cover, what you're looking for is topic modeling.

Now, if you already know the possible topics for your texts and want to automatically tag them with the relevant topic, you want topic classification.

Enter machine learning. It can be used to automate tedious and time-consuming manual tasks. There are many machine learning algorithms that, given a set of documents and a couple of friendly nudges, are able to automatically infer the topics on the dataset, based on the content of the texts themselves.

The majority of these algorithms are unsupervised, which means that you feed them the texts and training parameters and they do the rest. Topic modeling runs on this kind of algorithm.

On the other hand, you have supervised algorithms. Machines are fed examples of data labeled according to their topics so that they eventually learn how to tag text by topic by themselves. These are the types of algorithms commonly used for topic classification.

Unsupervised machine learning algorithms are, in theory, less work-intensive than supervised algorithms, since they don't require human tagged data. They may, however, require quality data in large quantities.

In this case, it may be advantageous to just run unsupervised algorithms and discover topics in the text, as part of an analysis process.

Having said this, topic modeling algorithms will not deliver neatly packaged topics with labels such as Sports and Politics.

Rather, they will churn out collections of documents that the algorithm thinks are related, and specific terms that it used to infer these relations.

It will be your job to figure out what these relations actually mean.

On the other hand, supervised machine learning algorithms require that you go through the legwork of explaining to the machine what it is that you want, via the tagged examples that you feed it. Therefore, the topic definition and tagging process are important steps that should not be taken lightly, since they make or break the real-life performance of the model.

The advantages of supervised algorithms win hands down, though. You can refine your criteria and define your topics, and if you're consistent in the labeling of your texts, you will be rewarded with a model that will classify new, unseen samples according to their topics, the same way you would.

Now, let's go further and understand how both topic modeling and topic classification actually work.

Topic Modeling

Topic modeling is used when you have a set of text documents (such as emails, survey responses, support tickets, product reviews, etc), and you want to find out the different topics that they cover and group them by those topics.

The way these algorithms work is by assuming that each document is composed of a mixture of topics, and then trying to find out how strong a presence each topic has in a given document. This is done by grouping together the documents based on the words they contain, and noticing correlations between them.

Although similar, topic modeling shouldn't be confused with cluster analysis.

To better understand the ideas behind topic modeling, we will cover the basics of two of the most popular algorithms: LSA and LDA.

Latent Semantic Analysis (LSA)

Latent Semantic Analysis is the “traditional” method for topic modeling. It is based on a principle called the distributional hypothesis: words and expressions that occur in similar pieces of text will have similar meanings.

Like Naive Bayes, it is based on the word frequencies of the dataset. The general idea is that for every word in each document, you can count the frequency of that word and group together the documents that have high frequencies of the same words.

Now, to dive a little bit more into how this is actually done, let's first clarify what is meant by word frequency. The frequency of a word or term in a document is a number that indicates how often a word appears in a document. That's right, these algorithms ignore syntax and semantics such as word order, meaning, and grammar, and just treat every document as an unsorted “bag of words.”

The frequency can be calculated simply by counting: if the word cat appears 10 times in a document, then its frequency is 10. This approach proves to be a bit limited, so tf-idf is normally used. Tf-idf takes into account how common a word is overall (in all documents) vs. how common it is in a specific document, so words that are frequent in one document but rare in the rest of the collection are ranked higher, since they are considered a better “representation” of that document, even if they are not the most numerous.
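As a rough illustration of the idea, here is a minimal tf-idf sketch in plain Python. The toy corpus is hypothetical, and the formula used (term frequency times log(N / document frequency)) is only one common variant; libraries often apply smoothing or normalization on top of it.

```python
import math
from collections import Counter

# Toy corpus (hypothetical example data).
docs = [
    "the cat sat on the mat",
    "the dog chased the cat",
    "the laptop battery lasts long",
]

tokenized = [doc.split() for doc in docs]
n_docs = len(tokenized)

# Document frequency: in how many documents does each term appear?
doc_freq = Counter(term for tokens in tokenized for term in set(tokens))

def tf_idf(tokens):
    """Return {term: tf-idf weight} for one tokenized document."""
    counts = Counter(tokens)
    total = len(tokens)
    return {
        # tf = share of the document's words; idf = log(N / document frequency)
        term: (count / total) * math.log(n_docs / doc_freq[term])
        for term, count in counts.items()
    }

weights = [tf_idf(tokens) for tokens in tokenized]
```

In this sketch, "the" appears in every document, so its weight is zero, while "mat" appears in only one document and ends up with a higher weight than "cat", which appears in two.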

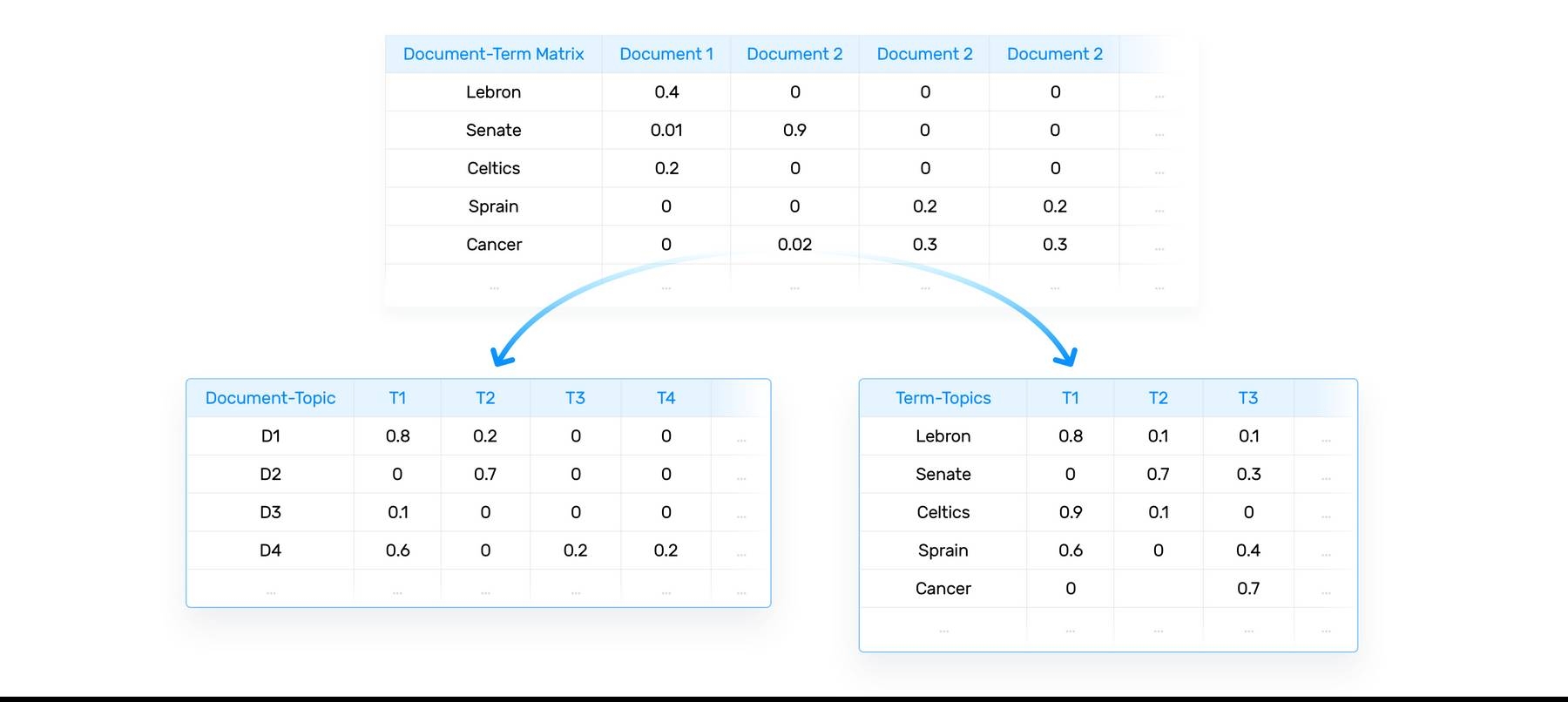

After doing the word frequency calculation, we are left with a matrix that has a row for every document and a column for every word. Each cell is the calculated frequency for that word in that document. This is the document-term matrix; it relates documents to terms.

Hidden inside it is what we want: a document-topic matrix and a term-topic matrix, which relate documents to topics and terms to topics. These matrices are the ones that show information about the topics of the texts.

The way these matrices are generated is by decomposing the document-term matrix into three matrices using a technique called truncated SVD. Firstly, singular-value decomposition (SVD) is a linear algebra algorithm for factorizing a matrix into the product of three matrices U * S * V. The important part is that the middle matrix S is a diagonal matrix of the singular values of the original matrix. For LSA, every one of the singular values represents a potential topic.

Truncated SVD selects the largest t singular values and keeps the first t columns of U and the first t rows of V, reducing the dimensionality of the original decomposition. t will be the number of topics that the algorithm finds, so it's a hyperparameter that will need tuning. The idea is that the most important topics are selected, and U is the document-topic matrix and V is the term-topic matrix.

The vectors that make up these matrices represent documents expressed with topics, and terms expressed with topics. They can be compared using measures such as cosine similarity to evaluate how closely two documents, or two terms, are related.
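The decomposition can be sketched with NumPy as follows. This is an illustrative example under stated assumptions, not a production LSA pipeline: the small matrix is made-up data with documents as rows and terms as columns, and `t = 2` is an arbitrary choice for the number of topics.

```python
import numpy as np

# Hypothetical document-term matrix: 4 documents (rows) x 5 terms (columns).
terms = ["price", "cheap", "battery", "screen", "charge"]
A = np.array([
    [2.0, 1.0, 0.0, 0.0, 0.0],
    [1.0, 2.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 2.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 2.0, 1.0],
])

# Full SVD: A = U @ diag(S) @ Vt, singular values sorted in descending order.
U, S, Vt = np.linalg.svd(A, full_matrices=False)

t = 2                       # number of topics to keep (a hyperparameter)
doc_topic = U[:, :t]        # documents expressed with topics
term_topic = Vt[:t, :].T    # terms expressed with topics

def cosine(a, b):
    """Cosine similarity between two vectors."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

sim_same = cosine(doc_topic[0], doc_topic[1])  # two pricing documents
sim_diff = cosine(doc_topic[0], doc_topic[2])  # pricing vs. hardware document
```

With this toy data, the two pricing documents end up pointing in the same direction in topic space, while a pricing document and a hardware document are close to orthogonal.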

Latent Dirichlet Allocation (LDA)

Here is where things start getting a bit technical. Understanding LDA fully involves some advanced mathematical probability topics. However, the basic idea behind it is more easily understood.

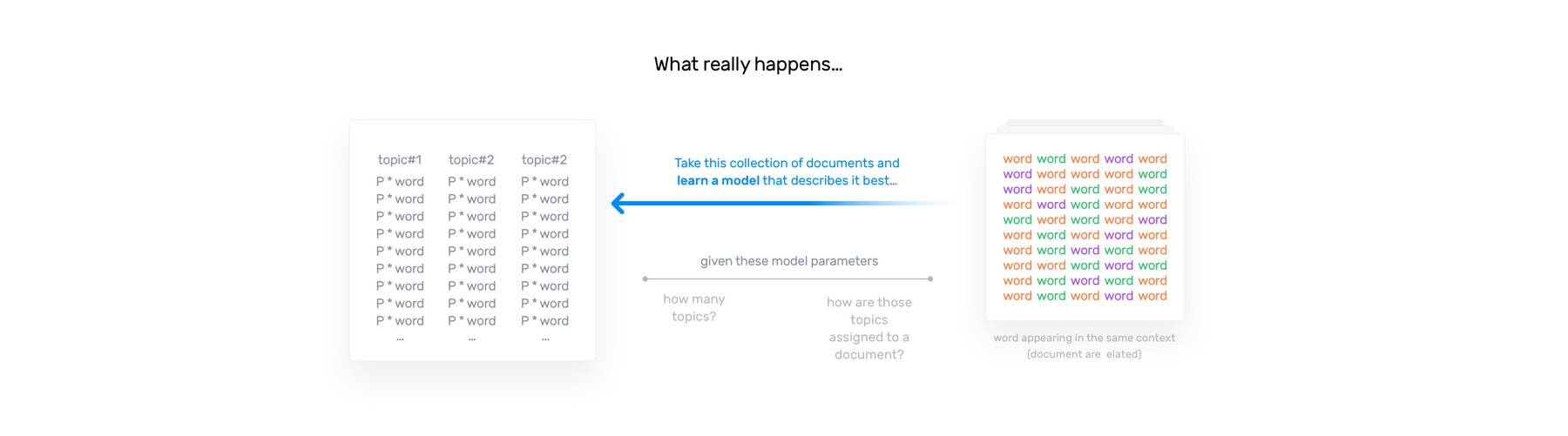

Imagine a fixed set of topics. We define each topic as represented by an (unknown) set of words. These are the topics that our documents cover, but we don't know what they are yet. LDA tries to map all the (known) documents to the (unknown) topics in a way such that the words in each document are mostly captured by those topics.

The fundamental assumption here is the same used for LSA: documents with the same topic will use similar words. It's also assumed that every document is composed of a mixture of topics, and every word has a probability of belonging to a certain topic.

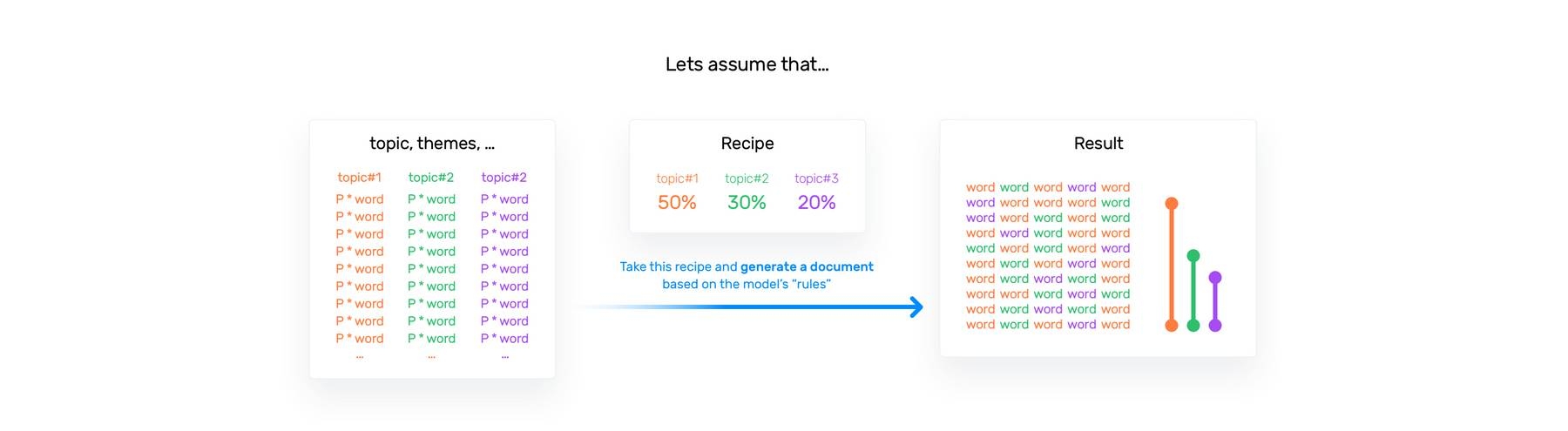

LDA assumes documents are generated the following way: pick a mixture of topics (say, 20% topic A, 80% topic B, and 0% topic C) and then pick words that belong to those topics. The words are picked at random according to how likely they are to appear in each of the chosen topics.

Of course, in real life documents aren't written this way. Documents are written by humans and have characteristics that make them readable, such as word order, grammar, etc. However, it can be argued that just by looking at the words of a document, you can detect the topic, even if the actual message of the document doesn't come through.

This is what LDA does. It sees a document and assumes that it was generated as described above. Then it works backward from the words that make up the document and tries to guess the mixture of topics that resulted in that particular arrangement of words.
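The generative story that LDA assumes can be sketched in a few lines of Python. The topics, their word probabilities, and the mixture below are hypothetical values chosen for illustration. In full LDA, the mixtures and the topic-word distributions are themselves drawn from Dirichlet distributions, which this sketch leaves out.

```python
import random

# Hypothetical topics: each one is a probability distribution over words.
topics = {
    "A": {"price": 0.5, "cheap": 0.3, "cost": 0.2},
    "B": {"battery": 0.4, "screen": 0.4, "keyboard": 0.2},
    "C": {"shipping": 0.6, "delivery": 0.4},
}

# Topic mixture for one document: 20% topic A, 80% topic B, 0% topic C.
mixture = {"A": 0.2, "B": 0.8, "C": 0.0}

def generate_document(mixture, topics, n_words, rng):
    """For each word: pick a topic from the mixture, then a word from that topic."""
    topic_names = list(mixture)
    topic_weights = [mixture[name] for name in topic_names]
    words = []
    for _ in range(n_words):
        topic = rng.choices(topic_names, weights=topic_weights)[0]
        vocab = list(topics[topic])
        word_weights = [topics[topic][word] for word in vocab]
        words.append(rng.choices(vocab, weights=word_weights)[0])
    return words

rng = random.Random(0)  # fixed seed so the sketch is repeatable
document = generate_document(mixture, topics, n_words=10, rng=rng)
```

Training LDA runs this process in reverse: given only the words of each document, it estimates which mixtures and which topic-word distributions are most likely to have produced them.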

The way this is achieved exceeds the scope of this article but if you'd like to learn more, a good starting point is the original LDA paper.

Something we should mention about the implementation is that it has two hyperparameters for training, usually called α (alpha) and β (beta). Knowing what these do is important for using libraries that implement the algorithm.

Alpha controls the similarity of documents. A low value will represent documents as a mixture of few topics, while a high value will output document representations of more topics -- making all the documents appear more similar to each other.

Beta is the same but for topics, so it controls topic similarity. A low value will represent topics as more distinct by making fewer, more unique words belong to each topic. A high value will have the opposite effect, resulting in topics containing more words in common.

Another important thing that has to be specified before training is the number of topics that the model will have. The algorithm cannot decide this by itself; it needs to be told how many topics to find. Then, the output for every document will be the mixture of topics that each particular document has. This output is just a vector, a list of numbers that means "for topic A, 0.2; for topic B, 0.7; ..." and so on. These vectors can be compared in different ways, and these comparisons are useful for understanding the corpus, to get an idea of its fundamental structures.

Topic Classification

Unlike topic modeling, in topic classification you already know what your topics are.

For example, you may want to categorize customer support tickets by Software Issue and Billing Issue.

What you want to do is assign one of these topics to each of the tickets, usually to speed up and automate some human-dependent processes. For example, you could automatically route support tickets, sorted by topic, to the correct person on the team without having to sift through them manually.

Unlike the algorithms for topic modeling, the machine learning algorithms used for topic classification are supervised. This means you need to feed them documents already labeled by topic, and the algorithms learn how to label new, unseen documents with these topics.

Now, how you predetermine topics for your documents is a different issue entirely. If you're looking to automate some already existing task, then you probably have a good idea about the topics of your texts. In other cases, you could use the previously discussed topic modeling methods as a way to better understand the content of your documents beforehand.

What ends up happening in real-life scenarios is that the topics are uncovered as the model is built.

Since automated classification – either by rules or machine learning – always involves a first step of manually analyzing and tagging texts, you usually end up refining your topic set as you go. Before you can consider the model finished, your topics should be solid and your dataset consistent.

Next, we will cover the main paths for automated topic classification: rule-based systems, machine learning systems, and hybrid systems.

Rule-Based Systems

Before getting into machine learning algorithms, it's important to note that it's possible to build a topic classifier entirely by hand, without machine learning.

The way this works is by directly programming a set of hand-made rules based on the content of the documents that a human expert actually read. The idea is that the rules represent the codified knowledge of the expert, and are able to discern between documents of different topics by looking directly at semantically relevant elements of a text, and at the metadata that a document may have. Each one of these rules consists of a pattern and a prediction (in this case, a predicted topic).

Back to support tickets, a way to solve this problem using rules would be to define lists of words, one for each topic (e.g., for Software Issue words like bug, program, crash, etc., and for Billing Issue words like price, charge, invoice, $, and so on). Now, when a new ticket comes in, you count the frequency of software-related words and billing-related words. Then, the topic with the highest frequency gets the new ticket assigned to it.
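The keyword-counting rule described above could look roughly like this. The word lists come from the example, while the function name, the tokenization, and the tie-breaking behavior are assumptions made for this sketch.

```python
import re
from collections import Counter

# Keyword lists, one per topic, following the example above.
RULES = {
    "Software Issue": {"bug", "program", "crash"},
    "Billing Issue": {"price", "charge", "invoice", "$"},
}

def classify_ticket(text):
    """Return the topic whose keywords occur most often, or None if none match."""
    # Extract words plus the "$" symbol; lowercase so matching is case-insensitive.
    tokens = re.findall(r"[a-z]+|\$", text.lower())
    scores = Counter()
    for topic, keywords in RULES.items():
        scores[topic] = sum(1 for token in tokens if token in keywords)
    # On a tie, the topic listed first in RULES wins (an arbitrary choice).
    topic, best = scores.most_common(1)[0]
    return topic if best > 0 else None
```

Note that exact matching misses variants such as "crashes" or "invoiced". Handling those cases (with stemming, extra patterns, or more rules) is part of the maintenance cost discussed below.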

Rule-based systems such as this are human comprehensible; a person can sit down, read the rules, and understand how a model works. Over time it's possible to improve them by refining existing rules and adding new ones.

However, there are some disadvantages. First, these systems require deep knowledge of the domain (remember that we used the word expert? It's not a coincidence). They also require a lot of work, because creating rules for a complex system can be quite difficult and requires a lot of analysis and testing to make sure it's working as intended. Lastly, rule-based systems are a pain to maintain and don't scale very well, because adding new rules will affect the performance of the rules that were already in place.

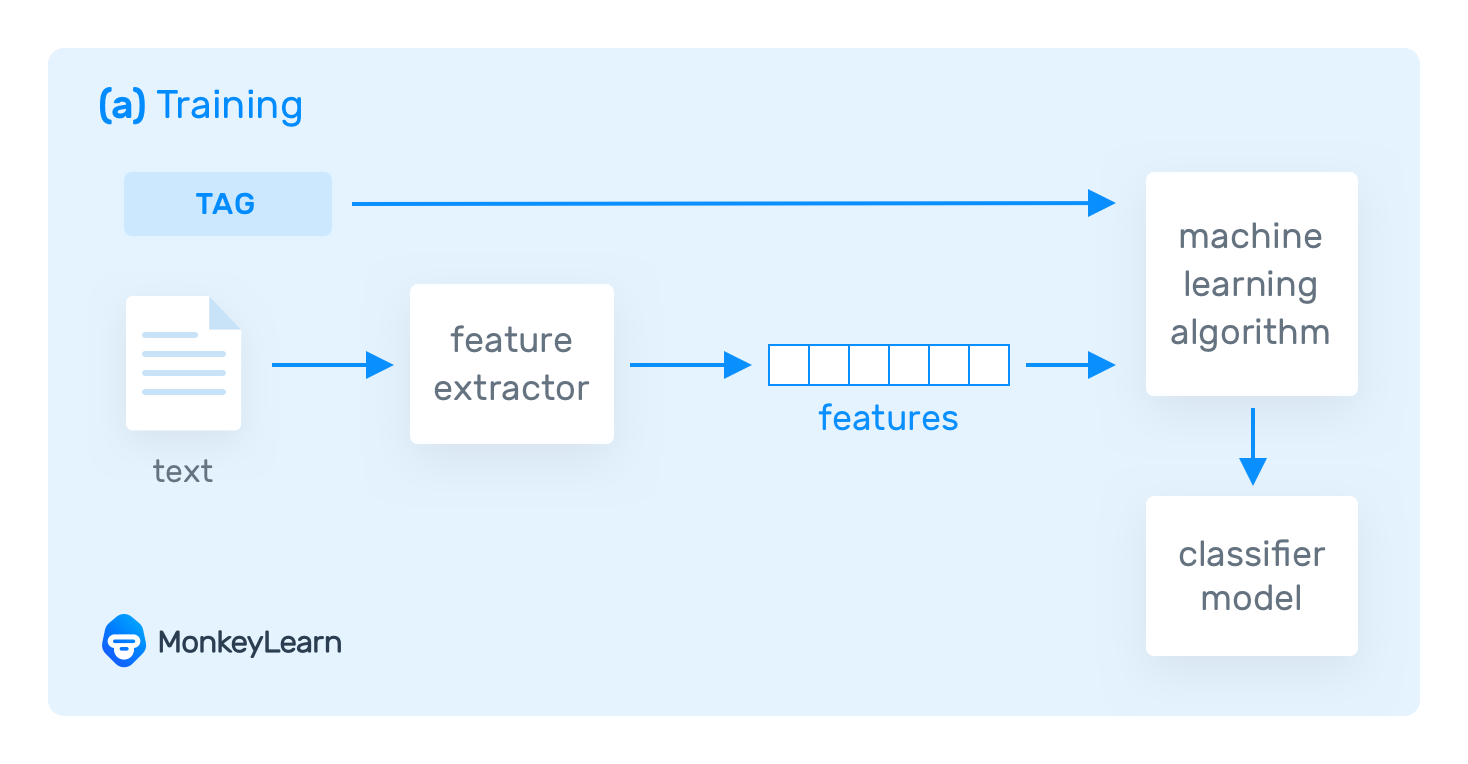

Machine Learning Systems

In machine learning classification, examples of text and the expected categories (AKA training data) are used to train an NLP topic classification model. This model learns from the training data (with the help of natural language processing) to recognize patterns and classify the text into the categories you define.

First, training data has to be transformed into something a machine can understand, that is, vectors (i.e. lists of numbers which encode information). By using vectors, the model can extract relevant pieces of information (features) which will help it learn from the training data and make predictions. There are different methods to achieve this, but one of the most used is known as bag-of-words vectorization.
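A minimal bag-of-words vectorizer can be sketched as follows. The two training texts are hypothetical, and real pipelines usually add steps such as lowercasing, punctuation handling, and stop-word removal.

```python
from collections import Counter

# Hypothetical training texts.
texts = [
    "my invoice shows the wrong charge",
    "the program crashes after the update",
]

# Vocabulary: every distinct word, in a fixed (sorted) order.
vocabulary = sorted({word for text in texts for word in text.split()})

def vectorize(text):
    """Map a text to a list of word counts, one position per vocabulary word."""
    counts = Counter(text.split())
    return [counts[word] for word in vocabulary]

vectors = [vectorize(text) for text in texts]
```

Each text becomes a vector with one position per vocabulary word. Words that were never seen in the training texts are simply dropped when a new text is vectorized.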

Once the training data is transformed into vectors, they are fed to an algorithm which uses them to produce a model that is able to classify the texts to come:

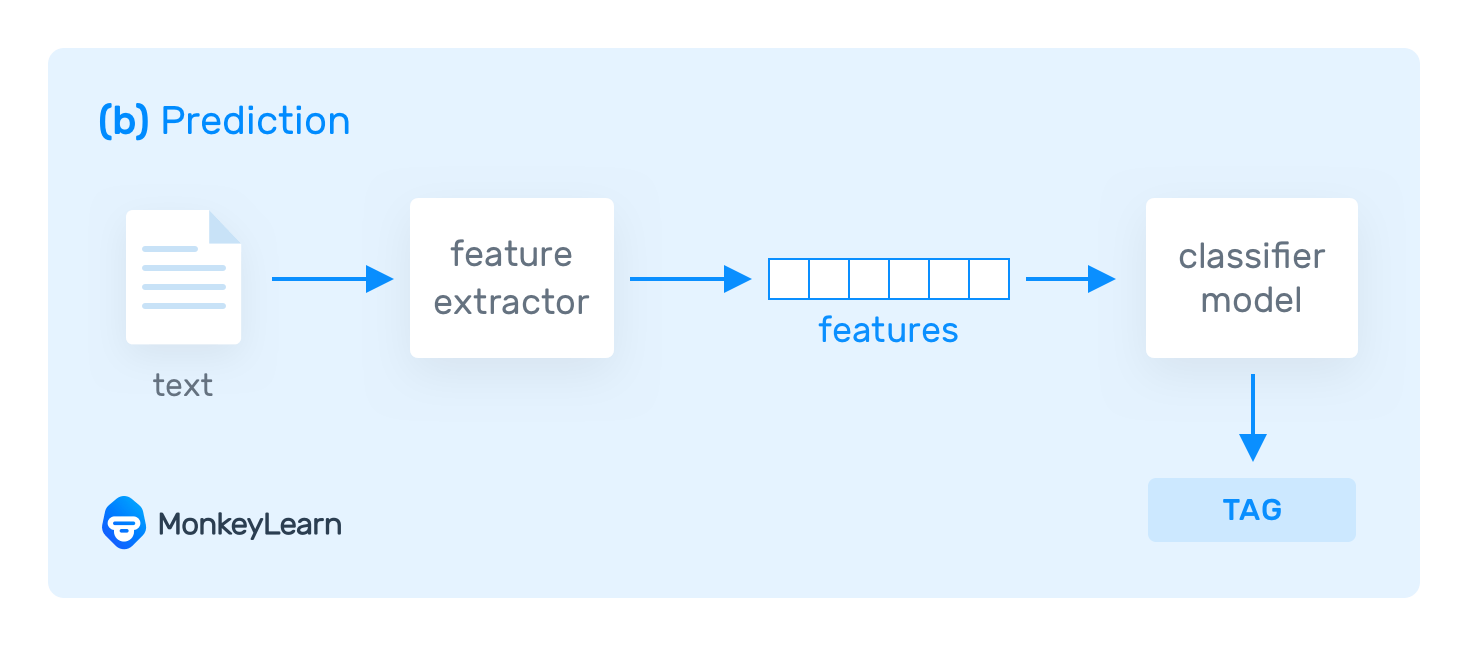

For making new predictions, the trained model transforms an incoming text into a vector, extracts its relevant features, and makes a prediction.

The classification model can be improved by training it with more data and changing the training parameters of the algorithm; these are known as hyperparameters.

The following are broad-stroke overviews of machine learning algorithms that can be used for topic classification. For a more in-depth explanation of each, check out the linked articles.

Naive Bayes

Naive Bayes is a family of simple algorithms that usually give great results from small amounts of training data and limited computational resources. The most popular member of the family is probably Multinomial Naive Bayes (MNB), and it's one of the algorithms that MonkeyLearn uses.

Similar to LSA, MNB correlates the probability of words appearing in a text with the probability of that text being about a certain topic. The main difference between the two is what is done with the data afterwards: LSA looks for patterns in the existing dataset, while MNB uses the existing dataset to make predictions for new texts.
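To make the idea concrete, here is a small Multinomial Naive Bayes sketch written from scratch. It is an illustration under stated assumptions rather than a description of any particular product's implementation: the training texts are invented, and it uses add-one (Laplace) smoothing and ignores words that never appeared in training.

```python
import math
from collections import Counter, defaultdict

# Hypothetical labeled training data.
training_data = [
    ("the program has a bug", "Software Issue"),
    ("the app will crash on start", "Software Issue"),
    ("wrong charge on my invoice", "Billing Issue"),
    ("the price on the invoice is wrong", "Billing Issue"),
]

word_counts = defaultdict(Counter)  # topic -> word -> count
topic_counts = Counter()            # topic -> number of training texts
for text, topic in training_data:
    topic_counts[topic] += 1
    word_counts[topic].update(text.split())

vocabulary = {word for counts in word_counts.values() for word in counts}

def predict(text):
    """Return the topic with the highest log-probability for the text."""
    best_topic, best_score = None, -math.inf
    for topic in topic_counts:
        # Log prior: share of training texts that have this topic.
        score = math.log(topic_counts[topic] / len(training_data))
        total_words = sum(word_counts[topic].values())
        for word in text.split():
            if word not in vocabulary:
                continue  # ignore words never seen in training
            # Add-one smoothing avoids zero probabilities for unseen pairs.
            likelihood = (word_counts[topic][word] + 1) / (total_words + len(vocabulary))
            score += math.log(likelihood)
        if score > best_score:
            best_topic, best_score = topic, score
    return best_topic
```

Working with log-probabilities instead of multiplying raw probabilities keeps the scores numerically stable for longer texts.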

Support Vector Machines

Although based on a simple idea, Support Vector Machines (SVM) is more complex than Naive Bayes, so it requires more computational power, but it usually gives better results. However, it's possible to get training times similar to those of an MNB classifier with optimization by feature selection, in addition to running an optimized linear kernel such as scikit-learn's LinearSVC.

The basic idea for SVM is, once all the texts are vectorized (so they are points in mathematical space), to find the best line (in higher-dimensional space, a hyperplane) that separates these vectors into the desired topics. Then, when a new text comes in, vectorize it and take a look at which side of the line it ends up on: that's the output topic.
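The "which side of the line" step can be sketched as below. Only prediction is shown: the weights and bias are hypothetical values standing in for what SVM training would produce, and the two features (counts of software-related and billing-related words) are an assumption made for this example.

```python
# Hypothetical hyperplane w . x + b = 0, as if produced by training.
# Assumed features: [count of software words, count of billing words].
weights = [1.0, -1.0]
bias = 0.0

def predict(vector):
    """Classify a vector by the side of the hyperplane it falls on."""
    score = sum(w * x for w, x in zip(weights, vector)) + bias
    return "Software Issue" if score >= 0 else "Billing Issue"
```

Training an SVM amounts to choosing the weights and bias so that the hyperplane separates the labeled training vectors with the widest possible margin.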

Deep Learning

Deep learning is actually a catch-all term for a family of algorithms loosely inspired by the way human neurons work. Although the ideas behind artificial neural networks originate in the 1950s, these algorithms have seen a great resurgence in recent years thanks to the decline of computing costs, the increase of computing power, and the availability of huge amounts of data.

Text classification in general, and topic classification in particular, have greatly benefited from this resurgence. Deep learning models usually offer great results in exchange for some draconian computational requirements. It's not unusual for deep learning models to train for days, weeks, or even months.

For topic classification, the two main deep learning architectures used are Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN). The differences are outside the scope of this article, but here's a good comparison with some real-world benchmarks.

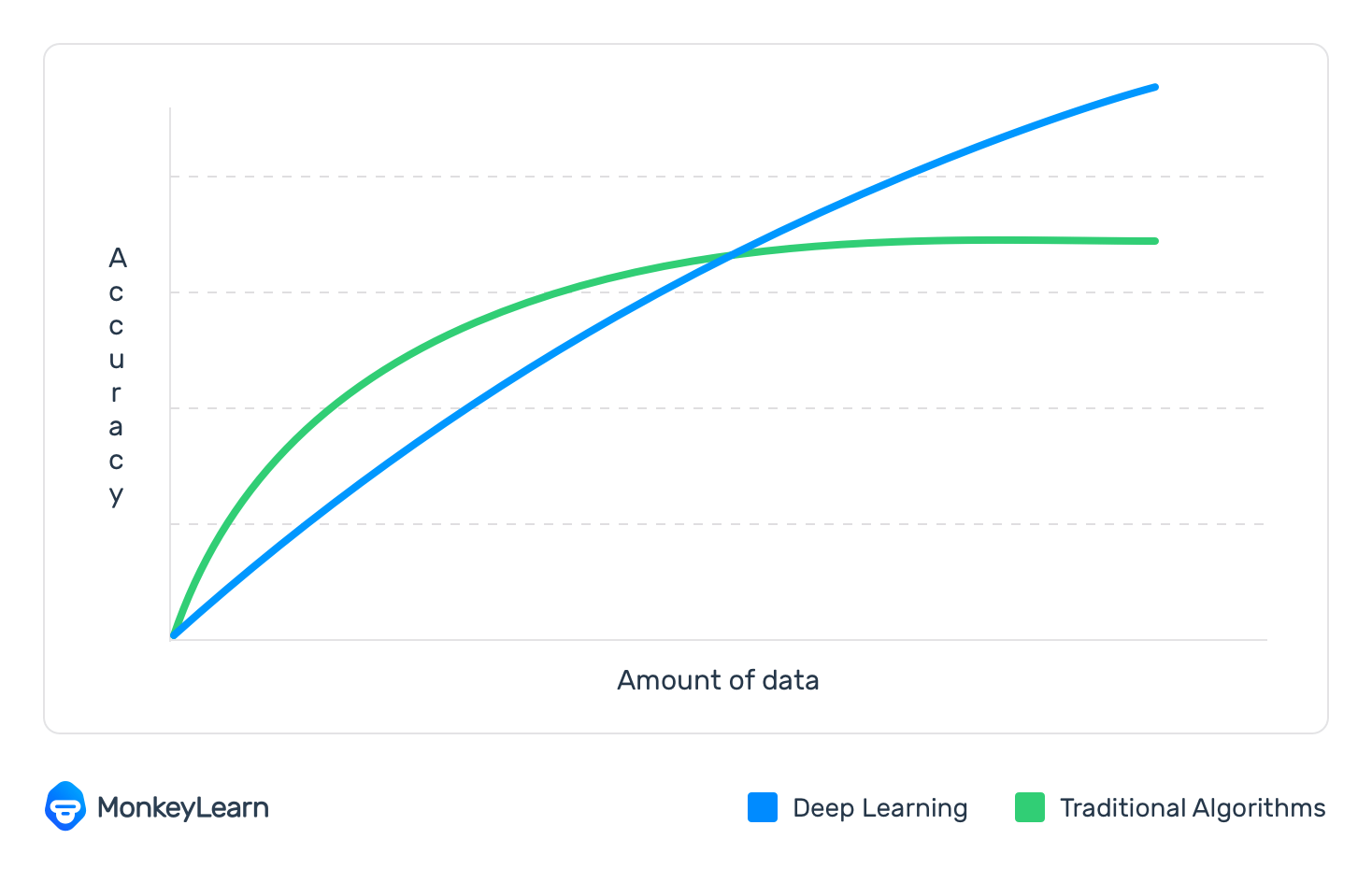

Although deep learning algorithms require much more training data than traditional machine learning algorithms, deep learning classifiers continue to get better the more data they have. On the other hand, traditional machine learning algorithms, such as SVM and MNB, tend to reach a plateau, after which more training data brings little or no improvement.

This doesn't mean that the other algorithms are strictly worse; it depends on the task at hand. For instance, spam detection was declared "solved" a couple of decades ago using just Naive Bayes and n-grams.

Word embedding methods like Word2Vec or GloVe, often grouped with deep learning, are also used; these are great for getting better vector representations for words when training with other, traditional machine learning algorithms.

Hybrid Systems

The idea behind hybrid systems is to combine a base machine learning classifier with a rule-based system that improves the results with fine-tuned rules. These rules can be used to correct topics that haven't been correctly modeled by the base classifier.
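A hybrid setup could be wired together roughly like this. Both the stand-in base classifier and the override rules are hypothetical; in practice the base classifier would be a trained model, and the rules would come from reviewing its mistakes.

```python
def base_classifier(text):
    """Stand-in for a trained machine learning classifier (hypothetical)."""
    return "Billing Issue" if "invoice" in text.lower() else "Software Issue"

# Hypothetical correction rules: (phrase to look for, topic to assign).
OVERRIDE_RULES = [
    ("refund", "Billing Issue"),
    ("error code", "Software Issue"),
]

def hybrid_classify(text):
    """Apply hand-written rules first; fall back to the base classifier."""
    lowered = text.lower()
    for phrase, topic in OVERRIDE_RULES:
        if phrase in lowered:
            return topic
    return base_classifier(text)
```

Here the rules take priority over the model. Another reasonable design is to apply rules only when the model's confidence is low, which requires a classifier that reports a confidence score.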

Metrics and evaluation

Training models is great and all, but unless you have a regular, consistent way to measure your results, you won't be able to judge how well your model works or whether your changes improve it.

In order to measure the performance of a model, you'll need to let it categorize texts whose topic you already know, and see how it performs.

The basic metrics analyzed are:

- Accuracy: the percentage of texts that were predicted with the correct topic

- Precision: the percentage of texts the model got right out of the total number of texts that it predicted for a given topic

- Recall: the percentage of texts the model correctly predicted for a given topic out of the total number of texts it should have predicted for that topic

- F1 Score: the harmonic mean of precision and recall
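These four metrics can be computed for a single topic as in the sketch below. The function name and the example labels are made up for illustration, and the choice to return 0.0 when a denominator is zero is a convention assumed here.

```python
def evaluate(true_topics, predicted_topics, topic):
    """Compute accuracy plus precision, recall, and F1 for one topic."""
    pairs = list(zip(true_topics, predicted_topics))
    tp = sum(1 for t, p in pairs if t == topic and p == topic)  # true positives
    fp = sum(1 for t, p in pairs if t != topic and p == topic)  # false positives
    fn = sum(1 for t, p in pairs if t == topic and p != topic)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

# Hypothetical gold labels and model predictions for six tickets.
true_topics = ["Billing", "Billing", "Billing", "Shipping", "Shipping", "Account"]
predicted = ["Billing", "Billing", "Shipping", "Shipping", "Billing", "Account"]
scores = evaluate(true_topics, predicted, "Billing")
```

In this example the model predicts Billing three times and is right twice (precision 2/3), and it finds two of the three real Billing tickets (recall 2/3), while overall accuracy is 4 out of 6.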

One important note: you shouldn’t use training data to measure performance, as the model has already seen these samples.

The ideal way to measure would be to set aside part of the manually tagged data, leave it out of training, and use it to test once the model is trained. This dataset is called a gold standard. However, leaving part of your hard-earned data unused instead of powering the model isn't ideal. You could add this testing data to the final model, but then you have the same problem: you don't know if it was actually better off without it.

Something that can be done instead is called cross-validation. You split the training dataset randomly into equally sized sets (for example 4 sets with 25% of the data each). For each one of these sets, you train a classifier with all the data that's not in this set (75% of the data) and use this set as the gold standard to test.

Then, you build the final model by training with all the data, but the performance metrics you use for it are the average of the partial evaluations.
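The procedure can be sketched in plain Python as follows. The helper names are hypothetical, `train_fn` and `score_fn` are placeholders for whatever training and evaluation code you use, and the sketch assumes the number of samples is divisible by the number of folds.

```python
import random

def k_fold_indices(n_samples, k, seed=0):
    """Shuffle the sample indices and split them into k equally sized folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    fold_size = n_samples // k  # assumes n_samples is divisible by k
    return [indices[i * fold_size:(i + 1) * fold_size] for i in range(k)]

def cross_validate(samples, k, train_fn, score_fn):
    """Train on k-1 folds, test on the held-out fold, and average the scores."""
    scores = []
    for held_out in k_fold_indices(len(samples), k):
        held = set(held_out)
        train = [s for i, s in enumerate(samples) if i not in held]
        test = [samples[i] for i in held_out]
        model = train_fn(train)          # fit on 75% of the data when k = 4
        scores.append(score_fn(model, test))
    return sum(scores) / len(scores)
```

With `k=4`, each classifier is trained on 75% of the data and tested on the remaining 25%, matching the example above. The returned average is the performance estimate reported for the final model trained on all the data.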

Use Cases & Applications

Topic analysis helps businesses become more efficient by saving time on repetitive manual tasks and gathering insights from the text data they manage on a daily basis.

From sales and marketing to customer support and product teams, topic analysis offers endless possibilities across different industries and areas within a company. Let’s say you want to uncover the main themes of conversations around your brand in social media, understand the priorities of hundreds of incoming support tickets, or identify brand promoters based on customer feedback. Topic analysis enables you to do all this (and more) in a simple, fast, and cost-effective way.

Getting started is easy – you don’t need a data science background or coding skills.

How to use topic analysis for your business:

- Social Media Monitoring

- Brand Monitoring

- Customer Service

- Voice of Customer (VoC)

- Business Intelligence

- Sales and Marketing

- Product Analytics

- Knowledge Management

Social Media Monitoring

Every day, people send 500 million tweets. Impressive, right? And that’s just Twitter! Within these immense volumes of social media data, there are mentions of products and services, stories of customer experiences, and interactions between users and brands. Following these conversations is vital to get real-time actionable insights from customers, address potential issues, and anticipate crises. But getting a handle on all this data can be daunting.

Merely counting clicks, likes, and brand mentions isn’t enough anymore. Topic analysis allows you to automatically add context to social media data, to understand what people are actually saying about your brand.

Imagine you work at United Airlines. You could use topic detection to analyze what users are saying about your brand on Twitter, Facebook, and Instagram, and easily identify the most common topics in the conversation. Your customers may be alluding to functionalities, ease of use, or maybe referring to customer support issues. With topic analysis, you’ll get valuable insights like:

- Understanding what people value the most about your product or service

- Identifying which areas of your product or service are raising more concerns

- Recognizing your pain-points, so that you can use them as opportunities for improvement

You can also use topic detection to keep an eye on your competition and track trends in your field over time.

Add an extra dimension to your data analysis, by combining topic detection with sentiment analysis, so you can get a sense of the feelings and emotions behind social media comments. Aspect-based sentiment analysis is a machine learning technique that allows you to associate specific sentiments (positive, negative, neutral) to different aspects (topics) of a product or service. In the case of United Airlines, not only would you know that most of your users are talking about your in-flight menu on Twitter, but you could also find out if they are referring to it in a negative or positive way, as well as the main keywords they are using for this topic.

Follow reactions to marketing campaigns or product releases in real time and get an accurate map of perceptions and follow them as they change over time.

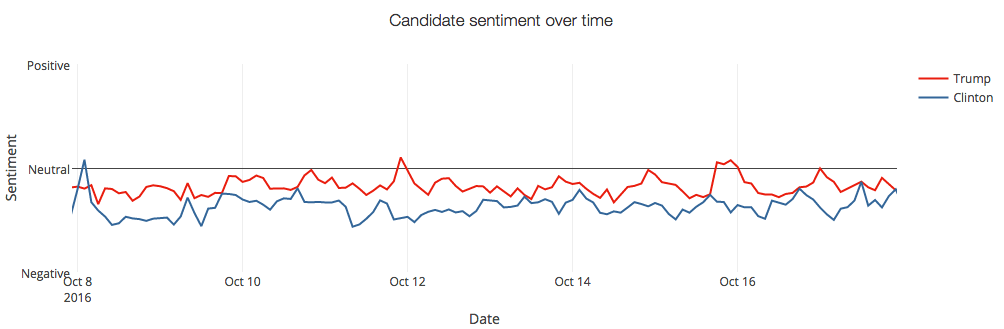

Example: Trump vs Hillary, analyzing Twitter mentions during the US Elections

At MonkeyLearn, we used machine learning to analyze millions of tweets posted by users during the 2016 US elections. First, we classified tweets by topic, whether they were talking about Donald Trump or Hillary Clinton. Then, we used sentiment analysis to classify tweets as positive, negative or neutral. This allowed us to do all sorts of analysis, like extracting the most relevant keywords for the negative tweets about Trump on a particular day.

This graph shows the progression of positive, neutral and negative tweets referring to Trump and Clinton over time. The majority of tweets about both candidates are negative.

Brand Monitoring

It’s not all about social media. Blogs, news outlets, review sites, and forums have a strong influence over a brand’s reputation, too. In fact, nearly 90% of consumers read at least 10 online reviews before forming an opinion about a business, and almost 60% will only use a product or service if it has four or more stars. We’ve all been there, whether it’s booking a hotel for your next holiday or downloading a new app on your cell phone, checking the reviews is an inevitable step in our decision-making process.

Real-time topic analysis allows you to keep track of your brand image (so you can take action in case of a crisis, or make improvements based on customer feedback), and also to monitor your competitors and detect the latest trends in your industry.

Use topic identification and analysis to get insights about your brand by detecting and tracking the different areas of your business people are discussing the most.

Then, for a deeper understanding of your data, you can perform aspect-based sentiment analysis to “opinion mine” for your customers’ feelings and emotions. You could even combine this with keyword extraction to reveal the most relevant terms used about each of the topics. Combining a number of text analysis techniques allows you to get truly fine-grained results from your data analysis, to understand why something is happening and even make predictions for the future.

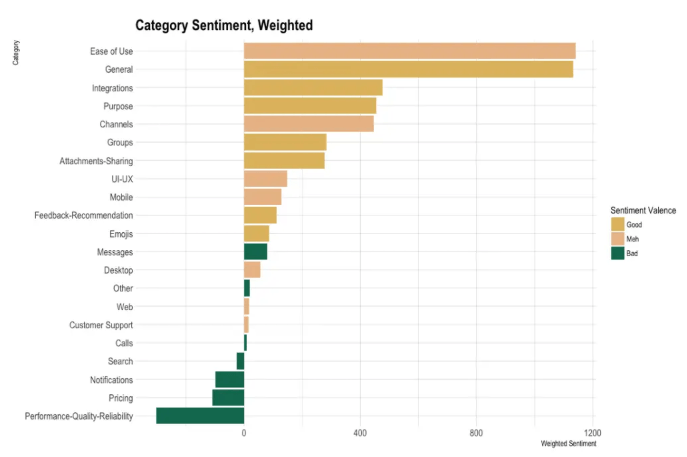

Example: Analyzing Slack reviews on Capterra

With MonkeyLearn, you can create personalized, custom-built models with machine learning and train them to do the work automatically. To show you exactly how it works, we used the MonkeyLearn R package to analyze thousands of Slack reviews from the product review site Capterra.

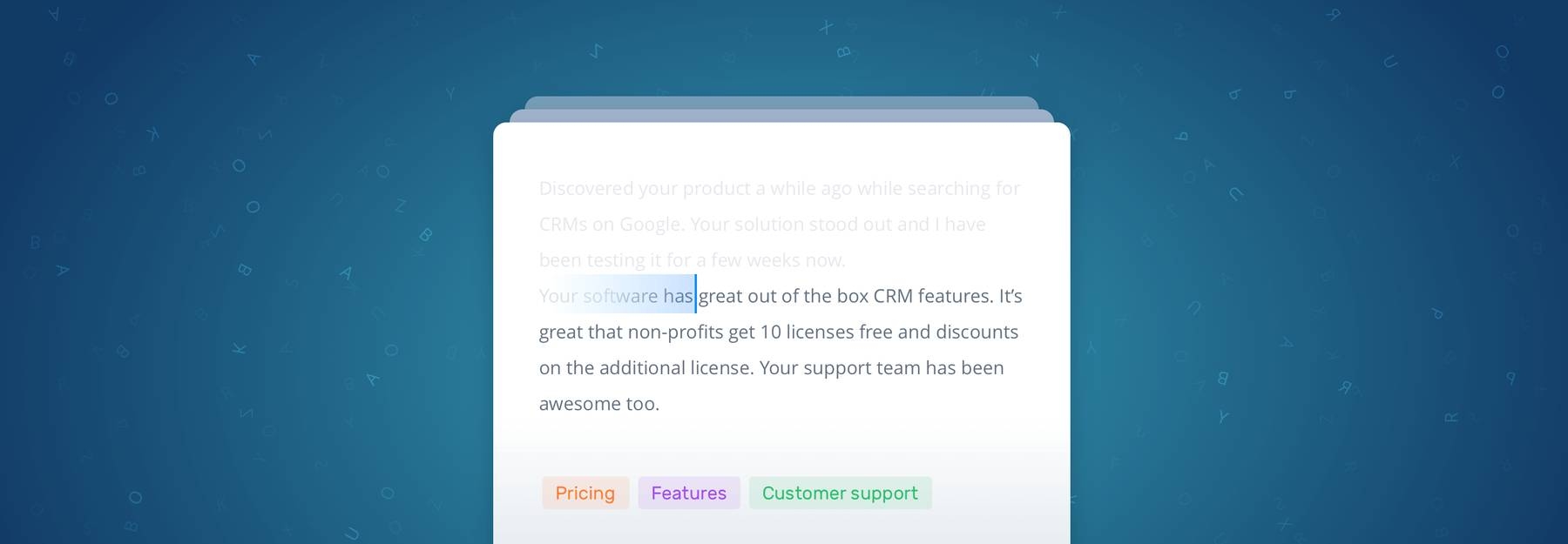

After scraping the data, we defined a series of topic classifiers that helped us detect what the text in the reviews was about: pricing, UX, customer support, performance, etc. Then, we used an opinion unit extractor to divide each review into individual opinions (as some sentences may contain more than one opinion). Finally, we created a sentiment analysis model to classify them as positive, negative or neutral.

The graphic above shows the sentiment for each of the aspects analyzed, weighted by the number of mentions. We see that the aspects users love most about Slack are ease of use, integrations, and purpose, while most of the complaints refer to performance-quality-reliability, pricing, and notifications.

Customer Service

It’s no longer enough to just have an amazing product at a competitive price. Being able to deliver a great customer experience can make all the difference and help you stand out from your competitors. According to a 2017 Microsoft report, 96% of people surveyed say customer service has influenced their choice of and loyalty to a brand. Plus, 56% stated that they’d stopped using a product or service due to poor customer experience.

With perhaps hundreds or thousands of support tickets arriving at your helpdesk every day, a big part of the job in customer service consists of processing large amounts of text. First, you need to find out the subject of each ticket and tag it accordingly, and then triage tickets to the corresponding area that handles each type of issue.

Machine learning opens the door to automating this repetitive and time-consuming (not to mention horribly boring) task to save valuable time for your customer support team, allowing them to focus on what they can do best: helping customers and keeping them happy. Machine learning algorithms can be trained to sort customer support tickets (in large batches or instantaneously), tag each ticket with the relevant topic or department, and automatically route it to the proper employee, all with no need for human interaction.

Thanks to a combination of machine learning models (not only topic labeling, but also intent classification, urgency detection, sentiment analysis, and language detection) your customer support team can:

- Automatically tag customer support tickets, which you can easily do with the MonkeyLearn Zendesk integration

- Automatically triage and route conversations to the most appropriate team

- Automatically detect the urgency of a support ticket and prioritize accordingly

- Get insights from customer support conversations
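To make the tag-then-route idea concrete, here is a minimal sketch. The team names, the `ROUTES` table, and the keyword-based `classify_topic` function are all hypothetical stand-ins; a real setup would call a trained topic classifier instead of keyword rules.

```python
# Hypothetical mapping from predicted topic to the team that handles it.
ROUTES = {
    "Billing": "finance-team",
    "Bug Report": "engineering-team",
    "Account": "support-team",
}
DEFAULT_TEAM = "support-team"

def classify_topic(text):
    """Stand-in for a trained topic classifier (keyword rules, for illustration only)."""
    lowered = text.lower()
    if "invoice" in lowered or "refund" in lowered:
        return "Billing"
    if "error" in lowered or "crash" in lowered:
        return "Bug Report"
    return "Account"

def route_ticket(text):
    """Tag a ticket with a topic and pick the team that should receive it."""
    topic = classify_topic(text)
    return {"text": text, "topic": topic, "team": ROUTES.get(topic, DEFAULT_TEAM)}

ticket = route_ticket("The app shows an error when I export a report")
print(ticket["topic"], "->", ticket["team"])
```

Keeping the routing table separate from the classifier means either one can change without touching the other.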

Voice of Customer (VoC)

Customer feedback is a valuable source of information that provides insights into the experiences, level of satisfaction, and expectations of your customers, so you can take direct action, identify promoters and detractors, and make improvements based on feedback.

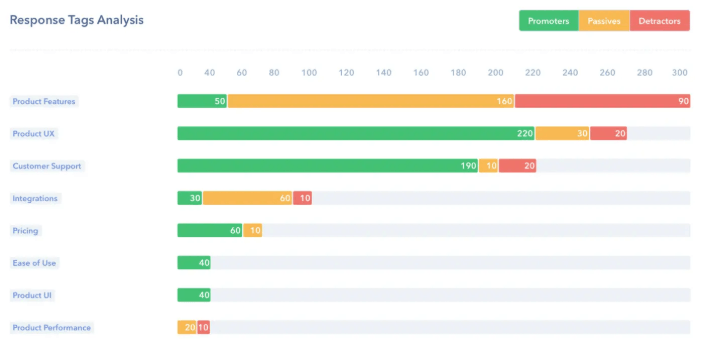

Net Promoter Score (NPS) surveys and direct customer surveys are two of the most common ways of measuring customer feedback. Gathering the information is the simple part of the process, but then comes the analysis. Fortunately, topic analysis enables teams to automatically process surveys, usually in just minutes.

NPS surveys ask a simple question to evaluate customer satisfaction:

“How likely are you to recommend us to a friend?”

The customer gives a score from 0 to 10 and, depending on the answer, is classified as a promoter (9 or 10), a passive (7 or 8), or a detractor (6 or below).
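The score bands above translate directly into code. The sketch below also computes the overall score using the standard NPS definition (percentage of promoters minus percentage of detractors), which is not spelled out in this guide; the sample scores are made up.

```python
def nps_category(score):
    """Map a 0-10 survey score to promoter, passive, or detractor."""
    if not 0 <= score <= 10:
        raise ValueError("NPS scores range from 0 to 10")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"

def net_promoter_score(scores):
    """Percentage of promoters minus percentage of detractors."""
    categories = [nps_category(s) for s in scores]
    promoters = categories.count("promoter")
    detractors = categories.count("detractor")
    return 100 * (promoters - detractors) / len(categories)

scores = [10, 9, 8, 7, 6, 3, 10, 9]  # hypothetical survey responses
print(net_promoter_score(scores))
```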

The second part is where NPS survey analysis gets tough – the respondent is asked to explain why they responded as they did. It’s an open-ended response. For example, a customer may have given a 6 on the survey and then responded:

“The product is really great, I love the UX as its really easy to use. The bad thing about the service is the pricing, it’s quite expensive”

This response provides MUCH more information, and now, with the help of machine learning topic analysis, it can be analyzed automatically. Let’s take a look at this real use case:

Retently used MonkeyLearn to analyze NPS responses. They created a topic classifier and trained it to tag each response with different topics like Product UX, Customer Support and Ease of Use. Then, they grouped the Promoters, Passives, and Detractors to determine the most prevalent tags in each group’s feedback. The result looked like this:

Combining topic analysis with sentiment analysis and keyword extraction is a powerful approach that enables you to see beyond the NPS score and really understand how your customers feel about your product, and what aspects they appreciate or criticize.
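A grouping like the one described in this use case can be sketched in a few lines. This is not Retently's actual code; the responses and tags below are hypothetical and would normally come from a topic classifier.

```python
from collections import Counter, defaultdict

# Hypothetical tagged responses: (NPS score, topic tags assigned by a classifier).
responses = [
    (10, ["Ease of Use", "Product UX"]),
    (9, ["Ease of Use"]),
    (8, ["Customer Support"]),
    (4, ["Customer Support", "Product UX"]),
    (2, ["Customer Support"]),
]

def group_of(score):
    """NPS group for a 0-10 score."""
    return "Promoters" if score >= 9 else "Passives" if score >= 7 else "Detractors"

# Tally how often each tag shows up within each NPS group.
tags_by_group = defaultdict(Counter)
for score, tags in responses:
    tags_by_group[group_of(score)].update(tags)

for group in ("Promoters", "Passives", "Detractors"):
    print(group, tags_by_group[group].most_common(2))
```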

Analyzing Customer Surveys

Whether it’s emails or online surveys, if you have lots of open-ended questions to tag, machine learning can handle it! Forget about time-consuming manual tasks and get results quickly and simply. MonkeyLearn even integrates directly with survey tools you may already use, like Google Forms and SurveyMonkey, for streamlined survey data analysis.

Business Intelligence

This is the era of data. Business intelligence (BI) is an all-around, holistic approach to data analysis, collecting data from as many sources as possible for historical, real time, and predictive analyses. By taking advantage of insightful and actionable information, companies are able to improve their decision-making processes, stand out from their competitors, identify trends and spot problems before they escalate.

Use topic analysis to find recurrent themes in a set of data, and obtain valuable insights about what’s important to your customers. Once you’ve done that, you can also run an aspect-based sentiment analysis to add an extra layer of analysis and gain a deeper understanding about how customers feel about each of those topics.

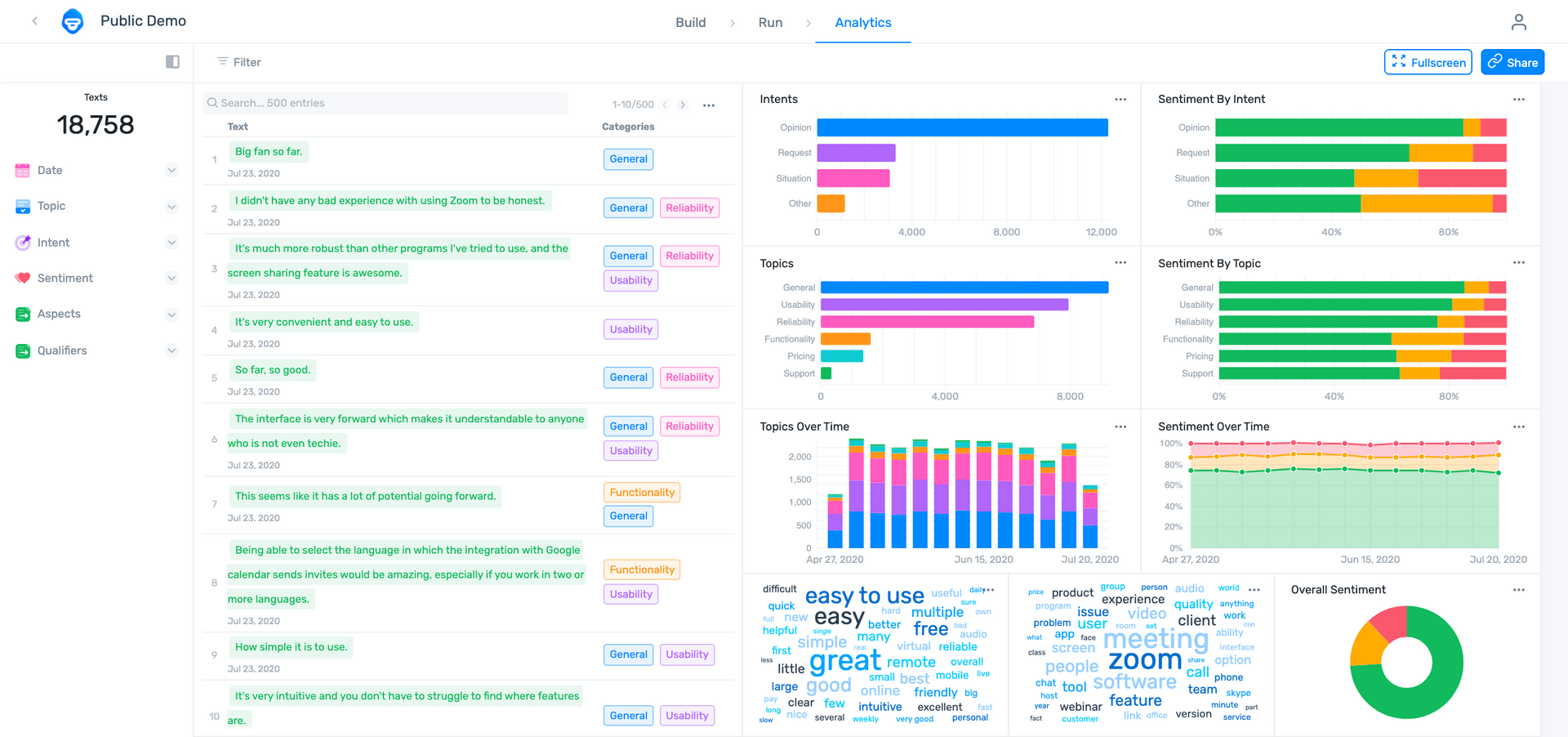

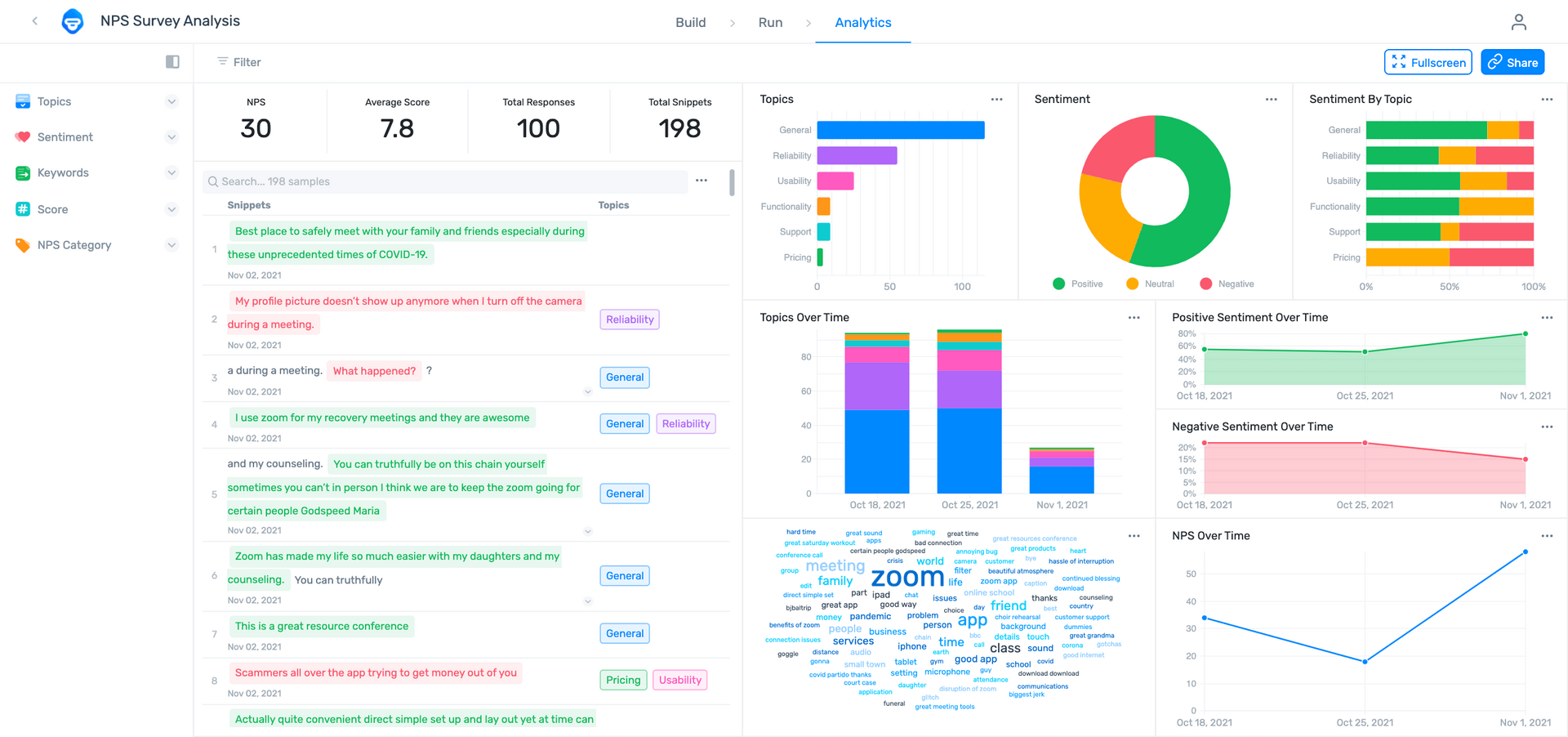

MonkeyLearn is more than a text analysis tool. It also provides in-depth data visualization. Once you’ve gathered all your data, and analyzed it, you’ll get a striking dashboard to share with your team. Here’s an example of a MonkeyLearn dashboard that shows the results of an analysis on Zoom reviews. Filter by sentiment, topic, keyword, and more, to see the detailed insights you can discover.

Want to see for yourself how it works? Book a demo with our team.

Sales and Marketing

Lead qualification is one of the top challenges for sales teams. Only the leads that fit your ideal buyer persona can be qualified as good prospects for your product or service, and identifying them often requires tons of tedious research and manual tasks. What if you could use topic analysis to partially automate lead qualification and help make it even more effective?

Xeneta, a company that provides sea freight market intelligence, is doing exactly that. Machine learning is helping them predict the quality of their leads based on company descriptions. Basically, they trained an algorithm to do a job that used to take them dozens of hours of manual processing.

Productivity influencer Ari Meisel is also using machine learning to identify potential customers. He trained a classifier model with MonkeyLearn that analyzes e-mails enriched with publicly available data and is able to predict if a contact is a good fit for any of the services he offers.

Another exciting use case of machine learning topic detection in sales is Drift. On its mission to connect salespeople with the best leads, the company is using MonkeyLearn to automatically filter outbound email responses and manage unsubscribe requests. Thanks to natural language processing, they avoid sending unwanted emails by allowing recipients to opt out based on how they reply. That way, they save their sales team valuable time.

Intent classification is another great topic analysis method that can automatically classify email responses to marketing campaigns into categories such as Interested, Not Interested, and Email Bounce.

Product Analytics

One of the main challenges for product managers tasked with improving their products is to look both at the details and the “bigger picture.” When it comes to the customer journey, for example, product managers should be able to effectively anticipate a customer’s needs and take action based on customer feedback.

Text analysis can be used to analyze customer interactions and automatically detect areas for improvement.

Let’s say you’re analyzing data from customer support interactions and you see a spike in the number of people asking how to use a new feature. This may indicate that the explanation about how to use the feature is unclear, so you need to improve the UI/UX – or any documentation about that feature.
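One simple way to notice such a spike automatically is to compare each day's count for a topic with its recent history. The sketch below is one possible heuristic, not a method prescribed by this guide; the `window` and `threshold` values and the daily counts are assumptions chosen for illustration.

```python
from statistics import mean, stdev

def find_spikes(daily_counts, window=7, threshold=3.0):
    """Return indexes of days whose count exceeds the trailing mean
    by more than `threshold` standard deviations."""
    spikes = []
    for day in range(window, len(daily_counts)):
        history = daily_counts[day - window:day]
        baseline, spread = mean(history), stdev(history)
        # Use a floor of 1.0 so a flat history doesn't flag tiny changes.
        if daily_counts[day] > baseline + threshold * max(spread, 1.0):
            spikes.append(day)
    return spikes

# Hypothetical daily counts of tickets tagged "How to use feature X".
counts = [4, 5, 3, 6, 4, 5, 4, 5, 21, 6]
print(find_spikes(counts))
```

Here the day with 21 tickets stands out against a week of single-digit counts, which is the kind of signal worth investigating.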

Knowledge Management

Organizations generate a huge amount of data every day. In this context, knowledge management aims to provide the means to capture, store, retrieve, and share that data when needed. Topic detection has enormous potential when it comes to analyzing large datasets and extracting the most relevant information out of them.

This could transform industries like healthcare, where tons of complex data is produced every second (and the volume is expected to grow explosively in the next few years) but is extremely difficult to access when needed. Topic analysis makes it possible to classify data by disease, symptoms, treatments, and more, so it can be accessed quickly when needed and even used to find patterns and other relevant insights.

Take a look at some resources below to find more information about NLP topic detection, classification, and modeling, and text analysis overall. Then we’ll show you how easy it is to get started with topic analysis models and simple, step-by-step tutorials.

Resources

You’re probably eager to get started with topic analysis, but you may not know where to begin. The good news is that there are many useful tools and resources.

Implementing the algorithms we discussed earlier can be a great exercise to understand how they work. However, if you don’t have years of data science and coding experience, you’re probably better off sticking to SaaS solutions. SaaS text analysis tools can be implemented right away, are much less costly, and can be trained to be just as effective as building models from scratch. Take a look at The Build vs. Buy Debate to learn more.

Open source libraries

If you are going to code yourself, there is a plethora of open source libraries available in many programming languages to do topic analysis. Whether you're using topic modeling or topic classification, here are some useful pointers.

Topic analysis in Python

Python has grown in recent years to become one of the most important languages of the data science community. Easy to use, powerful, and with a great supportive community behind it, Python is ideal for getting started with machine learning and topic analysis.

Gensim is the first stop for anything related to topic modeling in Python. It has support for performing both LSA and LDA, among other topic modeling algorithms, and implementations of the most popular text vectorization algorithms.

NLTK is a library for everything NLP-related. Its main purpose is to process text: cleaning it, splitting paragraphs into sentences, splitting up words in those sentences, and so on. Since it's for working with text in general, it's useful for both topic modeling and classification. This library is not the quickest of its kind (that title probably goes to spaCy), but it's powerful, easy to use, and comes with plenty of tidy corpora to use for training.

Scikit-learn is a simple library for everything related to machine learning in Python. For topic classification, it's a great place to start: from cleaning datasets and training models, to measuring results, scikit-learn provides tools to do it all. And, since it's built on top of NumPy and SciPy, it's quite fast, as well.

Topic analysis in R

R is a language that’s popular with the statistics crowd at the moment. Since there are a lot of statistics involved in topic analysis, it's only natural to use R to solve stat-based problems.

For topic modeling, try the topicmodels package. It pairs well with tidytext, which uses tidy data principles to make text analysis tasks easier and more effective.

Caret is a great package for doing machine learning in R. This package offers a simple API for trying out different algorithms and includes other features for topic classification such as pre-processing, feature selection, and model tuning.

mlr (short for machine learning in R) is an alternative R package for training and evaluating machine learning models.

Topic Analysis SaaS APIs

For non-coders, SaaS tools are definitely the way to go. Otherwise, you’d have to hire a whole team of developers, which could take months and cost in the hundreds of thousands of dollars.

SaaS APIs usually only require a few lines of code to call and most integrate with tools you already use, so you don’t need to learn whole new systems.

MonkeyLearn offers a suite of SaaS topic analysis and many more text analysis tools that can be called with just 10 lines of code and custom-tailored to the language and needs of your business, usually in just a few minutes.

Try out the Intent and Email Classifier, for example, that’s pre-trained to understand the reason behind email responses and classify them into topics: Autoresponder, Email Bounce, Interested, Not Interested, Unsubscribe, or Wrong Person.

Or take a look at the other pre-trained text analysis models below to see how they work:

- Keyword Extractor: find the most used and most important keywords in your own text

- Sentiment Analyzer: classify text by opinion polarity (positive, negative, neutral)

- Word Cloud Generator: a keyword clustering tool that groups keywords by size, according to their importance within the text

The MonkeyLearn API offers simple SDKs for pre-trained models and tutorials to teach you how to train your own. And with MonkeyLearn Studio you can chain together all the analyses you need and have them work in concert, automatically, then visualize your results. It all works in a single dashboard, so you no longer have to upload and download between applications.

Other great SaaS topic analysis solutions:

- Amazon Comprehend

- IBM Watson

- Google Cloud NLP

- Aylien

- MeaningCloud

- BigML

Papers About Topic Modeling and Topic Classification

If you would like to learn more about the finer details of how topic analysis works, the following papers are a good starting point:

- An Introduction to Latent Semantic Analysis (Landauer, Foltz and Laham, 1998)

- Indexing by Latent Semantic Analysis (Deerwester et al., 1990)

- Latent Dirichlet Allocation (Blei, Ng and Jordan, 2003)

- An empirical study of the naive Bayes classifier (Rish, 2001)

- Text categorization with Support Vector Machines: Learning with many relevant features (Joachims, 1998)

Courses and Lectures

There are online courses for students at any stage of their topic analysis journey.

For an explanation of topic modeling in general, check out this lecture from the University of Michigan's text mining course on Coursera. There, you can also find this lecture covering text classification. Plus, this course on Udemy covers NLP in general and several aspects of topic modeling as well.

If you are looking for lectures on some of the algorithms covered in this piece, check out:

- Naive Bayes, explained by Andrew Ng.

- Support Vector Machines, a visual explanation with sample code.

- Deep Learning explained simply in four parts. This series is amazing for getting a sense of the idea behind deep learning.

- Latent Dirichlet Allocation explained in a simple and understandable way. For a more in-depth dive, try this lecture by David Blei, author of the seminal LDA paper.

Now, if what you're interested in is a pro-level course in machine learning, Stanford CS229 is a must. It's an advanced course for computer science students, so it's rated M for Math (which is great if that's what you're into). All the material is available online for free, so you can just hop in and check it out at your own pace.

Topic Analysis Tutorials

By now, you’re probably ready to dive in and make your own model. This section is split into two parts. First, we’ll provide step-by-step tutorials to build topic analysis models using Python (with NLTK, scikit-learn, and Gensim) and point you to a tutorial for R.

Then, we’ll show you how to build a classifier for topic analysis using MonkeyLearn.

Tutorials Using Open Source Libraries

Topic Classification in Python

For this, we will cover a simple example of creating a text classifier using NLTK and scikit-learn.

pip install nltk

pip install scikit-learn

Download a CSV with sample data for this classifier here.

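import csv

# Read every row of the CSV into a list of lists
# (UTF-8 is an assumption about the file's encoding)
with open('reviews.csv', newline='', encoding='utf-8') as csv_file:
    reviews = list(csv.reader(csv_file))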

This is a list of lists, representing the rows and columns of the CSV file. Every row in the CSV has three columns: the text, the sentiment for that text (we won't use that one in this tutorial) and the topic:

[
    ['Text', 'Sentiment', 'Topic'],
    ['Room safe did not work.', 'negative', 'Facilities'],
    ['Mattress very comfortable.', 'positive', 'Comfort'],
    ['No bathroom in room', 'negative', 'Facilities'],
    ...
]

First, let's do a bit of processing and cleaning of the data. When doing NLP, text cleanup and processing is a very important first step. Good models cannot be trained with dirty data.

For this purpose, we define a function to do the processing using NLTK. An important feature of this library is that it comes with several corpora ready to use. Since they can get pretty big, they aren't included with the library; they have to be downloaded with the download function.

import re
import nltk

# We need this dataset in order to use the tokenizer
# (newer NLTK releases may ask for 'punkt_tab' instead)
nltk.download('punkt')
from nltk.tokenize import word_tokenize

# Also download the list of stopwords to filter out
nltk.download('stopwords')
from nltk.corpus import stopwords

from nltk.stem.porter import PorterStemmer
stemmer = PorterStemmer()

# Build the stopword set once instead of reloading the list for every word
english_stopwords = set(stopwords.words('english'))

def process_text(text):
    # Make all the strings lowercase and remove non alphabetic characters
    text = re.sub('[^A-Za-z]', ' ', text.lower())
    # Tokenize the text; that is, separate every sentence into a list of words
    # Since the text is already split into sentences you don't have to call sent_tokenize
    tokenized_text = word_tokenize(text)
    # Remove the stopwords and stem each word to its root
    clean_text = [
        stemmer.stem(word) for word in tokenized_text
        if word not in english_stopwords
    ]
    # Remember, this final output is a list of words
    return clean_text

Now that we have that function ready, process the data:

# Remove the first row, since it only has the labels
reviews = reviews[1:]

texts = [row[0] for row in reviews]
topics = [row[2] for row in reviews]

# Process the texts so they are ready for training
# But transform the list of words back to string format to feed it to sklearn
texts = [" ".join(process_text(text)) for text in texts]

Each sentence is now a string of stemmed words with the stopwords removed, which is what we need to feed the algorithm.

texts looks like this now:

['room extrem small practic bed',
 'room safe work',
 'mattress comfort',
 'uncomfort thin mattress plastic cover rustl everi time move',
 'bathroom room',
 ...
]

With the cleanup out of the way, we are ready to start training. First, the texts must be vectorized, that is, transformed into numbers that we can feed to the machine learning algorithm.

We do this using scikit-learn.

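from sklearn.feature_extraction.text import CountVectorizer

# Keep only the 1,000 most frequent words as features
vectorizer = CountVectorizer(max_features=1000)
# One row per text, one column per word; toarray() turns the sparse matrix into a dense array
vectors = vectorizer.fit_transform(texts).toarray()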
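To see what this vectorization step produces, here is a plain-Python sketch of the bag-of-words idea. It is a simplified illustration, not scikit-learn's implementation: the `bag_of_words` helper is hypothetical, and it skips details such as CountVectorizer's own tokenization rules and the `max_features` cutoff.

```python
from collections import Counter

def bag_of_words(texts):
    """Return (vocabulary, vectors) where each vector counts vocabulary words in a text."""
    vocabulary = sorted({word for text in texts for word in text.split()})
    vectors = []
    for text in texts:
        counts = Counter(text.split())
        vectors.append([counts[word] for word in vocabulary])
    return vocabulary, vectors

sample = ["room safe work", "mattress comfort", "bathroom room"]
vocabulary, vectors = bag_of_words(sample)
print(vocabulary)
for vector in vectors:
    print(vector)
```

Each text becomes a row of word counts, with one column per vocabulary word, which is the numeric form a classifier can work with.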

Now, we separate our training data and our test data, in order to obtain performance metrics.

from sklearn.model_selection import train_test_split

# By default, 25% of the samples are held out for testing
vectors_train, vectors_test, topics_train, topics_test = train_test_split(vectors, topics)

Finally, we train a Naive Bayes classifier with the training set and test the model using the testing set.

from sklearn.naive_bayes import GaussianNB
from sklearn.metrics import classification_report

# GaussianNB expects dense arrays, which is why the vectors were converted with toarray()
classifier = GaussianNB()
classifier.fit(vectors_train, topics_train)

# Predict with the testing set
topics_pred = classifier.predict(vectors_test)

# ...and measure the accuracy of the results
print(classification_report(topics_test, topics_pred))

This outputs something like this:

             precision    recall  f1-score   support

Cleanliness       0.43      0.43      0.43         7
    Comfort       0.52      0.57      0.54        23
 Facilities       0.55      0.50      0.52        22

avg / total       0.52      0.52      0.52        52

It's not a stellar performance, but considering the size of the dataset it's not bad. If you don't know what precision, recall, and f1-score are, they're explained in the Metrics and Evaluation section. Support for a category is simply how many samples there were in that category.
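For readers who want to see how the columns of that report are derived, the sketch below computes precision, recall, F1, and support for a single class from scratch. The labels are hypothetical and are not the tutorial's actual predictions; `per_class_metrics` is an illustrative helper, not a scikit-learn function.

```python
def per_class_metrics(y_true, y_pred, label):
    """Precision, recall, F1, and support for one class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)  # correctly predicted as label
    fp = sum(1 for t, p in pairs if t != label and p == label)  # wrongly predicted as label
    fn = sum(1 for t, p in pairs if t == label and p != label)  # label that was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1, tp + fn

# Hypothetical labels for six reviews.
y_true = ["Comfort", "Comfort", "Facilities", "Cleanliness", "Facilities", "Comfort"]
y_pred = ["Comfort", "Facilities", "Facilities", "Cleanliness", "Comfort", "Comfort"]

for label in sorted(set(y_true)):
    print(label, per_class_metrics(y_true, y_pred, label))
```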

From here, the model can be tweaked and tested again in order to get better results. A good starting point is the parameters of the CountVectorizer.

Of course, this is a very simple example, but it illustrates all the steps required for building a classifier: obtain the data, clean it, process it, train a model, and iterate.

Using this same process you can also train a classifier for sentiment analysis, with the sentiment tags included in the dataset that we didn't use in this tutorial.

Topic Modeling in Python

For topic modeling we will use Gensim.

We'll be building on the preprocessing done in the previous tutorial, so we just need to worry about getting Gensim up and running:

pip install gensim

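We pick up halfway through the classifier tutorial, right after pulling the raw review texts out of the CSV rows and before joining the processed words back into strings. We leave each text as a list of words, since Gensim accepts that as input. Then, we create a Gensim dictionary from the data and convert each text to the bag of words format:

from gensim import corpora

# texts must hold the raw review strings here (row[0] of each CSV row),
# not the joined strings produced at the end of the classifier tutorial
texts = [process_text(text) for text in texts]
dictionary = corpora.Dictionary(texts)
corpus = [dictionary.doc2bow(text) for text in texts]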

After that, we're ready to go. It's important to note that here we're just using the review texts, and not the topics that come with the dataset. Using this corpus and dictionary, we train an LDA model, instructing Gensim to find three topics in the data:

from gensim import models

model = models.ldamodel.LdaModel(corpus, num_topics=3, id2word=dictionary, passes=15)

# Named lda_topics so it doesn't overwrite the topics labels from the classifier tutorial
lda_topics = model.print_topics(num_words=3)
for topic in lda_topics:
    print(topic)

And that's it! The code will print out the mixture of the most representative words for three topics:

(0, '0.034*"room" + 0.021*"bathroom" + 0.013*"night"')

(1, '0.056*"bed" + 0.043*"room" + 0.024*"comfort"')

(2, '0.059*"room" + 0.033*"clean" + 0.023*"shower"')

Interestingly, the algorithm identified words that look a lot like keywords for our original Facilities, Comfort and Cleanliness topics.
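If you want to work with those printed topics programmatically, one option is to parse the strings into word and weight pairs. The `parse_topic` helper below is a hypothetical convenience written for this example; it simply reuses the sample output shown above.

```python
import re

def parse_topic(topic_string):
    """Turn a printed topic string into a list of (word, weight) pairs."""
    pairs = re.findall(r'([0-9.]+)\*"([^"]+)"', topic_string)
    return [(word, float(weight)) for weight, word in pairs]

printed_topics = [
    (0, '0.034*"room" + 0.021*"bathroom" + 0.013*"night"'),
    (1, '0.056*"bed" + 0.043*"room" + 0.024*"comfort"'),
    (2, '0.059*"room" + 0.033*"clean" + 0.023*"shower"'),
]

for topic_id, topic_string in printed_topics:
    words = [word for word, _ in parse_topic(topic_string)]
    print(topic_id, words)
```

Gensim's LDA model also has a `show_topic` method that returns word and weight pairs directly, which avoids string parsing when you have the model object at hand.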

Since this is a toy example with few texts (and we know their topic) it isn't very useful, but this example illustrates the basics of how to do topic modeling using Gensim.

Topic Modeling in R

If you want to do topic modeling in R, we urge you to try out the Tidy Topic Modeling tutorial for the topicmodels package. It's straightforward and explains the basics for doing topic modeling using R.

Using MonkeyLearn Templates

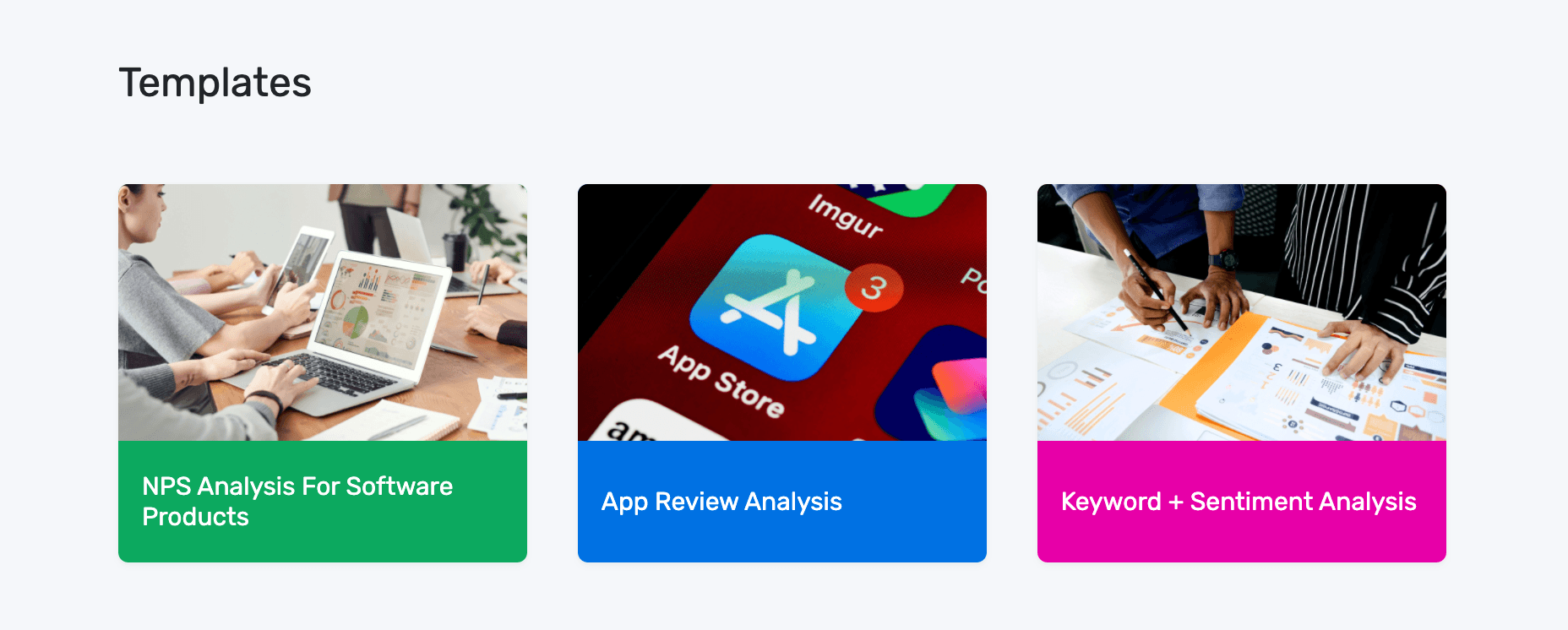

With MonkeyLearn, you can gain access to our templates, which are really easy to use if you’re looking for a completely code-free experience.

Here’s how our templates work:

1. Choose your template

For this quick walkthrough of how to use our templates, we’ve selected the NPS analysis template.

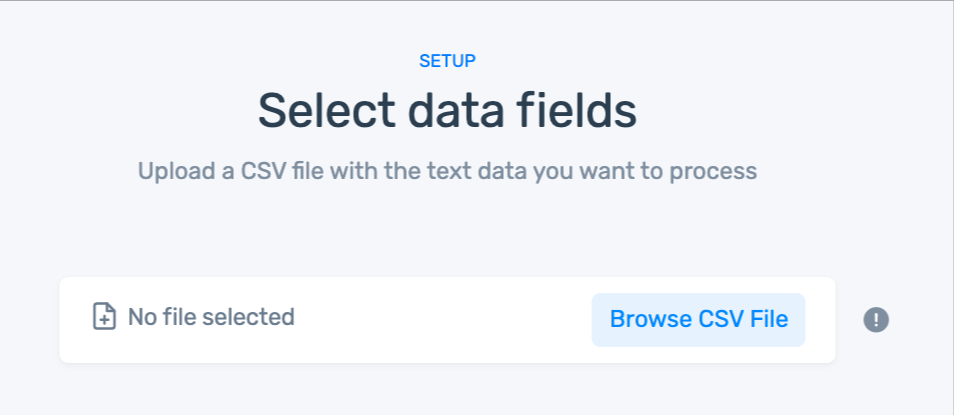

2. Upload your data:

If you don't have a CSV file:

- You can use our sample dataset.

- Or, download your own survey responses from the survey tool you use with this documentation.

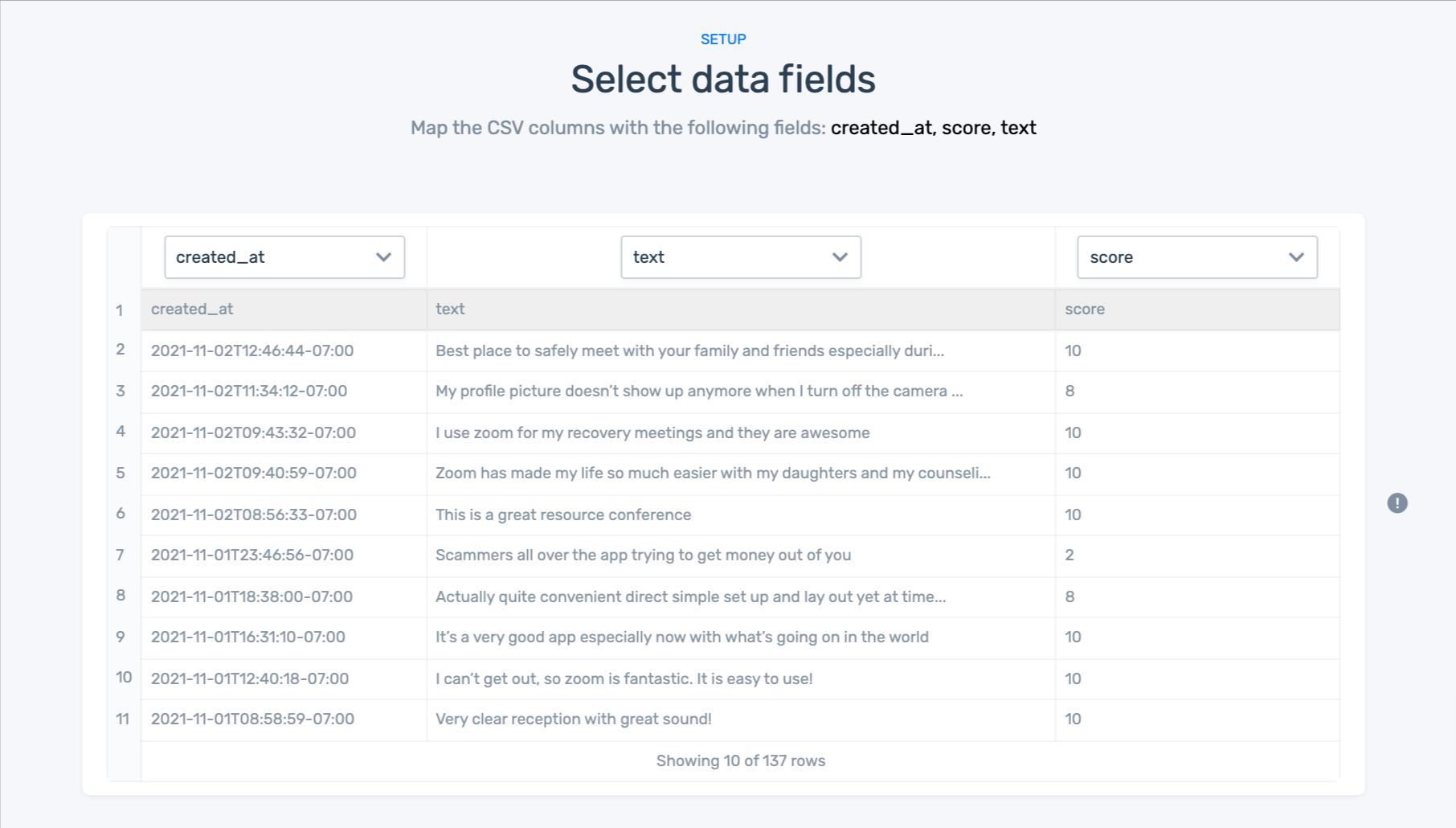

3. Match your data to the right fields in each column:

Fields you'll need to match:

- created_at: Date that the response was sent.

- text: Text of the response.

- score: NPS score given by the customer.
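Before uploading, it can help to check that your CSV actually contains these three fields. The sketch below is an optional pre-check written for this guide, not part of MonkeyLearn; the `check_nps_csv` helper and the sample rows are hypothetical.

```python
import csv
import io

REQUIRED_FIELDS = {"created_at", "text", "score"}

def check_nps_csv(file_obj):
    """Return a list of problems found in an NPS export; empty if it looks fine."""
    reader = csv.DictReader(file_obj)
    missing = REQUIRED_FIELDS - set(reader.fieldnames or [])
    if missing:
        return [f"missing column: {name}" for name in sorted(missing)]
    problems = []
    for line_number, row in enumerate(reader, start=2):  # header is line 1
        if not row["score"].isdigit() or not 0 <= int(row["score"]) <= 10:
            problems.append(f"line {line_number}: score must be an integer from 0 to 10")
        if not row["text"].strip():
            problems.append(f"line {line_number}: empty response text")
    return problems

# Hypothetical export with one bad row.
sample = io.StringIO(
    "created_at,text,score\n"
    "2021-03-01,Love the UX,9\n"
    "2021-03-02,,11\n"
)
problems = check_nps_csv(sample)
print(problems)
```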

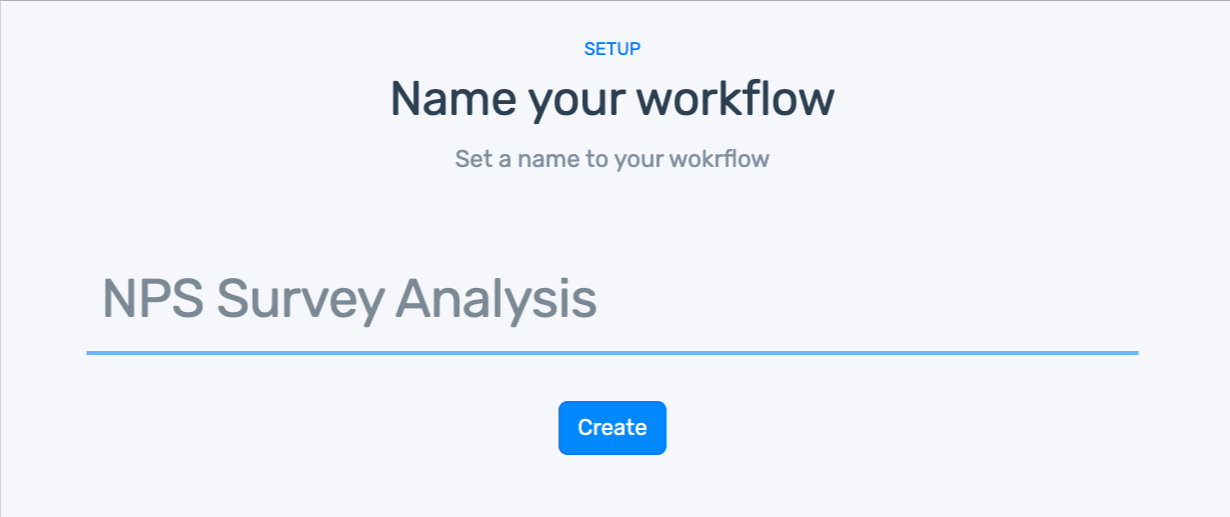

4. Name your workflow

5. Wait for MonkeyLearn to process your data

6. Explore your dashboard!

- Filter by topic, sentiment, keyword, score, or NPS category.

- Share via email with other coworkers.

Want to run your data through MonkeyLearn templates? Book a demo.

Final Words on Topic Analysis

Topic analysis makes it possible to detect topics and subjects within huge sets of text data in a fast and simple way. Topic classification allows you to automate your business, from customer service to social media monitoring and beyond. Thanks to topic analysis, you can accomplish complex tasks more effectively and obtain valuable insights from your data that will lead to better business decisions.

Sign up to MonkeyLearn to get started with topic analysis and other powerful text analysis tools.

Or book a demo to learn more about the insights you can get from your data.