Short Text Classification

With the explosive growth of social media, e-commerce, and online communication, short text classification has become a hot topic in recent years. Short text appears in many contexts, such as tweets, chat messages, search queries, product descriptions, and online reviews. Although the applications are wide-ranging, short text classification can be a challenging task.

For starters, short text is sparse: each example contains only a few features and offers little word co-occurrence information. This is why preprocessing the data is particularly important in short text classification. Stopword removal (i.e. the removal of words that carry no useful information for the classification task) and stemming (i.e. the conversion of words into their roots or stems) are useful techniques for reducing data sparsity and shrinking the feature space. Short text also usually doesn’t provide enough background information for a good similarity measure between different texts. Researchers have recently made great efforts to tackle these challenges, with word embeddings being one of the key breakthroughs in the field. Word embeddings build on the idea that words appearing in similar contexts tend to have similar meanings (e.g. football and basketball both tend to appear in contexts related to sports), so similar words end up with similar vector representations. Instead of using the conventional bag-of-words (BOW) model, short text classifiers can use word embeddings such as Word2Vec or GloVe to improve word representation, which often improves the accuracy of the model.
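To make the preprocessing steps above concrete, here is a minimal sketch of stopword removal and stemming in plain Python. The stopword list is a tiny illustrative sample, and `toy_stem` is a deliberately naive suffix stripper written for this example (it is not the Porter stemmer or any library's algorithm). The two example sentences are made up.

```python
import re

# Tiny illustrative stopword list (an assumption); real lists are much longer.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "i", "my", "this", "it", "to", "of", "and"}

# Suffixes stripped by the toy stemmer, checked in this order.
SUFFIXES = ("ing", "ed", "s")


def tokenize(text):
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def toy_stem(word):
    """Strip one common suffix, keeping at least three characters of stem."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    """Tokenize, drop stopwords, and stem the remaining tokens."""
    return [toy_stem(token) for token in tokenize(text) if token not in STOPWORDS]


def jaccard(tokens_a, tokens_b):
    """Share of distinct tokens that two token lists have in common."""
    a, b = set(tokens_a), set(tokens_b)
    return len(a & b) / len(a | b) if a | b else 0.0


first = "I charged the phones"
second = "Charging my phone is slow"

print(jaccard(tokenize(first), tokenize(second)))      # overlap on raw tokens
print(jaccard(preprocess(first), preprocess(second)))  # overlap after preprocessing
```

On raw tokens the two sentences share no words at all, so a bag-of-words model sees them as unrelated. After preprocessing, both contain `charg` and `phone`, and the overlap rises to two thirds.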

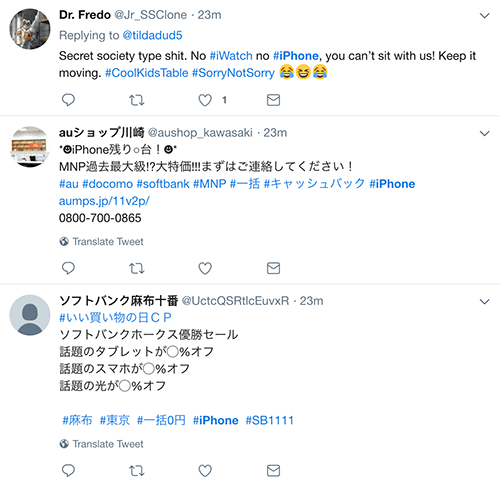

Short text is also particularly challenging to classify because of its messy nature: it often contains misspellings, grammatical errors, abbreviations, slang, unusual terms, emojis, and noise. Tweets are a clear example of these challenges; a quick search for #iphone shows how noisy short text data can be.

Advantages Over Long Text Classification

On the bright side, short text classification does have some advantages over long text classification. For example, creating a training dataset for short text classifiers is a faster and more efficient process; not only does a human annotator take less time to read and tag each example, but the decision process is also much simpler. Reading a full document with multiple pages and then deciding which tags or categories are appropriate is a complex and time-consuming task. Human annotators often face analysis paralysis while tagging full documents, which leads to inconsistencies and errors in the training data.

Short text classifiers are also easier to test and evaluate. Once you build a text classifier, a good way to find out how well it works is to test the model with new examples. With short text, you can try the model on several dozen new examples and manually check the predictions in a few minutes. Doing the same manual evaluation on longer documents is a far more daunting task.

Creating a Short Text Classifier

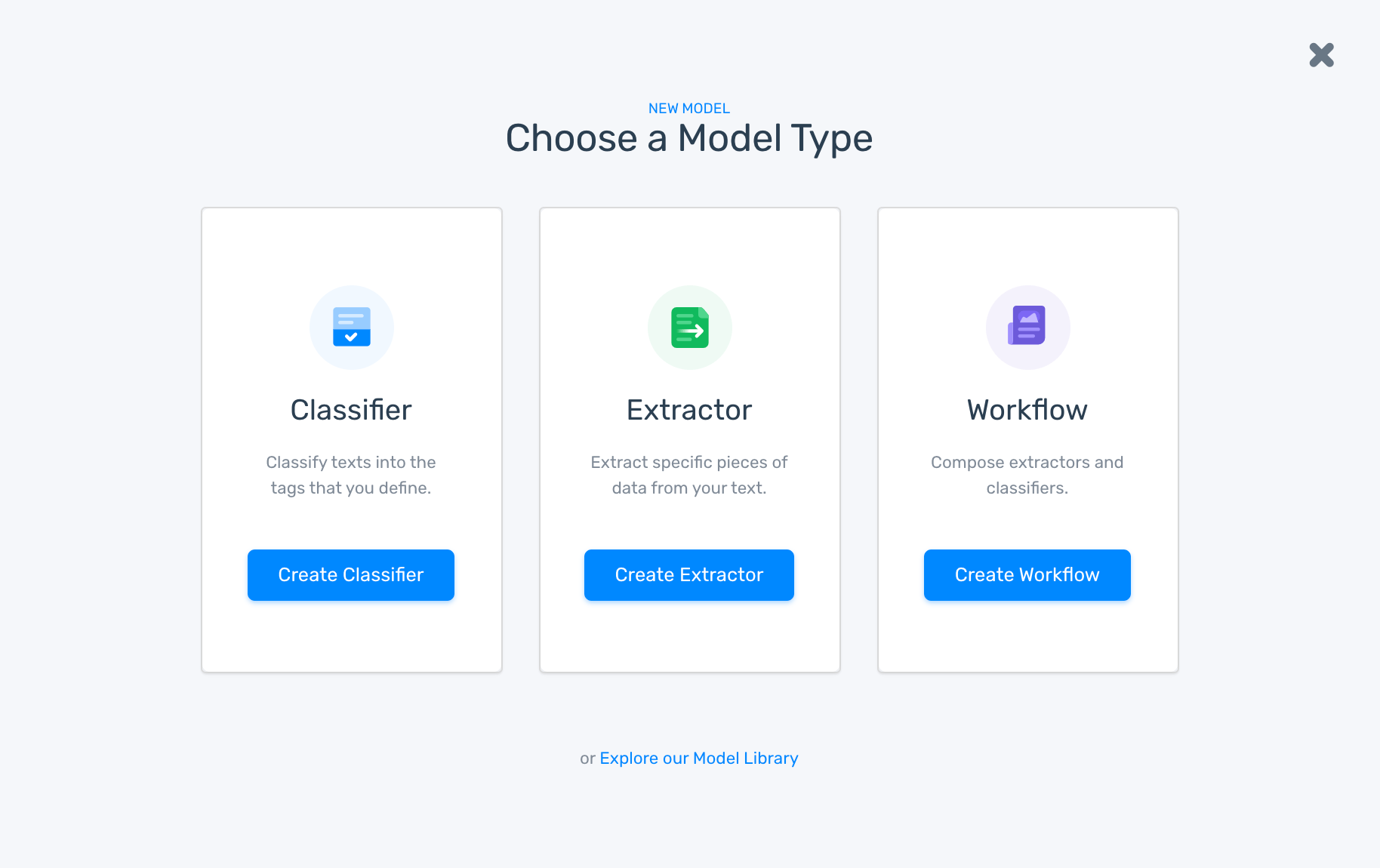

If you are interested in creating a classifier for short text, you can use MonkeyLearn and start experimenting right away. The platform makes it easy and straightforward to create text classifiers with machine learning. You just need to sign up for free, log in to the platform and select Create a Model:

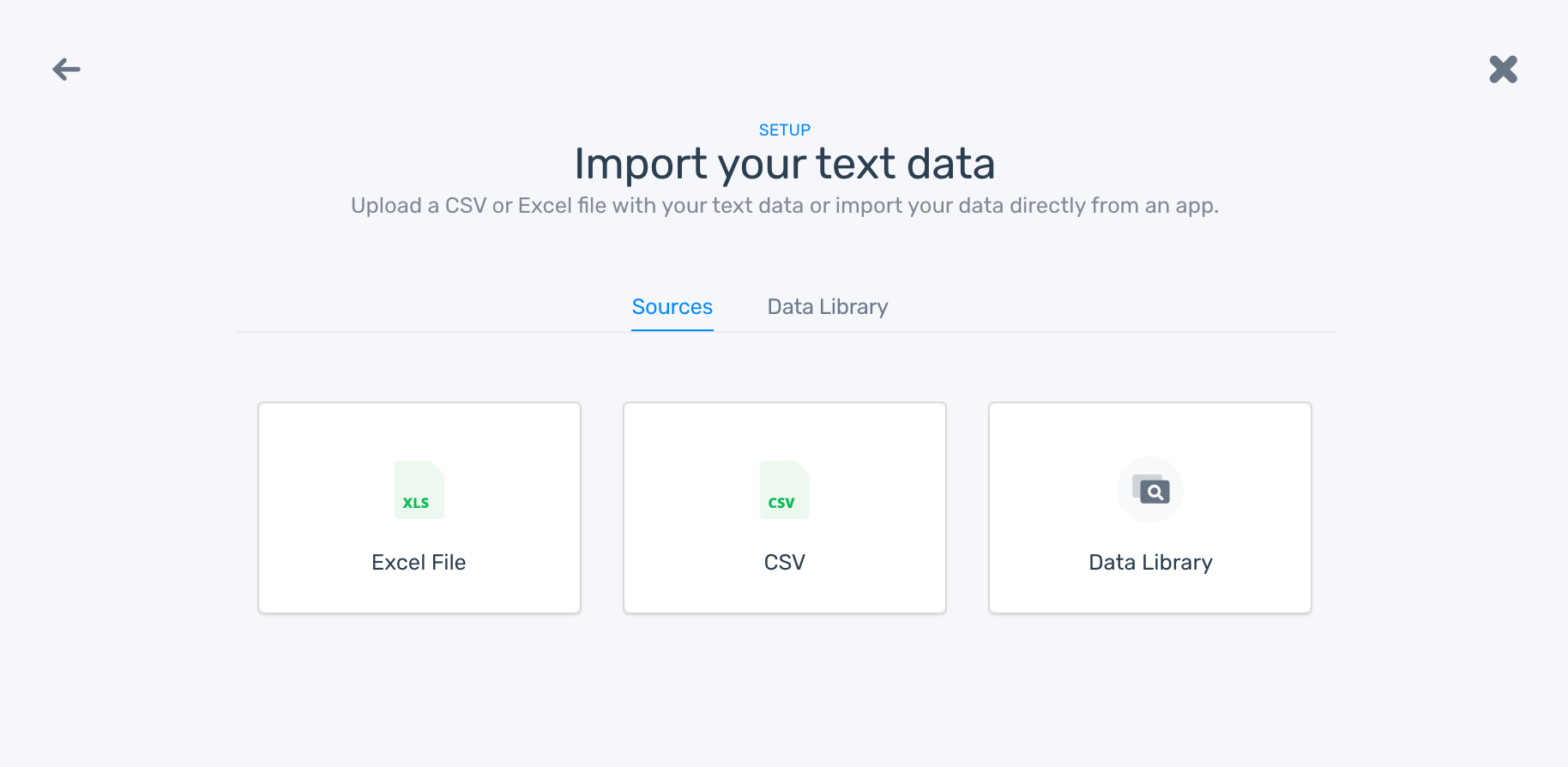

After selecting Classifier, you’ll need to upload the training data to the platform:

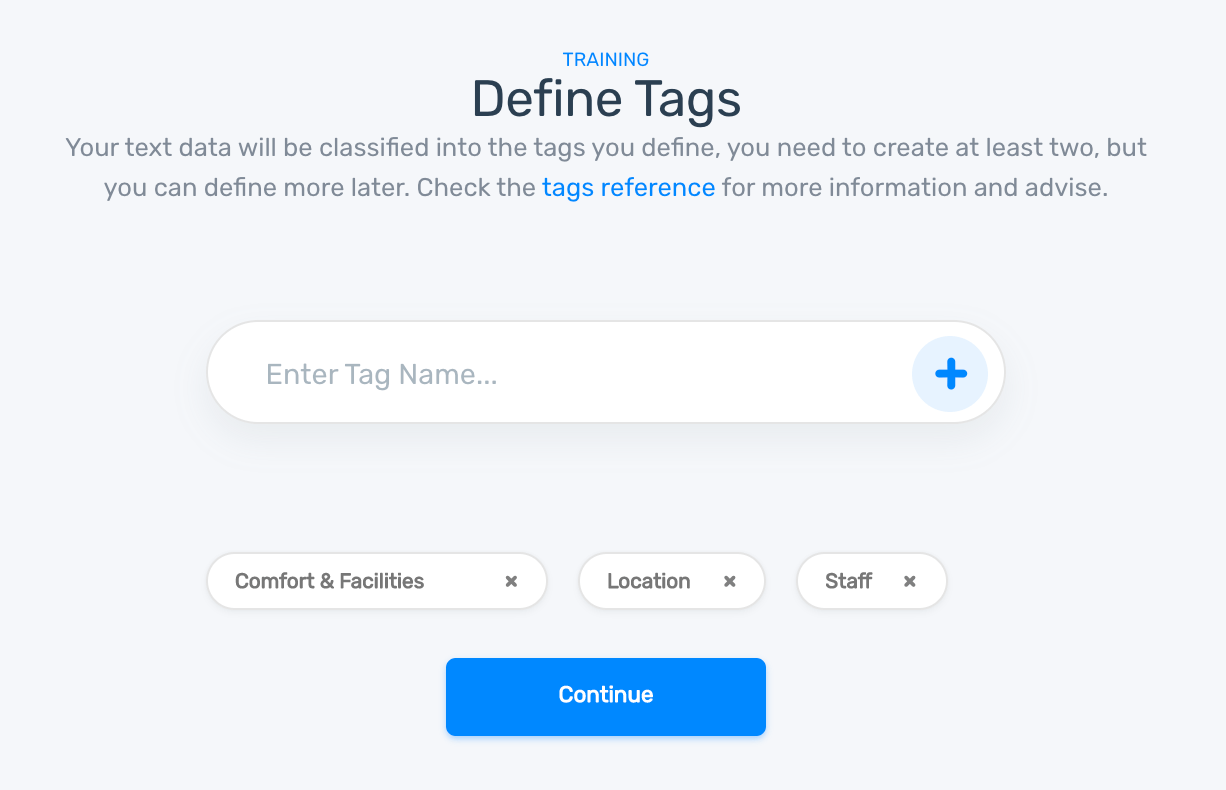

Next, you’ll need to define the tags or categories you want to use in your classifier:

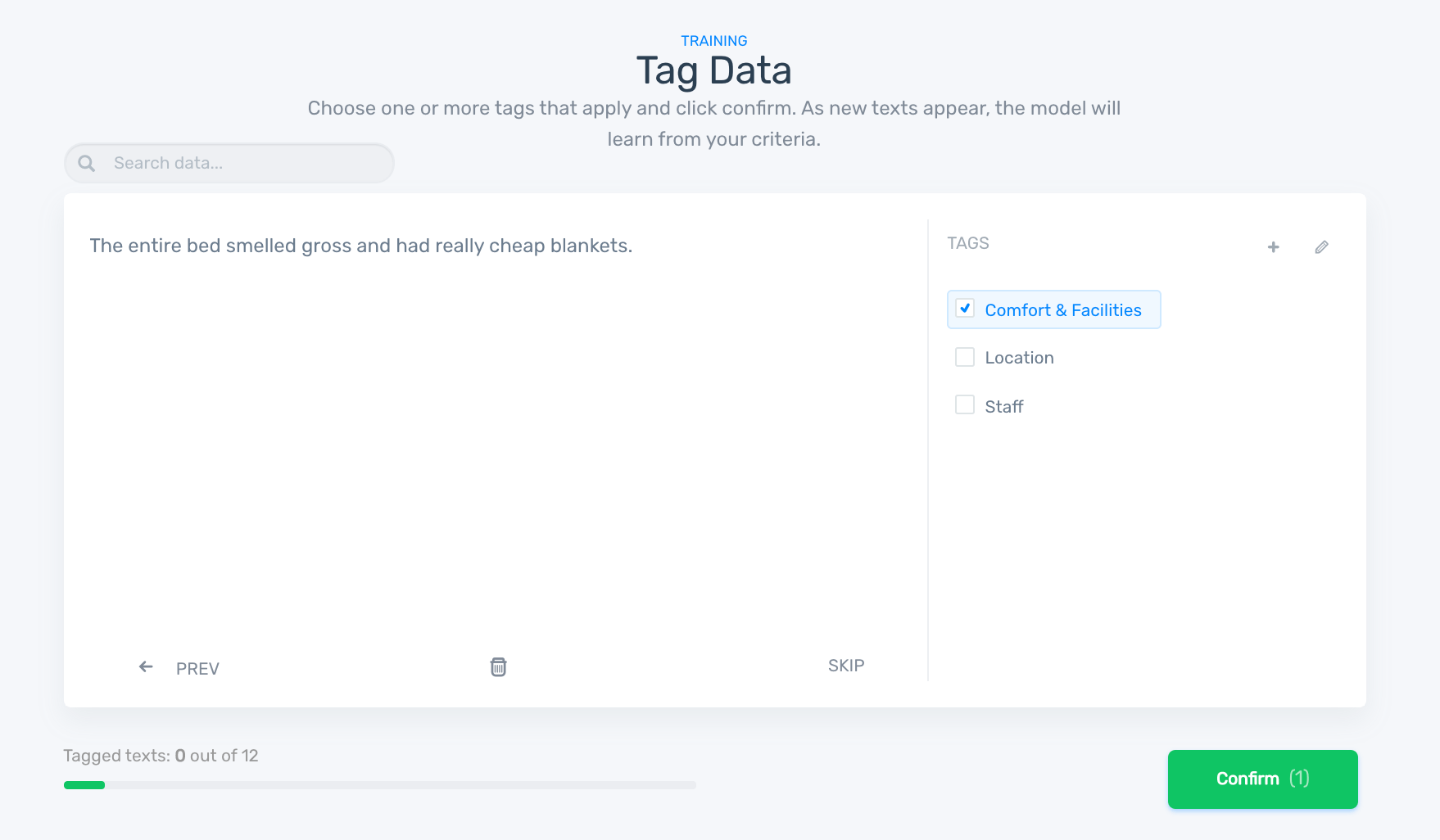

Finally, you’ll need to tag data with the appropriate categories to provide annotated examples to the algorithm and start training the classifier.

After you have tagged enough training data, the model will be trained and you’ll be able to try out the classifier by using the user interface:

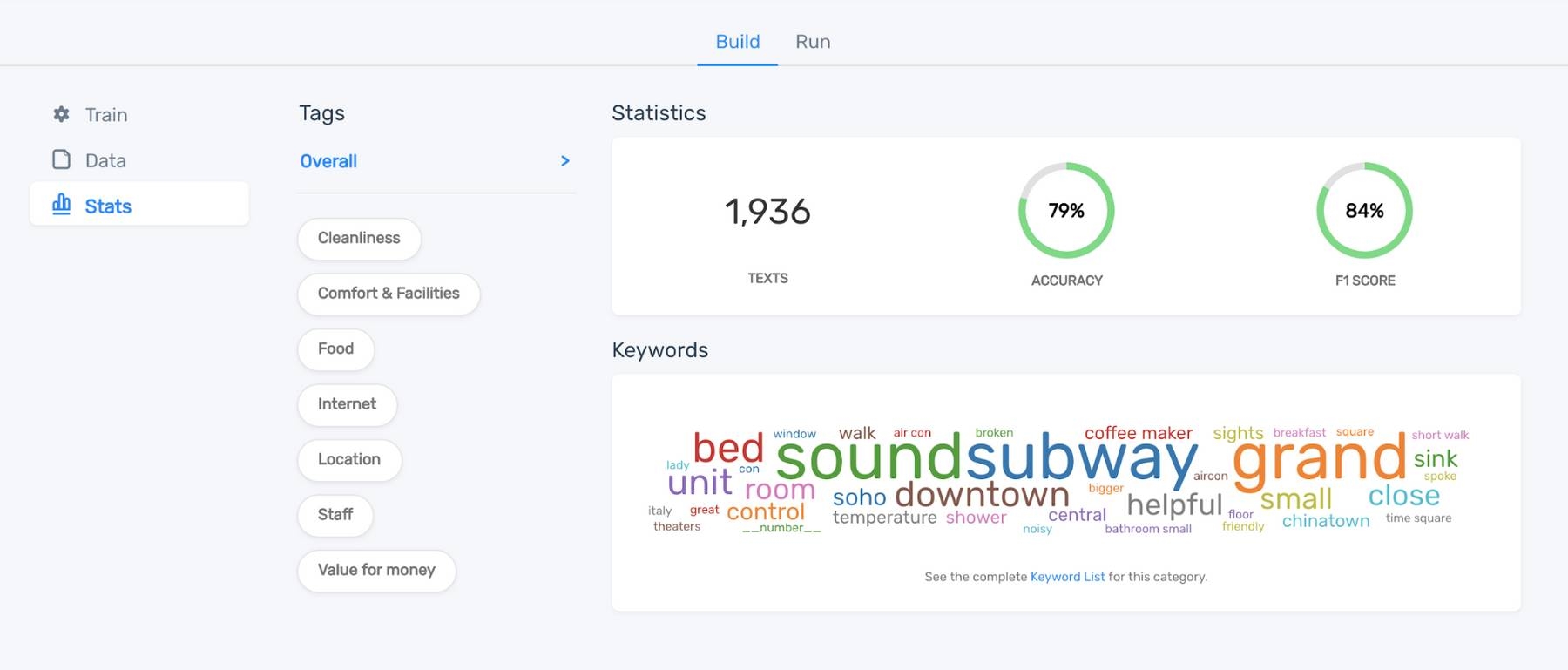

MonkeyLearn provides classifier metrics such as Accuracy, F1 Score, Precision, and Recall to help you evaluate the performance of the model.
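If you want to see what these four metrics measure, the sketch below computes them by hand for a single tag using their standard textbook definitions. It is only an illustration: the function name, the labels, and the predictions are made up, and the platform may aggregate its metrics differently (for example, per tag or averaged across tags).

```python
def classification_metrics(y_true, y_pred, positive):
    """Compute accuracy, precision, recall, and F1 for one tag of interest."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # true positives
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false positives
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # false negatives
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Made-up human tags and model predictions for eight short texts.
y_true = ["pos", "pos", "pos", "neg", "neg", "neg", "neg", "pos"]
y_pred = ["pos", "pos", "neg", "pos", "neg", "pos", "neg", "pos"]

metrics = classification_metrics(y_true, y_pred, positive="pos")
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this toy example the model gets 5 of 8 predictions right (accuracy 0.625). Of the 5 texts it tagged `pos`, 3 really were (precision 0.6), and it found 3 of the 4 true `pos` texts (recall 0.75). F1 is the harmonic mean of precision and recall.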

You can further improve the results of the classifier by tagging additional data, by working on the classifier metrics, or by using the keywords to troubleshoot classifier performance.

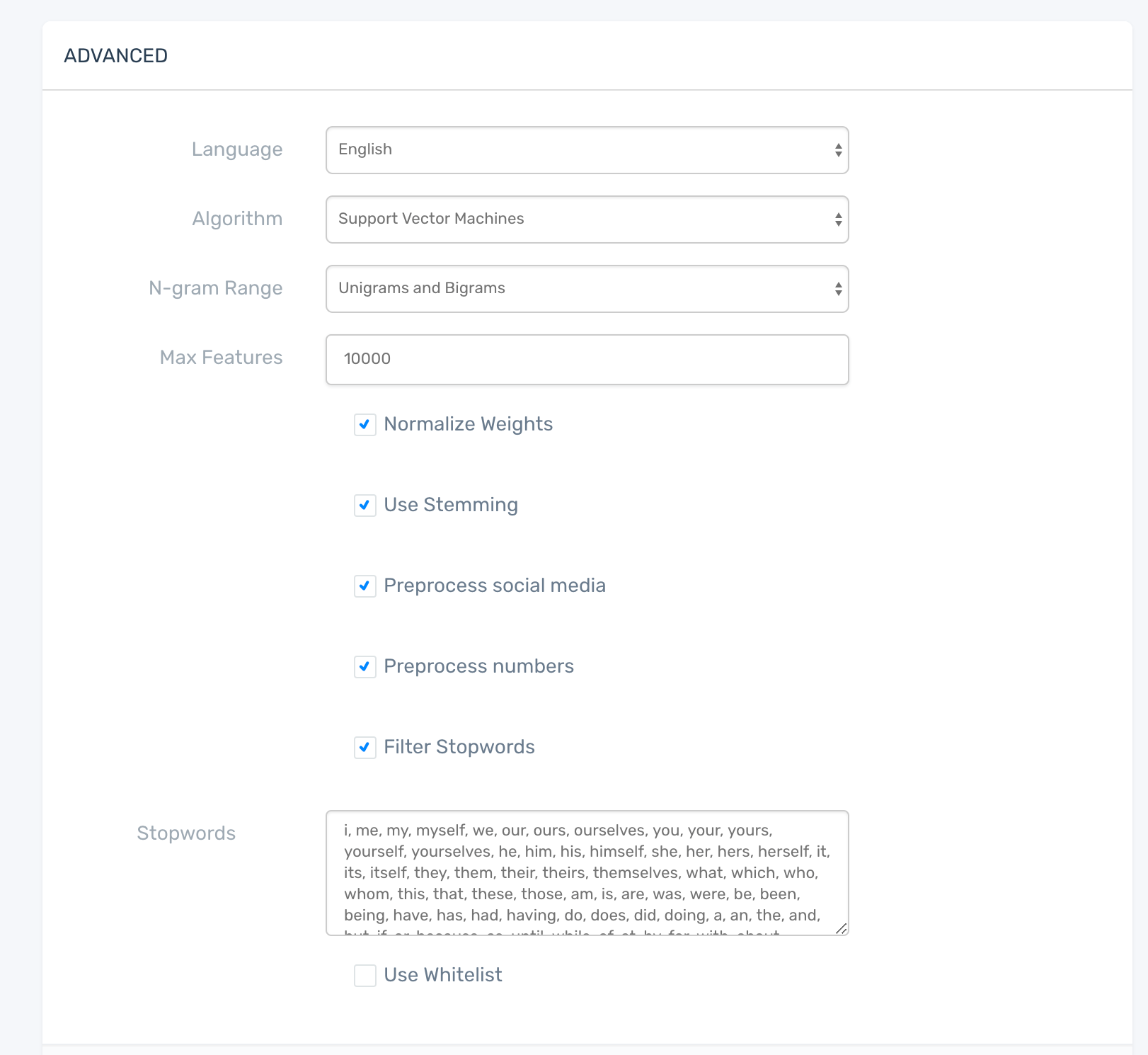

You can experiment with stopwords, stemming, and other advanced configurations under Settings.

Once you have the results you are looking for, you can put the classifier to work to analyze new data either by uploading a CSV or Excel file for batch analysis, by using the API with your favorite programming language, or by using integrations.
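As a rough illustration of what batch analysis over a CSV file involves, here is a minimal sketch that reads rows, classifies the text column, and writes the predicted tag to a new column. Note that `classify_text` is a hypothetical keyword rule standing in for a trained model; it is not the MonkeyLearn API, and the column names and sample rows are assumptions made for this example.

```python
import csv
import io


def classify_text(text):
    """Hypothetical stand-in for a trained classifier or an API call."""
    return "Complaint" if "broken" in text.lower() else "Other"


def classify_csv(in_file, out_file, text_column="text"):
    """Copy every row from in_file to out_file, adding a predicted 'tag' column."""
    reader = csv.DictReader(in_file)
    writer = csv.DictWriter(out_file, fieldnames=list(reader.fieldnames) + ["tag"])
    writer.writeheader()
    for row in reader:
        row["tag"] = classify_text(row[text_column])
        writer.writerow(row)


# In-memory files keep the example self-contained; real code would use open().
sample = io.StringIO("id,text\n1,Screen arrived broken\n2,Fast delivery\n")
result = io.StringIO()
classify_csv(sample, result)
print(result.getvalue())
```

Swapping the stub for a call to your trained model is the only change needed to run the same loop on real data.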