Your Guide to Data Cleaning & The Benefits of Clean Data

When using data to garner insights, you need to make sure that you have high-quality data.

This may seem obvious to most, but it’s often where businesses struggle.

George Fuechsel, an IBM programmer and instructor, first coined the term "garbage in, garbage out", as a way of reminding his students that a computer processes what it is given.

In other words, your insights will only be as good as the data you input.

You might have huge amounts of data that could help guide your business, but gathering and analyzing it isn’t enough.

You also need to clean your data.

In this guide, learn what data cleaning is, why it’s important, and discover the best tools for cleaning your data:

- What is Data Cleaning?

- Data Cleaning Importance

- The Data Cleaning Process

- Data Cleaning Tools

- Data Cleaning Challenges

Let’s start with a definition.

What Is Data Cleaning?

Data cleaning (also known as data cleansing or data scrubbing) is the process of correcting or removing corrupt, incorrect, or unnecessary data from a data set (or group of datasets) before data analysis. This way, you will analyze only relevant data, and your results will be more accurate.

There are a number of different data cleaning techniques you can use, depending on the type of data at your disposal and the type of analysis you wish to do.

Data cleaning can be a tedious process, but it’s absolutely necessary to get proper results and truly great insights. And certain tools can take a lot of the pain out of the procedure.

The Importance of Data Cleaning

Successful data cleaning measures will ensure that your analysis results are accurate and consistent.

We often hear about the power of data and the need for data-driven decision-making in business. But that only really works when you use clean data from the outset.

The problem with dirty data

“Dirty” data can actually do your business more harm than good.

Raúl Garreta, CEO and Co-Founder of MonkeyLearn, says “If your downstream process receives garbage as input data, the quality of your results will also be bad”.

Basically, if you work with dirty data, not only will you not get the most accurate results possible, but they may be skewed to the point that they’re actually detrimental. No one wants to be making “trash” data-driven decisions, which could affect your company’s trajectory for years.

The more bad data you use, and the longer you use it, the more it will cost your company – both in dollars and wasted time. This goes for both quantitative (structured) and qualitative (unstructured) data.

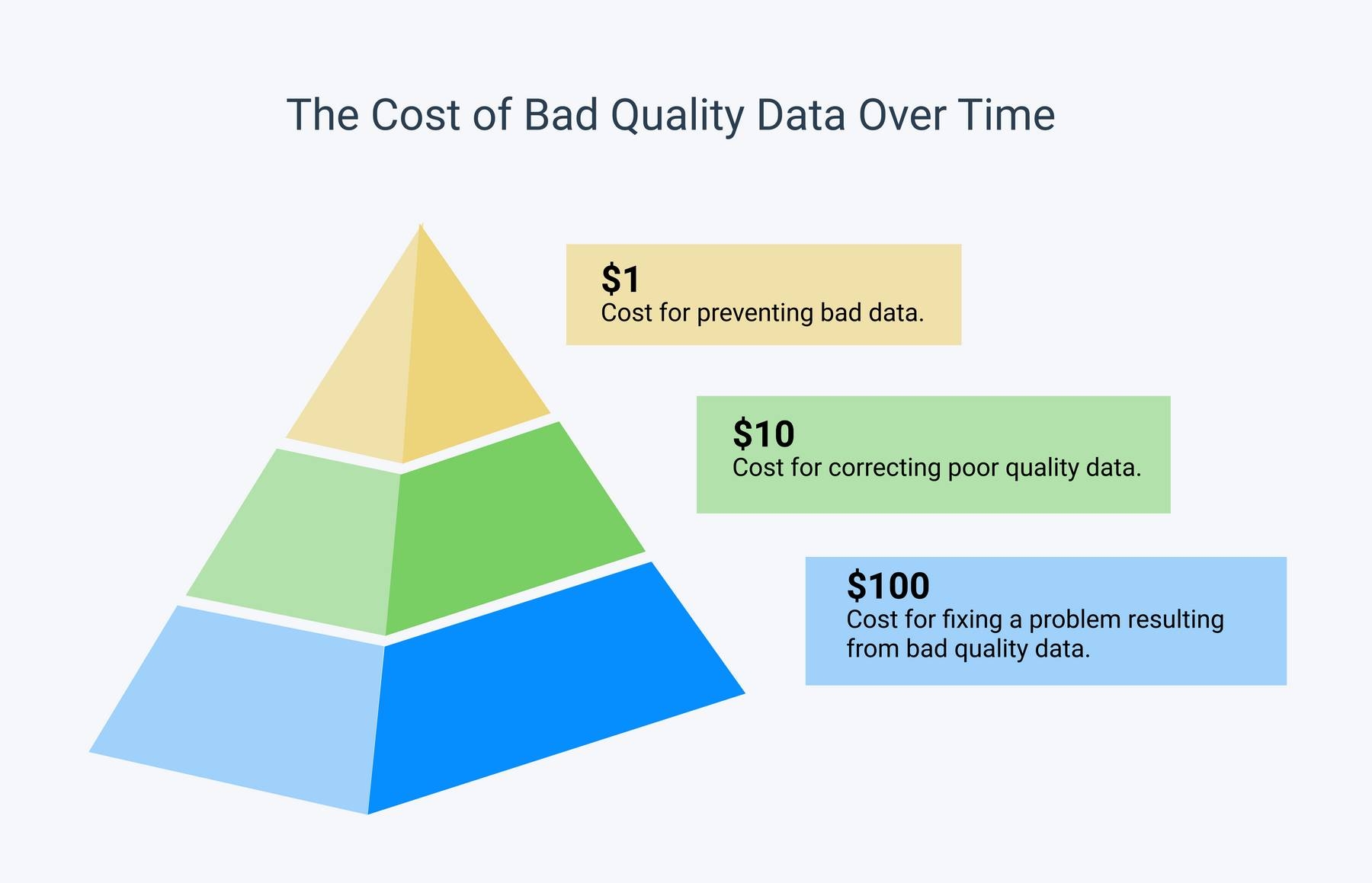

It’s the 1-10-100 principle:

Essentially, it makes more sense to invest $1 in prevention, than to spend $10 on correction or $100 on fixing a problem after failure.

In machine learning, for example, if you build or train a model based on bad records, the resulting machine learning model will provide poor predictions.

In fact, better data is even more important than more powerful algorithms. Any data scientist will tell you – data cleaning is often the most important step in machine learning.

Even in the most basic quantitative data analysis, if you have hundreds of records and data sets, but much of it is irrelevant or just noise, your resulting analysis will probably have no relationship whatsoever to your actual needs.

How does bad data affect your individual teams?

Dirty data will not only have a negative effect on your bottom line, it will also impact individual teams and your customers – 89% of business leaders say that inaccurate data prevents them from offering great customer experiences:

Marketing

If you’re not confident about the data in your marketing database then you could be marketing to the wrong audience at the wrong time. Think of all the wasted money spent on ill-fitting campaigns.

Customer Support

Dirty support data will lead to poor (and slow) ticket routing and make an already upset customer a potential churn. Even seemingly small things, like incorrectly bucketing customer issues can completely skew your results and lead to the wrong follow-up actions.

Product Team

You need to be sure that you’re focusing on the right updates and features. If your data is dirty then it will be hard to pinpoint very specific issues that need addressing.

Sales Team

When you’re working with dirty data, you’ll miss out on the best potential customers to target, and your sales will dwindle.

Clean data leads to:

- Streamlined processes because you’re not wasting time analyzing duplicate data that you’ve already worked with. And the downstream processes will all have the same good data.

- Increased productivity because you won’t be wasting time on unnecessary analysis. And you’ll be set up for better internal communication.

- Better overall decision making: good data means well-informed decisions.

So, just how do you ensure that you start out with the clean data you need?

The Data Cleaning Process

Data cleaning is not necessarily a “fun” process, but when you break it out into these 3 steps, it can be much less daunting.

- Step 1: Data exploring

- Step 2: Data filtering

- Step 3: Data cleaning

1. Data exploring

Data exploring is the first step to data cleaning – basically, a first look at your data. For this step, you’ll need to import your data to a spreadsheet, so you can view it properly.

- Look at the records. Dive in to understand what it all means and how it relates to your needs.

- Filter by fields or column values.

- Facet or segment data by field values.

This way you’ll start having an overview of what the data is about and start seeing patterns of what will eventually need to be cleaned: i.e., what records to remove and what records to clean.

Excel, Google Sheets, Airtable, and Open Refine, are all great options for this step. They all have functionalities to allow you to see a good amount of records at the same time, so you can filter them, search them, etc.

Additionally, a lot of these tools come with in-built analytics, so you can start gaining some high-level visual insights from your data:

- The distribution of the different values of a field (histograms)

- Number of records (pie charts)

- Length of the text fields (histogram)

- Recurring words (keyword clouds)

These will all be helpful to break up and examine your data.

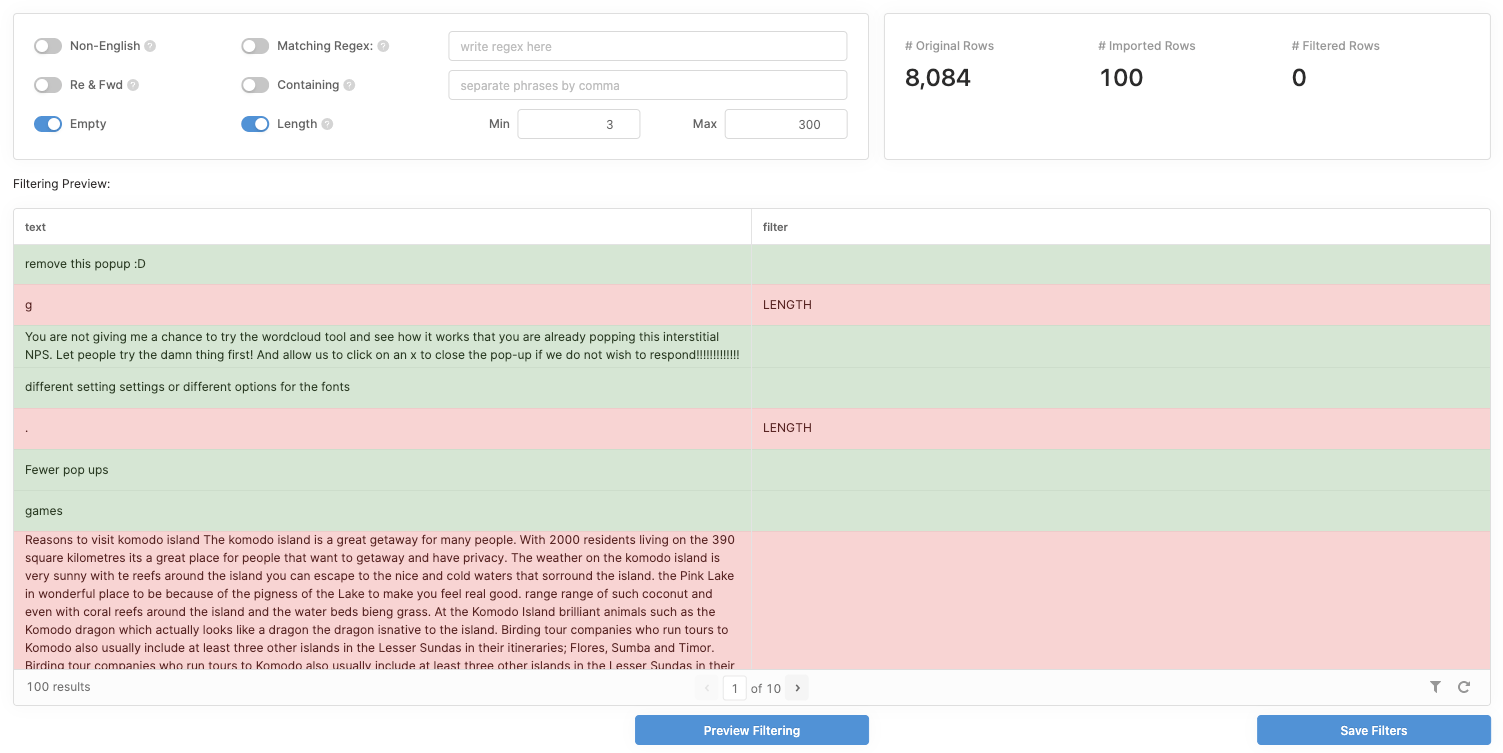

2. Data filtering

Do some data filtering to remove records that won’t be relevant to whatever downstream process you have planned on top of the data – whether it be data analysis, data warehousing, data modeling, machine learning, etc.

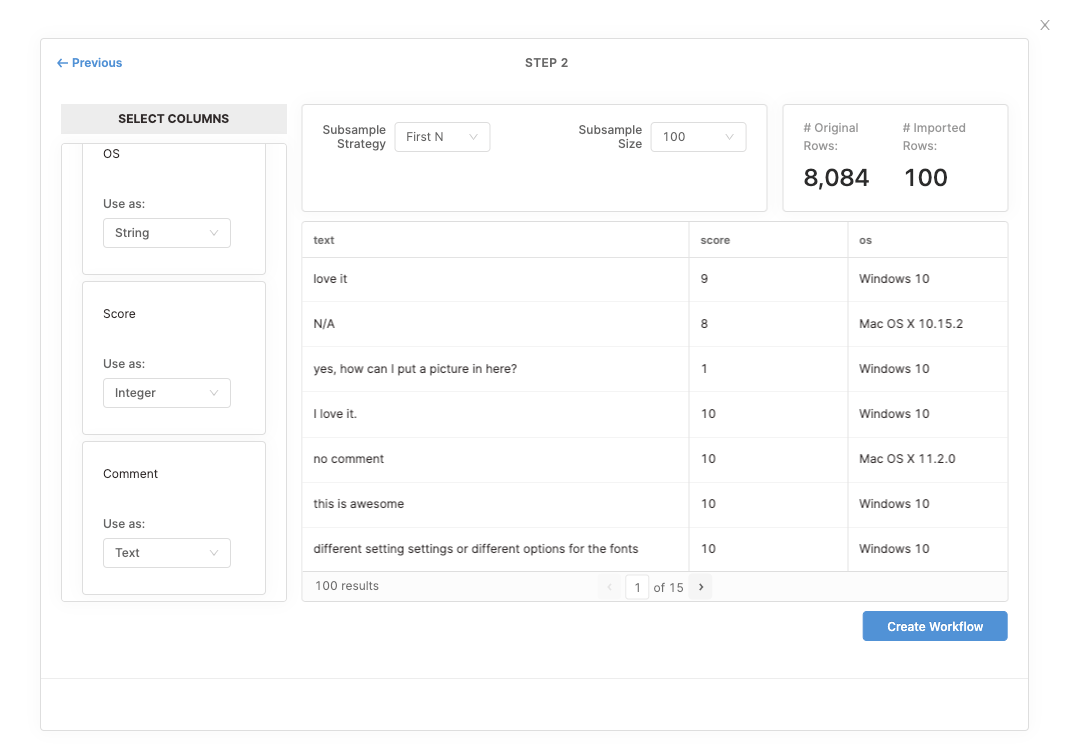

Don’t just filter records, but columns, too

This may seem basic, but filtering happens across records (rows) and fields (columns). It’s how you see CSV files, like Excel or Google Spreadsheets. There are rows and columns.

Take a look at this tool, below, for example. You need to select rows and filter by column and value to get the information you’re after:

Focus on what’s important

Think about how emails are filtered by Spam, Promotions, Social, etc. It would be just too overwhelming to deal with all that mail – and time-consuming – if it all came to your primary inbox. Thanks to email filters, you generally only get the most important emails at the top of your inbox.

Data filtering works the same way. The end user (or end result) gets a much better experience by removing all the noise and irrelevant records. By only focusing on important records, you’ll have a much more efficient process.

Common filters

There are limitless filters you can apply to your data. It all depends on what you want to do, but here are some common ones you can set up for machine learning text analysis.

- Remove all texts that aren’t in the language you want to analyze.

- Remove empty records.

- Remove duplicate records.

- Remove records that contain a particular set of irrelevant keywords, eg: "GET FREE," "You won X," etc.

- Remove records that are larger than a particular number of characters.

An example of a filtering tool:

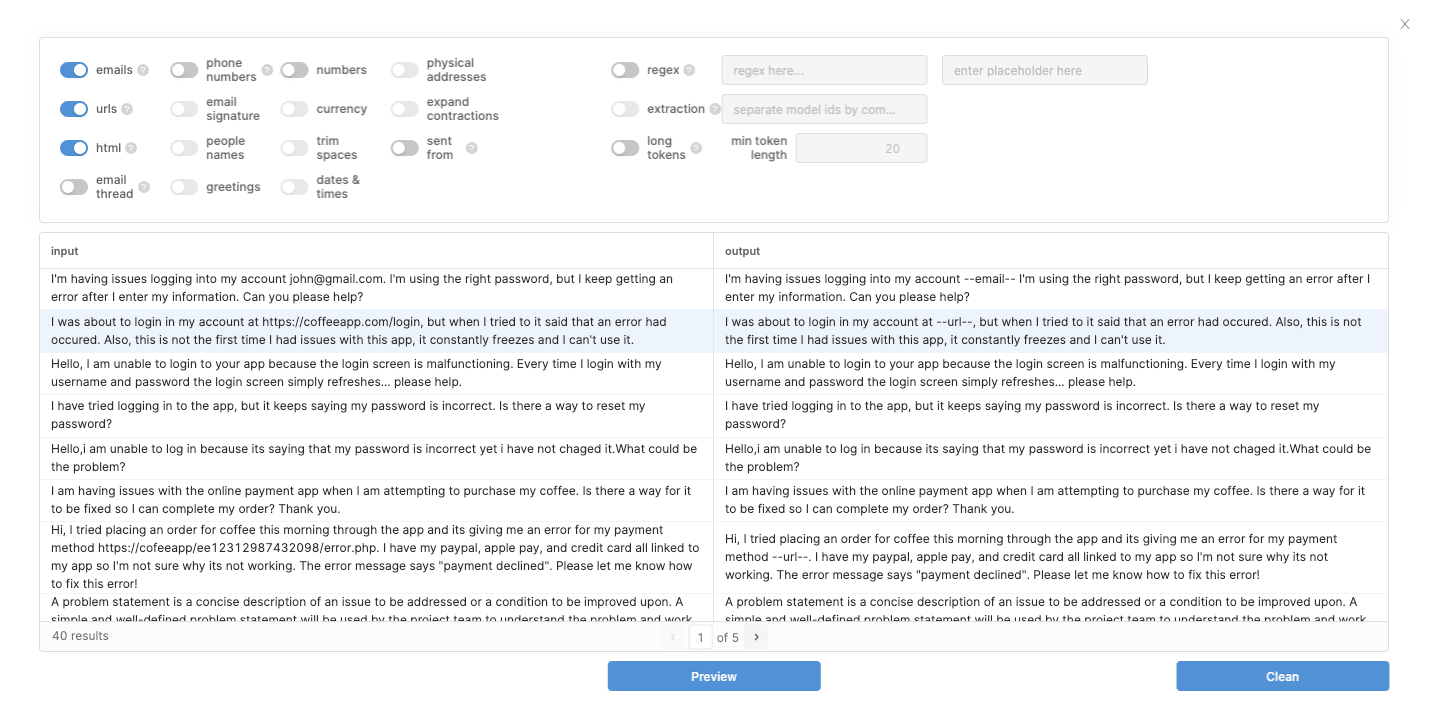

3. Data cleaning

After you remove as much noise as possible, you’ll need to do some in-depth data cleaning on the records that remain.

The aim of data cleaning is to ‘transform’ record fields by removing irrelevant data.

Data cleaning will also improve and arrange data in a uniform way so your records are of better quality to be used for the downstream process.

Let’s focus on open-ended text data.

Basically, machine learning text analysis uses Natural Language Processing (NLP) to “understand” and analyze text much like a human would.

However, the algorithms don’t work to the point that they can automatically tell which individual pieces of text are definitely relevant and which are unnecessary. So the text needs to be cleaned to make it as uniform as possible.

Common text cleaning techniques

Depending on what your data looks like, where it comes from, and what you need to improve, there could be dozens of sets of cleaners (data transformations) that you may need to do on your data.

The below are some common cleaning functions that you should consider for text analysis:

- Remove personal identifiable (PII) data

These are things like email addresses, peoples’ names, physical addresses, company names, phone numbers, etc. This is especially useful when you want to anonymize data in order to protect confidentiality.

- Remove URLs

They may end up being analyzed as actual words and would skew the analysis toward keywords that aren’t actually part of your analysis.

- Translate into the language you need to work with

Rarely do models work well when analyzing multiple languages at the same time.

- Remove HTML tags

They don’t generally contain any useful data.

- Fix character encoding

Character encoding is a way to convert text into binary. However, there are many different encodings, and if you try to read text with a different decoder than the one it was written in, you’ll end with a bunch of unknown characters and scrambled text.

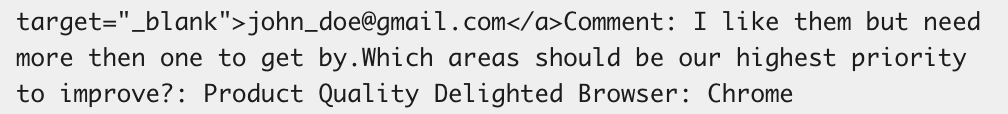

- Remove or extract particular artifacts that match a regular expression (regex)

Regular expressions are used to identify strings of text that match a certain pattern. They can be used to extract patterns that you want to keep, or to remove data. For example, if you use the following expression to extract data in the text below, you’ll receive the following output:

Expression:

(?i)(?:Comment:)(.*?)(?:Which.*$)

Extracted data (output)

I like them but need more then one to get by.

By replacing the string with the contents, you get what you want and discard the rest. It’s a lot easier to detect what you want to keep than write expressions for patterns you would like to exclude. To exclude data, you would need to create more than one regex.

- Remove boilerplate email text

Things like greetings, previous replies, email signatures, etc., will skew your analysis because they aren’t at all relevant to the data you need.

- Remove excessive blank space between text

They could be read by machine learning as part of a word, so tidy up your spacing!

- Remove tracking codes

Things like order forms, delivery tracking codes, etc. are similar to URLs – they’re just not relevant to the analysis you likely want to do.

Here’s what text looks like before and after being processed by a data cleaning tool. Notice that URLs have been standardized:

Data Cleaning Tools

Here are some of the top data cleaning tools to help set you up for the best possible results:

1. Open Refine

Previously a Google SaaS product called Open Refine, at some point they simply opened it to the public. It’s one of the best available because its powerful and easy-to-use GUI allows you to quickly explore and clean data without any code. But the possibility to run Python scripts to do more complex data filtering and cleaning means you can more thoroughly customize to your needs.

2. Jupyter Notebook

For more technical data cleaning, that requires Python scripting, Jupyter Notebook is a powerful option. Here you can run scripts and make use of Python’s many resources (like all the regex operations) and other third party libraries, like Pandas for data frames, matplotlib for chards, and Spacy for Natural Language Processing.

3. Trifacta Wrangler

Trifacta's uncomplicated dashboard allows no-code users and coders, alike, to easily collaborate on projects across the organization. And their scalability across any cloud allows huge projects to be handled with the same ease as small ones. Trifacta originally began with research at Stanford.

Data wrangling is a whole new approach to data cleaning that automatically exports unstructured data into Excel, R, Tableau, and Protovis, so it can more easily be manipulated.

4. TIBCO Clarity

TIBCO Clarity is strictly a SaaS data prep tool, so the focus is clear. They allow data integration from a variety of sources and formats, so you can clean and prepare all of your data together. They leverage data discovery and profiling with ease with the ability to detect data patterns and types of auto-metadata generation.

Data Cleaning Challenges

First of all, data cleaning is tedious.

It’s also the most-time-consuming data science task:

But don’t let data cleaning overwhelm you.

When you see the results clean data can deliver, you’ll start to appreciate cleaning it!

Here are some things to keep in mind to ensure your data is as clean as possible.

- Stick to the process that’s right for you

Put your process in place and stick to it. Know what the steps are and follow them, in order, religiously. This is a constant, recurring process.

- Be thorough

Don’t cut corners. Make sure your following all necessary protocols or it will cause hours of added pain in the end. Again, it all comes back to the 1-10-100 principle that we talked about earlier.

- Use the right tools

Data cleaning is much more effective (and easier) when you use the right tools. You’re better off using common data filters and data cleaners, so you don’t have to start from scratch. Tools with an efficient and easy-to-use UI allow you to preview the effect of your filters and cleaners, so you can see it working in real time. And you can run tests to see what works and what new cleaners you may need to add on with sample run throughs.

- Check your results

When you’re working with machine learning, you need to constantly check on the results of the models you’re training. How are they performing? Is there some kind of dirty data that got left behind that may be skewing their performance?

Conclusion

Whether it’s a basic, numerical quantitative analysis or you’re using machine learning on huge documents, open-ended survey responses, or customer feedback from all over the internet, data cleaning is a massively important step in any well-run analysis.

This guide should help get you started with data cleaning, so you don’t end up with bad data.

Once you’ve cleaned your data and are ready to run your data through text analysis tools, sign up for a demo with MonkeyLearn.