8 Effective Data Cleaning Techniques for Better Data

We know that solid data guides the best business decisions. This is true regardless of your industry.

But, while we often talk about the good data can do for your business, it’s important to talk about bad data too.

When you have bad or “dirty” data, and you use it to help make important business decisions, this data is likely to do more harm than good.

To get your data up to scratch and ready for analysis you’ll first need to clean it. Data cleaning, while not always the most favored part of the data journey, needs to be an integral part of your data preprocessing practice. Dismissing it at the beginning of the process will create potential headaches for yourself further down the line.

Here, we’ll go through what data cleaning is, why it is such an important part of your data management process, and show you the different techniques you can use to perform data cleaning.

Feel free to jump straight to the section that interests you the most:

Why Is Data Cleaning so Important?

Data cleaning, data cleansing, or data scrubbing is the act of first identifying any issues or bad data, then systematically correcting these issues. If the data is unfixable, you will need to remove the bad elements to properly clean your data.

Unclean data normally comes as a result of human error, scraping data, or combining data from multiple sources. Multichannel data is now the norm, so inconsistencies across different data sets are to be expected.

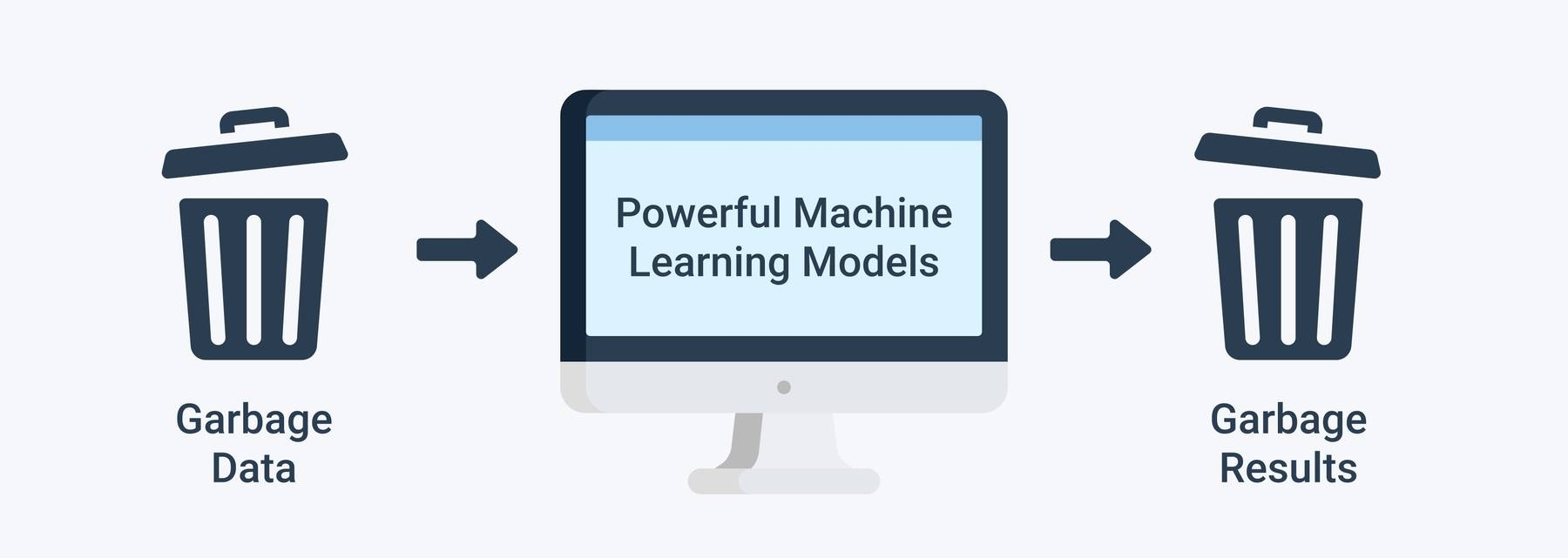

You have to clean this bad data before you start analyzing it, especially if you will be running it through machine learning models.

Why? Because it can offer misleading or incorrect insights. If you’re using these insights to navigate important business decisions then this could be potentially devastating.

It can also be costly. Research from Gartner has found that bad data costs businesses anywhere from $9.7 million to $14.2 million annually.

At the least working with bad data is a massive waste of time.

It’s useful here to think of the commonly used phrase: “garbage in, garbage out”.

This is a great way to illustrate that if you use data that is bad or unclean, you are likely to get results that are also bad. If you put good data in, you’re likely to get good results.

Data cleaning sounds like a no-brainer then right? It is. But it’s also time-consuming and can be tedious. This is why it’s not always paid the attention it should be. But, if you don’t set aside the time at the outset, you’ll probably spend more time fixing problems further down the line.

So, now that we’ve established that data cleaning is a non-negotiable step in your data preprocessing, let’s get into the techniques.

Data Cleaning Techniques That You Can Put Into Practice Right Away

First, we should note that each case and data set will require different data cleaning methods. The techniques we are about to go through cover the most common issues likely to arise. You’ll probably need to employ a few of the techniques when cleaning your data.

Before you start cleaning, you should also think about your objectives and what you hope to gain from cleaning and analyzing this data. This will help you establish what is relevant within your data, and what is not.

It’s also a good idea to set some rules or standards before you even begin to input data. An example of this would be using only one style of date format or address format. This will prevent the need to clean up a lot of inconsistencies.

With that in mind, let’s get started.

Here are 8 effective data cleaning techniques:

- Remove duplicates

- Remove irrelevant data

- Standardize capitalization

- Convert data type

- Clear formatting

- Fix errors

- Language translation

- Handle missing values

Let’s go through these in more detail now.

1. Remove Duplicates

When you collect your data from a range of different places, or scrape your data, it’s likely that you will have duplicated entries. These duplicates could originate from human error where the person inputting the data or filling out a form made a mistake.

Duplicates will inevitably skew your data and/or confuse your results. They can also just make the data hard to read when you want to visualize it, so it’s best to remove them right away.

2. Remove Irrelevant Data

Irrelevant data will slow down and confuse any analysis that you want to do. So, deciphering what is relevant and what is not is necessary before you begin your data cleaning. For instance, if you are analyzing the age range of your customers, you don’t need to include their email addresses.

Other elements you’ll need to remove as they add nothing to your data include:

- Personal identifiable (PII) data

- URLs

- HTML tags

- Boilerplate text (for ex. in emails)

- Tracking codes

- Excessive blank space between text

3. Standardize Capitalization

Within your data, you need to make sure that the text is consistent. If you have a mixture of capitalization, this could lead to different erroneous categories being created.

It could also cause problems when you need to translate before processing as capitalization can change the meaning. For instance, Bill is a person's name whereas a bill or to bill is something else entirely.

If, in addition to data cleaning, you are text cleaning in order to process your data with a computer model, it’s much simpler to put everything in lowercase.

4. Convert Data Types

Numbers are the most common data type that you will need to convert when cleaning your data. Often numbers are imputed as text, however, in order to be processed, they need to appear as numerals.

If they are appearing as text, they are classed as a string and your analysis algorithms cannot perform mathematical equations on them.

The same is true for dates that are stored as text. These should all be changed to numerals. For example, if you have an entry that reads September 24th 2021, you’ll need to change that to read 09/24/2021.

5. Clear Formatting

Machine learning models can’t process your information if it is heavily formatted. If you are taking data from a range of sources, it’s likely that there are a number of different document formats. This can make your data confusing and incorrect.

You should remove any kind of formatting that has been applied to your documents, so you can start from zero. This is normally not a difficult process, both excel and google sheets, for example, have a simple standardization function to do this.

6. Fix Errors

It probably goes without saying that you’ll need to carefully remove any errors from your data. Errors as avoidable as typos could lead to you missing out on key findings from your data. Some of these can be avoided with something as simple as a quick spell-check.

Spelling mistakes or extra punctuation in data like an email address could mean you miss out on communicating with your customers. It could also lead to you sending unwanted emails to people who didn’t sign up for them.

Other errors can include inconsistencies in formatting. For example, if you have a column of US dollar amounts, you’ll have to convert any other currency type into US dollars so as to preserve a consistent standard currency. The same is true of any other form of measurement such as grams, ounces, etc.

7. Language Translation

To have consistent data, you’ll want everything in the same language.

The Natural Language Processing (NLP) models behind software used to analyze data are also predominantly monolingual, meaning they are not capable of processing multiple languages. So, you’ll need to translate everything into one language.

8. Handle Missing Values

When it comes to missing values you have two options:

- Remove the observations that have this missing value

- Input the missing data

What you choose to do will depend on your analysis goals and what you want to do next with your data.

Removing the missing value completely might remove useful insights from your data. After all, there was a reason that you wanted to pull this information in the first place.

Therefore it might be better to input the missing data by researching what should go in that field. If you don’t know what it is, you could replace it with the word missing. If it is numerical you can place a zero in the missing field.

However, if there are so many missing values that there isn’t enough data to use, then you should remove the whole section.

The Wrap Up

While it can sometimes be time-consuming to clean your data, it will cost you more than just time if you skip this step. “Dirty” data can lead to a whole host of issues, so you want it clean before you begin your analysis.

Once you have cleaned your data, you’ll need the right tools at hand to analyze this information. MonkeyLearn is the perfect option.

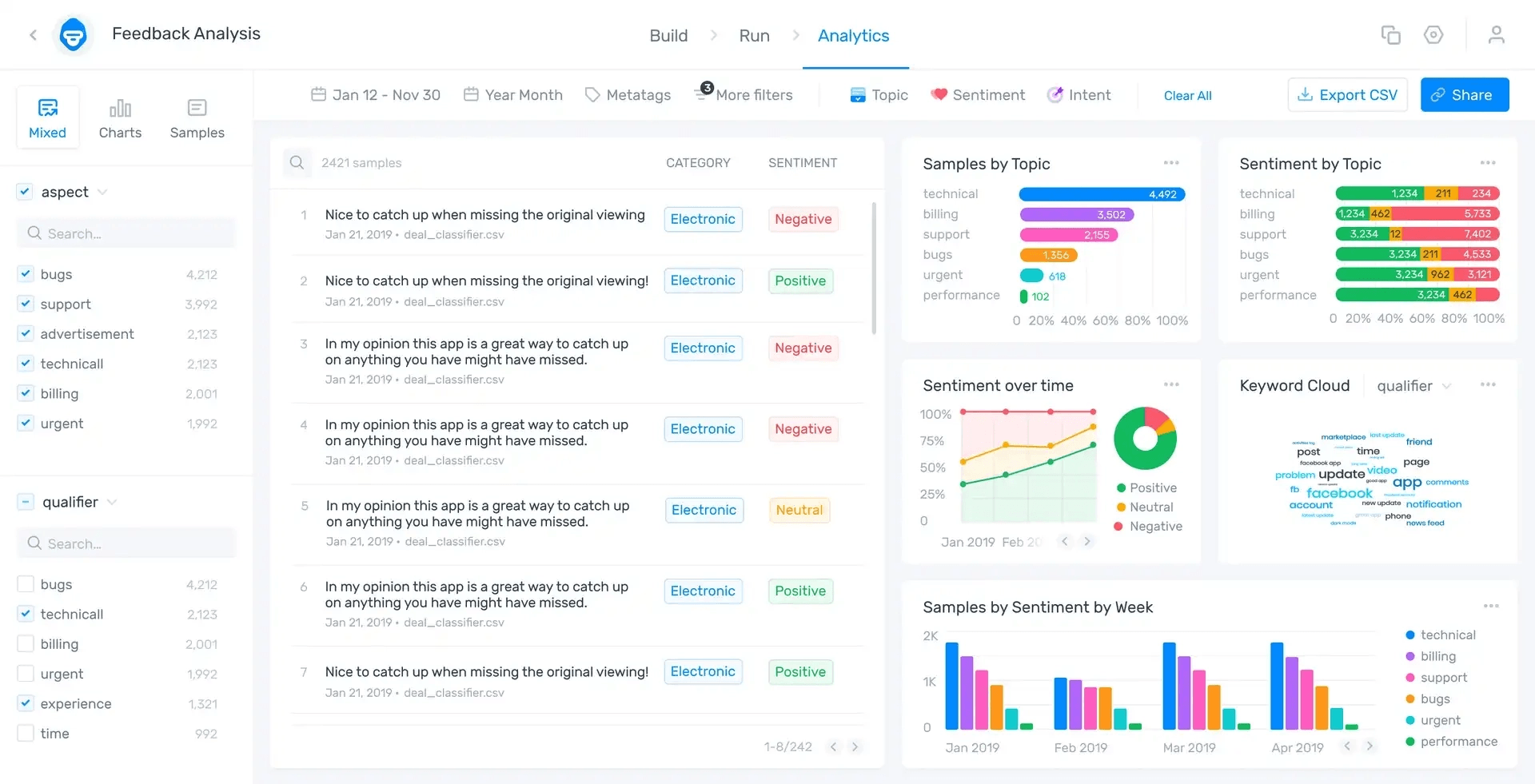

Harnessing the power of AI and machine learning, MonkeyLearn tools such as the sentiment analyzer and keyword extractor allow you to analyze your qualitative data in an objective, fast way. You can also visualize all your insights in one easy-to-read place with the MonkeyLearn Studio Dashboard (pictured below).

Sign up for a demo today to see how MonkeyLearn can help you revolutionize your data analysis, or get started right away with a free trial.

Inés Roldós

October 18th, 2021