Topic Modeling: An Introduction

Topic modeling is an unsupervised machine learning technique that’s capable of scanning a set of documents, detecting word and phrase patterns within them, and automatically clustering word groups and similar expressions that best characterize a set of documents.

You’ve probably been hearing a lot about artificial intelligence, along with terms like machine learning and Natural Language Processing (NLP), especially if you work in a company that processes hundreds or even thousands of customer interactions every day. Analyzing social media posts, emails, chats, open-ended survey responses, and more is not an easy task, and it’s even harder when it’s left to humans alone.

That’s why many are excited about the impact artificial intelligence could have on their day-to-day tasks, as well as on businesses as a whole. AI-powered text analysis uses a wide variety of methods or algorithms to process natural language. One of these is topic analysis, which is used to automatically detect topics in texts.

By using topic analysis models, businesses are able to offload simple tasks onto machines instead of overloading employees with too much data. Just imagine the time your team could save and spend on more important tasks if a machine were able to sort through endless lists of customer surveys or support tickets every morning.

In this guide, we’re going to take a look at two types of topic analysis techniques: topic modeling and topic classification. Topic modeling is an ‘unsupervised’ machine learning technique, in other words, one that doesn’t require training. Topic classification is a ‘supervised’ machine learning technique, one that needs training before being able to automatically analyze texts.

First, we’ll delve into what topic modeling is, how it works, and how it compares to topic classification. Then, we’ll present various use cases and tools that you can use to easily get started with topic analysis, as well as a series of tutorials that will help you create your own models.

- What is Topic Modeling?

- Examples of Topic Modeling and Topic Classification

- How does Topic Modeling work?

- Use Cases and Applications

- Resources

What is Topic Modeling?

Topic modeling is a machine learning technique that automatically analyzes text data to discover clusters of words that characterize a set of documents. This is known as ‘unsupervised’ machine learning because it doesn’t require a predefined list of tags or training data that’s been previously classified by humans.

Since topic modeling doesn’t require training, it’s a quick and easy way to start analyzing your data. However, you can’t guarantee you’ll receive accurate results, which is why many businesses opt to invest time training a topic classification model.

Since topic classification models require training, they’re known as ‘supervised’ machine learning techniques. What does that mean? Well, as opposed to topic modeling, topic classification needs to know the topics of a set of texts before analyzing them. Using these topics, data is tagged manually so that a topic classifier can learn and later make predictions by itself.

For example, let’s say you’re a software company that’s released a new data analysis feature, and you want to analyze what customers are saying about it. You’d first need to create a list of tags (topics) that are relevant to the new feature, e.g. Data Analysis, Features, and User Experience. Then you’d need to use data samples to teach your topic classifier how to tag each text using these predefined topic tags.

Although topic classification involves extra legwork, this topic analysis technique usually delivers more accurate results than unsupervised techniques, which means you’ll get more valuable insights that help you make better, data-based decisions.

You could say that unsupervised techniques are a short-term or quick-fix solution, while supervised techniques are more of a long-term solution that will help your business grow.

Examples of Topic Modeling and Topic Classification

Let’s take a look at some examples, to help you better understand the differences between automatic topic modeling and topic classification.

Topic modeling could be used to identify the topics of a set of customer reviews by detecting patterns and recurring words. Let’s take a look at how an ‘unsupervised’ technique would group the following review for Eventbrite, for example:

“The nice thing about Eventbrite is that it's free to use as long as you're not charging for the event. There is a fee if you are charging for the event – 2.5% plus a $0.99 transaction fee.”

By identifying words and expressions such as free to use, fee, charging, 2.5% plus 99 cents transaction fee, topic modeling can group this review with other reviews that talk about similar things (these may or may not be about pricing).

A topic classification model could also be used to determine what customers are talking about in customer reviews, open-ended survey responses, and on social media, to name just a few. However, these supervised techniques use a different approach. Rather than inferring what similarity cluster the review belongs to, classification models are able to automatically label a review with predefined topic tags. Take this review about SurveyMonkey, for example:

“We have the gold level plan and use it for everything, love the features! It is one of the best bang for buck possible.”

A topic classification model that’s been trained to understand these expressions (gold level plan, love the features, and best bang for buck) would be able to tag this review with the topics Features and Price.

In short, topic modeling algorithms churn out collections of expressions and words that they consider related, leaving you to figure out what these relations mean, while topic classification delivers neatly packaged topics, with labels such as Price and Features, eliminating any guesswork.

How Does Topic Modeling Work?

It’s simple, really. Topic modeling involves counting words and grouping similar word patterns to infer topics within unstructured data. Let’s say you’re a software company and you want to know what customers are saying about particular features of your product. Instead of spending hours going through heaps of feedback, in an attempt to deduce which texts are talking about your topics of interest, you could analyze them with a topic modeling algorithm.

By detecting patterns such as word frequency and distance between words, a topic model groups similar feedback together, along with the words and expressions that appear most often in each group. With this information, you can quickly deduce what each set of texts is talking about. Remember, this approach is ‘unsupervised’, meaning that no training is required.

Now, let’s say you train a model to detect specific topics. That’s a whole different kettle of fish, and a step that’s needed for topic classification algorithms – a supervised technique. Let’s compare the two topic analysis algorithms to further understand the differences between them.

Topic Modeling vs Topic Classification

Topic modeling and topic classification do have one thing in common: they’re the most commonly used topic analysis techniques. Apart from that, they’re very different, and which one you choose depends on several factors.

In theory, unsupervised machine learning algorithms such as topic modeling require less manual input than supervised algorithms. That’s because they don't need to be trained by humans with manually tagged data. However, they do need high-quality data, and not only that – they need it in bucket loads, which may not always be easy to come by.

At the end of your topic modeling analysis, you’ll receive collections of documents that the algorithm has grouped together, as well as clusters of words and expressions that it used to infer these relations.

Supervised machine learning algorithms, on the other hand, deliver neatly packaged results with topic labels such as Price and UX. Yes, they take longer to set up since you’ll need to train them by tagging datasets with a predefined list of topics. But, if you label your texts accurately and refine your criteria, you’ll be rewarded with a model that can accurately classify unseen texts according to their topics, as well as results that you can put to use.

At the end of the day, it comes down to this. If you don’t have a lot of time to analyze texts, or you’re not looking for a fine-grained analysis and just want to figure out what topics a bunch of texts are talking about, you’ll probably be happy with a topic modeling algorithm.

However, if you have a list of predefined topics for a set of texts and want to label them automatically without having to read each one, as well as gain accurate insights, you’re better off using a topic classification algorithm.

Now that we’ve explained the differences between topic modeling and topic classification, we’re going to go into more detail about how each of these machine learning algorithms works… and, yes, things are about to get a bit more technical.

Topic Modeling

Topic modeling takes a corpus of documents and produces two outputs:

- A list of the topics covered by the documents in the corpus

- Several sets of documents from the corpus grouped by the topics they cover.

The underlying assumption is that every document is a statistical mixture of topics, i.e. the distribution of words in a document can be obtained by “adding up” the word distributions of all the topics it covers, each weighted by how much of the document that topic accounts for. What topic modeling methods do is try to figure out which topics are present in the documents of the corpus and how strong that presence is.
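In the probabilistic formulation used by methods such as LDA, this mixture assumption can be written as follows (the notation is ours, not the original article’s):

```latex
$$
p(w \mid d) = \sum_{k=1}^{K} p(w \mid z = k)\, p(z = k \mid d)
$$
```

Here K is the number of topics, p(z = k | d) is the share of document d devoted to topic k, and p(w | z = k) is the probability that topic k produces word w. Topic modeling methods try to estimate both kinds of distributions from the corpus.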

In this section, we’ll help you see the big picture of two topic modeling methods, namely, Latent Semantic Analysis (LSA) and Latent Dirichlet Allocation (LDA).

Latent Semantic Analysis (LSA)

Latent Semantic Analysis (LSA) is one of the most commonly used topic modeling methods. It is based on what is known as the distributional hypothesis, which states that the semantics of words can be grasped by looking at the contexts the words appear in. In other words, under this hypothesis, the semantics of two words will be similar if they tend to occur in similar contexts.

Accordingly, LSA computes how frequently words occur in each document (and in the whole corpus) and assumes that similar documents will have approximately the same distribution of frequencies for certain words. Syntactic information (e.g. word order) and semantic information (e.g. the multiple meanings of a given word) are ignored, and each document is treated as a bag of words.

The standard way of weighting word frequencies is known as tf-idf (term frequency times inverse document frequency). This method takes into consideration not only how frequent a word is in a given document, but also how many documents in the whole corpus contain it. Words that are frequent in a document but rare across the corpus get high weights, while words that appear in almost every document (such as the or and) get weights close to zero, since they say little about what any particular document is about. As a result, tf-idf representations are usually more informative than those that only take into account word frequencies at document level.
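One common way of writing tf-idf is shown below; libraries use several variants (for example with smoothing or log-scaled term frequencies), so treat this as one representative form rather than the only definition:

```latex
$$
\operatorname{tfidf}(t, d) = \operatorname{tf}(t, d) \times \log \frac{N}{\operatorname{df}(t)}
$$
```

Here tf(t, d) is the number of times term t occurs in document d, N is the number of documents in the corpus, and df(t) is the number of documents that contain t. A term that appears in every document gets log(N / N) = 0, so it contributes nothing to any document’s representation.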

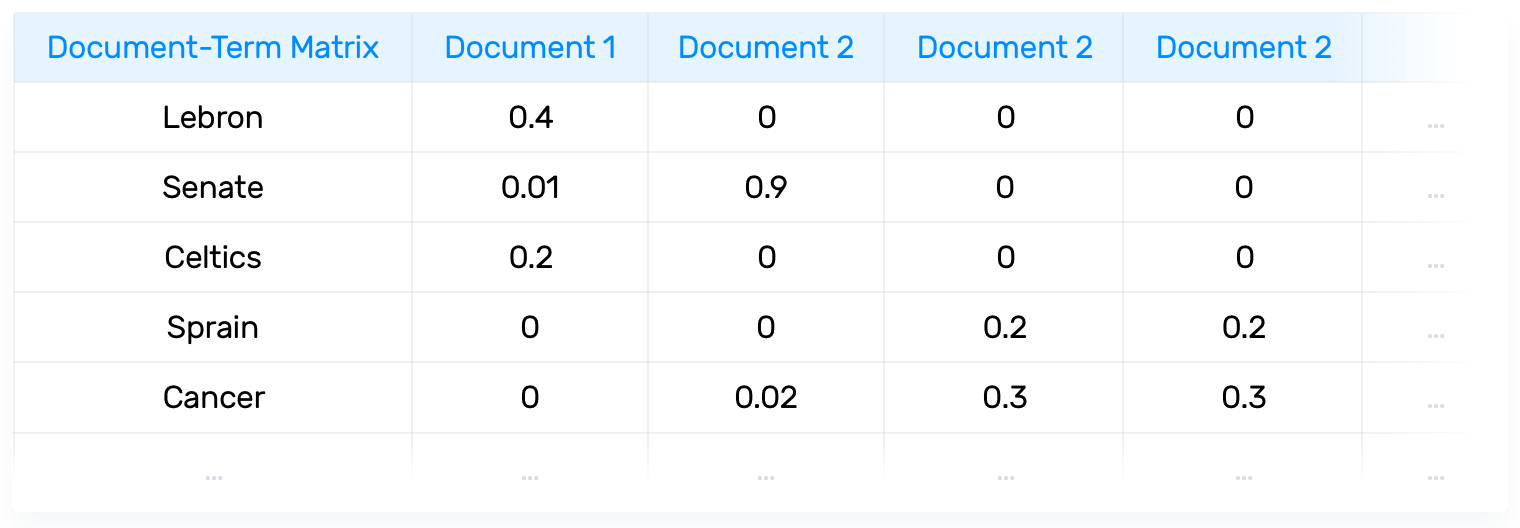

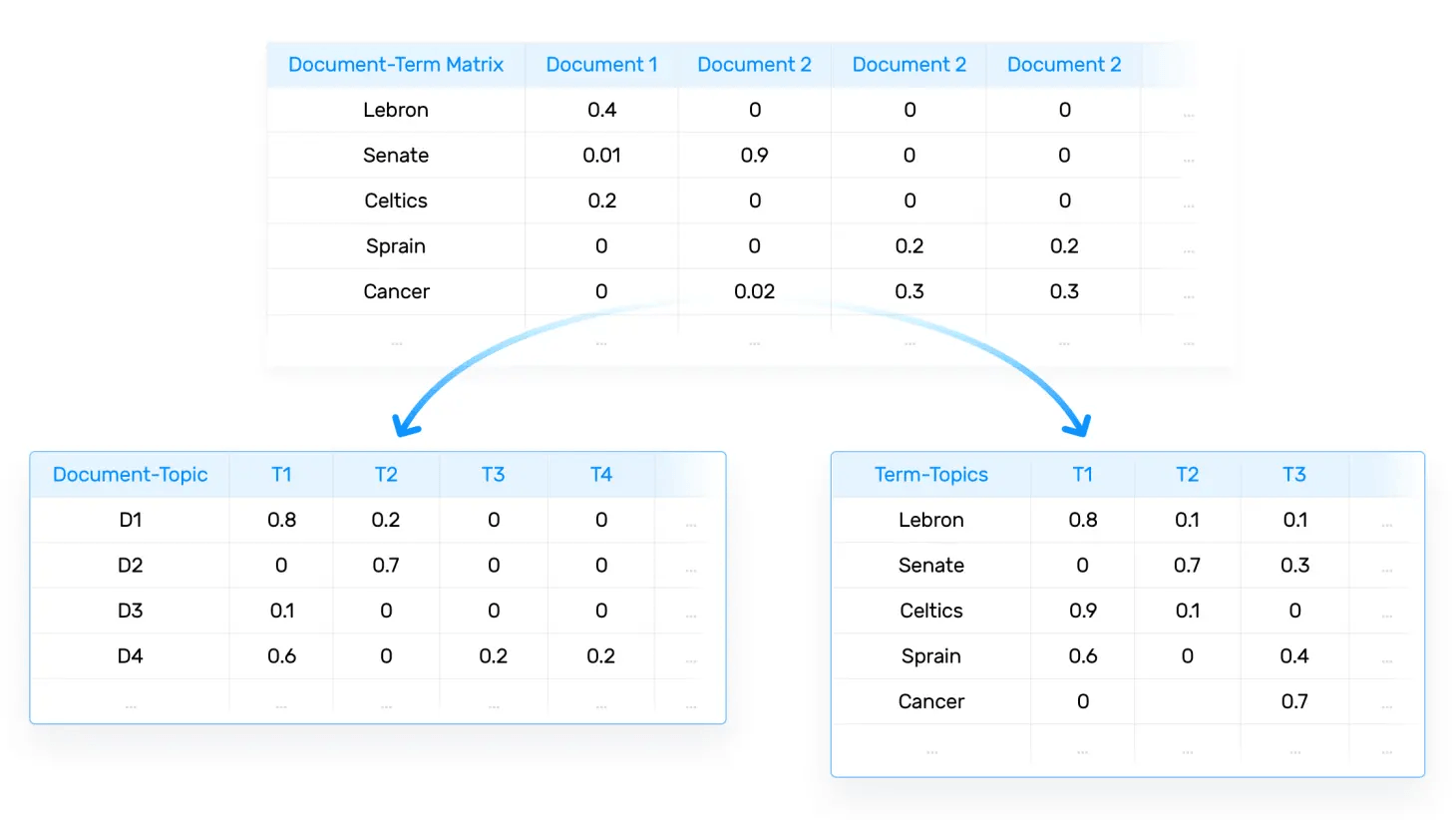

Once tf-idf frequencies have been computed, we can create a Document-term matrix which shows the tf-idf value for each term in a given document. This matrix will have rows for every document in the corpus and columns for every term considered.
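The following is a minimal, dependency-free sketch of building such a Document-term matrix with the weighting tf × log(N / df). It assumes naive whitespace tokenization and no smoothing, and the function name is ours. In practice, a library class such as scikit-learn’s TfidfVectorizer handles tokenization and uses a smoothed variant, so its numbers will differ.

```python
import math
from collections import Counter

def tfidf_matrix(docs):
    """Build a Document-term matrix of tf-idf weights (rows = documents, columns = terms).

    Uses raw counts for tf and log(N / df) for idf, one of several common variants.
    """
    tokenized = [doc.lower().split() for doc in docs]
    vocab = sorted({term for tokens in tokenized for term in tokens})
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears at least once.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    matrix = []
    for tokens in tokenized:
        counts = Counter(tokens)  # term frequency within this document
        matrix.append([counts[term] * math.log(n_docs / df[term]) for term in vocab])
    return vocab, matrix

docs = [
    "the free event no fee",
    "the fee for paid event",
    "the great features great price",
]
vocab, matrix = tfidf_matrix(docs)
```

Because the appears in every toy document, its column is all zeros, while great, which occurs twice in a single document, gets the highest weight in the matrix.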

Using singular value decomposition (SVD), this Document-term matrix A can be decomposed into the product of three matrices, A = U S Vᵀ. The U matrix is known as the Document-topic matrix and the V matrix is known as the Term-topic matrix.

Linear algebra guarantees that the S matrix will be diagonal and LSA will consider each singular value, i.e. each of the numbers in the main diagonal of matrix S, as a potential topic found in the documents.

Now, if we keep only the t largest singular values together with the first t columns of U and the first t columns of V, we obtain the t most important topics found in our original Document-term matrix. This is called truncated SVD because it does not keep all of the singular values of the original matrix, and to use it for LSA, we have to set the value of t as a hyperparameter.
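A minimal sketch of the truncated SVD step, using NumPy’s numpy.linalg.svd on a small hand-made matrix that stands in for a tf-idf Document-term matrix (the numbers are illustrative, not real data). Note that NumPy returns Vᵀ, so its rows, not its columns, correspond to topics.

```python
import numpy as np

def truncated_svd(doc_term, t):
    """Keep only the t largest singular values (the t strongest topics).

    Returns U_t (document-topic), s_t (topic strengths) and Vt_t (topic-term).
    """
    # Thin SVD: doc_term == U @ np.diag(s) @ Vt, with s sorted in descending order.
    U, s, Vt = np.linalg.svd(doc_term, full_matrices=False)
    return U[:, :t], s[:t], Vt[:t, :]

# Toy matrix: documents 0-1 share terms 0-1, documents 2-3 share terms 2-3.
A = np.array([
    [2.0, 1.0, 0.0, 0.0],
    [1.0, 2.0, 0.0, 0.0],
    [0.0, 0.0, 3.0, 1.0],
    [0.0, 0.0, 1.0, 3.0],
])
U_t, s_t, Vt_t = truncated_svd(A, t=2)
doc_vectors = U_t * s_t                 # each document as a point in 2-D topic space
A_approx = U_t @ np.diag(s_t) @ Vt_t    # best rank-2 approximation of A
```

The two largest singular values (4 and 3) correspond to the two groups of documents that share vocabulary, so documents 0 and 1 point in the same direction in topic space, while documents 0 and 2 are orthogonal.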

The quality of the topic assignment for every document and the quality of the terms assigned to each topic can be assessed through different techniques by looking at the vectors that make up the U and V matrices, respectively.

Latent Dirichlet Allocation (LDA)

Latent Dirichlet Allocation (LDA) and LSA are based on the same underlying assumptions: the distributional hypothesis (i.e. similar topics make use of similar words) and the statistical mixture hypothesis (i.e. documents talk about several topics, for which a statistical distribution can be determined). The purpose of LDA is to map each document in our corpus to a set of topics that covers a good deal of the words in the document.

To map documents to a list of topics, LDA assigns topics to arrangements of words, e.g. n-grams such as best player for a topic related to sports. This stems from the assumption that documents are written with arrangements of words and that those arrangements determine topics. Just like LSA, LDA ignores syntactic information and treats documents as bags of words. It also assumes that every word in a document can be assigned a probability of belonging to a topic. Ultimately, the goal of LDA is to determine the mixture of topics that a document contains.

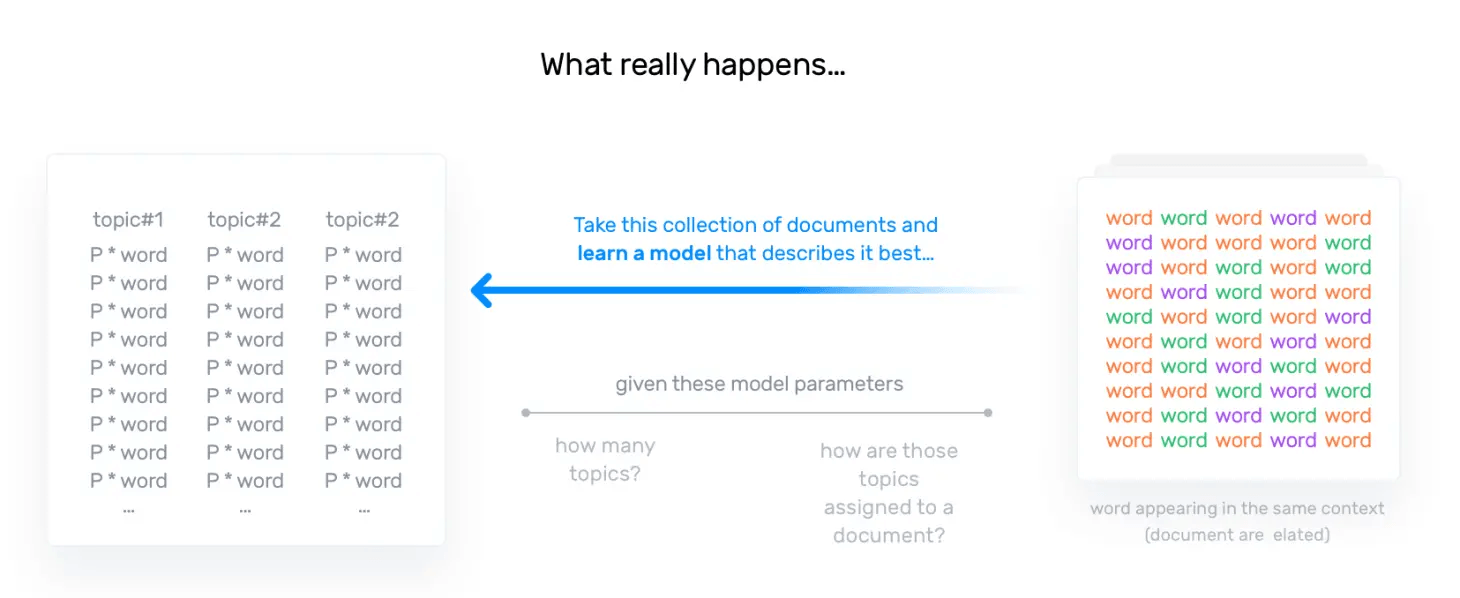

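In other words, LDA assumes that each topic is a distribution over words and that each document is a mixture of topics.

And, when LDA models a document, it assumes that each word was generated by first picking a topic from the document’s topic mixture and then picking a word from that topic’s word distribution.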

The main difference between LSA and LDA is that LDA assumes that the distribution of topics in a document and the distribution of words in a topic are drawn from Dirichlet distributions. LSA does not assume any distribution and therefore leads to vector representations of topics and documents that are harder to interpret.

Two hyperparameters, known as alpha and beta, control these Dirichlet distributions: alpha shapes the topic mixture of each document, and beta shapes the word distribution of each topic. A low value of alpha assigns fewer topics to each document, whereas a high value of alpha has the opposite effect. Likewise, a low value of beta models each topic with fewer words, whereas a high value uses more words, making topics more similar to one another.
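To see what alpha does in practice, the sketch below draws topic mixtures from a symmetric Dirichlet distribution using only the standard library. Normalizing independent Gamma draws is a standard way to sample a Dirichlet; the function names and the choice of 5 topics are ours. Beta has the same effect on the word distribution of each topic.

```python
import random

def sample_dirichlet(alpha, k, rng):
    """Draw one k-dimensional topic mixture from a symmetric Dirichlet(alpha)."""
    # Independent Gamma(alpha, 1) draws, normalized to sum to 1, follow a Dirichlet.
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [x / total for x in draws]

def mean_largest_share(alpha, k=5, n=2000, seed=0):
    """Average weight of the dominant topic across n sampled mixtures."""
    rng = random.Random(seed)
    return sum(max(sample_dirichlet(alpha, k, rng)) for _ in range(n)) / n

low = mean_largest_share(alpha=0.1)    # sparse mixtures: one topic tends to dominate
high = mean_largest_share(alpha=10.0)  # dense mixtures: topics share the document evenly
```

With a low alpha the dominant topic typically takes most of the document, while with a high alpha each of the 5 topics stays close to a 20% share.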

A third hyperparameter has to be set when implementing LDA, namely the number of topics the algorithm will detect, since LDA cannot decide on the number of topics by itself.

The output of the algorithm is a vector that contains the coverage of every topic for the document being modeled. It will look something like this [0.2, 0.5, etc.] where the first value shows the coverage of the first topic, and so on. If compared appropriately, these vectors can give you insights into the topical characteristics of your corpus.
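The article does not prescribe an inference method; one common choice is collapsed Gibbs sampling. Below is a minimal, unoptimized sketch of that technique, assuming pre-tokenized documents, symmetric alpha and beta, and a fixed number of topics. The function name and default values are illustrative. It returns one topic-coverage vector per document, like the [0.2, 0.5, etc.] output described above.

```python
import random

def lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iterations=500, seed=0):
    """Collapsed Gibbs sampler for LDA; returns per-document topic coverage vectors."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    V = len(vocab)
    doc_topic = [[0] * num_topics for _ in docs]        # tokens in doc d assigned to topic k
    topic_word = [[0] * V for _ in range(num_topics)]   # times word w is assigned to topic k
    topic_total = [0] * num_topics                      # tokens assigned to topic k
    assignments = []
    # Start from a random topic for every token.
    for d, doc in enumerate(docs):
        z_doc = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_doc.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_doc)
    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                wid, k = word_id[w], assignments[d][i]
                # Take the current token out of the counts.
                doc_topic[d][k] -= 1
                topic_word[k][wid] -= 1
                topic_total[k] -= 1
                # p(topic j | all other assignments), up to a constant factor.
                weights = [
                    (doc_topic[d][j] + alpha)
                    * (topic_word[j][wid] + beta) / (topic_total[j] + V * beta)
                    for j in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][wid] += 1
                topic_total[k] += 1
    # Smoothed topic coverage of each document (each vector sums to 1).
    return [
        [(doc_topic[d][k] + alpha) / (len(doc) + num_topics * alpha) for k in range(num_topics)]
        for d, doc in enumerate(docs)
    ]

docs = [
    "price fee charge price fee".split(),
    "fee price charge invoice price".split(),
    "bug crash app bug glitch".split(),
    "app crash bug glitch crash".split(),
]
theta = lda_gibbs(docs, num_topics=2)
```

For real corpora, library implementations such as gensim’s LdaModel or scikit-learn’s LatentDirichletAllocation are far faster; both use variational inference rather than Gibbs sampling.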

For more information on how those probabilities are computed, the statistical distributions assumed by the algorithm, or how to implement LDA, you can refer to the original LDA paper.

Also, for more information on how to compare vector representations to get insights into document similarity or the distribution of topics over a document corpus, you might want to read about cosine similarity or other similarity measures. All of these comparisons can be used to compute similarities between the output vectors of both LSA and LDA.
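A minimal sketch of cosine similarity in plain Python, applied to three hypothetical topic-coverage vectors (made-up values, as LDA or LSA might produce):

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0  # similarity is undefined for zero vectors; treat as unrelated
    return dot / (norm_u * norm_v)

# Hypothetical topic-coverage vectors for three documents.
doc_a = [0.7, 0.2, 0.1]
doc_b = [0.6, 0.3, 0.1]
doc_c = [0.1, 0.1, 0.8]
```

Documents a and b are dominated by the same topic, so their similarity is much higher than that of a and c.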

Topic Classification

If you’re running a topic classification analysis, you’ll need to predefine a list of topics. For example, if you’re a software company and you have a set of customer reviews you want to analyze, you’ll probably include topics such as Functionality, Usability, and Reliability on your list.

To determine your topics, it’s always best to do some research. You could always use the previously discussed topic modeling methods to determine topics for your supervised machine learning model – it’s certainly a quick way to find out what a batch of texts is talking about. However, in most cases, you’ll probably refine topics as you build your topic classification model.

When you have your list of topics and reliable datasets, you’ll be able to start training your algorithm by tagging text data with your predefined list of topics. Once you’ve tagged enough examples, your algorithm will start tagging new reviews automatically – that’s machine learning in action. Keep in mind that before you put a model to work on large sets of data, you should be 100% confident that, one, your topic list will deliver the results you’re after and, two, your tagged dataset is consistent.

To understand the ins and outs of this supervised machine learning model, let’s delve a little deeper into the three ways you can approach automated topic classification: rule-based systems, machine learning systems, and hybrid systems.

Rule-Based Systems

By now, you’re probably a bit more clued-up about machine learning; it goes something like this – humans lead by example and algorithms follow suit, right? Now, let’s throw you off a little (don’t worry, it will all become clear again)... It's also possible to build a topic classifier without machine learning!

You’re probably thinking, doesn’t that make it an unsupervised model?

Well, no. This type of model runs on a rule-based system. It works by directly programming a set of hand-made rules, based on the content of the documents that a human expert has read.

The rules represent the code written by the expert, meaning an algorithm can differentiate topics by deciphering semantically relevant elements of a text, while also taking into account the metadata a document may have.

Each one of these rules is made up of a pattern and a prediction. Since we’re focusing on topic analysis, the prediction will be the topic.

Now, let’s imagine you have a set of support tickets you want to analyze using rules. First, you’d need to define a list of words for each topic (e.g. for billing issues, words like price, charge, invoice, and transaction; for app features, words like usability, bugs, and performance). Once you have your lists, your rule-based system is ready to analyze new tickets. It does this by counting the frequency of your topic words to determine which labels to assign to each new ticket. You can improve these systems as you go along by refining existing rules and adding new ones.
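A minimal sketch of such a keyword-counting rule system, assuming the example word lists above as the (hypothetical) rules and a simple letters-only tokenizer:

```python
import re
from collections import Counter

# Hypothetical rules built from the example word lists: topic -> keywords.
RULES = {
    "Billing Issues": {"price", "charge", "invoice", "transaction"},
    "App Features": {"usability", "bugs", "performance"},
}

def tag_ticket(text, rules=RULES, min_hits=1):
    """Return topics whose keywords occur at least min_hits times, most frequent first."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))  # word frequencies in the ticket
    scored = [(sum(counts[word] for word in keywords), topic) for topic, keywords in rules.items()]
    return [topic for hits, topic in sorted(scored, reverse=True) if hits >= min_hits]

print(tag_ticket("I was charged twice: the invoice shows the same transaction"))
```

Note that charged does not match charge: exact keyword matching is brittle, which is one reason these systems need constant refinement.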

The downside of these rule-based systems is that they’re too complex for someone without expert knowledge, and they require constant analysis and testing to ensure they’re functioning correctly. Plus, adding new rules can interfere with existing ones. In short, these systems are high-maintenance and hard to scale.

Machine Learning Systems

Rule-based systems are gradually being replaced by automated machine learning systems that deliver better results with much less effort.

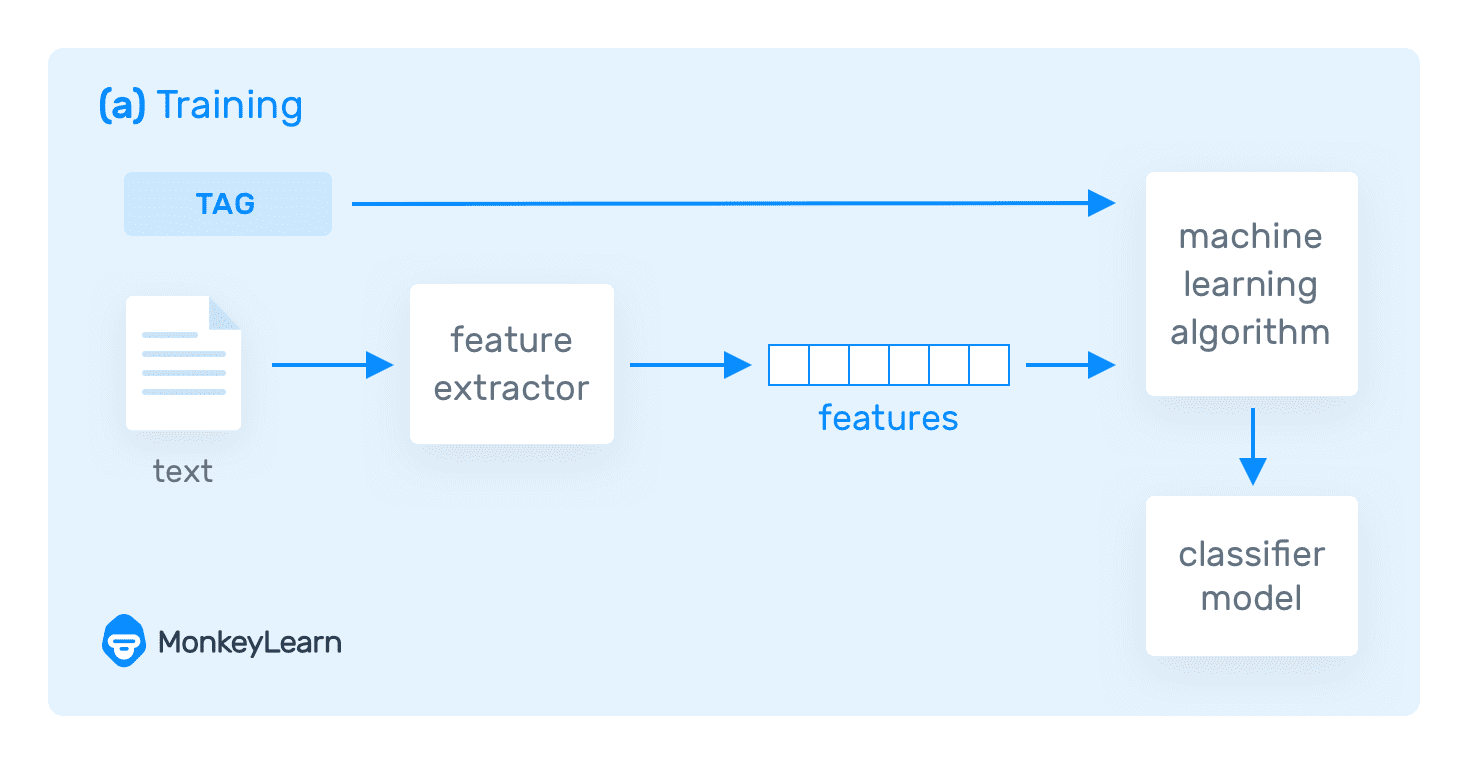

A topic classification machine learning model needs to be fed examples of text and a list of predefined tags, known as training data. However, machine learning models can’t understand text, so information first needs to be transformed into vectors (lists of numbers that encode information) before they can recognize patterns, extract relevant information and categorize data.

One of the most common ways to transform text information into vectors is bag of words vectorization, which you can learn more about here.
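A minimal bag-of-words vectorizer in plain Python, assuming lowercase whitespace tokenization. Library classes such as scikit-learn’s CountVectorizer do the same job with proper tokenization.

```python
def build_vocabulary(texts):
    """Map every distinct lowercase token to a column index, in order of first appearance."""
    vocab = {}
    for text in texts:
        for token in text.lower().split():
            vocab.setdefault(token, len(vocab))
    return vocab

def bag_of_words(text, vocab):
    """Count how often each vocabulary word occurs in text; unknown words are ignored."""
    vector = [0] * len(vocab)
    for token in text.lower().split():
        if token in vocab:
            vector[vocab[token]] += 1
    return vector

training_texts = ["great price", "app keeps crashing", "price is too high"]
vocab = build_vocabulary(training_texts)
print(bag_of_words("Price price app", vocab))  # counts per vocabulary word
```

Word order is discarded: only counts survive, which is exactly what the "bag" in bag of words refers to.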

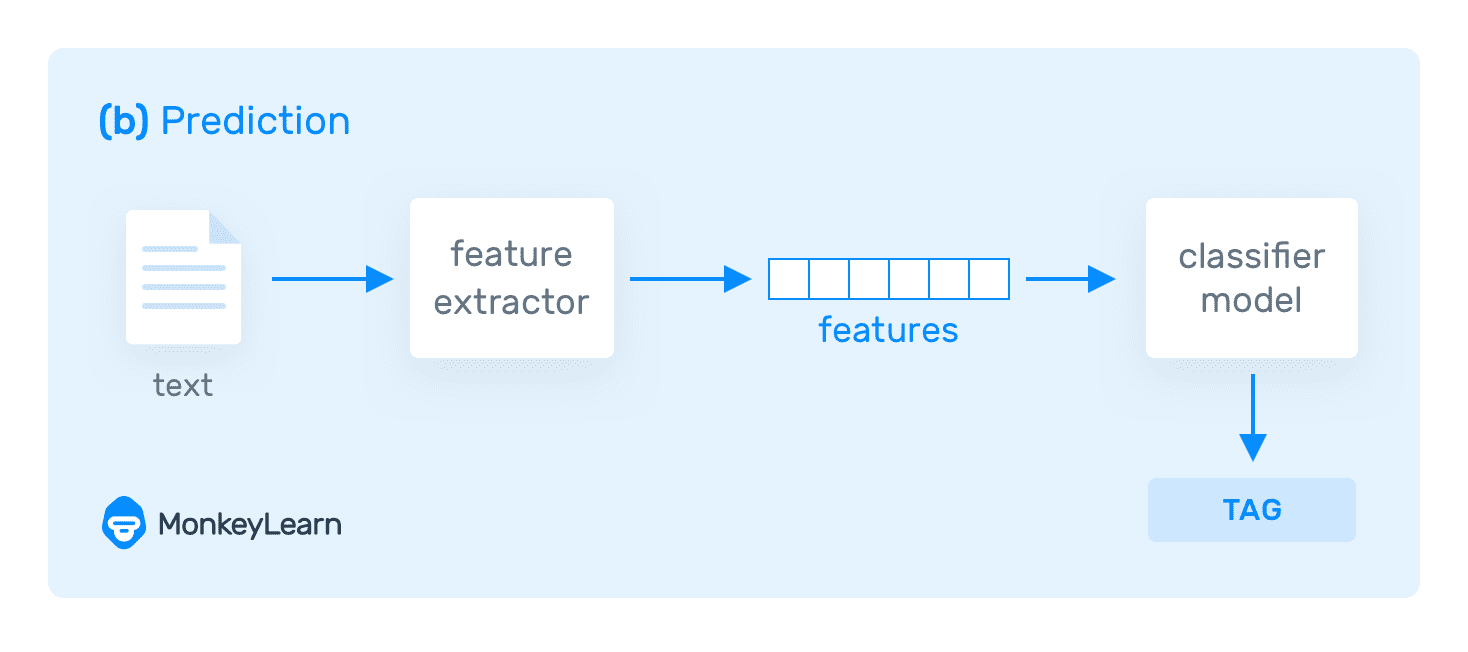

Once the text is transformed into vectors and the training data is tagged with the expected tags, this information is fed to an algorithm to create the classification model:

This classification model is now able to classify new texts because it has learned how to make predictions automatically:

And if you want to keep training topic classification models to make them even more accurate, you can just tag more data, without having to go through complex procedures.

Now that you’re knee-deep in machine learning, let’s meet some widely used algorithms for topic classification:

Naive Bayes

Naive Bayes (NB) is a family of simple algorithms – the most popular being Multinomial Naive Bayes (MNB) – that deliver great results when dealing with small amounts of data, say between 1,000 and 10,000 texts. It works by correlating the probability of words appearing in a text with the probability of that text being about a certain topic.
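A from-scratch sketch of Multinomial Naive Bayes with add-one (Laplace) smoothing, assuming whitespace tokenization; the toy training data is made up. It scores each topic as log P(topic) plus the sum of log P(word | topic) over the words in the text.

```python
import math
from collections import Counter, defaultdict

class MultinomialNB:
    """Minimal multinomial Naive Bayes text classifier with Laplace smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # topic -> word -> count
        self.label_counts = Counter(labels)      # topic -> number of training texts
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = text.lower().split()
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        tokens = [t for t in text.lower().split() if t in self.vocab]  # skip unseen words
        n_texts = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            score = math.log(n / n_texts)  # log prior of the topic
            total = sum(self.word_counts[label].values())
            for token in tokens:
                count = self.word_counts[label][token]
                score += math.log((count + 1) / (total + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label

texts = ["cheap price great value", "price too high", "app crashes often", "bug in the app"]
labels = ["Price", "Price", "Bugs", "Bugs"]
model = MultinomialNB().fit(texts, labels)
```

Working in log space avoids multiplying many small probabilities together, which would otherwise underflow on longer texts.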

Support Vector Machines (SVM)

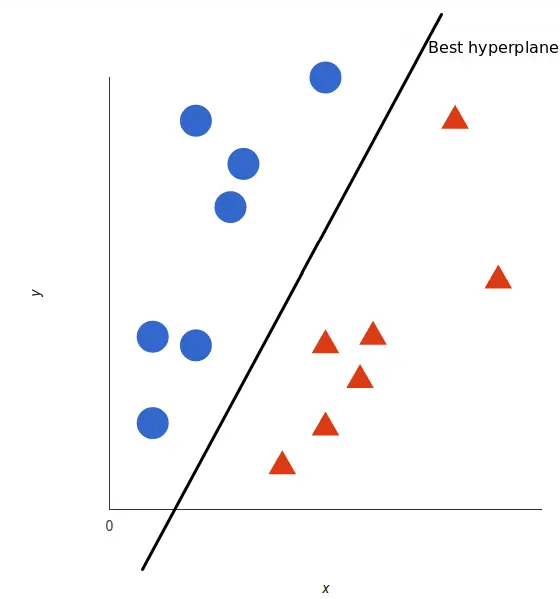

SVMs are slightly more complex than Naive Bayes but are used in the same way: they learn from vectorized, tagged training examples. The upside is that they often deliver better results than NB for topic classification; the downside is that they are more complex to implement and require more computing resources. However, it’s possible to speed up SVM training by using feature selection and an optimized linear implementation such as scikit-learn’s LinearSVC.

Once training data is vectorized (turned into numbers), an SVM is able to separate these vectors into topics by creating ‘a boundary’ or imaginary line (otherwise known as a hyperplane). Now, when the algorithm analyzes a new text, it can categorize it automatically, based on what it learned from the training data, by deciding which side of the line the text falls on.
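In practice you would use a library such as scikit-learn’s LinearSVC. The sketch below instead trains a linear SVM from scratch with the Pegasos stochastic sub-gradient method, a swapped-in training algorithm chosen only because it fits in a few lines. The bias is learned as an extra constant feature, and the toy vectors are hypothetical word counts.

```python
import random

def add_bias(x):
    """Append a constant 1.0 so the bias is learned as an ordinary weight."""
    return list(x) + [1.0]

def train_linear_svm(X, y, lam=0.1, epochs=500, seed=0):
    """Pegasos: stochastic sub-gradient descent on the regularized hinge loss.

    X: list of feature vectors; y: labels in {-1, +1}.
    Returns w such that the hyperplane is w . add_bias(x) = 0.
    """
    rng = random.Random(seed)
    data = [add_bias(x) for x in X]
    w = [0.0] * len(data[0])
    t = 0
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # decreasing step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
            w = [(1 - eta * lam) * wj for wj in w]  # regularization: shrink w
            if margin < 1:  # point is misclassified or too close to the boundary
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w

def svm_predict(w, x):
    """Return +1 or -1 depending on which side of the hyperplane x falls."""
    score = sum(wj * xj for wj, xj in zip(w, add_bias(x)))
    return 1 if score >= 0 else -1

# Toy bag-of-words counts for the words ("price", "crash").
X = [[2, 0], [3, 1], [0, 2], [1, 3]]
y = [1, 1, -1, -1]  # +1 = Pricing, -1 = Bugs
w = train_linear_svm(X, y)
```

A new text that mentions price much more than crash lands on the Pricing side of the learned boundary.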

Ever played Battleship? Well, an SVM works in a similar way. The game is played on ruled grids with a wall separating the two players (the hyperplane), each player’s fleet of ships is marked on the grid (vectorized), and each side represents a different fleet of ships (topic). Now, when one player calls out a coordinate (prediction) and hits the location of one of the ships, they’re well on their way to eliminating the rest of the fleet or, in an SVM’s case, identifying the topic.

Deep Learning

This is a machine learning technique that teaches computers to do what comes naturally to humans: learn by example. While its roots go back to the 1950s, deep learning is making a comeback, not just because of lower computing costs, increased computing power, and the availability of data, but also because it’s achieving more accurate results than ever before, in some tasks even exceeding human-level performance.

Topic classification, in particular, has benefited from deep learning’s revival and uses two main deep learning architectures: Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN). If you’d like to delve even deeper, and find out what the differences are between these two frameworks, check out this comparison.

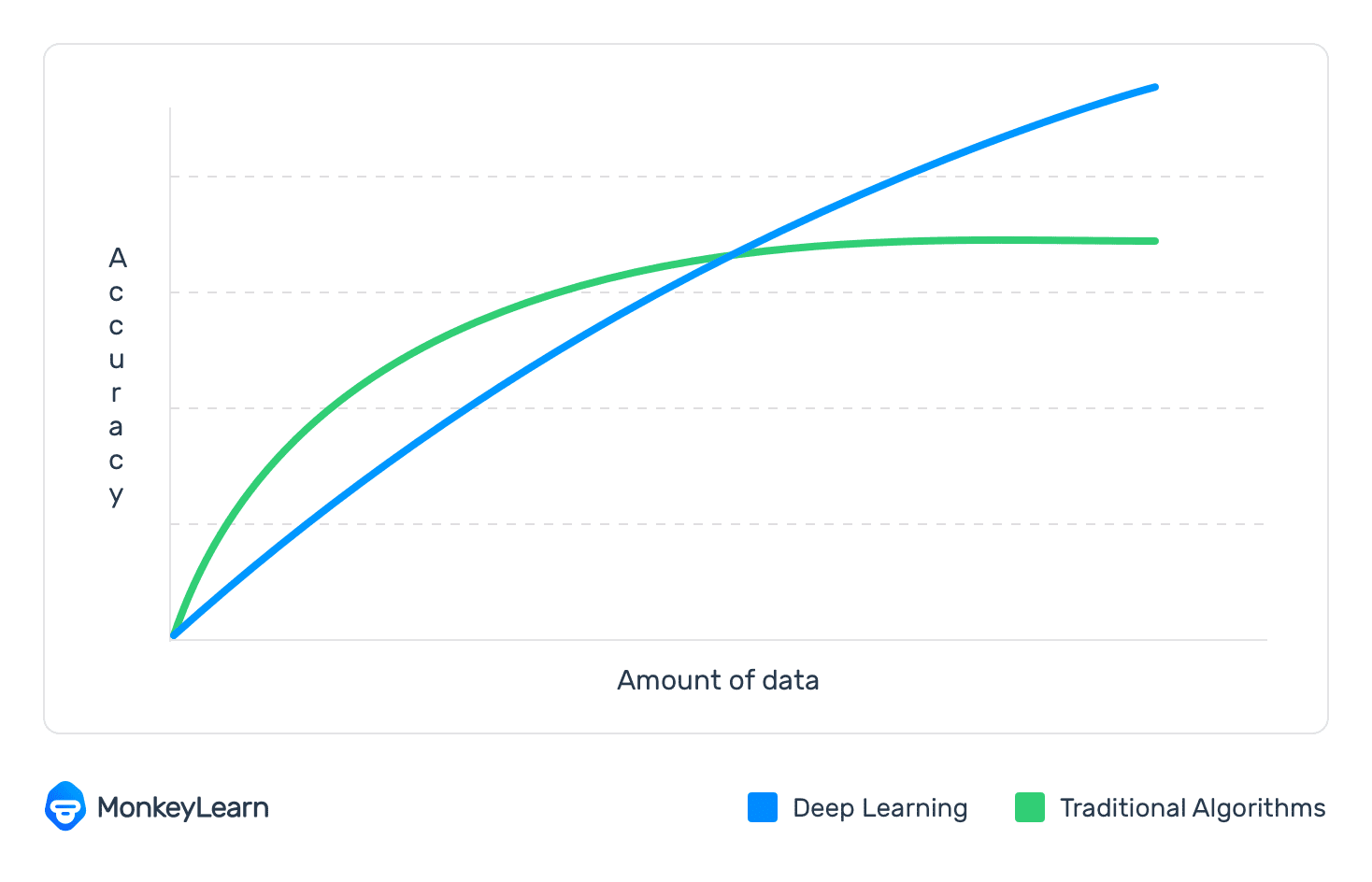

Before you get too excited about deep learning algorithms, however, be aware that they require much more training data than traditional machine learning algorithms: instead of 1,000 training samples, you’ll need millions. On the other hand, traditional machine learning algorithms such as SVM and NB are limited. Beyond a certain amount of training data, their accuracy stops improving, while deep learning classifiers continue to improve the more data they have.

There are many machine learning algorithms out there, each suited to different problems. For instance, spam detection was declared ‘solved’ a couple of decades ago using just Naive Bayes and n-grams. Word2Vec and GloVe, on the other hand, are popular algorithms for learning better vector representations of words (word embeddings), which can then be used as input to traditional machine learning algorithms.

Hybrid Systems

These are simply combinations of machine learning classifiers and rule-based systems, whose results improve as you fine-tune the rules. You can use these rules to correct topics that the machine learning classifier has assigned incorrectly.
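A minimal sketch of one way to combine the two: hand-written override rules are checked first, and the machine learning prediction is used otherwise. The rules and the stand-in classifier are hypothetical; any trained classifier with a text-to-topic function could be plugged in.

```python
def hybrid_tag(text, ml_predict, overrides):
    """Return the topic of the first matching override rule, else the ML prediction.

    ml_predict: any function text -> topic (e.g. a trained classifier).
    overrides: list of (keyword, topic) rules, checked in order.
    """
    lowered = text.lower()
    for keyword, topic in overrides:
        if keyword in lowered:
            return topic
    return ml_predict(text)

# Hypothetical stand-in for a trained classifier that tags everything as Pricing,
# including refund requests it should not.
def toy_classifier(text):
    return "Pricing"

OVERRIDE_RULES = [("refund", "Refunds"), ("crash", "Bugs")]
```

Putting the rules first means a rule always wins; a different design would only apply rules when the classifier’s confidence is low.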

Metrics and Evaluation

Ok, algorithms, done. Now, let’s focus on the results, and a question that you’ve probably been wondering about from the start: how do you know how good your topic classification model is?

Well, you already know that supervised machine learning models need to be fed training data that is relevant to your predefined topic list. By setting aside a subset of that tagged data to test your model, you can find out how accurately it makes predictions. For each test text, compare the actual tag with the predicted tag, then use the results to calculate the following evaluation metrics:

- Accuracy: the percentage of texts that were assigned the correct topic

- Precision: for each topic, the percentage of texts the classifier tagged with that topic that actually belong to it

- Recall: for each topic, the percentage of texts that actually belong to that topic that the classifier tagged with it

- F1 Score: the harmonic mean of precision and recall
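A minimal sketch of these metrics in plain Python, computed from parallel lists of actual and predicted tags (the tags below are made up):

```python
def evaluate(actual, predicted, topic):
    """Precision, recall and F1 for one topic."""
    tp = sum(1 for a, p in zip(actual, predicted) if a == topic and p == topic)
    fp = sum(1 for a, p in zip(actual, predicted) if a != topic and p == topic)
    fn = sum(1 for a, p in zip(actual, predicted) if a == topic and p != topic)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def accuracy(actual, predicted):
    """Share of texts whose predicted tag matches the actual tag."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)

actual    = ["Price", "Price", "Bugs", "Bugs", "Price", "UX"]
predicted = ["Price", "Bugs",  "Bugs", "Bugs", "Price", "Price"]
```

For Bugs, precision is 2/3 (one Price text was wrongly tagged Bugs) but recall is 1.0 (no Bugs text was missed), giving an F1 score of 0.8.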

The best way to test your model and obtain these evaluation metrics is through cross-validation. This involves randomly splitting your training dataset into equally sized sets (e.g. 4 sets, each with 25% of the data), training a classifier on all but one of these sets (75% of the data), and testing it on the remaining, unseen 25%. You repeat this so that each set is used once for testing and average the results. Then you can build the final model by using all the sets as training data.
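A minimal sketch of k-fold cross-validation in plain Python. The train_fn interface (training texts and labels in, a predict function out) is our assumption, and majority_baseline is a deliberately trivial stand-in for a real classifier.

```python
import random

def k_fold_splits(n_items, k=4, seed=0):
    """Yield (train_indices, test_indices) pairs for k-fold cross-validation."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)        # random split
    folds = [indices[i::k] for i in range(k)]   # k (nearly) equal-sized folds
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]

def cross_validate(texts, labels, train_fn, k=4):
    """Average accuracy over k folds; train_fn(texts, labels) returns a predict(text) function."""
    scores = []
    for train, test in k_fold_splits(len(texts), k):
        predict = train_fn([texts[i] for i in train], [labels[i] for i in train])
        correct = sum(predict(texts[i]) == labels[i] for i in test)
        scores.append(correct / len(test))
    return sum(scores) / len(scores)

def majority_baseline(texts, labels):
    """Trivial 'classifier' that always predicts the most common training label."""
    most_common = max(set(labels), key=labels.count)
    return lambda text: most_common
```

Each text is tested exactly once by a model that never saw it during training, which is what makes the averaged score an honest estimate.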

Use Cases & Applications

From sales and marketing to customer support and product teams, topic modeling and topic classification can help eliminate manual and repetitive tasks, as well as speed up processes in a simple and cost-effective way.

Teams spend hours routing support tickets, customer feedback and the like by tagging and sorting data, which machine learning algorithms can do automatically, and in real-time. They also function 24/7 and deliver more accurate results that businesses can use to make insightful decisions. On top of that, getting started is easy. With software like MonkeyLearn, you don’t need coding skills or much knowledge about machine learning (that’s not to say this article isn’t full of useful information; it always helps to understand the tools you’re working with!).

To understand how machine learning could help you, let’s take a closer look at the areas in which topic classification and topic modeling are making waves:

Customer Service

In this day and age, customer service can make or break a company. It’s no longer enough to have an awesome product when there are competitors out there with similar products. Your unique selling point (USP) now needs to be about constantly delivering kick-ass customer service that will make you stand out from the crowd.

Thirty-three percent of Americans say they’ll consider switching companies after just a single instance of poor service. But how can you deliver great customer service when your teams are bombarded with more data than ever before?

The answer lies within machine learning. Topic modeling and topic classification models can be used in customer support to help teams handle large amounts of data by:

- Automatically tagging customer support tickets according to topic, or recognizing patterns and delivering results in the form of frequently occurring words and expressions.

- Automatically triaging and routing conversations to the most appropriate team. For example, tickets tagged Billing Issues or Refunds, or containing expressions such as ‘credit card transaction’ or ‘subscription error’, would be sent to the accounts department. Likewise, queries tagged with Bug Issues and Software, or containing expressions such as ‘strange glitch’ and ‘app isn’t working’, would be sent to the dev team.

- Automatically detecting the urgency of a support ticket and prioritizing it accordingly, for example, when a ticket is tagged as Bug Issue or Urgent, or when a machine recognizes expressions such as ‘right away’ or ‘immediate attention’. This approach to text analysis has helped companies avoid a potential PR crisis, and even make the most out of a bad situation.

- Getting insights from customer support conversations.
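The routing and urgency logic described here can be sketched as a lookup from topic tags to teams plus a phrase check. This is a hypothetical illustration: the ROUTES table, team names, and URGENT_PHRASES are made up from the examples in the text, and the tags are assumed to come from a topic classifier.

```python
# Hypothetical mapping from topic tags to the team that should handle them.
ROUTES = {
    "Billing Issues": "Accounts",
    "Refunds": "Accounts",
    "Bug Issues": "Dev team",
    "Software": "Dev team",
}
URGENT_PHRASES = ("right away", "immediate attention", "urgent")

def route_ticket(text, tags):
    """Pick a team from the ticket's topic tags and flag urgent wording."""
    # The first tag with a known route wins; everything else goes to a default queue.
    team = next((ROUTES[tag] for tag in tags if tag in ROUTES), "General support")
    urgent = "Urgent" in tags or any(phrase in text.lower() for phrase in URGENT_PHRASES)
    return team, urgent

print(route_ticket("The app isn't working, please fix right away", ["Bug Issues"]))
```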

Instead of tasking customer support teams with tagging and triaging customer queries, which is both repetitive and time-consuming, you can delegate these tasks to machines. This means customer support agents are able to focus on more important tasks, like personalized customer attention or dealing with more complex issues that machines are unable to solve alone.

Customer Feedback

We’ve all come across the term customer-centric – a strategy that’s based on putting your customer first, and at the core of your business. That means listening to the Voice of Customer (VoC), in other words, what customers have to say, via reviews, social media posts, emails, chats and surveys, and responding in a way that will make them want to use your service or product again, and even recommend it to others. After having a positive experience with a company, 77% of customers would recommend it to a friend.

The best way to approach this customer-centric strategy? With machine learning in tow.

Not only can you use topic classification and topic modeling to process customer feedback and respond in a more timely and effective manner, you can also use them to make more informed decisions, either on the spot when dealing with individual customers, or when making improvements to your product or service.

Topic analysis models can help automatically analyze open-ended responses (unstructured data) such as NPS responses, customer surveys and product reviews, all valuable sources of information that can help shape your product or service, and encourage business growth.

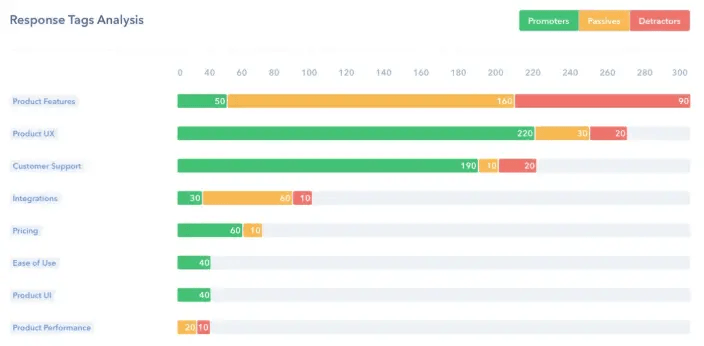

Let’s say you receive a batch of NPS responses, where the first question in your survey asked customers to rate your product on a scale of 0-10. The numeric answers to this question are easy to analyze, and customers are categorized as promoters (9 or 10), passives (7 or 8), or detractors (6 or below).
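A minimal sketch of this scoring step, assuming the standard 0-10 NPS scale and the thresholds given above; the function name and sample scores are ours:

```python
from collections import Counter

def nps_category(score):
    """Map a 0-10 rating to its NPS group."""
    if not 0 <= score <= 10:
        raise ValueError("NPS ratings range from 0 to 10")
    if score >= 9:
        return "Promoter"
    if score >= 7:
        return "Passive"
    return "Detractor"

scores = [10, 9, 8, 4, 7, 2]
groups = Counter(nps_category(s) for s in scores)
print(groups)
```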

The second question, however, is a follow-up asking the customer to explain the score they gave, and this explanation is the most important data. As luck would have it, it’s also the most difficult to analyze. Imagine that a customer leaves a score of four and provides the following explanation:

“The app is simple to set up, but the cost per user license can be prohibitive for small companies.”

That’s one short piece of feedback with two topics (Ease of Use and Pricing). Now, imagine receiving thousands of responses just like this, both positive and negative, and all mentioning different topics. Processing all these open-ended answers manually is bound to give you a headache. Topic classification and topic modeling can eliminate this manual labor by sorting these survey responses automatically. While topic modeling will group similar responses together, topic classification will deliver topics for each piece of data.

Here’s an example of how one company was able to use topic classification to analyze open-ended NPS responses using MonkeyLearn:

Retently, an NPS tool, trained their classification model to tag each response with different topics, including Product, UX, Customer Support and Ease of Use. Then, within these topics they divided the responses into Promoters, Passives, and Detractors to determine the most popular topics for each group. The results? Take a look for yourselves:
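A minimal sketch of this kind of breakdown: given responses already tagged with an NPS group and topics, count the most frequent topics per group. The responses below are hypothetical, not Retently’s data.

```python
from collections import Counter, defaultdict

def top_topics_by_group(responses, n=2):
    """responses: (nps_group, [topics]) pairs. Returns the n most frequent topics per group."""
    counts = defaultdict(Counter)
    for group, topics in responses:
        counts[group].update(topics)
    return {group: [topic for topic, _ in c.most_common(n)] for group, c in counts.items()}

# Hypothetical tagged responses.
responses = [
    ("Promoter", ["Ease of Use", "Product"]),
    ("Promoter", ["Ease of Use", "Customer Support"]),
    ("Detractor", ["Product", "UX"]),
    ("Detractor", ["UX"]),
    ("Passive", ["Product"]),
]
print(top_topics_by_group(responses, n=1))
```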

There are other surveys that you could use instead of NPS, for example, customer surveys created with SurveyMonkey, which lets you create sophisticated surveys and send them out via email, chat, mobile, social media, web, and more. More than 40% of SurveyMonkey’s surveys include open-ended responses. That’s great in terms of data, but you’re still faced with the same problem as with the open-ended responses to NPS surveys: how do you analyze all this unstructured text data? To learn how, check out this article about auto-tagging responses in SurveyMonkey with AI.

By importing information from surveys into your topic classification models, you’ll be able to find out the topics your customers are talking about in no time at all!

Product reviews are also a great source of unstructured customer feedback. Not only are they available in abundance on sites like Capterra, G2Crowd, Siftery, Yelp, Amazon, and Google Play, they’re also easy to gather using web scraping tools. These tools automatically collect specific information from different websites, so that you don’t have to spend hours copying and pasting reviews that mention your brand.

Plus, there are plenty of easy-to-use scraper tools available that let you build your own scrapers without writing code. Some of the best include Dexi.io, ParseHub, and Import.io. Once you have all your information in one place (an Excel sheet, a CSV file, or Google Sheets), you can use a topic classifier to detect the topics your customers are talking about most often.

Resources

You’ll want to get started with topic modeling and topic classification as soon as possible, and the good news is that you can: there are plenty of simple tools and resources available to help you.

We’ll introduce you to a selection of tools, including topic classification and topic modeling APIs, open-source libraries and SaaS APIs, to steer you in the right direction. If you want to dive deeper into how topic detection works, and practice what you’ve learned, our recommendations for papers and online courses are sure to pique your interest. Finally, we’ll provide you with some tutorials that will help you create your own topic classification and topic modeling tools.

Topic modeling APIs

Application programming interfaces (APIs) are a great way to seamlessly connect applications and extend the functionality of your apps. Luckily, plenty of topic modeling tools come with their own API, and several programming languages popular in the data science community are well suited to these machine learning models. Let’s take a closer look:

Open source

If you know how to code, there are many open source libraries for implementing a topic modeling solution from scratch. These are great because they offer flexibility and customization, and give you complete control over the whole process, from the pre-processing of data (tokenization, stopword removal, stemming, lemmatization, etc.) to feature extraction and training of the model (choosing the algorithm and its parameters).
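As a rough illustration of those pre-processing and feature-extraction steps, here is a dependency-free sketch. The stopword list and the suffix-stripping function are deliberately tiny, hypothetical stand-ins for the full stopword lists and stemmers (such as Porter) that libraries provide, and lemmatization is left out entirely.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; real projects use a full list (e.g. NLTK's)
STOPWORDS = {"the", "is", "and", "a", "an", "to", "of", "but", "for", "can", "be", "per"}


def tokenize(text):
    """Tokenization: lowercase the text and keep only runs of letters."""
    return re.findall(r"[a-z]+", text.lower())


def naive_stem(word):
    """Toy suffix stripper standing in for a real stemmer such as Porter."""
    for suffix in ("ing", "ed", "es", "s"):
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    """Tokenize, drop stopwords, and stem what is left."""
    return [naive_stem(token) for token in tokenize(text) if token not in STOPWORDS]


feedback = ("The app is simple to set up, but the cost per user license "
            "can be prohibitive for small companies.")
tokens = preprocess(feedback)

# Feature extraction: a bag of words mapping each token to its count
features = Counter(tokens)
print(tokens)
print(features)
```

Real libraries do each of these steps far more carefully, but the shape of the pipeline (text in, cleaned tokens, then counts) is the same.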

In the following section, we’ll cover some of the best libraries for topic modeling using Python and R.

Python

Python is one of the most popular programming languages for machine learning and data analysis. Its focus on code readability makes it easy to use, and it has a large community of contributors who have developed a wide range of options to implement NLP models.

One of the top choices for topic modeling in Python is Gensim, a robust library that provides a suite of tools for implementing LSA, LDA, and other topic modeling algorithms.

NLTK is a framework that is widely used for topic modeling and text classification. It provides plenty of corpora and lexical resources to use for training models, plus different tools for processing text, including tokenization, stemming, tagging, parsing, and semantic reasoning. Although NLTK can be quite slow and difficult to use, it’s the most well-known and complete NLP library out there.

spaCy is built for speed and is one of the fastest frameworks for training NLP models. Although it is less flexible and supports fewer languages than NLTK, it’s much easier to use. spaCy also provides built-in word vectors and uses deep learning to train some of its models.

Scikit-learn provides a wide variety of algorithms for building machine learning models. It has excellent documentation, and intuitive methods that make it easy to train a model for topic modeling. If you are a beginner, you’ll find lots of useful tutorials that will help you get started with topic modeling using scikit-learn.

R

R is a statistical programming language that has become quite popular among data scientists and machine learning enthusiasts. It has a very strong community and over 10,000 packages that greatly extend R core functionality.

The topicmodels package is the go-to choice for topic modeling in R. It provides an easy-to-use interface for creating LDA models and is built on top of tm, a powerful package for text mining applications.

SaaS APIs

Perhaps you don’t have time to code your own solution, or your problem doesn’t justify the costs of implementing your own machine learning software. Maybe you don’t have the resources to train and maintain your own topic classification model. Whatever your reasons, rest assured that there’s always a way with machine learning!

Machine learning is now offered as a service, one that’s simpler to use and doesn't require programming expertise. That’s right, instead of creating your own APIs and algorithms, all you have to do is configure your machine learning service with your existing data, via a user-friendly interface.

To use one of the APIs that machine learning services offer, you’ll typically use the SDKs (Software Development Kits) they provide for popular programming languages, or integrations from third parties. Other than that, the rest is smooth sailing.

Here are some machine learning services that you can try out for free:

- MonkeyLearn

- Amazon Comprehend

- IBM Watson

- Google Cloud NLP

- Aylien

- MeaningCloud

- BigML

Tutorials

We’ve explained the ins and outs of topic modeling and topic classification, and now it’s time to put what you’ve learned into practice. But, before diving headfirst into topic analysis, we’ve put together a series of tutorials to help you create a model that will deliver the best possible results.

Whether you’re using open source libraries to create your own topic modeling and topic classification models, or using one of the neatly packaged machine learning services we mentioned earlier, we’ve provided step-by-step explanations below.

First, we’ll go into more detail about building topic classification and topic modeling models using Python and R, then we’ll show you how easy it is to build similar models using MonkeyLearn.

Tutorials using open source libraries

Topic Classification in Python

In this tutorial, you’ll learn how to train a text classifier using NLTK and Scikit-learn.

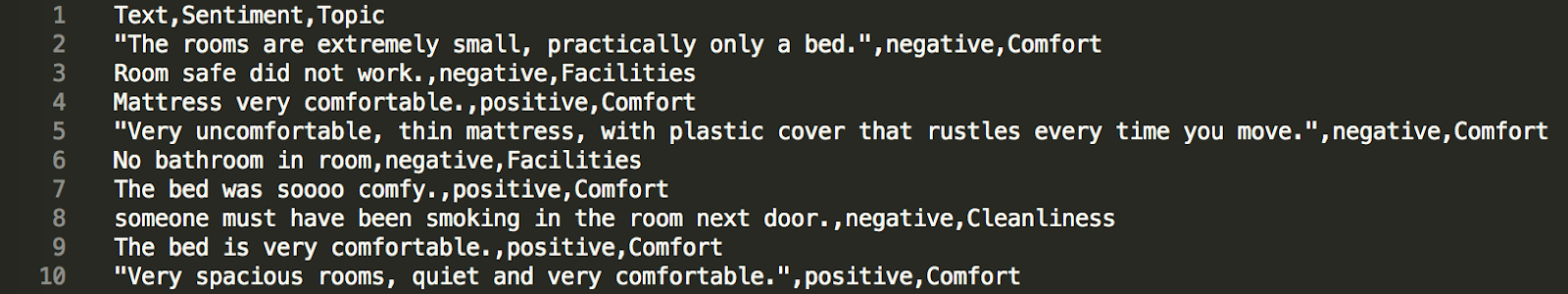

To get started, download this CSV with sample data. This dataset contains 223 hotel reviews, and it has three columns: review texts, review sentiments (e.g. Positive, Negative, and Neutral), and the review topics (e.g. Location, Comfort, Facilities, Cleanliness, etc). You’ll be using this data to train your classifier:

Next, install the following libraries using pip:

```shell
pip install nltk
pip install scikit-learn
```

Then, create a list of lists that represents the rows and columns of the CSV file:

```python
import csv

# Read every row of the CSV into a list: [text, sentiment, topic]
with open('reviews.csv', newline='') as f:
    reviews = list(csv.reader(f))

print(reviews)
```

This generates the following output:

```
[
  ['Text', 'Sentiment', 'Topic'],
  ['Room safe did not work.', 'negative', 'Facilities'],
  ['Mattress very comfortable.', 'positive', 'Comfort'],
  ['No bathroom in room', 'negative', 'Facilities'],
  ...
]
```

Now, you’re ready to do some data cleaning. Keep in mind that this is a key step; you cannot build an accurate model with dirty data. With this in mind, we’ve defined a function that uses NLTK to filter out stopwords, remove non-alphabetic characters, and stem each word to its root:

```python
import re

import nltk
from nltk.corpus import stopwords
from nltk.stem.porter import PorterStemmer
from nltk.tokenize import word_tokenize

# The tokenizer needs the Punkt models (newer NLTK releases use 'punkt_tab')
nltk.download('punkt')
nltk.download('punkt_tab')

# Also download the list of stopwords to filter out
nltk.download('stopwords')

stemmer = PorterStemmer()
# Build the stopword set once instead of reloading the list for every word
english_stopwords = set(stopwords.words('english'))


def process_text(text):
    # Make all the strings lowercase and remove non-alphabetic characters
    text = re.sub('[^A-Za-z]', ' ', text.lower())

    # Tokenize the text, that is, separate every sentence into a list of words
    # Since the text is already split into sentences, you don't have to call sent_tokenize
    tokenized_text = word_tokenize(text)

    # Remove the stopwords and stem each word to its root
    clean_text = [
        stemmer.stem(word) for word in tokenized_text
        if word not in english_stopwords
    ]

    # Remember, this final output is a list of words
    return clean_text
```

Next, use the process_text function to process the data:

```python
# Remove the first row, since it only has the column labels
reviews = reviews[1:]

texts = [row[0] for row in reviews]
topics = [row[2] for row in reviews]

# Process the texts so they are ready for training,
# but join each list of words back into a string to feed it to scikit-learn
texts = [" ".join(process_text(text)) for text in texts]
```

This process transformed the reviews into strings of stemmed words without any stopwords, that is, words that don’t provide value to a classification model (e.g. ‘the’, ‘is’, or ‘and’). Now, the hotel reviews look like this:

```
['room extrem small practic bed',
 'room safe work',
 'mattress comfort',
 'uncomfort thin mattress plastic cover rustl everi time move',
 'bathroom room',
 ...
]
```

Now that we have cleaned the data, we can go ahead and train our classifier using scikit-learn. First, we need to transform the texts into something a machine learning algorithm can understand: numbers. Here, each text becomes a vector of word counts over the 1,000 most frequent words in the dataset:

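```python
from sklearn.feature_extraction.text import CountVectorizer

# Keep only the 1,000 most frequent words as features
matrix = CountVectorizer(max_features=1000)

# Learn the vocabulary and turn each text into a row of word counts
vectors = matrix.fit_transform(texts).toarray()
```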

Next, we’ll partition the data into two different groups: data that will be used for training the model (AKA training data) and data that will be used for understanding how accurate it is (AKA testing data):

```python
from sklearn.model_selection import train_test_split

vectors_train, vectors_test, topics_train, topics_test = train_test_split(vectors, topics)
```
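By default, train_test_split holds out 25% of the data for testing and shuffles it differently on every run. Here is a minimal sketch of two optional parameters worth knowing, shown on toy data rather than the reviews: random_state makes the split reproducible, and stratify keeps each topic’s share the same in both sets, which helps when some topics have only a few examples.

```python
from sklearn.model_selection import train_test_split

# Toy data: eight samples, two balanced topics
X = list(range(8))
y = ["Comfort"] * 4 + ["Facilities"] * 4

X_train, X_test, y_train, y_test = train_test_split(
    X, y,
    test_size=0.25,   # the default share, written out explicitly
    random_state=42,  # same split on every run
    stratify=y,       # preserve the topic proportions in both sets
)

print(len(X_train), len(X_test))  # 6 2
```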

Finally, the exciting part: training the machine learning model! We’ll train a Naive Bayes classifier that will be able to predict the topics of hotel reviews:

```python
from sklearn.naive_bayes import GaussianNB

classifier = GaussianNB()
classifier.fit(vectors_train, topics_train)
```

And voilà! You have trained a text classifier with machine learning! But how accurate is this model? Let’s check by using the testing data to obtain performance metrics:

```python
from sklearn.metrics import classification_report

# Predict with the testing set
topics_pred = classifier.predict(vectors_test)

# ...and measure the accuracy of the results
print(classification_report(topics_test, topics_pred))
```

This outputs the precision, recall, and F1-score for the different categories of the classifier:

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Cleanliness | 0.43 | 0.43 | 0.43 | 7 |
| Comfort | 0.52 | 0.57 | 0.54 | 23 |
| Facilities | 0.55 | 0.50 | 0.52 | 22 |
| avg / total | 0.52 | 0.52 | 0.52 | 52 |

Besides the performance metrics, this output shows the number of test samples in each category (known as support). Taking into account the small size of the training dataset, the classifier does reasonably well for a first attempt, although there is clearly room for improvement.
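If it helps to see where these numbers come from, here is a small, dependency-free sketch of the per-class formulas: precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. Support is TP + FN, the number of evaluated samples whose true label is that class. The labels below are made up.

```python
def per_class_metrics(y_true, y_pred, label):
    """Return (precision, recall, f1, support) for one class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)  # correctly tagged
    fp = sum(1 for t, p in pairs if t != label and p == label)  # wrongly tagged as label
    fn = sum(1 for t, p in pairs if t == label and p != label)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1, tp + fn


y_true = ["Comfort", "Comfort", "Facilities", "Cleanliness", "Facilities"]
y_pred = ["Comfort", "Facilities", "Facilities", "Comfort", "Facilities"]

print(per_class_metrics(y_true, y_pred, "Comfort"))  # (0.5, 0.5, 0.5, 2)
```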

Keep in mind that training a machine learning model is an iterative process. From here, you should experiment and tweak the model to get better results. For example, you can add more training data so the algorithm has more information to learn from. Then, you can experiment with the maximum number of features (the max_features parameter) to find the optimal setting for this particular model. You could also try another machine learning algorithm (such as SVM) to see if it improves the performance metrics.
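One way to run these experiments systematically is scikit-learn’s GridSearchCV combined with a Pipeline. The sketch below is an illustration under assumptions: it swaps in MultinomialNB, a Naive Bayes variant designed for word-count features, alongside a linear SVM (LinearSVC), tries several max_features values, and uses a tiny made-up dataset in place of the tutorial’s texts and topics.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.model_selection import GridSearchCV
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import Pipeline
from sklearn.svm import LinearSVC

# Vectorizer and classifier chained so both are tuned together
pipeline = Pipeline([
    ("vect", CountVectorizer()),
    ("clf", MultinomialNB()),
])

# Try every combination of vocabulary size and algorithm
param_grid = {
    "vect__max_features": [500, 1000, None],
    "clf": [MultinomialNB(), LinearSVC()],
}

# Stand-in data; with the tutorial you would pass `texts` and `topics` instead
demo_texts = [
    "room safe work", "bathroom room", "tv remote broken",
    "mattress comfort", "uncomfort thin mattress", "pillow soft comfort",
    "room clean", "dirti bathroom", "clean shower",
]
demo_topics = ["Facilities"] * 3 + ["Comfort"] * 3 + ["Cleanliness"] * 3

# 3-fold cross-validation, scored by macro-averaged F1 across topics
search = GridSearchCV(pipeline, param_grid, cv=3, scoring="f1_macro")
search.fit(demo_texts, demo_topics)
print(search.best_params_)
```

Note that the pipeline takes raw strings, so there is no need to call toarray(); the best combination is refit on all the data and available through search.predict.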

This may be a simple classifier, but you have completed all the necessary steps for training a machine learning model from scratch, that is, cleaning and processing data, training a model, and testing its performance.

If you want to keep practicing your skills, you can follow the same step-by-step process with the same dataset to train a classifier for sentiment analysis. Instead of using topics to tag each review, use sentiment categories to train your model.

Topic Modeling in Python

Now, it’s time to build a model for topic modeling! We’ll be using the preprocessed data from the previous tutorial. Our weapon of choice this time around is Gensim, a simple library that’s perfect for getting started with topic modeling.

So, as a first step, let’s install Gensim in our local environment:

```shell
pip install gensim
```

Now, pick up from halfway through the classifier tutorial, where we turned the reviews into strings of stemmed words without any stopwords:

```
['room extrem small practic bed',
 'room safe work',
 'mattress comfort',
 'uncomfort thin mattress plastic cover rustl everi time move',
 'bathroom room',
 ...
]
```

With this list, we can use Gensim to build a dictionary that maps each word to an integer ID, and then convert each review into a bag-of-words vector:

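```python
from gensim import corpora

# Split each preprocessed string back into a list of words
# (the texts are already cleaned and stemmed, so don't run process_text again)
texts = [text.split() for text in texts]

# Map every word to an integer ID
dictionary = corpora.Dictionary(texts)

# Represent each review as a list of (word_id, count) pairs
corpus = [dictionary.doc2bow(text) for text in texts]
```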
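Conceptually, the dictionary and corpus are simple structures. Here is a plain-Python sketch of the same idea, which is not Gensim’s implementation: each distinct word gets an integer ID, and each document becomes a list of (word_id, count) pairs. The IDs Gensim assigns may differ.

```python
from collections import Counter


def build_dictionary(tokenized_docs):
    """Assign an integer ID to every distinct token, in order of first appearance."""
    token2id = {}
    for doc in tokenized_docs:
        for token in doc:
            token2id.setdefault(token, len(token2id))
    return token2id


def to_bow(doc, token2id):
    """Return sorted (token_id, count) pairs for one document, skipping unknown tokens."""
    counts = Counter(token2id[token] for token in doc if token in token2id)
    return sorted(counts.items())


docs = [["room", "safe", "work"], ["bathroom", "room"], ["room", "room", "bed"]]
token2id = build_dictionary(docs)
print(token2id)  # {'room': 0, 'safe': 1, 'work': 2, 'bathroom': 3, 'bed': 4}
print([to_bow(doc, token2id) for doc in docs])
# [[(0, 1), (1, 1), (2, 1)], [(0, 1), (3, 1)], [(0, 2), (4, 1)]]
```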

Next, we can use this dictionary to train an LDA model. We’ll instruct Gensim to find three topics (clusters) in the data:

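```python
from gensim import models

# Train an LDA model that looks for three topics, making 15 passes over the corpus
model = models.ldamodel.LdaModel(corpus, num_topics=3, id2word=dictionary, passes=15)

# Show the three most representative words for each topic
topics = model.print_topics(num_words=3)
for topic in topics:
    print(topic)
```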

And that’s it! We have trained our first model for topic modeling! The code will return some words that represent the three topics:

```
(0, '0.034*"room" + 0.021*"bathroom" + 0.013*"night"')
(1, '0.056*"bed" + 0.043*"room" + 0.024*"comfort"')
(2, '0.059*"room" + 0.033*"clean" + 0.023*"shower"')
```

For each topic cluster, we can see how the LDA algorithm surfaces words that look a lot like keywords for our original topics (Facilities, Comfort, and Cleanliness).
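Each term in these strings has the form weight*"word", where the weight is the word’s probability within that topic; “room” likely appears in all three topics because it is so common across the reviews. Here is a small sketch for turning the printed strings into (word, weight) pairs. If you still have the trained model, Gensim’s model.show_topic(topic_id, topn=3) returns such pairs directly.

```python
import re


def parse_topic(topic_string):
    """Turn '0.034*"room" + 0.021*"bathroom"' into [('room', 0.034), ('bathroom', 0.021)]."""
    return [(word, float(weight))
            for weight, word in re.findall(r'([\d.]+)\*"([^"]+)"', topic_string)]


# The output printed above
printed = [
    (0, '0.034*"room" + 0.021*"bathroom" + 0.013*"night"'),
    (1, '0.056*"bed" + 0.043*"room" + 0.024*"comfort"'),
    (2, '0.059*"room" + 0.033*"clean" + 0.023*"shower"'),
]

for topic_id, text in printed:
    print(topic_id, parse_topic(text))
```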

Since this example uses just a few training samples, the topics it finds aren’t very meaningful yet, but it illustrates the basics of topic modeling with Gensim.

Topic Modeling in R

If you want to do topic modeling in R, we suggest checking out the Tidy Topic Modeling tutorial for the topicmodels package. It's straightforward to follow, and it explains the basics for doing topic modeling using R.

How to Create a Topic Classification Model with MonkeyLearn

If you’re not familiar with the programming languages we mentioned above, or you don’t have the resources to implement these models, fear not. With MonkeyLearn, building topic classification models is easy, fast, and affordable (in fact, so affordable you can get started for free!).

To get started, sign up for free and follow the steps below to discover how machine learning models can simplify your topic sorting tasks.

1. Create a new classifier

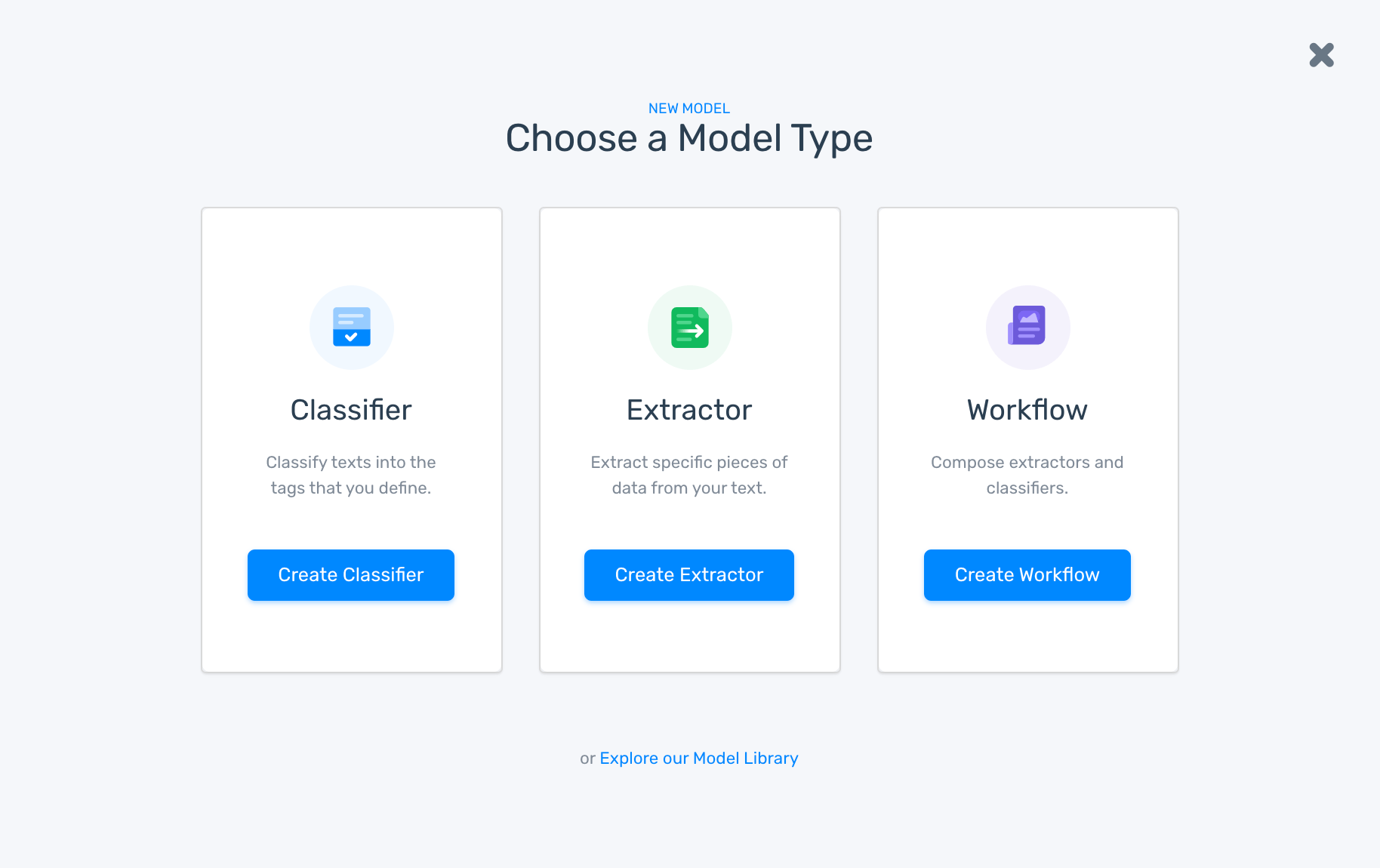

Go to the dashboard, click on ‘create a model’ and choose your model type, in this case a classifier:

2. Select how you want to classify your data

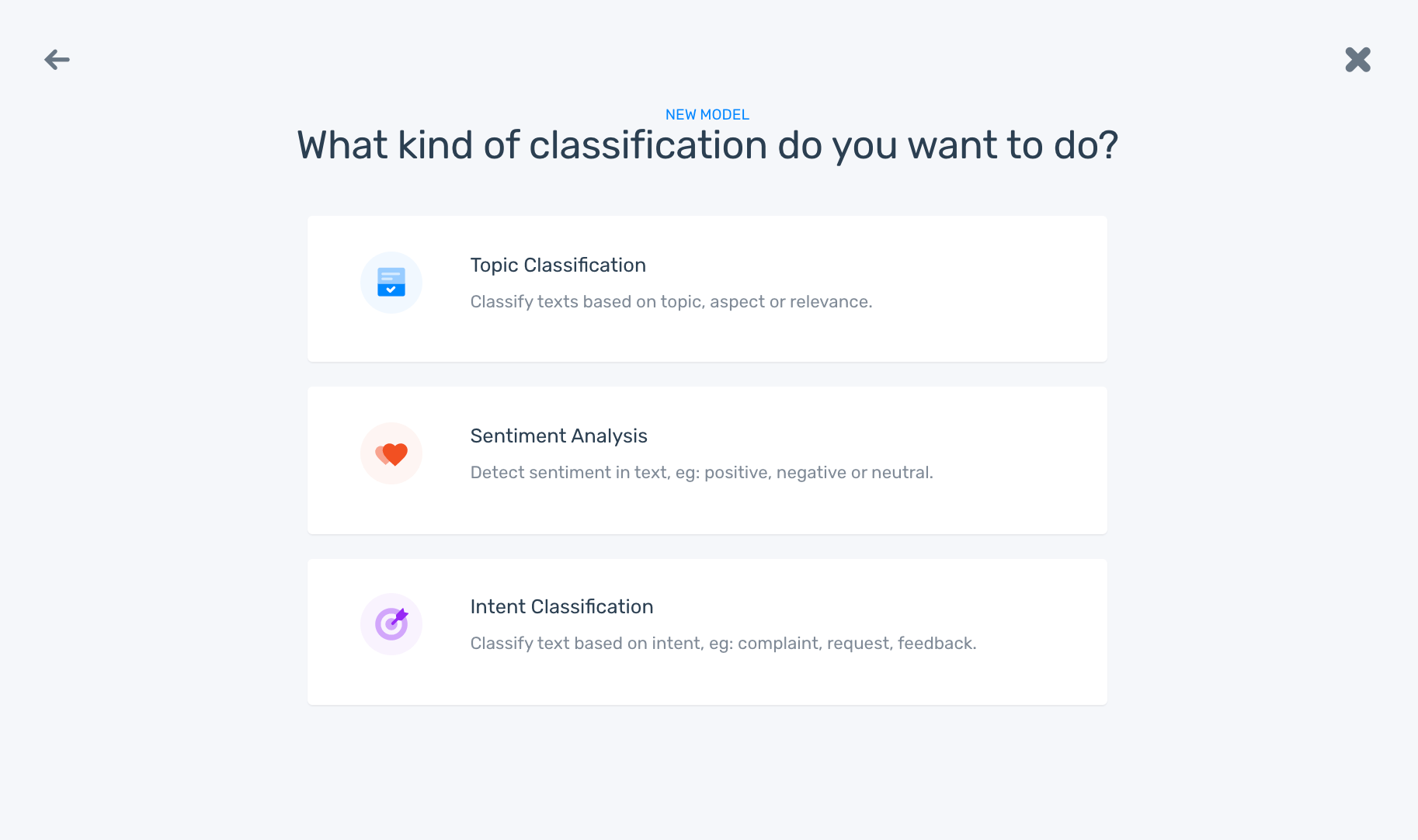

To create a model that classifies texts based on topic, aspect or relevance, click on topic classification:

3. Import your training data

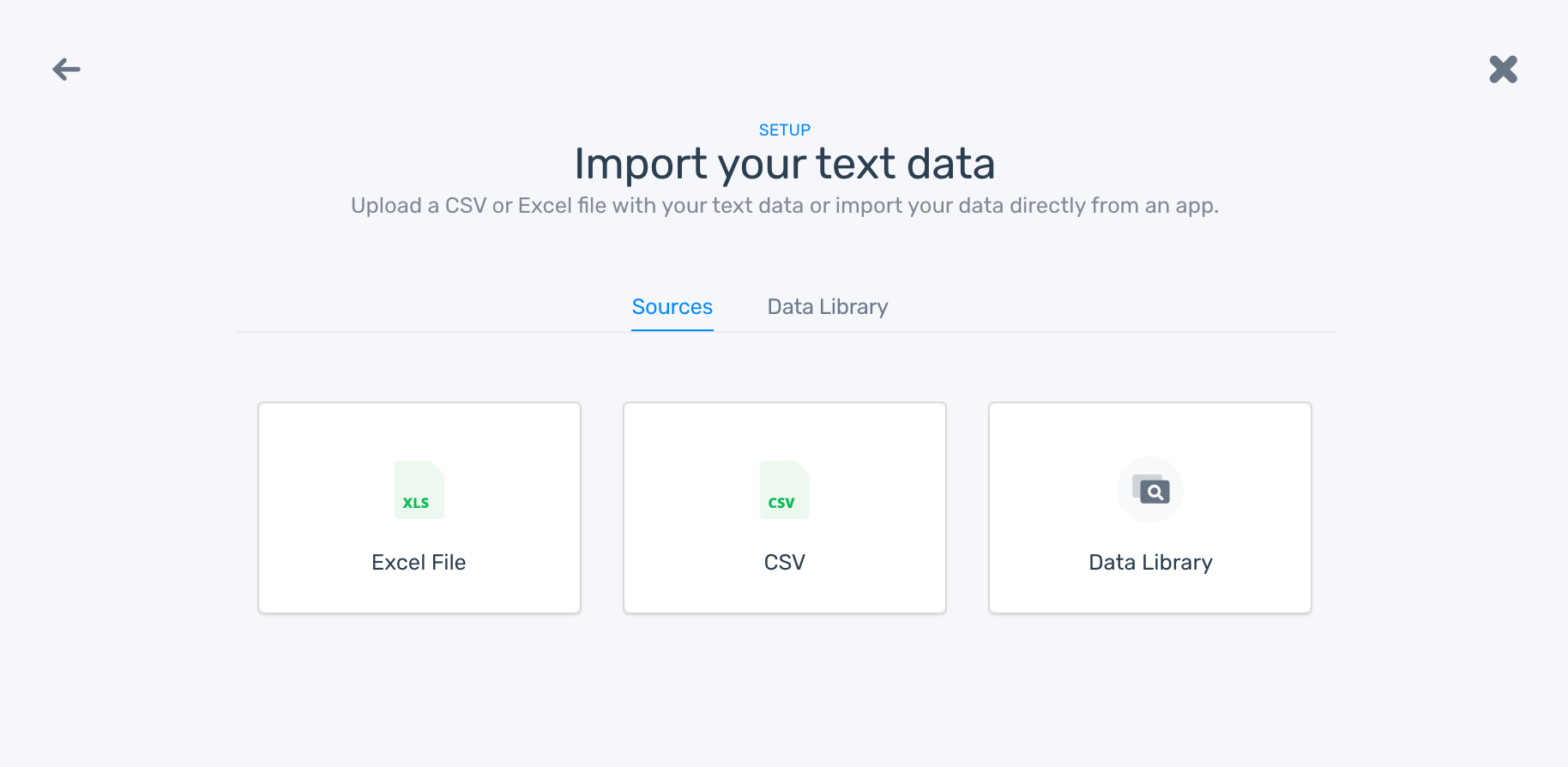

You’ll need to train your topic classification model using relevant data – data that mentions aspects about your product or service. You can upload your data as an Excel or CSV file, or from third-party apps such as Zendesk, Freshdesk, Twitter, Gmail, or RSS feeds:

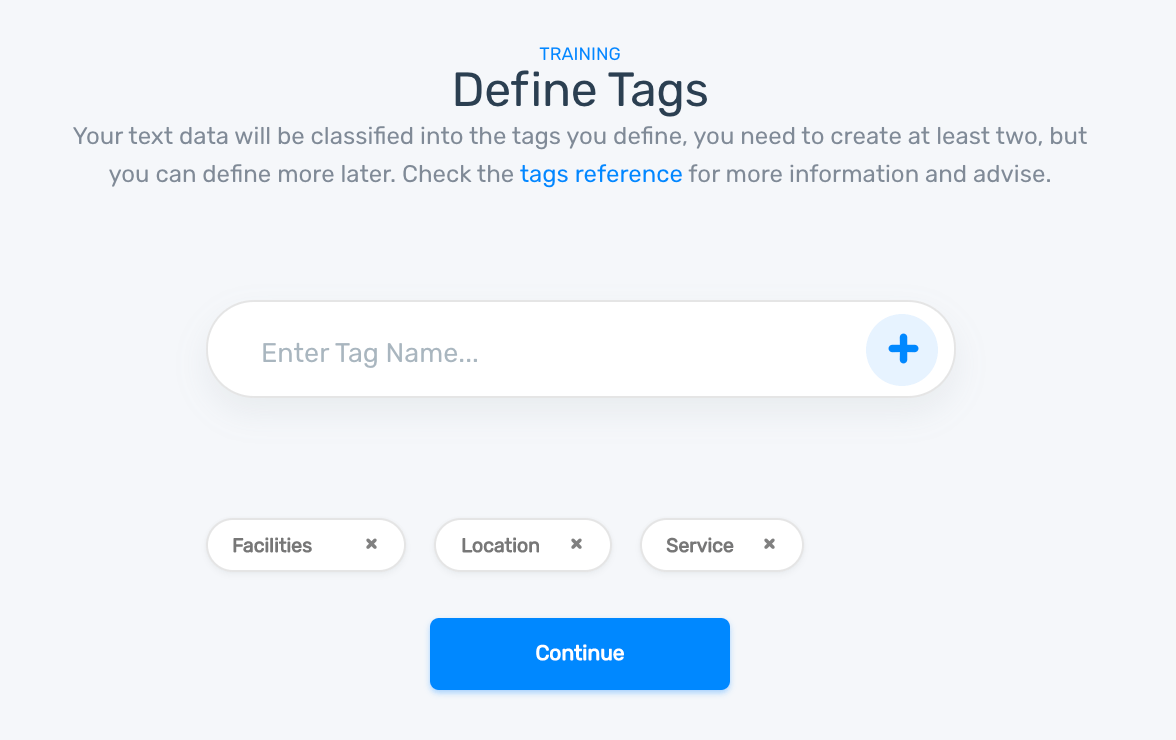

4. Define the tags for your classifier

You will need to define at least two tags to start training your classifier, and you can add more later on. We recommend using no more than 10 tags to begin training your model, as well as using broad tags for which you have enough examples. Check out this article on best practices for choosing and defining your tags.

5. Start training your topic classification model

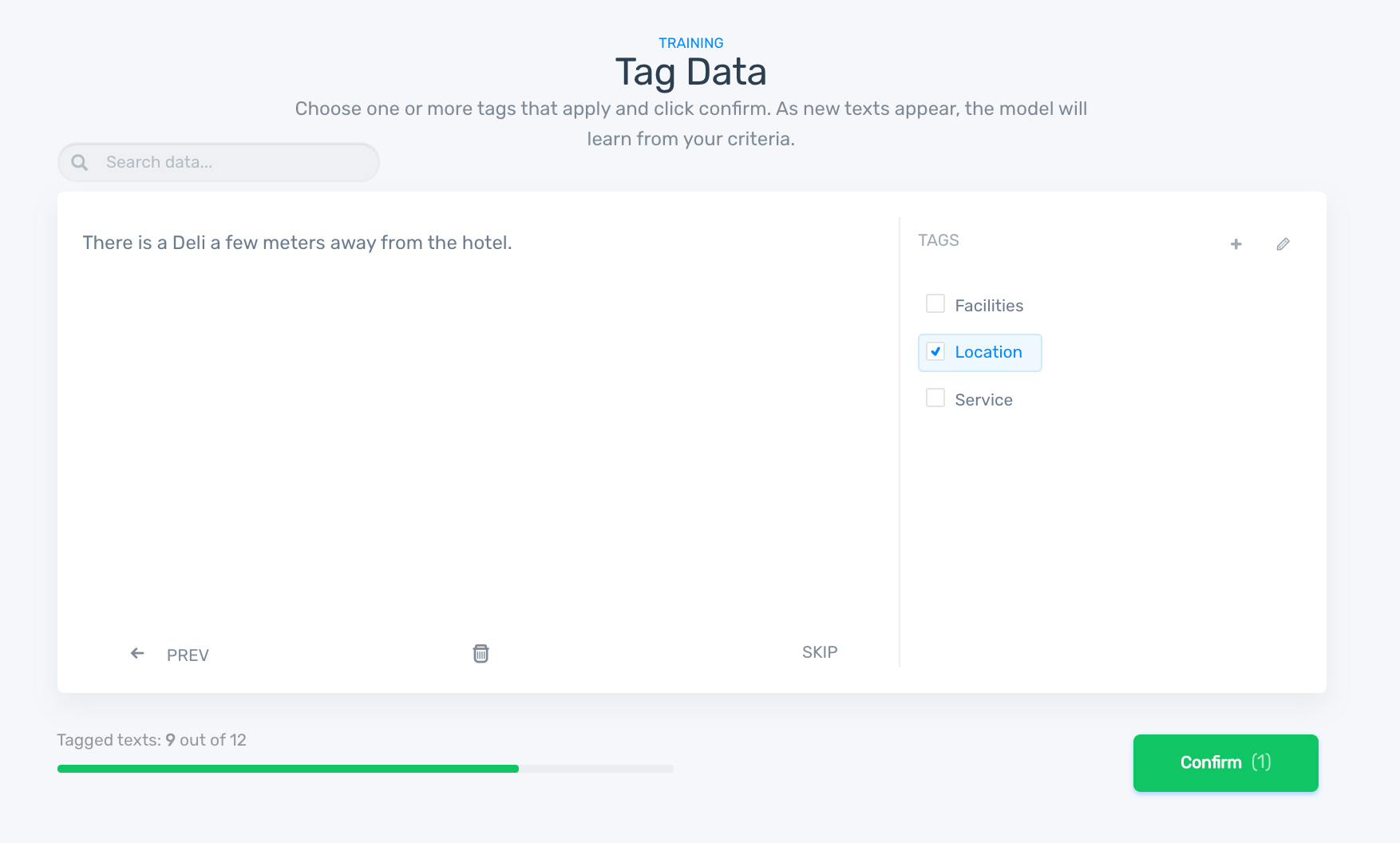

You’ll need to train your model manually, so that it knows how to label each text. Check out the example below to see how each text is allocated a tag (topic). Once you’ve tagged enough examples for each tag, you’ll notice that your model starts to make predictions on its own:

Remember that the more training examples you feed your model, the smarter it will be at making accurate predictions.

6. Test your classifier

Once you’ve tagged enough examples, click on ‘run’ then ‘demo’ to see how well your model is performing. You can use new data – data that the model hasn’t seen – or you can have a go at writing your own text, to test your topic classifier. In this example below, notice what happens when you write your text and click on ‘classify’:

If you think your model needs refining, go back to the ‘build’ tab to continue training your model. MonkeyLearn also provides tools that can help you understand how your model is performing. Go to the ‘stats’ section and you’ll notice statistics such as precision and recall, as well as a keyword cloud with the most common terms and expressions for each tag. You’ll also find ‘false positives’ or ‘false negatives’, in other words, texts that were tagged incorrectly, which you can retag to help your model correct its own errors in the future.

7. Integrate the topic classifier

You’ve made it to the final step! Once you’re happy with the performance of your topic classification model, you’re ready to use it. But how do you sync the data you want to analyze with your new model? Well, you have three options:

- You could opt for batch processing – classify text in a batch – by going to ‘run’ then ‘batch’, and uploading a CSV or Excel file. Your classifier will analyze this data and send you a new file with its predictions.

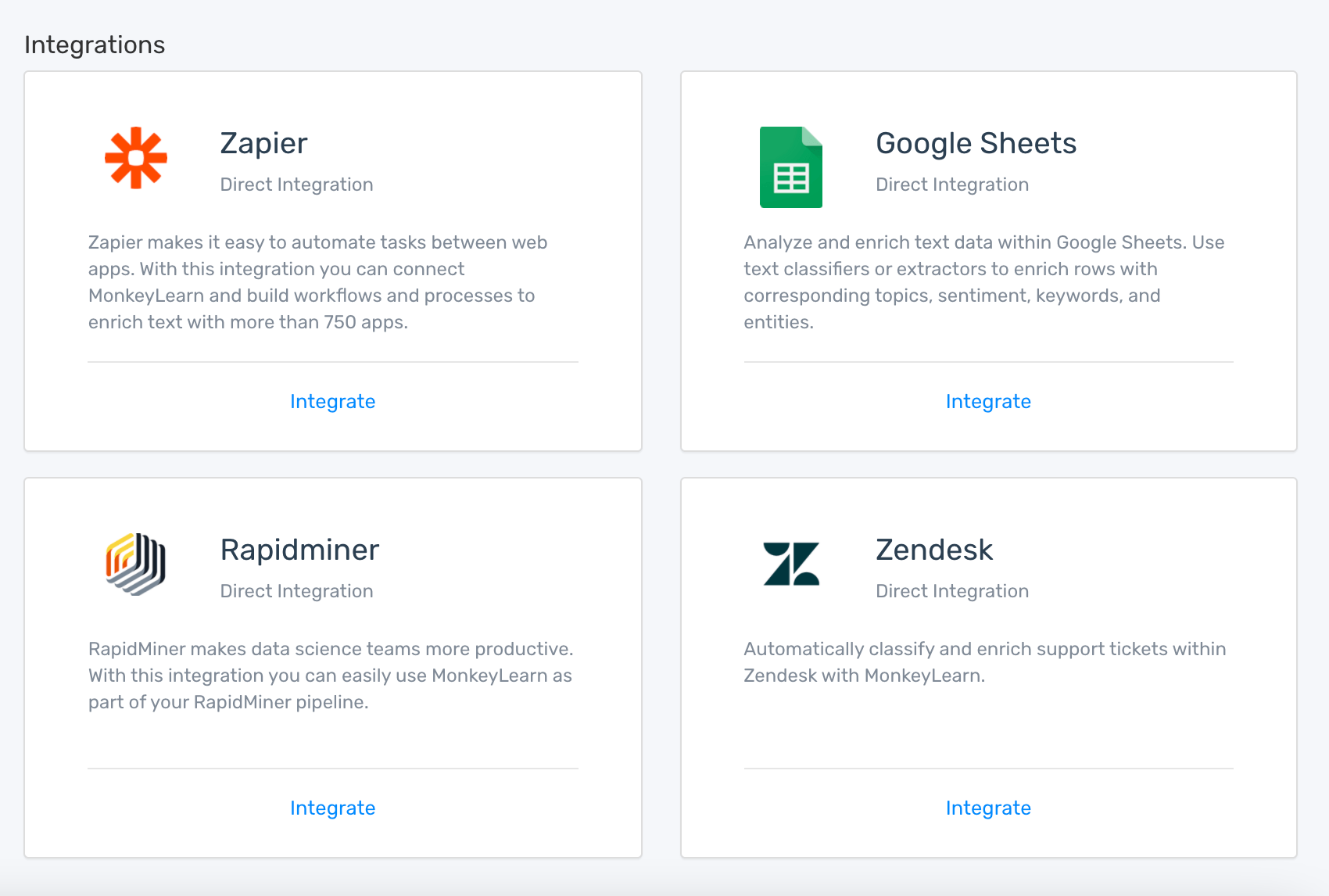

- MonkeyLearn also offers integrations that automatically import new text data into your classifier. You could integrate Google Sheets, Zapier, Rapidminer, and Zendesk, for example, all without having to type a single line of code (see example below).

- If you’re a dab hand at coding, you’ll probably opt for MonkeyLearn's API to import new data.
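The batch option follows a simple pattern: read a file, classify each row, and write the rows back out with a prediction column. Here is a local sketch of that pattern using Python’s csv module; it is not how MonkeyLearn works internally, and classify is a hypothetical keyword-based stand-in for a real model.

```python
import csv
import io


def classify(text):
    """Hypothetical stand-in for a trained model's predict call."""
    text = text.lower()
    if "clean" in text or "dirty" in text:
        return "Cleanliness"
    if "bed" in text or "mattress" in text:
        return "Comfort"
    return "Facilities"


def batch_classify(in_file, out_file, text_column="Text"):
    """Copy every row from in_file to out_file, adding a 'Predicted topic' column."""
    reader = csv.DictReader(in_file)
    writer = csv.DictWriter(out_file, fieldnames=reader.fieldnames + ["Predicted topic"])
    writer.writeheader()
    for row in reader:
        row["Predicted topic"] = classify(row[text_column])
        writer.writerow(row)


# In-memory files keep the example self-contained; use open(...) for real files
source = io.StringIO("Text\nRoom safe did not work.\nMattress very comfortable.\n")
result = io.StringIO()
batch_classify(source, result)
print(result.getvalue())
```

With the scikit-learn classifier from the Python tutorial, classify could instead return classifier.predict(matrix.transform([" ".join(process_text(text))]).toarray())[0].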

Wrap-up

Topic modeling and topic classification are very different approaches to machine learning, and the one you choose will depend on the problem you’re trying to solve, as well as the resources available to you.

In this article, we provided an overview of the algorithms available for different purposes and outlined several tools for both beginners and programming experts. By understanding how topic modeling and topic classification work, you’ll be better equipped to choose an approach that suits you and your business. Whichever technique you choose to implement, you’ll be one step ahead on your journey towards better decision making, whether you work in sales, marketing, customer support, or product.

Topic analysis is already being used to streamline and improve processes, as well as save time and, ultimately, help businesses grow. To get started on your machine learning journey, why not try out topic classification?

MonkeyLearn offers ready-to-use topic classification models that are easy to use and implement, without the need to code. And if you are looking to use a custom set of tags or a model trained with your own data, you could also train a custom topic classifier in a few simple steps. Sign up to MonkeyLearn for free, request a demo, and our team will help you get started with topic analysis.

Federico Pascual

September 26th, 2019