What is Text Analysis? A Beginner’s Guide

If you receive huge amounts of unstructured data in the form of text (emails, social media conversations, chats), you’re probably aware of the challenges that come with analyzing this data.

Manually processing and organizing text data is slow, tedious, and error-prone, and it can be expensive if you need to hire extra staff to sort through text.


In this guide, learn more about what text analysis is, how to perform text analysis using AI tools, and why it’s more important than ever to automatically analyze your text in real time.

- Text Analysis Basics

- Methods & Techniques

- How Does Text Analysis Work?

- How to Analyze Text Data

- Use Cases and Applications

- Tools and Resources

- Tutorial

What Is Text Analysis?

Text analysis (TA) is a machine learning technique used to automatically extract valuable insights from unstructured text data. Companies use text analysis tools to quickly digest online data and documents, and transform them into actionable insights.

You can use text analysis to extract specific information, like keywords, names, or company information from thousands of emails, or categorize survey responses by sentiment and topic.

Text Analysis vs. Text Mining vs. Text Analytics

Firstly, let's dispel the myth that text mining and text analysis are two different processes. The terms are often used interchangeably to explain the same process of obtaining data through statistical pattern learning. To avoid any confusion here, let's stick to text analysis.

So, text analytics vs. text analysis: what's the difference?

Text analysis delivers qualitative results and text analytics delivers quantitative results. If a machine performs text analysis, it identifies important information within the text itself, but if it performs text analytics, it reveals patterns across thousands of texts, resulting in graphs, reports, tables etc.

Let's say a customer support manager wants to know how many support tickets were solved by individual team members. In this instance, they'd use text analytics to create a graph that visualizes individual ticket resolution rates.

However, it's likely that the manager also wants to know what proportion of tickets resulted in a positive or negative outcome.

By analyzing the text within each ticket, and subsequent exchanges, customer support managers can see how each agent handled tickets, and whether customers were happy with the outcome.

Basically, the challenge in text analysis is decoding the ambiguity of human language, while in text analytics it's detecting patterns and trends from the numerical results.

Why Is Text Analysis Important?

When you put machines to work on organizing and analyzing your text data, the insights and benefits are huge.

Let's take a look at some of the advantages of text analysis, below:

Text Analysis Is Scalable

Text analysis tools allow businesses to structure vast quantities of information, like emails, chats, social media, support tickets, documents, and so on, in seconds rather than days, so you can redirect extra resources to more important business tasks.

Analyze Text in Real-time

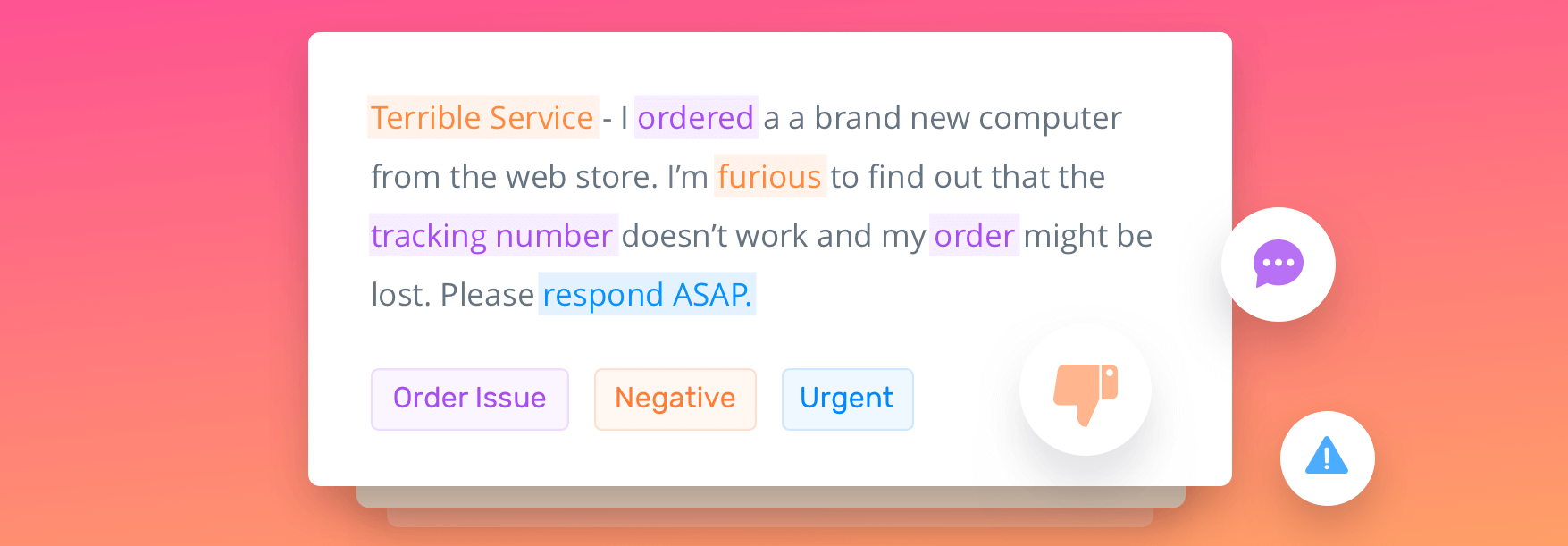

Businesses are inundated with information and customer comments can appear anywhere on the web these days, but it can be difficult to keep an eye on it all. Text analysis is a game-changer when it comes to detecting urgent matters, wherever they may appear, 24/7 and in real time. By training text analysis models to detect expressions and sentiments that imply negativity or urgency, businesses can automatically flag tweets, reviews, videos, tickets, and the like, and take action sooner rather than later.

AI Text Analysis Delivers Consistent Criteria

Humans make errors. Fact. And the more tedious and time-consuming a task is, the more errors they make. By training text analysis models to your needs and criteria, algorithms apply the same criteria to every text, so results are more consistent than manual tagging at scale.

Text Analysis Methods & Techniques

There are basic and more advanced text analysis techniques, each used for different purposes. First, learn about the simpler text analysis techniques and examples of when you might use each one.

- Text Classification

- Text Extraction

- Word Frequency

- Collocation

- Concordance

- Word Sense Disambiguation

- Clustering

Text Classification

Text classification is the process of assigning predefined tags or categories to unstructured text. It's considered one of the most useful natural language processing techniques because it's so versatile and can organize, structure, and categorize pretty much any form of text to deliver meaningful data and solve problems. Natural language processing (NLP) is a field of artificial intelligence, drawing heavily on machine learning, that allows computers to break down and understand text much as a human would.

Below, we're going to focus on some of the most common text classification tasks, which include sentiment analysis, topic modeling, language detection, and intent detection.

Sentiment Analysis

Customers freely leave their opinions about businesses and products in customer service interactions, on surveys, and all over the internet. Sentiment analysis uses machine learning algorithms to automatically read text and classify it by opinion polarity (positive, negative, neutral). More advanced models go beyond polarity to detect the feelings and emotions of the writer, and sometimes even context and sarcasm.

For example, by using sentiment analysis companies are able to flag complaints or urgent requests, so they can be dealt with immediately – even avert a PR crisis on social media. Sentiment classifiers can assess brand reputation, carry out market research, and help improve products with customer feedback.


Topic Analysis

Another common example of text classification is topic analysis (or topic modeling), which automatically organizes text by subject or theme. For example:

“The app is really simple and easy to use”

If we are using topic categories, like Pricing, Customer Support, and Ease of Use, this product feedback would be classified under Ease of Use.


Intent Detection

Text classifiers can also be used to detect the intent of a text. Intent detection or intent classification is often used to automatically understand the reason behind customer feedback. Is it a complaint? Or is a customer writing with the intent to purchase a product? Machine learning can read chatbot conversations or emails and automatically route them to the proper department or employee.


Text Extraction

Text extraction is another widely used text analysis technique that extracts pieces of data that already exist within any given text. You can extract things like keywords, prices, company names, and product specifications from news reports, product reviews, and more.

You can automatically populate spreadsheets with this data or perform extraction in concert with other text analysis techniques to categorize and extract data at the same time.

Keyword Extraction

Keywords are the most used and most relevant terms within a text, words and phrases that summarize the contents of text. [Keyword extraction](https://monkeylearn.com/keyword-extraction/) can be used to index data to be searched and to generate word clouds (a visual representation of text data).


Entity Recognition

A named entity recognition (NER) extractor finds entities in text data, such as people, companies, or locations. Results are labeled with the corresponding entity type.

Word Frequency

Word frequency is a text analysis technique that measures the most frequently occurring words or concepts in a given text. Raw counts are often weighted with the numerical statistic TF-IDF (term frequency-inverse document frequency), which gives less weight to words that are common across all documents.

You might apply this technique to analyze the words or expressions customers use most frequently in support conversations. For example, if the word 'delivery' appears most often in a set of negative support tickets, this might suggest customers are unhappy with your delivery service.
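To make the 'delivery' example concrete, here is a minimal sketch that counts raw word occurrences using only Python's standard library. The tickets are invented for illustration, and the sketch uses plain counts rather than TF-IDF weighting.

```python
from collections import Counter
import re

# Hypothetical negative support tickets, invented for illustration.
tickets = [
    "My delivery is late again",
    "Delivery took two weeks and the box was damaged",
    "Still waiting for my delivery",
]

def word_counts(texts):
    """Count lowercase word occurrences across a list of texts."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z']+", text.lower()))
    return counts

counts = word_counts(tickets)
print(counts.most_common(1))  # [('delivery', 3)]
```

In practice you would usually remove stopwords first, otherwise words like 'the' and 'my' tend to dominate the counts.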

Collocation

Collocation helps identify words that commonly co-occur. For example, in customer reviews on a hotel booking website, the words 'air' and 'conditioning' are more likely to co-occur rather than appear individually. Bigrams (two adjacent words e.g. 'air conditioning' or 'customer support') and trigrams (three adjacent words e.g. 'out of office' or 'to be continued') are the most common types of collocation you'll need to look out for.

Collocation can be helpful to identify hidden semantic structures and improve the granularity of the insights by counting bigrams and trigrams as one word.
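As a rough sketch, bigrams can be counted by pairing each word with the word that follows it. The hotel reviews below are made up for this example, and real collocation tools typically add statistical tests on top of raw counts.

```python
from collections import Counter
import re

# Hypothetical hotel reviews, invented for illustration.
reviews = [
    "The air conditioning was broken",
    "Great room but the air conditioning was noisy",
    "Customer support fixed our air conditioning quickly",
]

def bigram_counts(texts):
    """Count pairs of adjacent words (bigrams) across a list of texts."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z]+", text.lower())
        counts.update(zip(tokens, tokens[1:]))
    return counts

bigrams = bigram_counts(reviews)
print(bigrams.most_common(1))  # [(('air', 'conditioning'), 3)]
```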

Concordance

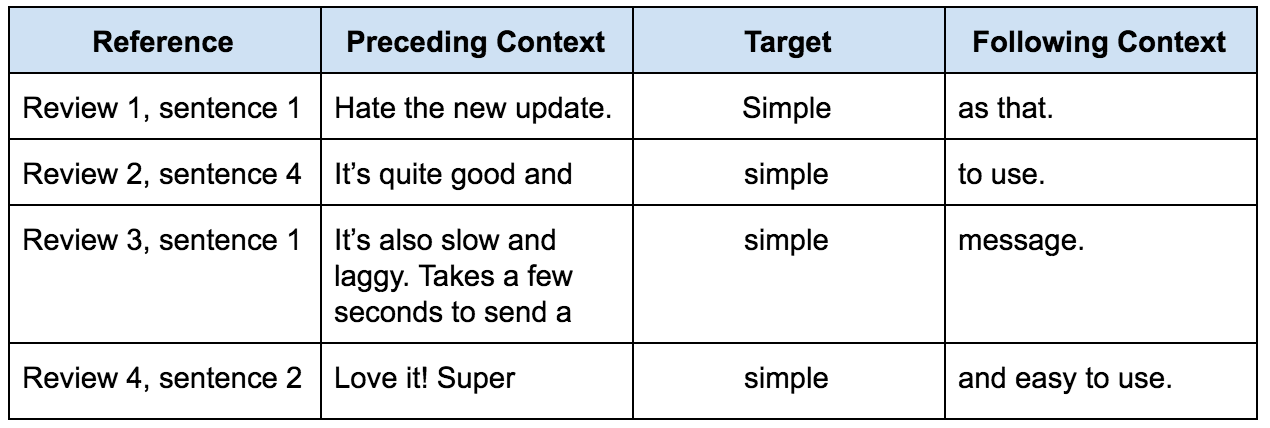

Concordance helps identify the context and instances of words or a set of words. For example, the following is the concordance of the word “simple” in a set of app reviews:

In this case, the concordance of the word “simple” can give us a quick grasp of how reviewers are using this word. It can also be used to decode the ambiguity of the human language to a certain extent, by looking at how words are used in different contexts, as well as being able to analyze more complex phrases.
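A concordance view is simple to sketch yourself: find every occurrence of the keyword and keep a few words of context on each side. The function name `concordance`, the `width` parameter, and the sample reviews are all assumptions made for this illustration.

```python
def concordance(texts, keyword, width=2):
    """Return (left context, hit, right context) for each occurrence of keyword."""
    lines = []
    for text in texts:
        tokens = text.split()
        for i, token in enumerate(tokens):
            if token.lower().strip(".,!?") == keyword:
                left = " ".join(tokens[max(0, i - width):i])
                right = " ".join(tokens[i + 1:i + 1 + width])
                lines.append((left, token, right))
    return lines

# Hypothetical app reviews, invented for illustration.
app_reviews = [
    "The app is really simple and easy to use",
    "Setup was simple but syncing is slow",
]

for left, hit, right in concordance(app_reviews, "simple"):
    print(f"{left:>20} [{hit}] {right}")
```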

Word Sense Disambiguation

It's very common for a word to have more than one meaning, which is why word sense disambiguation is a major challenge of natural language processing. Take the word 'light' for example. Is the text referring to weight, color, or an electrical appliance? Smart text analysis with word sense disambiguation can differentiate words that have more than one meaning, but only after training models to do so.

Clustering

Text clustering algorithms can group vast quantities of unstructured data. Although less accurate than classification algorithms, clustering algorithms are faster to implement, because you don't need to tag examples to train models. That means these algorithms mine information and make predictions without the use of tagged training data, an approach known as unsupervised machine learning.

Google is a great example of how clustering works. When you search for a term on Google, have you ever wondered how it takes just seconds to pull up relevant results? Google's algorithm breaks down unstructured data from web pages and groups pages into clusters around a set of similar words or n-grams (contiguous sequences of n words or letters in a text). So, the pages from the cluster that contain a higher count of words or n-grams relevant to the search query will appear first within the results.

How Does Text Analysis Work?

To really understand how automated text analysis works, you need to understand the basics of machine learning. Let's start with this definition from Machine Learning by Tom Mitchell:

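"A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P, if its performance at tasks in T, as measured by P, improves with experience E."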

In other words, if we want text analysis software to perform desired tasks, we need to teach machine learning algorithms how to analyze, understand and derive meaning from text. But how? The simple answer is by tagging examples of text. Once a machine has enough examples of tagged text to work with, algorithms are able to start differentiating and making associations between pieces of text, and make predictions by themselves.

It's very similar to the way humans learn how to differentiate between topics, objects, and emotions. Let's say we have urgent and low priority issues to deal with. We don't instinctively know the difference between them – we learn gradually by associating urgency with certain expressions.

For example, when we want to identify urgent issues, we'd look out for expressions like 'please help me ASAP!' or 'urgent: can't enter the platform, the system is DOWN!!'. On the other hand, to identify low priority issues, we'd search for more positive expressions like 'thanks for the help! Really appreciate it' or 'the new feature works like a dream'.

How to Analyze Text Data

Text analysis can stretch its AI wings across a range of texts depending on the results you desire. It can be applied to:

- Whole documents: obtains information from a complete document or paragraph: e.g., the overall sentiment of a customer review.

- Single sentences: obtains information from specific sentences: e.g., more detailed sentiments of every sentence of a customer review.

- Sub-sentences: obtains information from sub-expressions within a sentence: e.g., the underlying sentiments of every opinion unit of a customer review.

Once you know how you want to break up your data, you can start analyzing it.

Let’s take a look at how text analysis works, step-by-step, and go into more detail about the different machine learning algorithms and techniques available.

Data Gathering

You can gather data about your brand, product or service from both internal and external sources:

Internal Data

This is the data you generate every day, from emails and chats, to surveys, customer queries, and customer support tickets.

You just need to export it from your software or platform as a CSV or Excel file, or connect an API to retrieve it directly.

Some examples of internal data:

Customer Service Software: the software you use to communicate with customers, manage user queries and deal with customer support issues: Zendesk, Freshdesk, and Help Scout are a few examples.

CRM: software that keeps track of all the interactions with clients or potential clients. It can involve different areas, from customer support to sales and marketing. Hubspot, Salesforce, and Pipedrive are examples of CRMs.

Chat: apps you use to communicate with the members of your team or your customers, like Slack, Hipchat, Intercom, and Drift.

Email: the king of business communication, emails are still the most popular tool to manage conversations with customers and team members.

Surveys: generally used to gather customer service feedback, product feedback, or to conduct market research, like Typeform, Google Forms, and SurveyMonkey.

NPS (Net Promoter Score): one of the most popular metrics for customer experience in the world. Many companies use NPS tracking software to collect and analyze feedback from their customers. A few examples are Delighted, Promoter.io and Satismeter.

Databases: a database is a collection of information. By using a database management system, a company can store, manage and analyze all sorts of data. Examples of databases include Postgres, MongoDB, and MySQL.

Product Analytics: the feedback and information about interactions of a customer with your product or service. It's useful to understand the customer's journey and make data-driven decisions. ProductBoard and UserVoice are two tools you can use to process product analytics.

External Data

This is text data about your brand or products from all over the web. You can use web scraping tools, APIs, and open datasets to collect external data from social media, news reports, online reviews, forums, and more, and analyze it with machine learning models.

Web Scraping Tools:

Visual Web Scraping Tools: you can build your own web scraper even with no coding experience, with tools like Dexi.io, Portia, and ParseHub.

Web Scraping Frameworks: seasoned coders can benefit from tools, like Scrapy in Python and Wombat in Ruby, to create custom scrapers.

APIs

Facebook, Twitter, and Instagram, for example, have their own APIs and allow you to extract data from their platforms. Major media outlets like the New York Times or The Guardian also have their own APIs and you can use them to search their archive or gather users' comments, among other things.

Integrations

SaaS tools like MonkeyLearn offer integrations with the tools you already use. You can connect directly to Twitter, Google Sheets, Gmail, Zendesk, SurveyMonkey, Rapidminer, and more, or perform text analysis on Excel data by uploading a file.

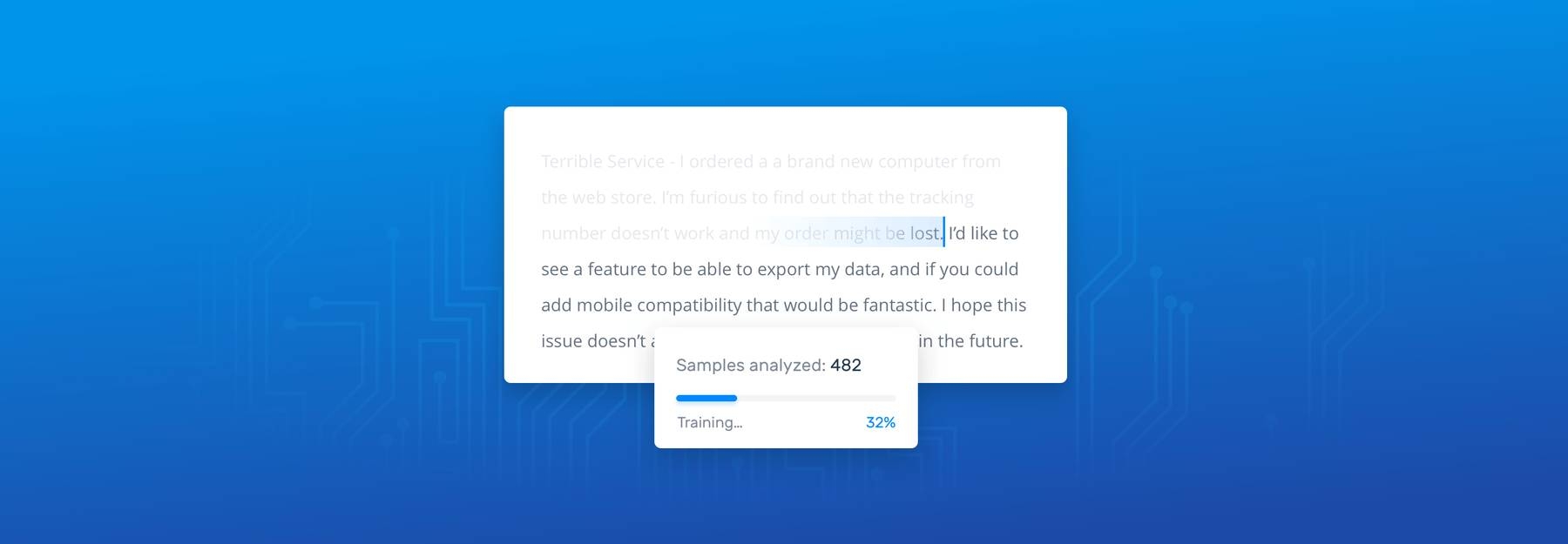

Data Preparation

In order to automatically analyze text with machine learning, you’ll need to organize your data. Most of this is done automatically, and you won't even notice it's happening. However, it's important to understand that automatic text analysis makes use of a number of natural language processing (NLP) techniques like the ones below.

Tokenization, Part-of-speech Tagging, and Parsing

Tokenization is the process of breaking up a string of characters into semantically meaningful parts that can be analyzed (e.g., words), while discarding meaningless chunks (e.g. whitespaces).

The examples below show two different ways in which one could tokenize the string 'Analyzing text is not that hard'.

(Incorrect): Analyzing text is not that hard. = [“Analyz”, “ing text”, “is n”, “ot that”, “hard.”]

(Correct): Analyzing text is not that hard. = [“Analyzing”, “text”, “is”, “not”, “that”, “hard”, “.”]
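A tokenizer that produces the correct output above can be sketched with a single regular expression. This is a simplified illustration, not how production tokenizers work: it treats every run of word characters as one token and every punctuation mark as its own token.

```python
import re

def tokenize(text):
    """Split text into word tokens and single punctuation tokens, dropping whitespace."""
    return re.findall(r"\w+|[^\w\s]", text)

tokens = tokenize("Analyzing text is not that hard.")
print(tokens)  # ['Analyzing', 'text', 'is', 'not', 'that', 'hard', '.']
```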

Once the tokens have been recognized, it's time to categorize them. Part-of-speech tagging refers to the process of assigning a grammatical category, such as noun, verb, etc. to the tokens that have been detected.

Here are the PoS tags of the tokens from the sentence above:

“Analyzing”: VERB, “text”: NOUN, “is”: VERB, “not”: ADV, “that”: ADV, “hard”: ADJ, “.”: PUNCT

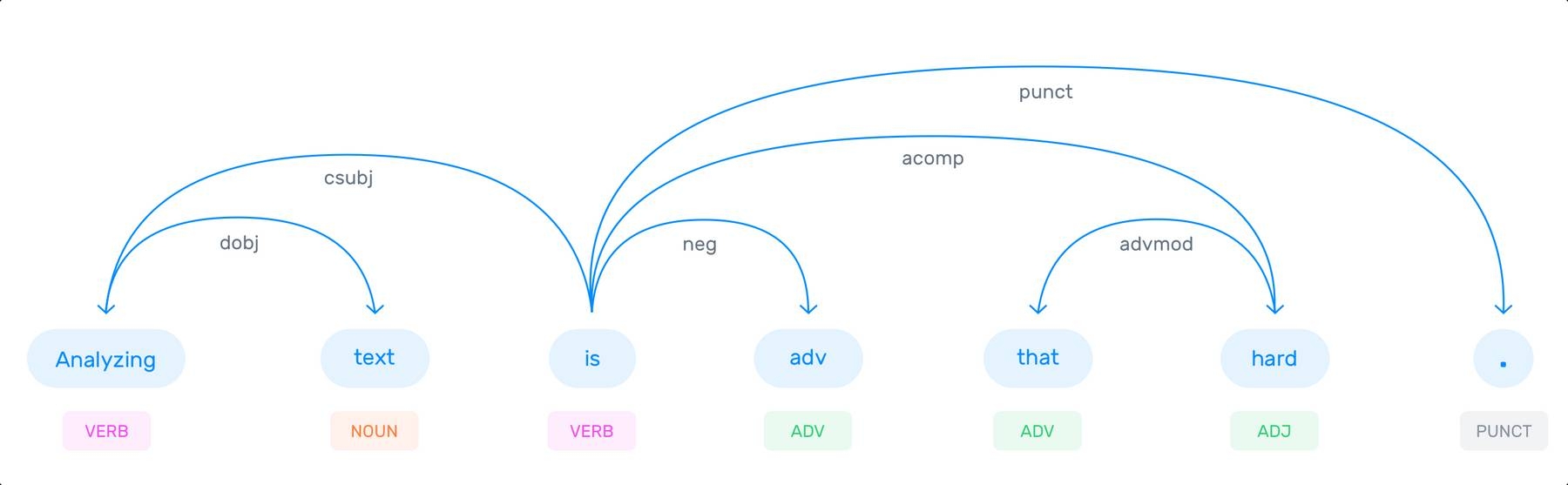

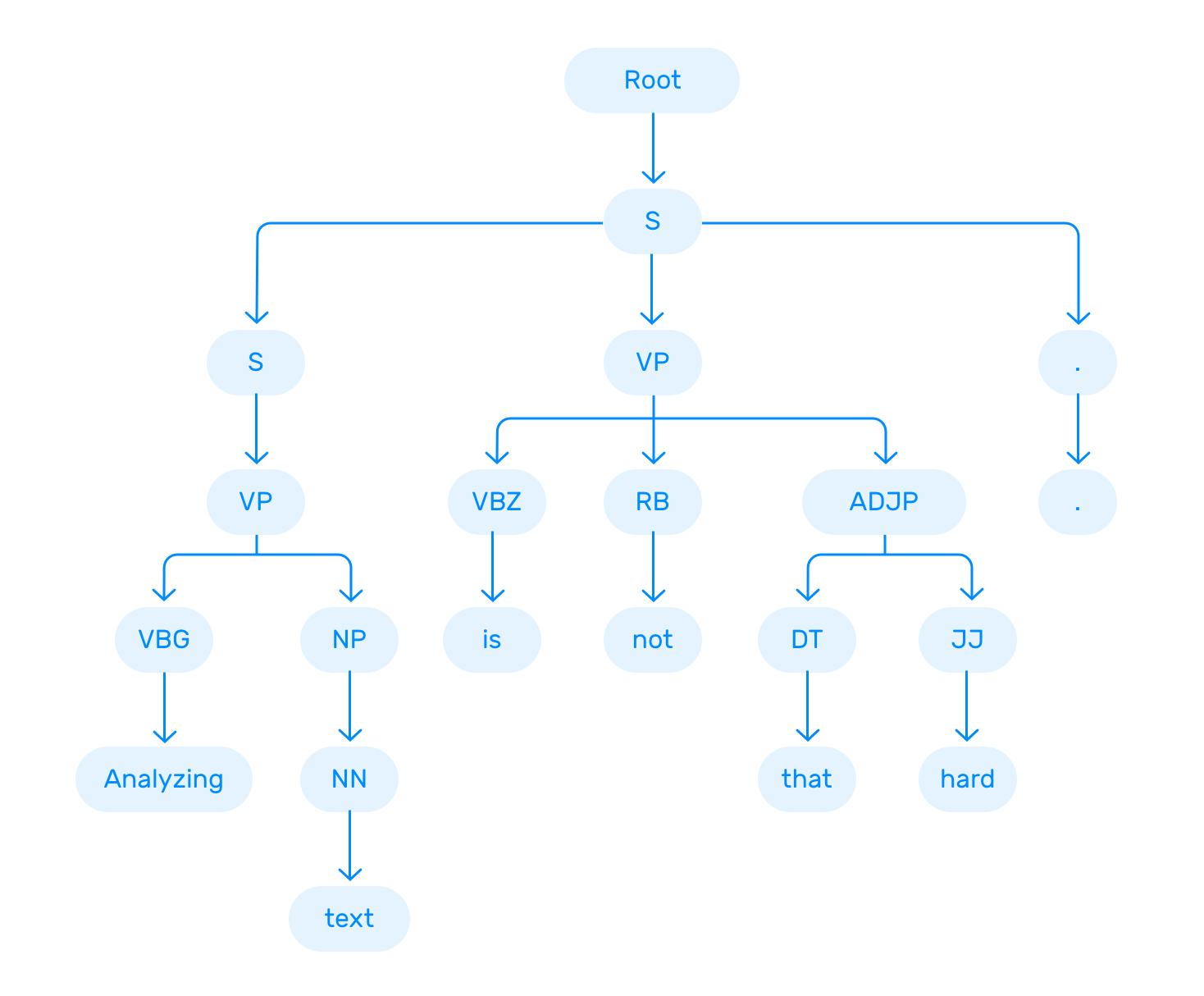

With all the categorized tokens and a language model (i.e. a grammar), the system can now create more complex representations of the texts it will analyze. This process is known as parsing. In other words, parsing refers to the process of determining the syntactic structure of a text. To do this, the parsing algorithm makes use of a grammar of the language the text has been written in. Different representations will result from the parsing of the same text with different grammars.

The examples below show the dependency and constituency representations of the sentence 'Analyzing text is not that hard'.

Dependency Parsing

Dependency grammars can be defined as grammars that establish directed relations between the words of sentences. Dependency parsing is the process of using a dependency grammar to determine the syntactic structure of a sentence:

Constituency Parsing

Constituency phrase structure grammars model syntactic structures by making use of abstract nodes associated to words and other abstract categories (depending on the type of grammar) and undirected relations between them. Constituency parsing refers to the process of using a constituency grammar to determine the syntactic structure of a sentence:

As you can see in the images above, the output of the parsing algorithms contains a great deal of information which can help you understand the syntactic (and some of the semantic) complexity of the text you intend to analyze.

Depending on the problem at hand, you might want to try different parsing strategies and techniques. However, at present, dependency parsing seems to outperform other approaches.

Lemmatization and Stemming

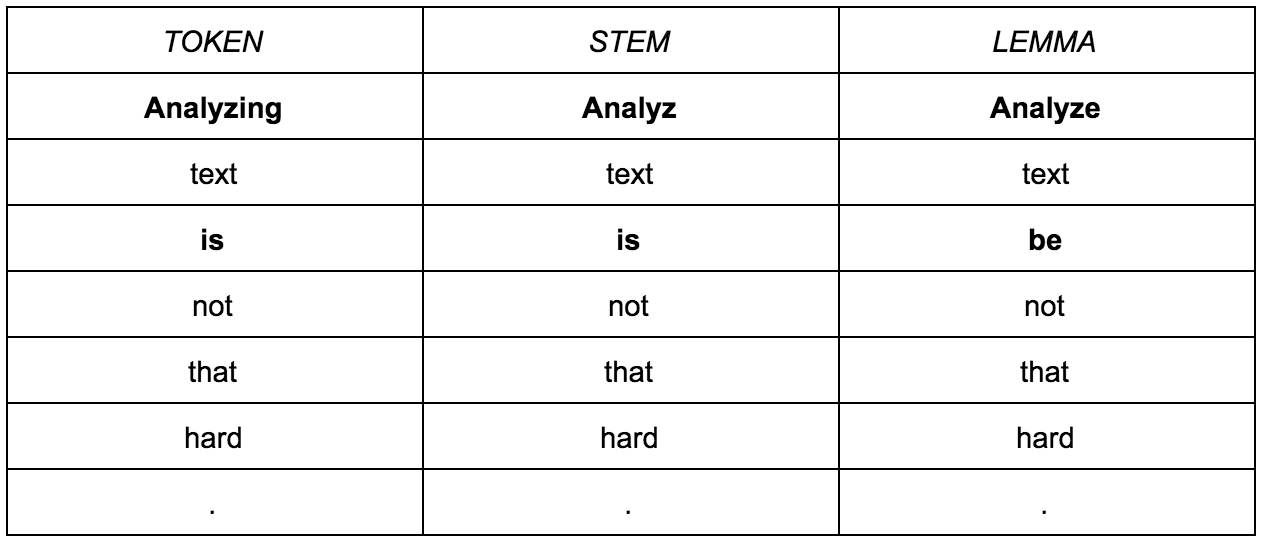

Stemming and lemmatization both refer to the process of removing the affixes (i.e. suffixes, prefixes, etc.) attached to a word in order to keep its lexical base. In stemming, that base is called the root or stem. In lemmatization, it is the word's dictionary form, or lemma. The main difference between these two processes is that stemming is usually based on rules that trim word beginnings and endings (and sometimes lead to somewhat weird results), whereas lemmatization makes use of dictionaries and a much more complex morphological analysis.
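The difference is easier to see in a toy example. The sketch below is a deliberately naive stand-in, not the algorithm used by any real stemmer or lemmatizer: `toy_stem` trims a few hard-coded suffixes, while `toy_lemmatize` looks words up in a tiny hand-written dictionary.

```python
SUFFIXES = ("ing", "ed", "s")                      # rules the toy stemmer trims
LEMMAS = {"analyzing": "analyze", "is": "be"}      # tiny hand-written dictionary

def toy_stem(word):
    """Trim the first matching suffix, keeping at least two characters."""
    word = word.lower()
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 2:
            return word[:-len(suffix)]
    return word

def toy_lemmatize(word):
    """Look the word up in the dictionary, falling back to the lowercase word."""
    return LEMMAS.get(word.lower(), word.lower())

print(toy_stem("Analyzing"), toy_lemmatize("Analyzing"))  # analyz analyze
print(toy_stem("is"), toy_lemmatize("is"))                # is be
```

Notice that the stem 'analyz' is not a real word, which is the kind of weird result rule-based trimming can produce.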

The table below shows the output of NLTK's Snowball Stemmer and spaCy's lemmatizer for the tokens in the sentence 'Analyzing text is not that hard'. The differences in the output have been boldfaced:

Stopword Removal

To provide a more accurate automated analysis of the text, we need to remove the words that provide very little semantic information or no meaning at all. These words are also known as stopwords: a, and, or, the, etc.

There are many different lists of stopwords for every language. However, it's important to understand that you might need to add words to or remove words from those lists depending on the texts you want to analyze and the analyses you would like to perform.

You might want to do some kind of lexical analysis of the domain your texts come from in order to determine the words that should be added to the stopwords list.
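Here is a small sketch of stopword removal with a customizable list. The stopword set is a tiny illustrative sample, and the `extra` parameter is an assumption added to show how you might extend the list for your own domain.

```python
# A tiny illustrative stopword list; real lists are much longer.
STOPWORDS = {"a", "an", "and", "or", "the", "is", "that"}

def remove_stopwords(tokens, stopwords=STOPWORDS, extra=()):
    """Drop tokens found in the stopword list plus any domain-specific extras."""
    blocked = set(stopwords) | set(extra)
    return [token for token in tokens if token.lower() not in blocked]

tokens = ["Analyzing", "text", "is", "not", "that", "hard"]
print(remove_stopwords(tokens))                  # ['Analyzing', 'text', 'not', 'hard']
print(remove_stopwords(tokens, extra={"text"}))  # ['Analyzing', 'not', 'hard']
```

Note that 'not' is deliberately left out of the list here. Some stopword lists include it, but removing it would flip the meaning of the sentence, which matters for tasks like sentiment analysis.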

Analyze Your Text Data

Now that you’ve learned how to mine unstructured text data and the basics of data preparation, how do you analyze all of this text?

Well, the analysis of unstructured text is not straightforward. There are countless text analysis methods, but two of the main techniques are text classification and text extraction.

Text Classification

Text classification (also known as text categorization or text tagging) refers to the process of assigning tags to texts based on their content.

In the past, text classification was done manually, which was time-consuming, inefficient, and inaccurate. Automated machine learning models, by contrast, can classify texts in seconds and apply the same criteria every time.

The most popular text classification tasks include sentiment analysis (i.e. detecting when a text says something positive or negative about a given topic), topic detection (i.e. determining what topics a text talks about), and intent detection (i.e. detecting the purpose or underlying intent of the text), among others, but there are a great many more applications you might be interested in.

Rule-based Systems

In text classification, a rule is essentially a human-made association between a linguistic pattern that can be found in a text and a tag. Rules usually consist of references to morphological, lexical, or syntactic patterns, but they can also contain references to other components of language, such as semantics or phonology.

Here's an example of a simple rule for classifying product descriptions according to the type of product described in the text:

(HDD|RAM|SSD|Memory) → Hardware

In this case, the system will assign the Hardware tag to those texts that contain the words HDD, RAM, SSD, or Memory.
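In code, a rule like this is just a pattern paired with a tag. The sketch below is one possible way to write it. The case-insensitive matching and word boundaries are assumptions added so that 'ram' matches but 'programming' doesn't.

```python
import re

# Each rule pairs a pattern with a tag; the Hardware rule mirrors the example above.
RULES = [
    (re.compile(r"\b(HDD|RAM|SSD|Memory)\b", re.IGNORECASE), "Hardware"),
]

def classify(text):
    """Return the tags of every rule whose pattern appears in the text."""
    return [tag for pattern, tag in RULES if pattern.search(text)]

print(classify("Laptop with 16GB RAM and 512GB SSD"))  # ['Hardware']
print(classify("A programming book"))                  # []
```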

The most obvious advantage of rule-based systems is that they are easily understandable by humans. However, creating complex rule-based systems takes a lot of time and a good deal of knowledge of both linguistics and the topics being dealt with in the texts the system is supposed to analyze.

On top of that, rule-based systems are difficult to scale and maintain because adding new rules or modifying the existing ones requires a lot of analysis and testing of the impact of these changes on the results of the predictions.

Machine Learning-based Systems

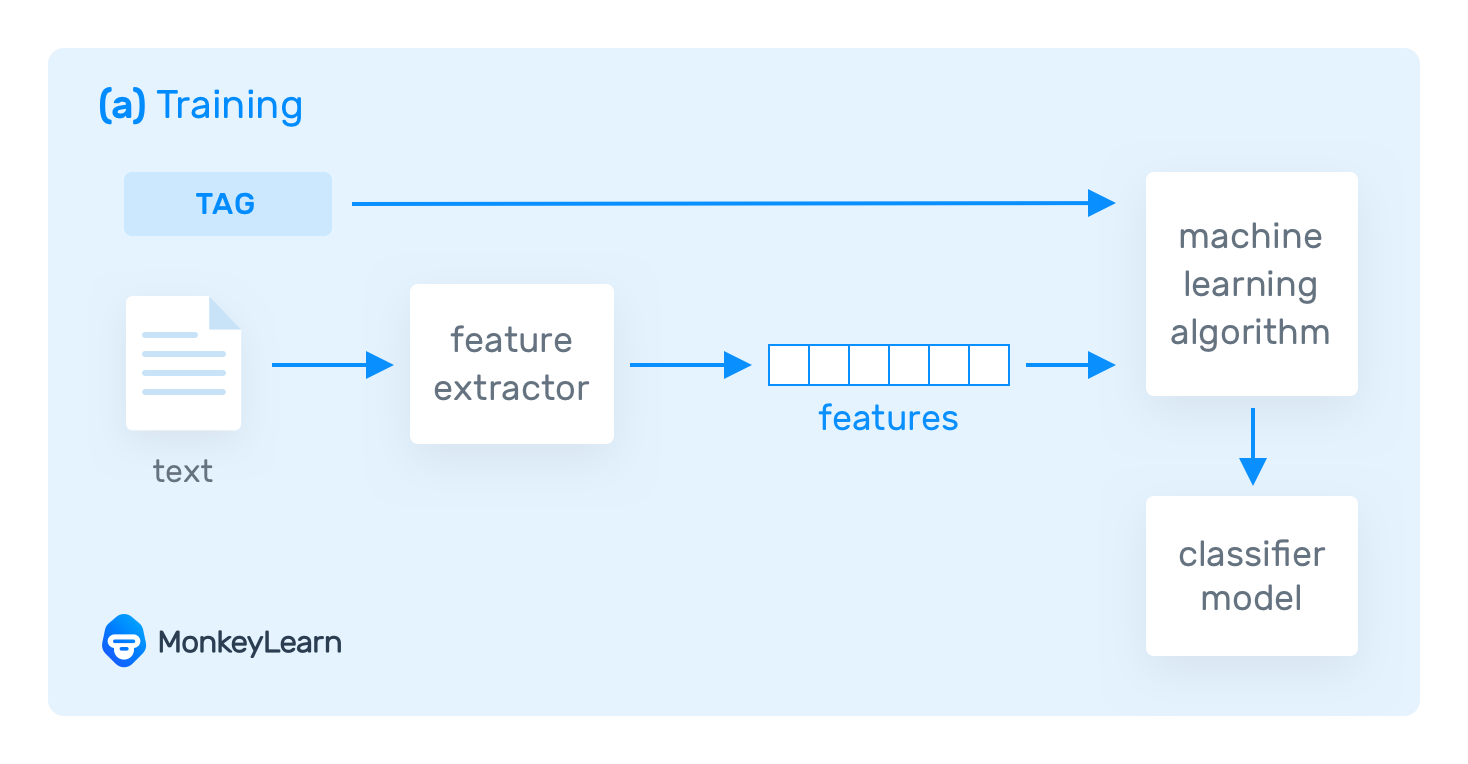

Machine learning-based systems can make predictions based on what they learn from past observations. These systems need to be fed multiple examples of texts and the expected predictions (tags) for each. This is called training data. The more consistent and accurate your training data, the better ultimate predictions will be.

When you train a machine learning-based classifier, training data has to be transformed into something a machine can understand, that is, vectors (i.e. lists of numbers which encode information). By using vectors, the system can extract relevant features (pieces of information) which will help it learn from the existing data and make predictions about the texts to come.

There are a number of ways to do this, but one of the most frequently used is called bag of words vectorization, which represents each text by how many times each vocabulary word occurs in it.
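A bare-bones bag of words vectorizer might look like the sketch below. The function names and training texts are made up for illustration, and real vectorizers add tokenization, stopword handling, and weighting on top of this idea.

```python
def build_vocabulary(texts):
    """Map every distinct word in the training texts to a vector position."""
    vocab = sorted({word for text in texts for word in text.lower().split()})
    return {word: index for index, word in enumerate(vocab)}

def vectorize(text, vocabulary):
    """Count how often each vocabulary word occurs; unknown words are ignored."""
    vector = [0] * len(vocabulary)
    for word in text.lower().split():
        if word in vocabulary:
            vector[vocabulary[word]] += 1
    return vector

training_texts = ["the app is simple", "the price is high"]
vocabulary = build_vocabulary(training_texts)
# Vocabulary order: app, high, is, price, simple, the
print(vectorize("the app is simple", vocabulary))          # [1, 0, 1, 0, 1, 1]
print(vectorize("simple simple app crashes", vocabulary))  # [1, 0, 0, 0, 2, 0]
```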

Once the texts have been transformed into vectors, they are fed into a machine learning algorithm together with their expected output to create a classification model that can choose what features best represent the texts and make predictions about unseen texts:

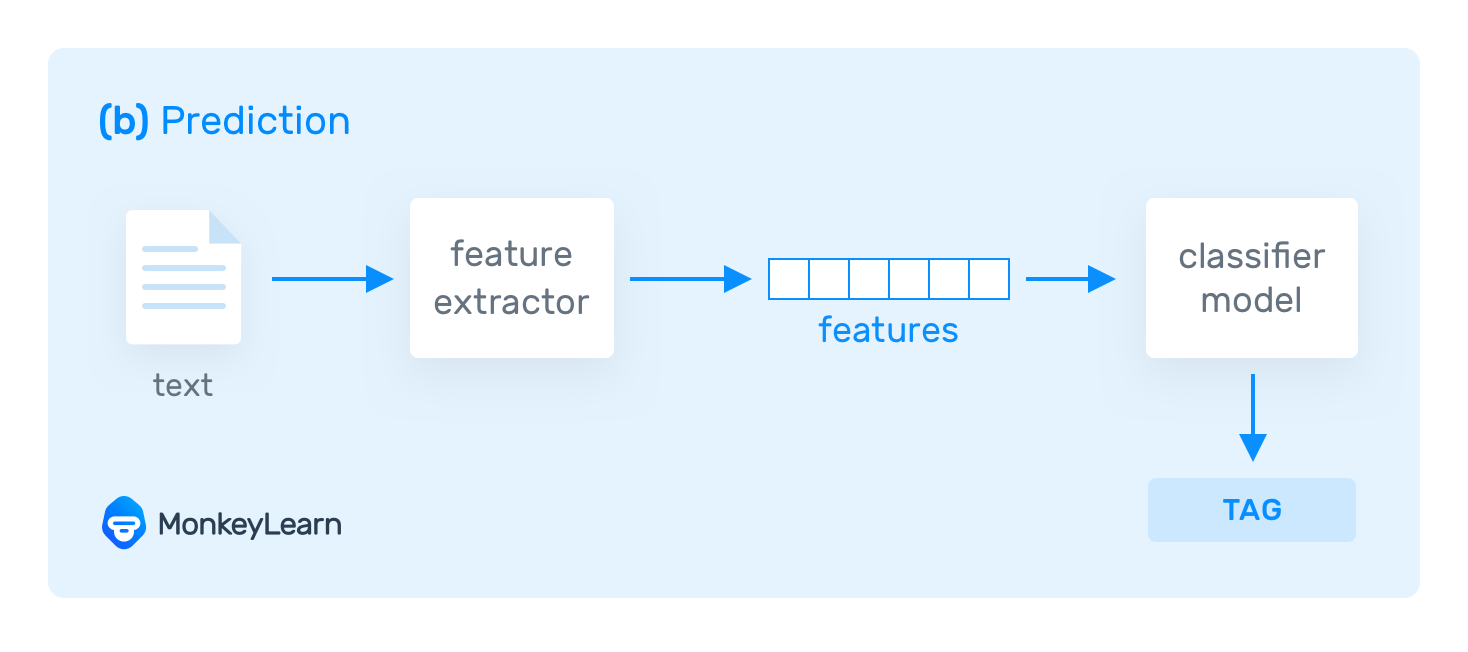

The trained model will transform unseen text into a vector, extract its relevant features, and make a prediction:

Machine Learning Algorithms

There are many machine learning algorithms used in text classification. The most frequently used are the Naive Bayes (NB) family of algorithms, Support Vector Machines (SVM), and deep learning algorithms.

The Naive Bayes family of algorithms is based on Bayes's Theorem and the conditional probabilities of occurrence of the words of a sample text within the words of a set of texts that belong to a given tag. Vectors that represent texts encode information about how likely it is for the words in the text to occur in the texts of a given tag. With this information, the probability of a text's belonging to any given tag in the model can be computed. Once all of the probabilities have been computed for an input text, the classification model will return the tag with the highest probability as the output for that input.

One of the main advantages of this algorithm is that results can be quite good even if there’s not much training data.
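To show the idea end to end, here is a minimal multinomial Naive Bayes sketch written from scratch. It assumes whitespace tokenization and add-one (Laplace) smoothing, and the four training examples are invented. It is meant to illustrate the probability computation, not to replace a tested library.

```python
import math
from collections import Counter, defaultdict

def train(examples):
    """Collect tag counts, per-tag word counts, and the vocabulary."""
    tag_counts = Counter()
    word_counts = defaultdict(Counter)
    vocabulary = set()
    for text, tag in examples:
        tag_counts[tag] += 1
        for word in text.lower().split():
            word_counts[tag][word] += 1
            vocabulary.add(word)
    return tag_counts, word_counts, vocabulary

def predict(text, model):
    """Return the tag with the highest log-probability for the text."""
    tag_counts, word_counts, vocabulary = model
    total_examples = sum(tag_counts.values())
    scores = {}
    for tag in tag_counts:
        # log P(tag) plus the sum of log P(word | tag), with add-one smoothing
        score = math.log(tag_counts[tag] / total_examples)
        total_words = sum(word_counts[tag].values())
        for word in text.lower().split():
            count = word_counts[tag][word]
            score += math.log((count + 1) / (total_words + len(vocabulary)))
        scores[tag] = score
    return max(scores, key=scores.get)

# Hypothetical tagged examples, invented for illustration.
examples = [
    ("great app love it", "Positive"),
    ("really easy to use", "Positive"),
    ("app keeps crashing", "Negative"),
    ("terrible support very slow", "Negative"),
]
model = train(examples)
print(predict("love this easy app", model))  # Positive
```

Smoothing matters here: without it, a single word the model has never seen for a tag (like 'this') would drive that tag's probability to zero.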

Support Vector Machines (SVM) is an algorithm that can divide a vector space of tagged texts into two subspaces: one space that contains most of the vectors that belong to a given tag and another subspace that contains most of the vectors that do not belong to that one tag.

Classification models that use SVM at their core will transform texts into vectors and will determine what side of the boundary that divides the vector space for a given tag those vectors belong to. Based on where they land, the model will know if they belong to a given tag or not.

The most important advantage of using SVM is that results are usually better than those obtained with Naive Bayes. However, more computational resources are needed for SVM.

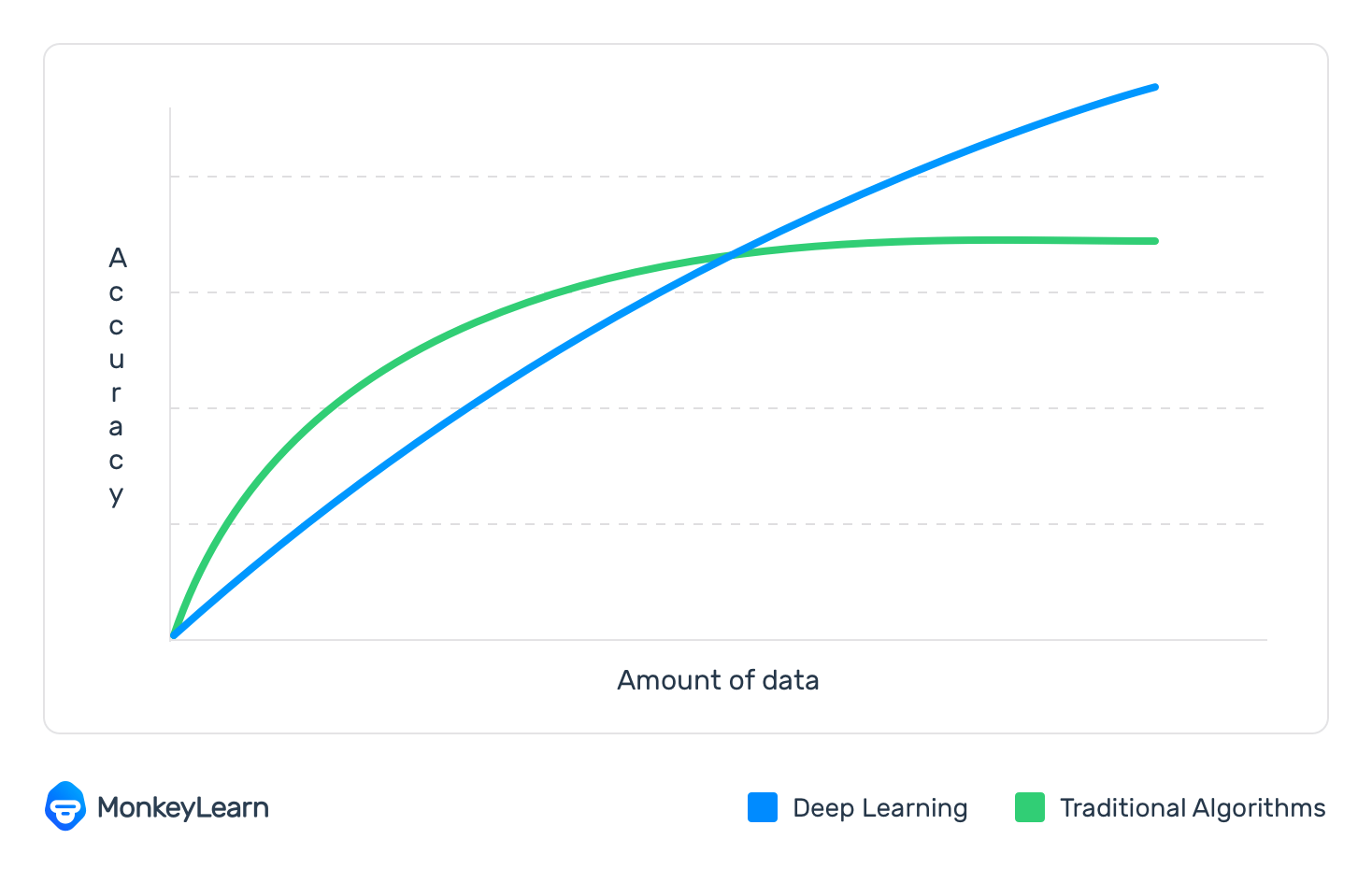

Deep Learning is a set of algorithms and techniques that use “artificial neural networks” to process data much as the human brain does. These algorithms use huge amounts of training data (millions of examples) to generate semantically rich representations of texts which can then be fed into machine learning-based models of different kinds that will make much more accurate predictions than traditional machine learning models:

Hybrid Systems

Hybrid systems usually contain machine learning-based systems at their cores and rule-based systems to improve the predictions.

Evaluation

Classifier performance is usually evaluated through standard metrics used in the machine learning field: accuracy, precision, recall, and F1 score. Understanding what they mean will give you a clearer idea of how good your classifiers are at analyzing your texts.

It is also important to understand that evaluation can be performed over a fixed testing set (i.e. a set of texts for which we know the expected output tags) or by using cross-validation (i.e. a method that splits your training data into different folds so that you can use some subsets of your data for training purposes and some for testing purposes, see below).

Accuracy, Precision, Recall, and F1 score

Accuracy is the number of correct predictions the classifier has made divided by the total number of predictions. In general, accuracy alone is not a good indicator of performance. For example, when categories are imbalanced, that is, when there is one category that contains many more examples than all of the others, predicting all texts as belonging to that category will return high accuracy levels. This is known as the accuracy paradox. To get a better idea of the performance of a classifier, you might want to consider precision and recall instead.

Precision states how many texts were predicted correctly out of the ones that were predicted as belonging to a given tag. In other words, precision takes the number of texts that were correctly predicted as positive for a given tag and divides it by the number of texts that were predicted (correctly and incorrectly) as belonging to the tag.

We have to bear in mind that precision only gives information about the cases where the classifier predicts that the text belongs to a given tag. This might be particularly important, for example, if you would like to generate automated responses for user messages. In this case, before you send an automated response you want to know for sure you will be sending the right response, right? In other words, if your classifier says the user message belongs to a certain type of message, you would like the classifier to make the right guess. This means you would like a high precision for that type of message.

Recall states how many texts were predicted correctly out of the ones that should have been predicted as belonging to a given tag. In other words, recall takes the number of texts that were correctly predicted as positive for a given tag and divides it by the number of texts that were either predicted correctly as belonging to the tag or that were incorrectly predicted as not belonging to the tag.

Recall might prove useful when routing support tickets to the appropriate team, for example. It might be desired for an automated system to detect as many tickets as possible for a critical tag (for example tickets about 'Outages / Downtime') at the expense of making some incorrect predictions along the way. In this case, making a prediction will help perform the initial routing and solve most of these critical issues ASAP. If the prediction is incorrect, the ticket will get rerouted by a member of the team. When processing thousands of tickets per week, high recall (with good levels of precision as well, of course) can save support teams a good deal of time and enable them to solve critical issues faster.

The F1 score is the harmonic mean of precision and recall. It tells you how well your classifier performs if equal importance is given to precision and recall. In general, F1 score is a much better indicator of classifier performance than accuracy is.
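The four metrics can be computed in a few lines. The sketch below scores a single tag against hypothetical expected and predicted labels. The function name `evaluate` and the sample data are assumptions for illustration.

```python
def evaluate(expected, predicted, tag):
    """Compute accuracy, plus precision, recall, and F1 for one tag."""
    pairs = list(zip(expected, predicted))
    tp = sum(1 for e, p in pairs if e == tag and p == tag)  # true positives
    fp = sum(1 for e, p in pairs if e != tag and p == tag)  # false positives
    fn = sum(1 for e, p in pairs if e == tag and p != tag)  # false negatives
    accuracy = sum(1 for e, p in pairs if e == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical labels: three urgent tickets, one of which the classifier missed.
expected = ["Urgent", "Urgent", "Urgent", "Other", "Other", "Other", "Other", "Other"]
predicted = ["Urgent", "Urgent", "Other", "Other", "Other", "Other", "Other", "Other"]

metrics = evaluate(expected, predicted, "Urgent")
print(metrics)
```

In this example accuracy is 0.875 and precision is 1.0, but recall is only about 0.67 because one of the three urgent tickets was missed. That gap is exactly what accuracy alone would hide.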

Cross-validation

Cross-validation is quite frequently used to evaluate the performance of text classifiers. The method is simple. First of all, the training dataset is randomly split into a number of equal-length subsets (e.g. 4 subsets with 25% of the original data each). Then, all the subsets except for one are used to train a classifier (in this case, 3 subsets with 75% of the original data) and this classifier is used to predict the texts in the remaining subset. Next, all the performance metrics are computed (i.e. accuracy, precision, recall, F1, etc.). Finally, the process is repeated with a new testing fold until all the folds have been used for testing purposes.

Once all folds have been used, the average performance metrics are computed and the evaluation process is finished.
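The splitting logic can be sketched as follows. The function names, the fixed random seed, and the `train_and_score` callback (which stands in for whatever training and scoring routine you use) are assumptions for this illustration.

```python
import random

def k_fold_splits(items, k=4, seed=0):
    """Yield (train, test) pairs so that every item is tested exactly once."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    folds = [shuffled[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [item for j, fold in enumerate(folds) if j != i for item in fold]
        yield train, test

def cross_validate(items, train_and_score, k=4):
    """Average the score returned by train_and_score over all k folds."""
    scores = [train_and_score(train, test) for train, test in k_fold_splits(items, k)]
    return sum(scores) / len(scores)

data = list(range(8))  # stand-in for 8 tagged texts
for train, test in k_fold_splits(data, k=4):
    print(len(train), len(test))  # 6 2 on every fold
```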

Text Extraction

Text Extraction refers to the process of recognizing structured pieces of information from unstructured text. For example, it can be useful to automatically detect the most relevant keywords from a piece of text, identify names of companies in a news article, detect lessors and lessees in a financial contract, or identify prices on product descriptions.

Regular Expressions

Regular Expressions (a.k.a. regexes) work as the equivalent of the rules defined in classification tasks. In this case, a regular expression defines a pattern of characters that will be associated with a tag.

For example, the pattern below will detect most email addresses in a text if they are preceded and followed by spaces:

(?i)\b(?:[a-zA-Z0-9_\-\.]+)@(?:(?:\[[0-9]{1,3}\.[0-9]{1,3}\.[0-9]{1,3}\.)|(?:(?:[a-zA-Z0-9\-]+\.)+))(?:[a-zA-Z]{2,4}|[0-9]{1,3})(?:\]?)\b

By detecting this match in texts and assigning it the email tag, we can create a rudimentary email address extractor.
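Here is what such a rudimentary extractor might look like in Python. Note that this sketch swaps in a shorter, simplified email pattern to keep the example readable, so it is an assumption and not the exact pattern shown above. The sample text is invented.

```python
import re

# Simplified email pattern, assumed for this example only.
EMAIL_PATTERN = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")

def extract_emails(text):
    """Return every substring that matches the email pattern, tagged as EMAIL."""
    return [("EMAIL", match) for match in EMAIL_PATTERN.findall(text)]

text = "Contact support@example.com or sales@example.org for help."
print(extract_emails(text))
# [('EMAIL', 'support@example.com'), ('EMAIL', 'sales@example.org')]
```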

There are obvious pros and cons of this approach. On the plus side, you can create text extractors quickly and the results obtained can be good, provided you can find the right patterns for the type of information you would like to detect. On the minus side, regular expressions can get extremely complex and might be really difficult to maintain and scale, particularly when many expressions are needed in order to extract the desired patterns.

Conditional Random Fields

Conditional Random Fields (CRF) is a statistical approach often used in machine-learning-based text extraction. This approach learns the patterns to be extracted by weighing a set of features of the sequences of words that appear in a text. Through the use of CRFs, we can add multiple variables which depend on each other to the patterns we use to detect information in texts, such as syntactic or semantic information.

This usually generates much richer and more complex patterns than using regular expressions and can potentially encode much more information. However, more computational resources are needed in order to implement it, since all the features have to be calculated for all the sequences to be considered, and all of the weights assigned to those features have to be learned before determining whether a sequence should belong to a tag or not.

One of the main advantages of the CRF approach is its generalization capacity. Once an extractor has been trained using the CRF approach over texts of a specific domain, it will have the ability to generalize what it has learned to other domains reasonably well.
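To give a feel for what "features of the sequences of words" can mean, here is a small sketch of per-token feature extraction of the kind a CRF typically weighs. The feature names and the helper functions are illustrative assumptions; training the CRF itself requires a dedicated library and is not shown.

```python
def token_features(tokens, i):
    """Describe token i using its own shape and its immediate neighbors."""
    token = tokens[i]
    features = {
        "lower": token.lower(),        # the word itself, case-normalized
        "is_title": token.istitle(),   # capitalized like a name or month
        "is_digit": token.isdigit(),   # purely numeric
        "suffix3": token[-3:],         # last three characters
    }
    if i > 0:
        features["prev_lower"] = tokens[i - 1].lower()
    else:
        features["BOS"] = True         # beginning of sequence
    if i < len(tokens) - 1:
        features["next_lower"] = tokens[i + 1].lower()
    else:
        features["EOS"] = True         # end of sequence
    return features

def sentence_features(tokens):
    """Build one feature dictionary per token in the sentence."""
    return [token_features(tokens, i) for i in range(len(tokens))]

# Hypothetical tokenized sentence.
for feats in sentence_features("Your flight departs on January 14".split()):
    print(feats)
```

Because each token's features include its neighbors, the learned weights can capture dependencies between adjacent words rather than treating each word in isolation.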

Evaluation

Extractors are sometimes evaluated by calculating the same standard performance metrics we have explained above for text classification, namely, accuracy, precision, recall, and F1 score. However, these metrics do not account for partial matches of patterns. In order for an extracted segment to be a true positive for a tag, it has to be a perfect match with the segment that was supposed to be extracted.

Consider the following example:

'Your flight will depart on January 14, 2020 at 03:30 PM from SFO'

If we created a date extractor, we would expect it to return January 14, 2020 as a date from the text above, right? So, if the output of the extractor were January 14, 2020, we would count it as a true positive for the tag DATE.

But, what if the output of the extractor were January 14? Would you say the extraction was bad? Would you say it was a false positive for the tag DATE? To capture partial matches like this one, some other performance metrics can be used to evaluate the performance of extractors. One example of this is the ROUGE family of metrics.

ROUGE (Recall-Oriented Understudy for Gisting Evaluation) is a family of metrics used in the fields of machine translation and automatic summarization that can also be used to assess the performance of text extractors. These metrics basically compute the lengths and number of sequences that overlap between the source text (in this case, our original text) and the translated or summarized text (in this case, our extraction).

Depending on the length of the units whose overlap you would like to compare, you can define ROUGE-n metrics (for units of length n) or you can define the ROUGE-LCS or ROUGE-L metric if you intend to compare the longest common subsequence (LCS).
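A simplified sketch of these metrics, applied to the date example, might look as follows. It compares the segment we expected with the segment the extractor returned; the tokenizer and function names are assumptions for illustration, and real ROUGE implementations add options this sketch omits.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and keep only word-like tokens."""
    return re.findall(r"\w+", text.lower())

def _scores(overlap, ref_total, cand_total):
    """Turn an overlap count into precision, recall, and F1."""
    recall = overlap / ref_total if ref_total else 0.0
    precision = overlap / cand_total if cand_total else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}

def rouge_n(reference, candidate, n=1):
    """Overlap of n-grams between the expected and the extracted segment."""
    def ngrams(tokens):
        return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    ref, cand = ngrams(tokenize(reference)), ngrams(tokenize(candidate))
    overlap = sum((ref & cand).values())
    return _scores(overlap, sum(ref.values()), sum(cand.values()))

def lcs_length(a, b):
    """Length of the longest common subsequence, via dynamic programming."""
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, start=1):
        for j, y in enumerate(b, start=1):
            if x == y:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[len(a)][len(b)]

def rouge_l(reference, candidate):
    """ROUGE-L: score based on the longest common subsequence of tokens."""
    ref, cand = tokenize(reference), tokenize(candidate)
    return _scores(lcs_length(ref, cand), len(ref), len(cand))

expected, extracted = "January 14, 2020", "January 14"
print(rouge_n(expected, extracted, n=1))
print(rouge_l(expected, extracted))
```

Here the partial extraction recovers two of the three expected tokens, so it gets partial credit on recall instead of being counted as a plain miss.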

4. Visualize Your Text Data

Now you know a variety of text analysis methods to break down your data, but what do you do with the results? Business intelligence (BI) and data visualization tools make it easy to understand your results in striking dashboards.

- MonkeyLearn Studio

MonkeyLearn Studio is an all-in-one data gathering, analysis, and visualization tool. Deep learning techniques allow you to choose the text analyses you need (keyword extraction, sentiment analysis, aspect classification, and so on) and chain them together to work simultaneously.

You’ll see the importance of text analytics right away. Simply upload your data and visualize the results for powerful insights. It all works together in a single interface, so you no longer have to upload and download between applications.

- Google Data Studio

Google's free visualization tool allows you to create interactive reports using a wide variety of data. Once you've imported your data you can use different tools to design your report and turn your data into an impressive visual story. Share the results with individuals or teams, publish them on the web, or embed them on your website.

- Looker

Looker is a business data analytics platform designed to direct meaningful data to anyone within a company. The idea is to allow teams to have a bigger picture about what's happening in their company.

You can connect to different databases and automatically create data models, which can be fully customized to meet specific needs. Take a look here to get started.

- Tableau

Tableau is a business intelligence and data visualization tool with an intuitive, user-friendly approach (no technical skills required). Tableau allows organizations to work with almost any existing data source and provides powerful visualization options with more advanced tools for developers.

There's a trial version available for anyone wanting to give it a go. Learn how to perform text analysis in Tableau.

Text Analysis Applications & Examples

Did you know that 80% of business data is text? Text is present in every major business process, from support tickets to product feedback and online customer interactions. Automated, real-time text analysis can help you get a handle on all that data with a broad range of business applications and use cases. Maximize efficiency and cut down on the repetitive tasks that often contribute to high staff turnover. Better understand customer insights without having to sort through millions of social media posts, online reviews, and survey responses.

If you work in customer experience, product, marketing, or sales, there are a number of text analysis applications to automate processes and get real-world insights. And best of all, you don’t need any data science or engineering experience to do it.

Social Media Monitoring

Let's say you work for Uber and you want to know what users are saying about the brand. You've read some positive and negative feedback on Twitter and Facebook. But 500 million tweets are sent each day, and Uber has thousands of mentions on social media every month. Can you imagine analyzing all of them manually?

This is where sentiment analysis comes in to analyze the opinion of a given text. By analyzing your social media mentions with a sentiment analysis model, you can automatically categorize them into Positive, Neutral or Negative. Then run them through a topic analyzer to understand the subject of each text. By running aspect-based sentiment analysis, you can automatically pinpoint the reasons behind positive or negative mentions and get insights such as:

- The top complaint about Uber on social media?

- The success rate of Uber's customer service - are people happy or annoyed with it?

- What Uber users like about the service when they mention Uber in a positive way?
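To make the idea concrete, here is a toy sketch that tags mentions with a sentiment and a topic, then counts negative mentions per topic to surface the top complaint. The word lists, topic names, and sample mentions are all made up for illustration; a real system would rely on trained sentiment and topic models rather than hand-written keyword sets.

```python
import re
from collections import Counter

# Hypothetical lexicons standing in for trained models.
POSITIVE = {"great", "love", "friendly", "fast", "clean"}
NEGATIVE = {"late", "rude", "expensive", "dirty", "cancelled"}
TOPICS = {
    "Pricing": {"price", "fare", "expensive", "surge"},
    "Driver": {"driver", "rude", "friendly"},
    "Wait Time": {"late", "wait", "waiting", "fast"},
}

def words(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def sentiment(text):
    """Classify a mention as Positive, Negative, or Neutral by word counts."""
    tokens = words(text)
    score = sum(w in POSITIVE for w in tokens) - sum(w in NEGATIVE for w in tokens)
    if score > 0:
        return "Positive"
    if score < 0:
        return "Negative"
    return "Neutral"

def topics(text):
    """Return every topic whose keywords appear in the mention."""
    tokens = set(words(text))
    return [name for name, keywords in TOPICS.items() if tokens & keywords]

def top_complaint(mentions):
    """Find the topic that shows up most often in negative mentions."""
    counts = Counter(
        topic
        for mention in mentions
        if sentiment(mention) == "Negative"
        for topic in topics(mention)
    )
    return counts.most_common(1)[0][0] if counts else None

mentions = [
    "My driver was rude and the car was dirty",
    "Love how fast the pickup was",
    "Driver cancelled on me again",
    "The fare was so expensive tonight",
    "Just took a ride downtown",
]
print(top_complaint(mentions))
```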

Now, let's say you've just added a new service to Uber. For example, Uber Eats. It's a crucial moment, and your company wants to know what people are saying about Uber Eats so that you can fix any glitches as soon as possible, and polish the best features. You can also use aspect-based sentiment analysis on your Facebook, Instagram and Twitter profiles for any Uber Eats mentions and discover things such as:

- Are people happy with Uber Eats so far?

- What is the most urgent issue to fix?

- How can we incorporate positive stories into our marketing and PR communication?

Not only can you use text analysis to keep tabs on your brand's social media mentions, but you can also use it to monitor your competitors' mentions as well. Is a client complaining about a competitor's service? That gives you a chance to attract potential customers and show them how much better your brand is.

Brand Monitoring

Follow comments about your brand in real time wherever they may appear (social media, forums, blogs, review sites, etc.). You’ll know when something negative arises right away and be able to use positive comments to your advantage.

The power of negative reviews is quite strong: 40% of consumers are put off from buying if a business has negative reviews. An angry customer's complaint about poor customer service can spread like wildfire within minutes: a friend shares it, then another, then another… And before you know it, the negative comments have gone viral.

- Understand how your brand reputation evolves over time.

- Compare your brand reputation to your competitor's.

- Identify which aspects are damaging your reputation.

- Pinpoint which elements are boosting your brand reputation on online media.

- Identify potential PR crises so you can deal with them ASAP.

- Tune into data from a specific moment, like the day of a new product launch or IPO filing. Just run a sentiment analysis on social media and press mentions on that day, to find out what people said about your brand.

- Repost positive mentions of your brand to get the word out.

Customer Service

Despite many people's fears and expectations, text analysis doesn't mean that customer service will be entirely machine-powered. It just means that businesses can streamline processes so that teams can spend more time solving problems that require human interaction. That way businesses will be able to increase retention, given that 89 percent of customers change brands because of poor customer service. But, how can text analysis assist your company's customer service?

Ticket Tagging

Let machines do the work for you. Text analysis automatically identifies topics, and tags each ticket. Here's how it works:

- The model analyzes the language and expressions a customer uses, for example, “I didn't get the right order.”

- Then, it compares it to other similar conversations.

- Finally, it finds a match and tags the ticket automatically. In this case, it could be under a Shipping Problems tag.

This happens automatically, whenever a new ticket comes in, freeing customer agents to focus on more important tasks.
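The three steps above can be sketched with a simple nearest-match approach: compare the new ticket with previously tagged tickets and reuse the tag of the most similar one. Everything here is an assumption for illustration (the stopword list, the Jaccard similarity, the sample history, and the function names); a production model would be trained on far more data.

```python
import re

# Small hypothetical stopword list; real systems use fuller lists.
STOPWORDS = {"a", "an", "and", "the", "i", "my", "was", "in", "for", "when", "to", "it", "is"}

def content_words(text):
    """Step 1: reduce a ticket to its set of meaningful words."""
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS

def jaccard(a, b):
    """Similarity between two word sets: shared words over all words."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def tag_ticket(ticket, tagged_history):
    """Steps 2 and 3: find the most similar past ticket and reuse its tag."""
    ticket_words = content_words(ticket)
    _, best_tag = max(
        tagged_history,
        key=lambda pair: jaccard(ticket_words, content_words(pair[0])),
    )
    return best_tag

# Hypothetical previously tagged tickets.
history = [
    ("My order arrived late and the wrong item was in the box", "Shipping Problems"),
    ("I was charged twice for my subscription", "Billing"),
    ("The app crashes when I open settings", "Bug Report"),
]

print(tag_ticket("I didn't get the right order.", history))
```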

Ticket Routing & Triage: Find the Right Person for the Job

Machine learning can read a ticket for subject or urgency, and automatically route it to the appropriate department or employee.

For example, for a SaaS company that receives a customer ticket asking for a refund, the text mining system will identify which team usually handles billing issues and send the ticket to them. If a ticket says something like “How can I integrate your API with Python?”, it would go straight to the team in charge of helping with Integrations.

Ticket Analytics: Learn More From Your Customers

What is commonly assessed to determine the performance of a customer service team? Common KPIs are first response time, average time to resolution (i.e. how long it takes your team to resolve issues), and customer satisfaction (CSAT). And, let's face it, overall client satisfaction has a lot to do with the first two metrics.

But how do we get actual CSAT insights from customer conversations? How can we identify if a customer is happy with the way an issue was solved? Or if they have expressed frustration with the handling of the issue?

In this situation, aspect-based sentiment analysis could be used. This type of text analysis delves into the feelings and topics behind the words on different support channels, such as support tickets, chat conversations, emails, and CSAT surveys. A text analysis model can understand words or expressions to define the support interaction as Positive, Negative, or Neutral, understand what was mentioned (e.g. Service or UI/UX), and even determine the sentiments behind the words (e.g. Sadness, Anger, etc.).

Urgency Detection: Prioritize Urgent Tickets

“Where do I start?” is a question customer service representatives often ask themselves. Urgency is definitely a good starting point, but how do we define the level of urgency without wasting valuable time deliberating?

Text mining software can define the urgency level of a customer ticket and tag it accordingly. Support tickets with words and expressions that denote urgency, such as 'as soon as possible' or 'right away', are duly tagged as Priority.
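In its simplest rule-based form, this kind of tagging could be sketched as below. The two phrases come from the example above; the `Normal` fallback tag and the function name are assumptions, and a trained model would pick up on far more signals than a fixed phrase list.

```python
import re

# Phrases that denote urgency, taken from the example in the text.
URGENCY_PHRASES = ["as soon as possible", "right away"]

# One case-insensitive pattern that matches any of the phrases as whole words.
_URGENCY_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(p) for p in URGENCY_PHRASES) + r")\b",
    re.IGNORECASE,
)

def tag_urgency(ticket_text):
    """Tag a ticket as Priority if it contains an urgency phrase."""
    # "Normal" is a hypothetical default tag for everything else.
    return "Priority" if _URGENCY_RE.search(ticket_text) else "Normal"

print(tag_urgency("Please fix this as soon as possible!"))
print(tag_urgency("Thanks for the update"))
```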

To see how text analysis works to detect urgency, check out this MonkeyLearn urgency detection demo model.

Voice of Customer (VoC) & Customer Feedback

Once you get a customer, retention is key, since acquiring new clients is five to 25 times more expensive than retaining the ones you already have. That's why paying close attention to the voice of the customer can give your company a clear picture of the level of client satisfaction and, consequently, of client retention. Also, it can give you actionable insights to prioritize the product roadmap from a customer's perspective.

Analyzing NPS Responses

Maybe your brand already has a customer satisfaction survey in place, the most common one being the Net Promoter Score (NPS). This survey asks the question, 'How likely is it that you would recommend [brand] to a friend or colleague?'. The answer is a score from 0 to 10, and respondents are divided into three groups: the promoters, the passives, and the detractors.

But here comes the tricky part: there's an open-ended follow-up question at the end: 'Why did you choose X score?' The answer can provide your company with invaluable insights. Without the text, you're left guessing what went wrong. And, now, with text analysis, you no longer have to read through these open-ended responses manually.
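For reference, the numeric side of NPS is easy to compute. The sketch below uses the standard NPS convention, which the text above does not spell out: scores of 9-10 are promoters, 7-8 are passives, 0-6 are detractors, and the final score is the percentage of promoters minus the percentage of detractors. The function names and sample scores are illustrative.

```python
def nps_group(score):
    """Map a 0-10 survey score to its NPS group (standard thresholds)."""
    if not 0 <= score <= 10:
        raise ValueError("NPS scores must be between 0 and 10")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"

def net_promoter_score(scores):
    """Percentage of promoters minus percentage of detractors."""
    groups = [nps_group(s) for s in scores]
    promoters = groups.count("promoter")
    detractors = groups.count("detractor")
    return 100 * (promoters - detractors) / len(groups)

# Hypothetical survey scores.
scores = [10, 9, 9, 8, 7, 6, 3, 10]
print(net_promoter_score(scores))
```

The score tells you how many promoters and detractors you have, but not why they answered the way they did; that is where the open-ended responses come in.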

You can do what Promoter.io did: extract the main keywords of your customers' feedback to understand what's being praised or criticized about your product. Is the keyword 'Product' mentioned mostly by promoters or detractors? With this info, you'll be able to use your time to get the most out of NPS responses and start taking action.

Another option is following in Retently's footsteps using text analysis to classify your feedback into different topics, such as Customer Support, Product Design, and Product Features, then analyze each tag with sentiment analysis to see how positively or negatively clients feel about each topic. Now they know they're on the right track with product design, but still have to work on product features.

Analyzing Customer Surveys

Does your company have another customer survey system? If it's a scoring system or closed-ended questions, it'll be a piece of cake to analyze the responses: just crunch the numbers.

However, if you have an open-text survey, whether it's provided via email or it's an online form, you can stop manually tagging every single response by letting text analysis do the job for you. Besides saving time, you can also have consistent tagging criteria without errors, 24/7.

Business Intelligence

Data analysis is at the core of every business intelligence operation. Now, what can a company do to understand, for instance, sales trends and performance over time? With numeric data, a BI team can identify what's happening (such as sales of X are decreasing) – but not why. Numbers are easy to analyze, but they are also somewhat limited. Text data, on the other hand, is the most widespread format of business information and can provide your organization with valuable insight into your operations. Text analysis with machine learning can automatically analyze this data for immediate insights.

For example, you can run keyword extraction and sentiment analysis on your social media mentions to understand what people are complaining about regarding your brand.

You can also run aspect-based sentiment analysis on customer reviews that mention poor customer experiences. After all, 67% of consumers list bad customer experience as one of the primary reasons for churning. Maybe it's bad support, a faulty feature, unexpected downtime, or a sudden price change. Analyzing customer feedback can shed light on the details, and the team can take action accordingly.

And what about your competitors? What are their reviews saying? Run them through your text analysis model and see what they're doing right and wrong and improve your own decision-making.

Sales and Marketing

Prospecting is the most difficult part of the sales process. And it's getting harder and harder. Sales teams always want to close deals, which requires making the sales process more efficient. But 27% of sales agents are spending over an hour a day on data entry work instead of selling, meaning critical time is lost to administrative work and not closing deals.

Text analysis takes the heavy lifting out of manual sales tasks, including:

- Updating the deal status as 'Not interested' in your CRM.

- Qualifying your leads based on company descriptions.

- Identifying leads on social media that express buying intent.

GlassDollar, a company that links founders to potential investors, is using text analysis to find the best quality matches. How? They use text analysis to classify companies using their company descriptions. The results? They saved themselves days of manual work, and predictions were 90% accurate after training a text classification model. You can learn more about their experience with MonkeyLearn here.

Not only can text analysis automate manual and tedious tasks, but it can also improve your analytics to make the sales and marketing funnels more efficient. For example, you can automatically analyze the responses from your sales emails and conversations to understand, let's say, a drop in sales:

- What are the blocks to completing a deal?

- What sparks a customer's interest?

- What are customer concerns?

Now, imagine that your sales team's goal is to target a new segment for your SaaS: people over 40. The first impression is that they don't like the product, but why? Just filter through that age group's sales conversations and run them on your text analysis model. Sales teams could make better decisions using in-depth text analysis on customer conversations.

Finally, you can use machine learning and text analysis to provide a better experience overall within your sales process. For example, Drift, a marketing conversational platform, integrated the MonkeyLearn API to allow recipients to automatically opt out of sales emails based on how they reply.

It's time to boost sales and stop wasting valuable time with leads that don't go anywhere. Xeneta, a sea freight company, developed a machine learning algorithm and trained it to identify which companies were potential customers, based on the company descriptions gathered through FullContact (a SaaS company that has descriptions of millions of companies).

You can do the same or target users that visit your website to:

- Get information about where potential customers work using a service like Clearbit and classify the company according to its type of business to see if it's a possible lead.

- Extract information to easily learn the user's job position, the company they work for, its type of business and other relevant information.

- Home in on the most qualified leads and save time looking for them: sales reps will receive the information automatically and start targeting the potential customers right away.

Product Analytics

Let's imagine your startup has an app on the Google Play store. You're receiving some unusually negative comments. What's going on?

You can find out what’s happening in just minutes by using a text analysis model that groups reviews into different tags like Ease of Use and Integrations. Then run them through a sentiment analysis model to find out whether customers are talking about products positively or negatively. Finally, graphs and reports can be created to visualize and prioritize product problems with MonkeyLearn Studio.

We did this with reviews for Slack from the product review site Capterra and got some pretty interesting insights. Here's how:

We analyzed reviews with aspect-based sentiment analysis and categorized them into main topics and sentiment.

We extracted keywords with the keyword extractor to get some insights into why reviews that are tagged under 'Performance-Quality-Reliability' tend to be negative.

Text Analysis Resources

There are a number of valuable resources out there to help you get started with all that text analysis has to offer.

Text Analysis APIs

You can use open-source libraries or SaaS APIs to build a text analysis solution that fits your needs. Open-source libraries require a lot of time and technical know-how, while SaaS tools can often be put to work right away and require little to no coding experience.

Open Source Libraries

Python

Python is one of the most widely used languages in scientific computing. Tools like NumPy and SciPy have established it as a fast, dynamic language that calls C and Fortran libraries where performance is needed.

These things, combined with a thriving community and a diverse set of libraries to implement natural language processing (NLP) models, have made Python one of the most preferred programming languages for doing text analysis.

NLTK

NLTK, the Natural Language Toolkit, is a best-of-class library for text analysis tasks. NLTK is used in many university courses, so there's plenty of code written with it and no shortage of users familiar with both the library and the theory of NLP who can help answer your questions.

SpaCy

SpaCy is an industrial-strength statistical NLP library. Aside from the usual features, it adds deep learning integration and convolutional neural network models for multiple languages.

Unlike NLTK, which is a research library, SpaCy aims to be a battle-tested, production-grade library for text analysis.

Scikit-learn

Scikit-learn is a complete and mature machine learning toolkit for Python built on top of NumPy, SciPy, and matplotlib, which gives it stellar performance and flexibility for building text analysis models.

TensorFlow

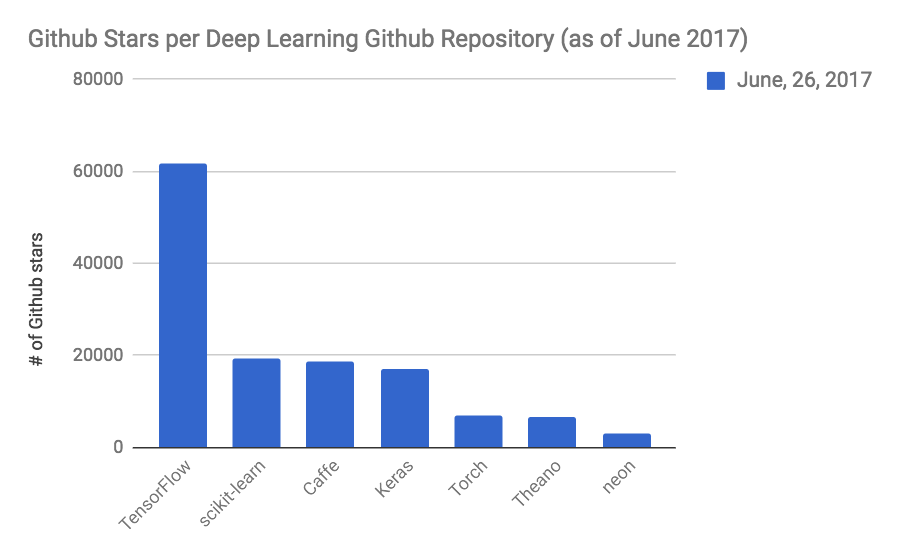

Developed by Google, TensorFlow is by far the most widely used library for distributed deep learning. Looking at this graph we can see that TensorFlow is ahead of the competition:

PyTorch

PyTorch is a deep learning platform built by Facebook and aimed specifically at deep learning. PyTorch is a Python-centric library, which allows you to define much of your neural network architecture in terms of Python code, and only internally deals with lower-level high-performance code.

Keras

Keras is a widely-used deep learning library written in Python. It's designed to enable rapid iteration and experimentation with deep neural networks, and as a Python library, it's uniquely user-friendly.

An important feature of Keras is that it provides what is essentially an abstract interface to deep neural networks. The actual networks can run on top of TensorFlow, Theano, or other backends. This backend independence makes Keras an attractive option in terms of its long-term viability.

The permissive MIT license makes it attractive to businesses looking to develop proprietary models.

R

R is the pre-eminent language for any statistical task. Its collection of libraries (13,711 on CRAN at the time of writing) far surpasses any other programming language's capabilities for statistical computing and is larger than many other ecosystems. In short, if you choose to use R for anything statistics-related, you won't find yourself in a situation where you have to reinvent the wheel, let alone the whole stack.

Caret

Caret is an R package designed to build complete machine learning pipelines, with tools for everything from data ingestion and preprocessing to feature selection and automatic model tuning.

mlr

The Machine Learning in R project (mlr for short) provides a complete machine learning toolkit for the R programming language that's frequently used for text analysis.

Java

Java needs no introduction. The language boasts an impressive ecosystem that stretches beyond Java itself and includes the libraries of other JVM languages such as Scala and Clojure. Beyond that, the JVM is battle-tested and has had thousands of person-years of development and performance tuning, so Java is likely to give you best-of-class performance for all your text analysis and NLP work.

CoreNLP

Stanford's CoreNLP project provides a battle-tested, actively maintained NLP toolkit. While it's written in Java, it has APIs for all major languages, including Python, R, and Go.

OpenNLP

The Apache OpenNLP project is another machine learning toolkit for NLP. It can be used from any language on the JVM platform.

Weka

Weka is a GPL-licensed Java library for machine learning, developed at the University of Waikato in New Zealand. In addition to a comprehensive collection of machine learning APIs, Weka has a graphical user interface called the Explorer, which allows users to interactively develop and study their models.

Weka supports extracting data from SQL databases directly, as well as deep learning through the deeplearning4j framework.

SaaS APIs

Using a SaaS API for text analysis has a lot of advantages:

- No setup:

Most SaaS tools are simple plug-and-play solutions with no libraries to install and no new infrastructure.

- No code:

SaaS APIs provide ready to use solutions. You give them data and they return the analysis. Every other concern – performance, scalability, logging, architecture, tools, etc. – is offloaded to the party responsible for maintaining the API.

You often just need to write a few lines of code to call the API and get the results back.

- Easy Integration:

SaaS APIs usually provide ready-made integrations with tools you may already use. This will allow you to build a truly no-code solution. Learn how to integrate text analysis with Google Sheets.

Some of the most well-known SaaS solutions and APIs for text analysis include:

There is an ongoing Build vs. Buy Debate when it comes to text analysis applications: build your own tool with open-source software, or use a SaaS text analysis tool?

Building your own software from scratch can be effective and rewarding if you have years of data science and engineering experience, but it’s time-consuming and can cost in the hundreds of thousands of dollars.

SaaS tools, on the other hand, are a great way to dive right in. They can be straightforward, easy to use, and just as powerful as building your own model from scratch. MonkeyLearn is a SaaS text analysis platform with dozens of pre-trained models. Or you can customize your own, often in only a few steps for results that are just as accurate. All with no coding experience necessary.

Training Datasets

If you talk to any data science professional, they'll tell you that the true bottleneck to building better models is not new and better algorithms, but more data.

Indeed, in machine learning data is king: a simple model, given tons of data, is likely to outperform one that uses every trick in the book to turn every bit of training data into a meaningful response.

So, here are some high-quality datasets you can use to get started:

Topic Classification

Reuters news dataset: one of the most popular datasets for text classification; it has thousands of articles from Reuters tagged with 135 categories according to their topics, such as Politics, Economics, Sports, and Business.

20 Newsgroups: a very well-known dataset that has more than 20k documents across 20 different topics.

Sentiment Analysis

Product reviews: a dataset with millions of customer reviews from products on Amazon.

Twitter airline sentiment on Kaggle: another widely used dataset for getting started with sentiment analysis. It contains more than 15k tweets about airlines (tagged as positive, neutral, or negative).

First GOP Debate Twitter Sentiment: another useful dataset with more than 14,000 labeled tweets (positive, neutral, and negative) from the first GOP debate of the 2016 presidential race.

Other Popular Datasets

Spambase: this dataset contains 4,601 emails tagged as spam and not spam.

SMS Spam Collection: another dataset for spam detection. It has more than 5k SMS messages tagged as spam and not spam.

Hate speech and offensive language: a dataset with more than 24k tagged tweets grouped into three tags: clean, hate speech, and offensive language.

Finding high-volume and high-quality training datasets is the most important part of text analysis, more important than the choice of the programming language or tools for creating the models. Remember, the best-architected machine-learning pipeline is worthless if its models are backed by unsound data.

Text Analysis Tutorials

The best way to learn is by doing.

First, we'll go through programming-language-specific tutorials using open-source tools for text analysis. These will help you deepen your understanding of the available tools for your platform of choice.

Then, we'll walk through a step-by-step tutorial of MonkeyLearn so you can get started with text analysis right away.

Tutorials Using Open Source Libraries

In this section, we'll look at various tutorials for text analysis in the main programming languages for machine learning that we listed above.

Python

NLTK

The official NLTK book is a complete resource that teaches you NLTK from beginning to end. In addition, the reference documentation is a useful resource to consult during development.

Other useful tutorials include:

WordNet with NLTK: Finding Synonyms for words in Python: this tutorial shows you how to build a thesaurus using Python and WordNet.

Tokenizing Words and Sentences with NLTK: this tutorial shows you how to use NLTK's language models to tokenize words and sentences.

SpaCy

spaCy 101: Everything you need to know: part of the official documentation, this tutorial shows you everything you need to know to get started using SpaCy.

This tutorial shows you how to build a WordNet pipeline with SpaCy.

Furthermore, there's the official API documentation, which explains the architecture and API of SpaCy.

If you prefer long-form text, there are a number of books about or featuring SpaCy:

- Introduction to Machine Learning with Python: A Guide for Data Scientists.

- Practical Machine Learning with Python.

- Text Analytics with Python.

Scikit-learn

The official scikit-learn documentation contains a number of tutorials on the basic usage of scikit-learn, building pipelines, and evaluating estimators.

Scikit-learn Tutorial: Machine Learning in Python shows you how to use scikit-learn and Pandas to explore a dataset, visualize it, and train a model.

For readers who prefer books, there are a couple of choices:

Our very own Raúl Garreta wrote this book: Learning scikit-learn: Machine Learning in Python.

Additionally, the book Hands-On Machine Learning with Scikit-Learn and TensorFlow introduces the use of scikit-learn in a deep learning context.

Keras

The official Keras website has extensive API as well as tutorial documentation. For readers who prefer long-form text, the Deep Learning with Keras book is the go-to resource. The book uses real-world examples to give you a strong grasp of Keras.

Other tutorials:

Practical Text Classification With Python and Keras: this tutorial implements a sentiment analysis model using Keras, and teaches you how to train, evaluate, and improve that model.

Text Classification in Keras: this article builds a simple text classifier on the Reuters news dataset. It classifies the text of an article into a number of categories such as sports, entertainment, and technology.

TensorFlow

TensorFlow Tutorial For Beginners introduces the mathematics behind TensorFlow and includes code examples that run in the browser, ideal for exploration and learning. The goal of the tutorial is to classify street signs.

The book Hands-On Machine Learning with Scikit-Learn and TensorFlow helps you build an intuitive understanding of machine learning using TensorFlow and scikit-learn.

Finally, there's the official Get Started with TensorFlow guide.

PyTorch

The official Get Started Guide from PyTorch shows you the basics of PyTorch. If you're interested in something more practical, check out this chatbot tutorial; it shows you how to build a chatbot using PyTorch.

The Deep Learning for NLP with PyTorch tutorial is a gentle introduction to the ideas behind deep learning and how they are applied in PyTorch.

Finally, the official API reference explains the functioning of each individual component.

R

Caret

A Short Introduction to the Caret Package shows you how to train and visualize a simple model. A Practical Guide to Machine Learning in R shows you how to prepare data, build and train a model, and evaluate its results. Finally, there's the official documentation, which is super useful for getting started with Caret.

mlr

For those who prefer long-form text, on arXiv we can find an extensive mlr tutorial paper. This is closer to a book than a paper and has extensive and thorough code samples for using mlr.

Java

CoreNLP

If you're interested in learning about CoreNLP, check out Linguisticsweb.org's tutorial, which explains how to quickly get started and perform a number of simple NLP tasks from the command line. Moreover, this CloudAcademy tutorial shows you how to use CoreNLP and visualize its results. You can also check out this tutorial specifically about sentiment analysis with CoreNLP. Finally, there's this tutorial on using CoreNLP with Python, which is useful for getting started with the framework.

OpenNLP

First things first: the official Apache OpenNLP Manual should be the starting point. The book Taming Text was written by an OpenNLP developer and uses the framework to show the reader how to implement text analysis. Moreover, this tutorial takes you on a complete tour of OpenNLP, including tokenization, part-of-speech tagging, sentence parsing, and chunking.

Weka

The Weka library has an official book, Data Mining: Practical Machine Learning Tools and Techniques, that comes in handy for getting your feet wet with Weka.

If you prefer videos to text, there are also a number of MOOCs using Weka:

Data Mining with Weka: this is an introductory course to Weka.

More Data Mining with Weka: this course involves larger datasets and a more complete text analysis workflow.

Advanced Data Mining with Weka: this course focuses on packages that extend Weka's functionality.

The Text Mining in WEKA Cookbook provides text-mining-specific instructions for using Weka.

How to Run Your First Classifier in Weka: shows you how to install Weka, run it, run a classifier on a sample dataset, and visualize its results.

Text Analysis Tutorial With MonkeyLearn Templates

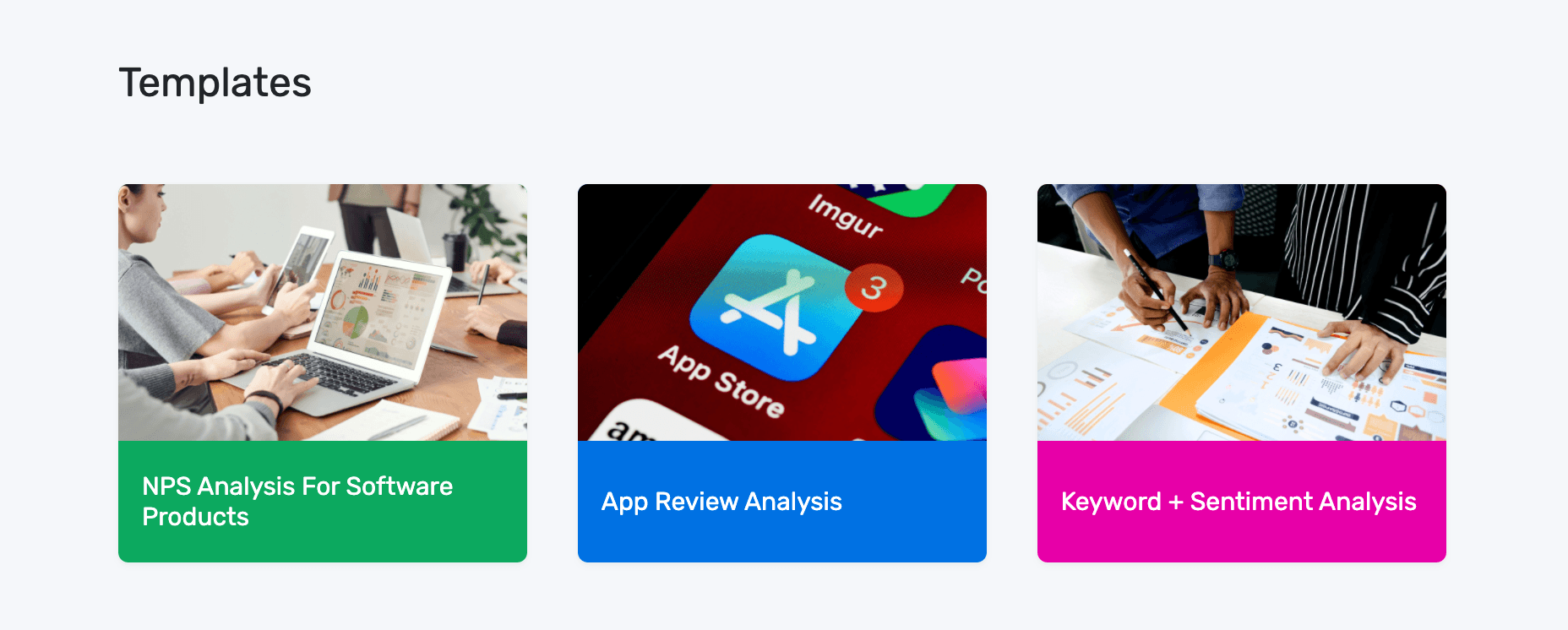

MonkeyLearn Templates is a simple and easy-to-use platform that you can use without writing a single line of code.

Follow the step-by-step tutorial below to see how you can run your data through text analysis tools and visualize the results:

1. Choose a template to create your workflow:

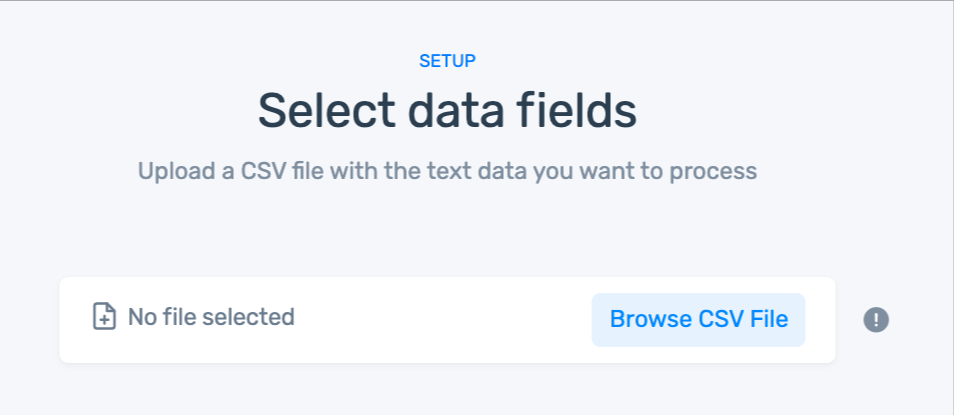

2. Upload your data.

We chose the app review template, so we’re using a dataset of reviews.

If you don't have a CSV file:

- You can use our sample dataset.

- Or, download your own survey responses from the survey tool you use by following this documentation.

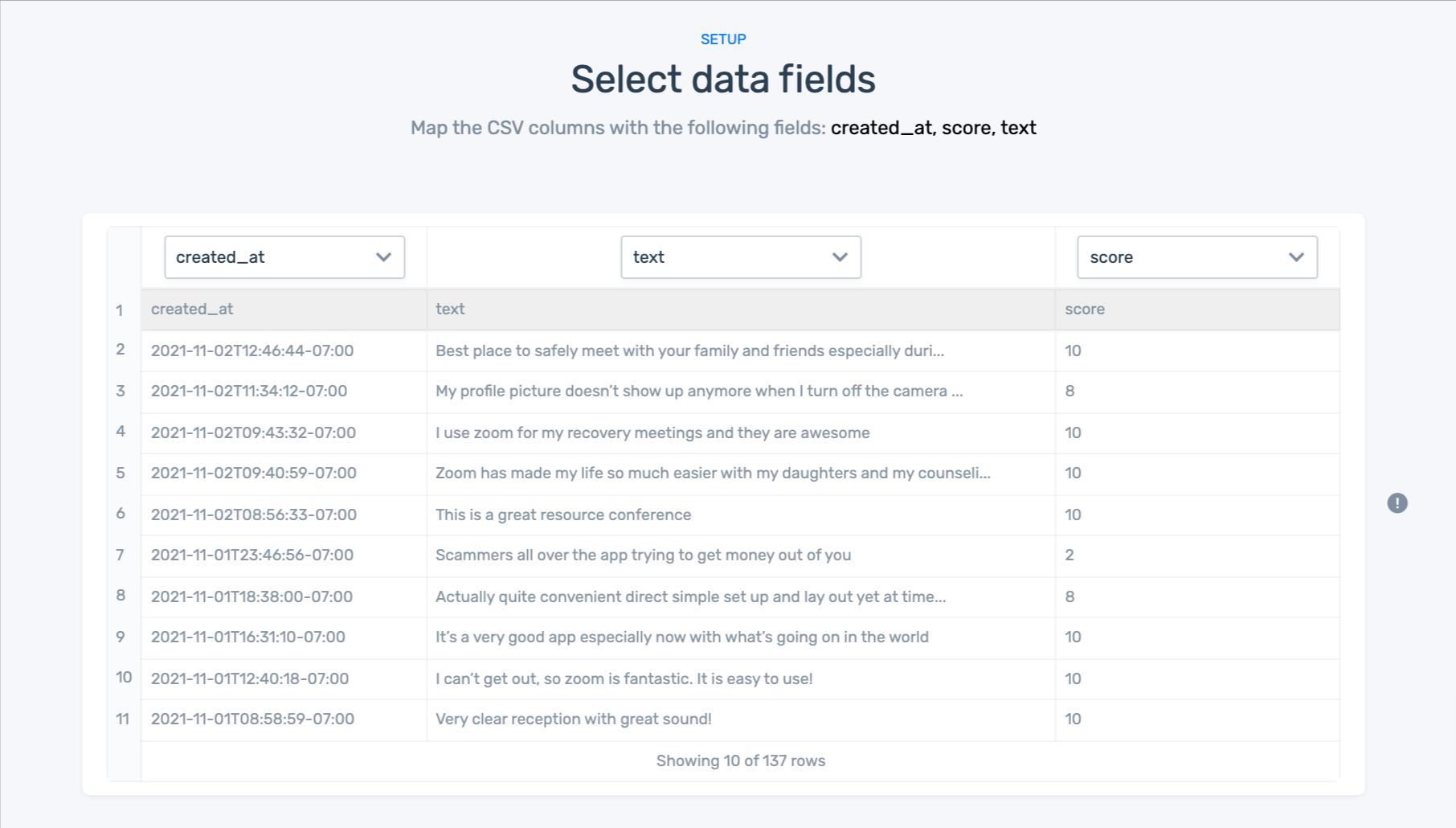

3. Match each column in your data to the right field:

Fields:

- created_at: Date that the response was sent.

- text: Text of the response.

- rating: Score given by the customer.
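If your reviews live somewhere other than a CSV file, a short script can put them into the three columns listed above. The following is a minimal sketch using only Python's standard library. The sample rows, the date format, and the `rows_to_csv` and `save_csv` helper names are illustrative assumptions, not something MonkeyLearn prescribes; check the template's upload screen for the exact format it expects.

```python
import csv
import io

# Column names taken from the field list above.
FIELDNAMES = ["created_at", "text", "rating"]

# Hypothetical sample rows; replace these with your own reviews.
# The ISO date format used here is an assumption.
rows = [
    {"created_at": "2021-03-01", "text": "Love the new update, very fast!", "rating": 5},
    {"created_at": "2021-03-02", "text": "App crashes when I open settings.", "rating": 1},
]


def rows_to_csv(rows):
    """Serialize a list of dicts to CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)  # fields containing commas are quoted automatically
    return buffer.getvalue()


def save_csv(csv_text, path):
    """Write CSV text to disk; newline='' avoids doubled line endings."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        f.write(csv_text)


csv_text = rows_to_csv(rows)
print(csv_text)
```

Calling `save_csv(csv_text, "reviews.csv")` would then produce a file you can upload in step 2. Using `csv.DictWriter` rather than joining strings by hand keeps review text that contains commas or quotes intact.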

4. Name your workflow.

5. Wait for MonkeyLearn to process your data:

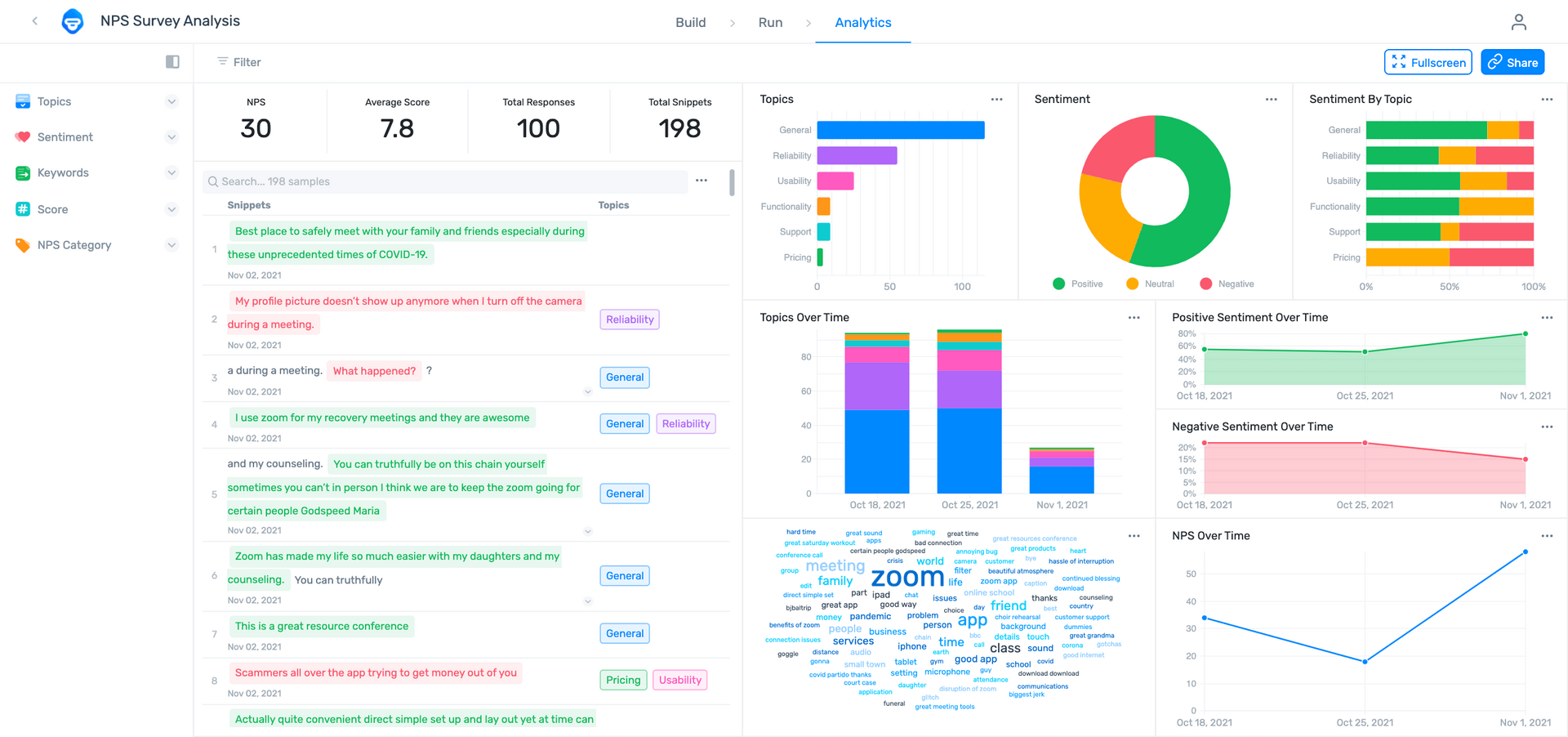

6. Explore your dashboard!

MonkeyLearn’s data visualization tools make it easy to understand your results in striking dashboards. Spot patterns, trends, and immediately actionable insights in broad strokes or minute detail.

You can:

- Filter by topic, sentiment, keyword, or rating.

- Share via email with coworkers.

Takeaway

Text analysis is no longer an exclusive, technobabble topic for software engineers with machine learning experience. It has become a powerful tool that helps businesses across every industry gain useful, actionable insights from their text data. Saving time, automating tasks, and increasing productivity have never been easier, allowing businesses to offload cumbersome tasks and help their teams provide a better service for their customers.

If you would like to give text analysis a go, sign up to MonkeyLearn for free and begin training your very own text classifiers and extractors – no coding needed thanks to our user-friendly interface and integrations.

And take a look at the MonkeyLearn Studio public dashboard to see how data visualization can present your results in broad strokes or minute detail.

Reach out to our team if you have any doubts or questions about text analysis and machine learning, and we'll help you get started!