Data Cleaning Steps & Process to Prep Your Data for Success

No matter what kind of data analytics you’re performing, your analysis and any other downstream processes are only as good as the data you start with.

Most raw data, whether text, images, or video (and often even data stored in spreadsheets), is improperly formatted, incomplete, or downright dirty, and needs to be cleaned and structured before you begin your analysis.

There are a number of data cleaning, “data cleansing,” or “data scrubbing” techniques you can put to use to ensure your data is properly prepared for analysis.

What Is Data Cleaning?

Data cleaning is the process of editing, correcting, and structuring data within a data set so that it’s generally uniform and prepared for analysis. This includes removing corrupt or irrelevant data and converting what remains into a consistent format that your analysis tools can read.

There is an often repeated saying in data analysis: “Garbage in, garbage out,” which means that, if you start with bad data (garbage), you’ll only get “garbage” results.

Data cleaning is often a tedious process, but it’s absolutely essential to get top results and powerful insights from your data.

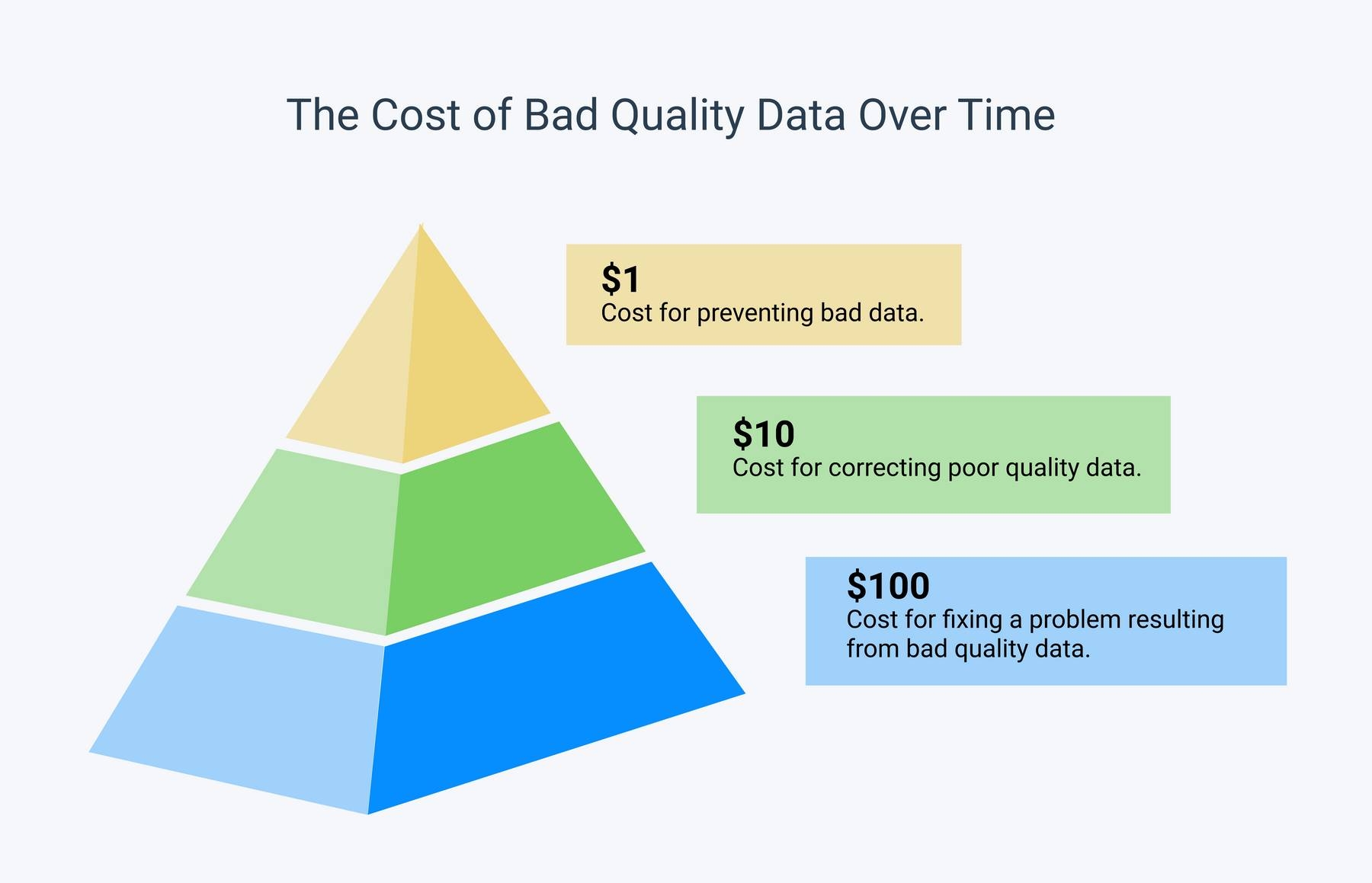

The 1-10-100 principle illustrates the point: it costs $1 to prevent bad data, $10 to correct bad data, and $100 to fix a downstream problem created by bad data.

So, it’s important that you perform proper data cleaning to ensure you get the best possible results.

In machine learning, many data scientists argue that better data matters even more than more powerful algorithms, because machine learning models only perform as well as the data they’re trained on.

If you’re training your models with bad data, the end results will not only be untrustworthy, but may be actively harmful to your organization.

Proper data cleaning will save time and money and make your organization more efficient, help you better target distinct markets and groups, and allow you to use the same data sets for multiple analyses and downstream functions.

Follow the data cleaning tips and data cleaning techniques below to set yourself up for optimum analysis and results.

Data Cleaning Steps & Techniques

Here is a six-step data cleaning process to make sure your data is ready to go.

- Step 1: Remove irrelevant data

- Step 2: Deduplicate your data

- Step 3: Fix structural errors

- Step 4: Deal with missing data

- Step 5: Filter out data outliers

- Step 6: Validate your data

1. Remove irrelevant data

First, you need to figure out what analyses you’ll be running and what your downstream needs are. What questions do you want to answer, or what problems do you want to solve?

Take a good look at your data and get an idea of what is relevant and what you may not need. Filter out data or observations that aren’t relevant to your downstream needs.

If you’re doing an analysis of SUV owners, for example, but your data set also contains data on sedan owners, the sedan records are irrelevant to your needs and would only skew your results.

You should also consider removing things like hashtags, URLs, emojis, HTML tags, etc., unless they are necessarily a part of your analysis.
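As a rough illustration of this step, the sketch below filters a small list of records down to SUV owners and strips URLs, HTML tags, and hashtags from a free-text field. The field names (`vehicle_type`, `comment`), the sample records, and the regular expressions are assumptions made up for this example; real data will likely need patterns tuned to its own quirks.

```python
import re

# Hypothetical sample records; the field names are assumptions for illustration.
records = [
    {"vehicle_type": "SUV", "comment": "Love it! <b>Great</b> ride https://example.com #suv"},
    {"vehicle_type": "Sedan", "comment": "Smooth and quiet"},
    {"vehicle_type": "SUV", "comment": "Roomy, but thirsty #fuel"},
]

# Deliberately simple patterns; they will not catch every URL or tag variant.
URL_RE = re.compile(r"https?://\S+")
TAG_RE = re.compile(r"<[^>]+>")
HASHTAG_RE = re.compile(r"#\w+")


def strip_noise(text):
    """Remove URLs, HTML tags, and hashtags, then collapse extra whitespace."""
    for pattern in (URL_RE, TAG_RE, HASHTAG_RE):
        text = pattern.sub(" ", text)
    return " ".join(text.split())


def keep_relevant(rows, vehicle_type="SUV"):
    """Keep only rows for the vehicle type under analysis, with cleaned comments."""
    return [
        {**row, "comment": strip_noise(row["comment"])}
        for row in rows
        if row["vehicle_type"] == vehicle_type
    ]


cleaned = keep_relevant(records)
for row in cleaned:
    print(row)
```

If hashtags or emojis carry meaning for your analysis (in sentiment analysis, for instance), you would drop the corresponding pattern from the loop rather than remove them.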

2. Deduplicate your data

If you’re collecting data from multiple sources or multiple departments, using scraped data for analysis, or receiving multiple survey or client responses, you will often end up with duplicate records.

Duplicate records slow down analysis and require more storage. Even more importantly, however, if you train a machine learning model on a data set with duplicate records, the model will likely give more weight to the duplicated records, in proportion to how many times they appear. So they need to be removed for well-balanced results.

Even simple data cleaning tools can help deduplicate your data, because exact duplicates are easy for software to detect. Near-duplicates, such as the same record with different capitalization or stray spaces, take a little more work.
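One way to sketch deduplication in plain Python is to build a normalized key for each record and keep only the first record seen for each key. The `dedupe` helper, the sample survey responses, and the choice to ignore case and surrounding whitespace are all assumptions for illustration; which fields define a "duplicate" depends on your data.

```python
def dedupe(rows, key_fields):
    """Return rows with duplicates removed, keeping the first occurrence.

    Two rows count as duplicates when their key fields match after
    trimming whitespace and lowercasing.
    """
    seen = set()
    unique = []
    for row in rows:
        key = tuple(str(row[field]).strip().lower() for field in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


# Hypothetical survey responses; the second is a near-copy of the first.
responses = [
    {"email": "ana@example.com", "answer": "yes"},
    {"email": "Ana@Example.com ", "answer": "yes"},
    {"email": "ben@example.com", "answer": "no"},
]

unique_responses = dedupe(responses, key_fields=["email", "answer"])
print(len(responses), "->", len(unique_responses))
```

Keeping the first occurrence is a design choice; if your records carry timestamps, you might prefer to keep the most recent one instead.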

3. Fix structural errors

Structural errors include things like misspellings, inconsistent naming conventions, improper capitalization, incorrect word use, etc. These can affect analysis because, while they may be obvious to humans, most machine learning applications wouldn’t recognize the mistakes, and your analyses would be skewed.

For example, if you’re running an analysis on two data sets, one with a ‘women’ column and another with a ‘female’ column, you would have to standardize the column name. Similarly, things like dates, addresses, and phone numbers need to be standardized so that computers can interpret them consistently.
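The sketch below shows one possible way to standardize labels and dates. The label mapping and the list of accepted date layouts are assumptions for this example; in particular, it assumes slash-separated dates are month-first, which you would need to confirm for your own sources.

```python
from datetime import datetime

# Hypothetical mapping from variant labels to one canonical label.
CANONICAL_LABELS = {
    "women": "female",
    "woman": "female",
    "f": "female",
    "female": "female",
}

# Date layouts assumed to appear in the source data (slashes are month-first).
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def standardize_label(label):
    """Trim, lowercase, and map known variants to the canonical label."""
    cleaned = label.strip().lower()
    return CANONICAL_LABELS.get(cleaned, cleaned)


def standardize_date(text):
    """Return the date as YYYY-MM-DD, or None if no known layout matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


print(standardize_label(" Women "))
print(standardize_date("06/03/2021"))
print(standardize_date("03.06.2021"))
```

Returning `None` for unrecognized dates, rather than guessing, leaves those values to be handled as missing data.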

4. Deal with missing data

Scan your data or run it through a cleaning program to locate missing cells, blank spaces in text, unanswered survey responses, etc. Gaps like these could be due to incomplete data or human error. You’ll need to decide whether everything connected to the missing data – an entire column or row, a whole survey, etc. – should be discarded, whether individual cells should be filled in manually, or whether the gaps should be left as is.

The best course of action to deal with missing data will depend on the analysis you want to do and how you plan to preprocess your data. Sometimes you can even restructure your data, so the missing values won’t affect your analysis.
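To make the options concrete, here is a minimal sketch that locates missing cells and then either drops incomplete rows or fills a numeric field with the median of the known values. The set of "missing" markers, the helper names, and the sample survey are assumptions for illustration, and median filling is just one common choice among several, not a recommendation for every data set.

```python
from statistics import median

# Strings we assume should count as "missing"; adjust for your own data.
MISSING_MARKERS = {"", "n/a", "na", "null", "none", "-"}


def is_missing(value):
    return value is None or str(value).strip().lower() in MISSING_MARKERS


def find_missing(rows):
    """Return (row_index, field) pairs for every missing cell."""
    return [
        (i, field)
        for i, row in enumerate(rows)
        for field, value in row.items()
        if is_missing(value)
    ]


def drop_incomplete(rows, required):
    """Discard any row that lacks a value in one of the required fields."""
    return [row for row in rows if not any(is_missing(row.get(f)) for f in required)]


def fill_with_median(rows, field):
    """Convert a numeric field to float, replacing missing cells with the median."""
    known = [float(row[field]) for row in rows if not is_missing(row.get(field))]
    fill = median(known)  # raises StatisticsError if every value is missing
    return [
        {**row, field: fill if is_missing(row.get(field)) else float(row[field])}
        for row in rows
    ]


survey = [
    {"id": 1, "age": "34", "rating": "4"},
    {"id": 2, "age": "", "rating": "5"},
    {"id": 3, "age": "29", "rating": "N/A"},
]

print(find_missing(survey))
complete_only = drop_incomplete(survey, required=["age", "rating"])
age_filled = fill_with_median(survey, "age")
```

Dropping rows is the safer choice when few are affected; filling keeps more data but introduces values that were never observed.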

5. Filter out data outliers

Outliers are data points that fall far outside of the norm and may skew your analysis too far in a certain direction. For example, if you’re averaging a class’s test scores and one student refuses to answer any of the questions, that student’s 0% would have a big impact on the overall average. In this case, you should consider deleting the data point altogether, which may give an average that better reflects how the class actually performed.

However, a number being much smaller or larger than the others you’re analyzing doesn’t mean the ultimate analysis will be inaccurate, and the mere existence of an outlier doesn’t mean it should be ignored. You’ll have to consider what kind of analysis you’re running and what effect removing or keeping an outlier will have on your results.
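As a sketch of the test-score example, the code below flags outliers using the common interquartile range (IQR) rule and compares the class average with and without them. The IQR rule, the 1.5 multiplier, and the sample scores are assumptions for illustration, not a method prescribed here; the code only flags values so that you can decide whether removing them is justified.

```python
from statistics import mean, quantiles

# Hypothetical test scores; one student answered no questions.
scores = [0, 72, 75, 78, 80, 83, 85, 88, 91]


def iqr_bounds(values, k=1.5):
    """Return (lower, upper) limits; values outside them are flagged as outliers."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    return q1 - k * spread, q3 + k * spread


lower, upper = iqr_bounds(scores)
outliers = [s for s in scores if s < lower or s > upper]
kept = [s for s in scores if lower <= s <= upper]

print("flagged:", outliers)
print("mean with outliers:", round(mean(scores), 2))
print("mean without outliers:", round(mean(kept), 2))
```

Comparing the two averages side by side is a quick way to see how much a single point is driving the result before you commit to deleting it.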

6. Validate your data

Data validation is the final data cleaning step: it confirms that your data is high quality, consistent, and properly formatted for downstream processes. Ask yourself:

- Do you have enough data for your needs?

- Is it uniformly formatted in a design or language that your analysis tools can work with?

- Does your clean data immediately prove or disprove your theory before analysis?

Validate that your data is regularly structured and sufficiently clean for your needs. Cross check corresponding data points and make sure nothing is missing or inaccurate.

Machine learning and AI tools can be used to verify that your data is valid and ready to be put to use. And once you’ve gone through the proper data cleaning steps, you can use data wrangling techniques and tools to help automate the process.
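A few of these checks can be automated with very little code. The sketch below assumes a minimum row count, a list of required fields, and a `date` field that should be in YYYY-MM-DD form; all of these rules, along with the `validate` helper itself, are hypothetical and stand in for whatever your own analysis requires.

```python
import re

ISO_DATE_RE = re.compile(r"^\d{4}-\d{2}-\d{2}$")


def validate(rows, required_fields, min_rows):
    """Return a list of readable problems; an empty list means all checks passed."""
    problems = []
    if len(rows) < min_rows:
        problems.append(f"only {len(rows)} rows, expected at least {min_rows}")
    for i, row in enumerate(rows):
        for field in required_fields:
            if row.get(field) in (None, ""):
                problems.append(f"row {i}: missing {field}")
        date = row.get("date")
        if date and not ISO_DATE_RE.match(date):
            problems.append(f"row {i}: date {date!r} is not YYYY-MM-DD")
    return problems


# Hypothetical cleaned data with two remaining issues.
rows = [
    {"id": 1, "date": "2021-06-03"},
    {"id": 2, "date": "06/03/2021"},
    {"id": None, "date": "2021-06-04"},
]

problems = validate(rows, required_fields=["id", "date"], min_rows=2)
for problem in problems:
    print(problem)
```

Collecting every problem instead of stopping at the first one gives you a full picture of what still needs cleaning in a single pass.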

Data Cleaning Tips

- Create the right process and use it consistently

Set up a data cleaning process that’s right for your data, your needs, and the tools you’ll use for analysis. This is an iterative process, so once you have your specific steps and techniques in place, you’ll need to follow them religiously for all subsequent data and analyses.

It’s important to remember that, although data cleaning may be tedious, it’s absolutely vital to your downstream processes. If you don’t start with clean data, you’ll undoubtedly regret it in the future when your analysis produces “garbage results.”
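One simple way to keep a process consistent is to write each cleaning step as a function and run them in a fixed order on every new batch of data. The two steps and the `run_pipeline` helper below are hypothetical placeholders; you would substitute the steps that suit your own data.

```python
def strip_whitespace(rows):
    """Trim leading and trailing whitespace from every string value."""
    return [
        {key: value.strip() if isinstance(value, str) else value for key, value in row.items()}
        for row in rows
    ]


def drop_empty_rows(rows):
    """Remove rows in which every value is empty."""
    return [row for row in rows if any(value not in (None, "") for value in row.values())]


# The order matters: whitespace-only cells become empty before empty rows are dropped.
CLEANING_STEPS = [strip_whitespace, drop_empty_rows]


def run_pipeline(rows, steps=CLEANING_STEPS):
    """Apply each cleaning step in order and return the cleaned rows."""
    for step in steps:
        rows = step(rows)
    return rows


batch = [{"name": "  Ana "}, {"name": "   "}]
result = run_pipeline(batch)
print(result)
```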

- Use tools

There are a number of helpful data cleaning tools you can put to use to help the process – from free and basic, to advanced and machine learning augmented. Do some research and find out what data cleaning tools are best for you.

If you know how to code, you can build models for your specific needs, but there are great tools even for non-coders. Check out tools with an efficient UI, so you can preview the effect of your filters and quickly test them on different data samples.

- Pay attention to errors and track where dirty data comes from

Track and annotate common errors and trends in your data, so you’ll know what kinds of cleaning techniques you need to use on data from different sources. This will save huge amounts of time and make your data even cleaner – especially when integrating with analysis tools you use regularly.
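Tracking can be as lightweight as counting each error by source and type as you clean. In the sketch below, the source names, the error types, and the `record_error` helper are invented for illustration.

```python
from collections import Counter

# Maps (source, error_type) to the number of times it was seen.
error_log = Counter()


def record_error(source, error_type):
    """Note one occurrence of an error found while cleaning data from a source."""
    error_log[(source, error_type)] += 1


# Hypothetical errors found during one cleaning run.
record_error("crm_export", "duplicate")
record_error("crm_export", "duplicate")
record_error("web_survey", "missing_value")

# List the most frequent problems first.
for (source, error_type), count in error_log.most_common():
    print(f"{source}: {error_type} x{count}")
```

Over time, a tally like this shows which sources need the most attention and which cleaning steps are worth automating first.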

Wrap Up

It’s clear that data cleaning is a necessary, if slightly annoying, process when running any kind of data analysis. Follow the steps above and you’re well on your way to having data that’s fully prepped and ready for downstream processes.

Remember to keep your processes consistent and don’t cut corners on data cleaning, so you’ll end up with accurate, real-world, immediately actionable results.

MonkeyLearn is a SaaS machine learning text analysis platform with a suite of tools to help you get the most out of your clean data.

Take a look at MonkeyLearn to learn about sentiment analysis, topic categorization, keyword analysis, and dozens of other techniques that can run automatically, 24/7 on your text data, so you never miss an insight.

Tobias Geisler Mesevage

June 3rd, 2021