The Practical Guide to Textual Analysis

Textual analysis is the process of gathering and examining qualitative data to understand what it’s about.

But making sense of qualitative information is a major challenge. Whether you are analyzing data in business or performing academic research, manually reading, analyzing, and tagging text is no longer effective: it’s time-consuming, results are often inaccurate, and the process is far from scalable.

Fortunately, developments in the sub-fields of Artificial Intelligence (AI) like machine learning and natural language processing (NLP) are creating unprecedented opportunities to process and analyze large collections of text data.

Thanks to algorithms trained with machine learning, it is possible to perform a myriad of tasks that involve analyzing text, like topic classification (automatically tagging texts by topic), feature extraction (identifying specific characteristics in a text), and sentiment analysis (recognizing the opinions and emotions that underlie a given text).

Below, we’ll dive into textual analysis with machine learning, explain what it is and how it works, and reveal its most important applications in business and academic research.

Let’s start with the basics!

Getting Started With Textual Analysis

What is Textual Analysis?

While similar to text analysis, textual analysis is mainly used in academic research to analyze content related to media and communication studies, popular culture, sociology, and philosophy.

In this case, the purpose of textual analysis is to understand the cultural and ideological aspects that underlie a text and how they are connected with the particular context in which the text has been produced. In short, textual analysis consists of describing the characteristics of a text and making interpretations to answer specific questions.

One of the challenges of textual analysis resides in how to turn complex, large-scale data into manageable information. Computer-assisted textual analysis can be instrumental at this point, as it allows you to perform certain tasks automatically (without having to read all the data) and makes it simple to observe patterns and get unexpected insights. For example, you could perform automated textual analysis on a large set of data and easily tag all the information according to a series of previously defined categories. You could also use it to extract specific pieces of data, like names, countries, emails, or any other features.

Companies are using computer-assisted textual analysis to make sense of unstructured business data and find relevant insights that lead to data-driven decisions. It’s also being used to automate everyday tasks like ticket tagging and routing, which improves productivity and saves valuable time.

What is the Difference Between Textual Analysis and Content Analysis?

When we talk about textual analysis we refer to a data-gathering process for analyzing text data. This qualitative methodology examines the structure, content, and meaning of a text, and how it relates to the historical and cultural context in which it was produced. To do so, textual analysis combines knowledge from different disciplines, like linguistics and semiotics.

Content analysis can be considered a subcategory of textual analysis that systematically analyzes text by coding its elements to get quantitative insights. By coding text (that is, establishing different categories for the analysis), content analysis makes it possible to examine large sets of data and make replicable and valid inferences.

Sitting at the intersection between qualitative and quantitative approaches, content analysis has proved very useful for studying a wide array of text data, from newspaper articles to social media messages, in fields ranging from academic research to organizational and business studies.

What is Computer-Assisted Textual Analysis?

Computer-assisted textual analysis involves using software, digital platforms, or computational tools to perform text analysis tasks automatically.

Developments in machine learning make it possible to create algorithms that can be trained with examples and learn a series of tasks, from identifying topics in a given text to extracting relevant information from an extensive collection of data. Natural Language Processing (NLP), another sub-field of AI, helps machines process unstructured data and transform it into manageable information that’s ready to analyze.

Automated textual analysis enables you to analyze large amounts of data that would require a significant amount of time and resources if done manually. Not only is automated textual analysis fast and straightforward, but it’s also scalable and provides consistent results.

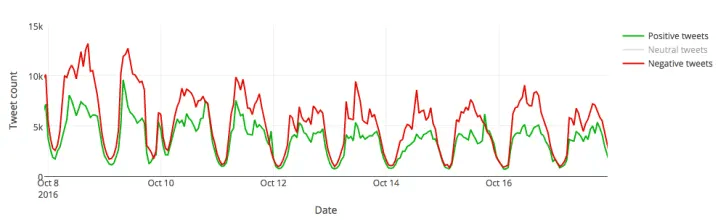

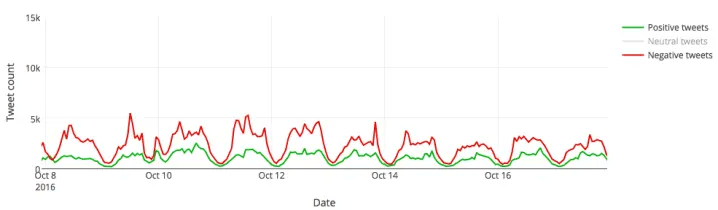

Let’s look at an example. During the 2016 US presidential election, we used MonkeyLearn to analyze millions of tweets referring to Donald Trump and Hillary Clinton. A text classification model allowed us to tag each tweet with one of two predefined categories: Trump and Hillary. The results showed that, on an average day, Donald Trump was getting around 450,000 Twitter mentions while Hillary Clinton was only getting about 250,000. And that was just the tip of the iceberg! What was really interesting were the nuances of those mentions: were they favorable or unfavorable? By performing sentiment analysis, we were able to discover the feelings behind those messages and gain some interesting insights about the polarity of those opinions.

For example, this is what the tweets about Trump looked like when counted by sentiment:

And this graphic shows the same for Hillary Clinton:

There are many methods and techniques for automated textual analysis. In the following section, we’ll take a closer look at each of them so that you have a better idea of what you can do with computer-assisted textual analysis.

Textual Analysis Methods & Techniques

Basic Methods

Word Frequency

Word frequency helps you find the most recurrent terms or expressions within a set of data. Counting the times a word is mentioned in a group of texts can lead you to interesting insights, for example, when analyzing customer feedback responses. If the terms ‘hard to use’ or ‘complex’ often appear in comments about your product, it may indicate you need to make UI/UX adjustments.
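To make the idea concrete, here is a minimal word-frequency sketch using only Python’s standard library. The `word_frequencies` helper and the sample feedback are illustrative assumptions. Multi-word expressions like ‘hard to use’ are counted by plain substring matching, which is a simplification because it would also match inside longer strings.

```python
import re
from collections import Counter

def word_frequencies(texts, phrases=()):
    """Count single words across texts, plus occurrences of any multi-word phrases."""
    counts = Counter()
    for text in texts:
        lowered = text.lower()
        # Split on anything that is not a letter, digit or apostrophe.
        counts.update(re.findall(r"[a-z0-9']+", lowered))
        # Multi-word expressions such as 'hard to use' are counted by substring search.
        for phrase in phrases:
            counts[phrase] += lowered.count(phrase)
    return counts

feedback = [
    "The app is hard to use and the setup is complex.",
    "Great support, but the dashboard is complex.",
    "Honestly hard to use on mobile.",
]
freq = word_frequencies(feedback, phrases=("hard to use",))
print(freq["complex"], freq["hard to use"])  # 2 2
```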

Collocation

By ‘collocation’ we mean a sequence of words that frequently occur together. Collocations are usually bigrams (pairs of words) or trigrams (combinations of three words). ‘Average salary’, ‘global market’, ‘close a deal’, ‘make an appointment’, and ‘attend a meeting’ are examples of collocations related to business.

In textual analysis, identifying collocations is useful for understanding the semantic structure of a text. Treating bigrams and trigrams as single units can improve the accuracy of the analysis.
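A minimal sketch of collocation spotting follows. It assumes that collocations can be approximated by n-grams that recur at least `min_count` times, and the helper names are hypothetical. Raw counts are the simplest option. Toolkits often rank candidates with association measures such as pointwise mutual information instead, and removing stopwords filters out pairs like ‘the global’.

```python
import re
from collections import Counter

def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def top_collocations(texts, n=2, min_count=2):
    """Count n-grams across texts and keep the ones that recur, most frequent first."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(ngrams(tokens, n))
    return [(" ".join(g), c) for g, c in counts.most_common() if c >= min_count]

docs = [
    "We need to close a deal before the meeting.",
    "Please make an appointment to close a deal.",
    "The global market grew; the global market is volatile.",
]
print(top_collocations(docs, n=2))  # bigrams
print(top_collocations(docs, n=3))  # trigrams
```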

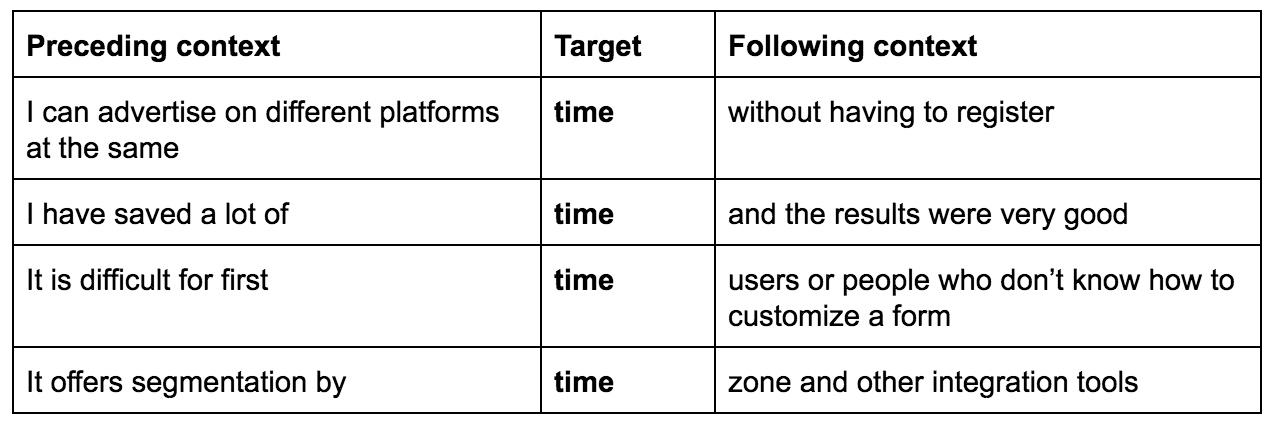

Concordance

Human language is ambiguous: depending on the context, the same word can mean different things. Concordance is used to find every instance in which a word or a series of words appears, so you can see it in context and understand its exact meaning. For example, you could list every sentence in a set of product reviews that contains the word ‘time’ and check which sense each one uses.
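Concordances are often displayed as keyword-in-context (KWIC) lines. The sketch below prints each match of a word with a fixed window of surrounding characters. The `concordance` helper and the sample reviews are hypothetical. The `\b` word boundaries keep ‘time’ from matching inside words like ‘timeline’.

```python
import re

def concordance(texts, keyword, width=25):
    """Return keyword-in-context lines: each match plus `width` characters on each side."""
    lines = []
    pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    for text in texts:
        for match in pattern.finditer(text):
            left = text[max(0, match.start() - width):match.start()]
            right = text[match.end():match.end() + width]
            # Right-align the left context so the keyword lines up in a column.
            lines.append(f"{left:>{width}}[{match.group(0)}]{right}")
    return lines

reviews = [
    "Setup took a long time, but support was helpful.",
    "It saves me time every single day.",
    "Third time it crashed this week.",
]
for line in concordance(reviews, "time"):
    print(line)
```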

Advanced Methods

Text Classification

Text classification is the process of assigning tags or categories to unstructured data based on its content.

When we talk about unstructured data we refer to all sorts of text-based information that is unorganized, and therefore complex to sort and manage. For businesses, unstructured data may include emails, social media posts, chats, online reviews, support tickets, among many others. Text classification ― one of the essential tasks of Natural Language Processing (NLP) ― makes it possible to analyze text in a simple and cost-efficient way, organizing the data according to topic, urgency, sentiment or intent. We’ll take a closer look at each of these applications below:

Topic analysis consists of assigning predefined tags to an extensive collection of text data, based on its topics or themes. Let’s say you want to analyze a series of product reviews to understand what aspects of your product are being discussed, and a review reads ‘the customer service is very responsive, they are always ready to help’. This piece of feedback will be tagged under the topic ‘Customer Service’.

Sentiment analysis, also known as ‘opinion mining’, is the automated process of understanding the attributes of an opinion, that is, the emotions and polarity (e.g. positive, negative, or neutral) that underlie a text. Sentiment analysis provides exciting opportunities in all kinds of fields. In business, you can use it to analyze customer feedback, social media posts, emails, support tickets, and chats. For instance, you could analyze support tickets to identify angry customers and solve their issues as a priority. You may also combine topic analysis with sentiment analysis (an approach called aspect-based sentiment analysis) to identify the topics being discussed about your product and how people are reacting to those topics. For example, take the product review we mentioned earlier for topic analysis: ‘the customer service is very responsive, they are always ready to help’. This statement would be classified as both Positive and Customer Service.

Language detection: this allows you to classify a text based on its language. It’s particularly useful for routing purposes. For example, if you get a support ticket in Spanish, it could be automatically routed to a Spanish-speaking customer support team.

Intent detection: text classifiers can also be used to recognize the intent of a given text. What is the purpose behind a specific message? This can be helpful if you need to analyze customer support conversations or the results of a sales email campaign. For example, you could analyze email responses and classify your prospects based on their level of interest in your product.
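The sketch below shows what a combined topic and sentiment output can look like for the review discussed above. It is a toy, keyword-based approach. The keyword lists and the `tag_review` helper are hypothetical, whereas a real classifier would learn these associations from tagged examples. It also simplifies aspect-based analysis by attaching one overall sentiment to every detected topic rather than scoring each aspect separately.

```python
import re

# Hypothetical keyword lists; a trained model would learn these from examples.
TOPIC_KEYWORDS = {
    "Customer Service": {"customer service", "support", "help"},
    "Price": {"price", "expensive", "cheap"},
}
POSITIVE = {"responsive", "helpful", "great", "ready", "love"}
NEGATIVE = {"slow", "expensive", "rude", "broken", "hate"}

def tag_review(text):
    """Return (topics, sentiment) for a review using keyword and lexicon matching."""
    lowered = text.lower()
    words = set(re.findall(r"[a-z]+", lowered))
    # Topic keywords are matched as substrings, so 'help' also fires on 'helpful'.
    topics = [t for t, kws in TOPIC_KEYWORDS.items() if any(k in lowered for k in kws)]
    score = len(words & POSITIVE) - len(words & NEGATIVE)
    sentiment = "Positive" if score > 0 else "Negative" if score < 0 else "Neutral"
    return topics, sentiment

print(tag_review("the customer service is very responsive, they are always ready to help"))
# (['Customer Service'], 'Positive')
```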

Text Extraction

Text extraction is a textual analysis technique which consists of extracting specific terms or expressions from a collection of text data. Unlike text classification, the result is not a predefined tag but a piece of information that is already present in the text. For example, if you have a large collection of emails to analyze, you could easily pull out specific information such as email addresses, company names or any keyword that you need to retrieve. In some cases, you can combine text classification and text extraction in the same analysis.

The most useful text extraction tasks include:

Named-entity recognition: used to extract the names of people, companies, and other organizations from a set of data.

Keyword extraction: allows you to extract the most relevant terms within a text. You can use keyword extraction to index data for search, create tag clouds, summarize the content of a text, and much more.

Feature extraction: used to identify specific characteristics within a text. For example, if you are analyzing a series of product descriptions, you could create customized extractors to retrieve information like brand, model, color, etc.
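Here is a minimal sketch of a customized extractor for laptop descriptions. It assumes dictionary lookups for brand and color and a regular expression for screen size. The vocabularies and the `extract_features` helper are hypothetical. Extracting model names usually needs catalogue data or a trained extractor, so it is left out here.

```python
import re

# Hypothetical vocabularies; in practice these come from a product catalogue.
BRANDS = ["Lenovo", "Sony", "Hewlett Packard", "Apple"]
COLORS = ["black", "silver", "white", "blue"]

def extract_features(description):
    """Pull brand, color and screen size out of a free-text product description."""
    features = {}
    for field, vocab in (("brand", BRANDS), ("color", COLORS)):
        for term in vocab:
            if re.search(r"\b" + re.escape(term) + r"\b", description, re.IGNORECASE):
                features[field] = term  # keep the first vocabulary match
                break
    # Screen sizes written like '14-inch' or '15.6 inch'.
    size = re.search(r"(\d+(?:\.\d+)?)[- ]?inch", description, re.IGNORECASE)
    if size:
        features["screen_inches"] = float(size.group(1))
    return features

print(extract_features("Lenovo ThinkPad, 14-inch display, matte black finish"))
# {'brand': 'Lenovo', 'color': 'black', 'screen_inches': 14.0}
```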

Why is Textual Analysis Important?

Every day, we create a colossal amount of digital data. In fact, according to a widely cited estimate, 90% of all the data in the world was generated in the last two years alone. That includes social media messages, emails, Google searches, and every other source of online data.

At the same time, books, media libraries, reports, and other types of databases are now available in digital format, providing researchers of all disciplines opportunities that didn’t exist before.

But the problem is that most of this data is unstructured. Since it doesn’t follow any organizational criteria, unstructured text is hard to search, manage, and examine. In this scenario, automated textual analysis tools are essential, as they help make sense of text data and find meaningful insights in a sea of information.

Text analysis enables businesses to go through massive collections of data with minimum human effort, saving precious time and resources, and allowing people to focus on areas where they can add more value. Here are some of the advantages of automated textual analysis:

Scalability

You can analyze as much data as you need in just seconds. Not only will you save valuable time, but you’ll also make your teams much more productive.

Real-time analysis

For businesses, it is key to detect angry customers in time or be warned of a potential PR crisis. By creating customized machine learning models for text analysis, you can easily monitor chats, reviews, social media channels, support tickets, and all sorts of crucial data sources in real time, so you’re ready to take action when needed.

Academic researchers, especially in the political science field, may find real-time analysis with machine learning particularly useful to analyze polls, Twitter data, and election results.

Consistent criteria

Routine manual tasks (like tagging incoming tickets or processing customer feedback) often end up being tedious and time-consuming. There are more chances of making mistakes, and the criteria applied by different team members often turn out to be inconsistent and subjective. Machine learning algorithms, on the other hand, learn from previous examples and always apply the same criteria to analyze data.

How does Textual Analysis Work?

Computer-assisted textual analysis makes it easy to analyze large collections of text data and find meaningful information. Thanks to machine learning, it is possible to create models that learn from examples and can be trained to classify or extract relevant data.

But how easy is it to get started with textual analysis?

As with most things related to artificial intelligence (AI), automated text analysis is perceived as a complex tool, only accessible to those with programming skills. Fortunately, that’s no longer the case. AI platforms like MonkeyLearn are actually very simple to use and don’t require any previous machine learning expertise. First-time users can try different pre-trained text analysis models right away, and use them for specific purposes even if they don’t have coding skills or have never studied machine learning.

However, if you want to take full advantage of textual analysis and create your own customized models, you should understand how it works.

There are two steps you need to follow before running an automated analysis: data gathering and data preparation. Here, we’ll explain them in more detail:

Data gathering: when we think of a topic we want to analyze, we should first make sure that we can obtain the data we need. Let’s say you want to analyze all the customer support tickets your company has received over a designated period of time. You should be able to export that information from your software as a CSV or an Excel file. The data can be either internal (that is, data that’s only available to your business, like emails, support tickets, chats, spreadsheets, surveys, databases, etc.) or external (like review sites, social media, news outlets, or other websites).
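Once the tickets are exported, loading them is usually the first bit of code in the pipeline. The sketch below reads a CSV export with Python’s built-in `csv` module. The column names (`id`, `created_at`, `subject`, `body`) and the `load_tickets` helper are hypothetical, and an in-memory string stands in for the exported file.

```python
import csv
import io

# Stand-in for a file exported from a help desk tool; the columns are hypothetical.
EXPORT = """id,created_at,subject,body
101,2019-03-01,Card declined,I'm having problems when paying with my credit card
102,2019-03-02,Login,I can't log in to my account
"""

def load_tickets(file_obj, text_fields=("subject", "body")):
    """Read a CSV export and return (ticket_id, text) pairs ready for analysis."""
    reader = csv.DictReader(file_obj)
    return [(row["id"], " ".join(row[f] for f in text_fields)) for row in reader]

# With a real export you would pass open("tickets.csv", newline="", encoding="utf-8").
tickets = load_tickets(io.StringIO(EXPORT))
print(tickets[0])
```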

Data preparation: before performing automated text analysis it’s necessary to prepare the data that you are going to use. This is done by applying a series of Natural Language Processing (NLP) techniques. Tokenization, parsing, lemmatization, stemming and stopword removal are just a few of them.
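A minimal sketch of three of these steps (tokenization, stopword removal, and stemming) is shown below in plain Python. The stopword list is a small illustrative sample, and `crude_stem` is a deliberately simple suffix stripper, not a real algorithm such as the Porter stemmer. Libraries like NLTK or spaCy provide fuller versions of these steps, including lemmatization, which maps words to dictionary forms and is not shown here.

```python
import re

# A tiny illustrative stopword list; NLP libraries ship much fuller ones.
STOPWORDS = {"the", "a", "an", "is", "are", "was", "to", "of", "and",
             "my", "i", "it", "with", "when"}

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def crude_stem(token):
    """Strip a few common English suffixes, keeping at least three characters."""
    for suffix in ("ing", "ed", "es", "s"):
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def preprocess(text):
    """Tokenize, drop stopwords, then stem what is left."""
    return [crude_stem(t) for t in tokenize(text) if t not in STOPWORDS]

print(preprocess("The payments failed when paying with my credit cards"))
# ['payment', 'fail', 'pay', 'credit', 'card']
```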

Once these steps are complete, you will be all set for the data analysis itself. In this section, we’ll look at how the most common textual analysis methods work: text classification and text extraction.

Text Classification

Text classification is the process of assigning tags to a collection of data based on its content.

When done manually, text categorization is a time-consuming task that often leads to mistakes and inaccuracies. By doing it automatically, it is possible to obtain very good results while spending less time and fewer resources. There are three main approaches to automatic text classification: rule-based, machine learning-based, and hybrid.

Rule-based systems

Rule-based systems follow an ‘if-then’ (condition-action) structure based on linguistic rules. Basically, rules are human-made associations between a linguistic pattern in a text and a predefined tag. These linguistic patterns often refer to morphological, syntactic, lexical, semantic, or phonological aspects.

For instance, this could be a rule to classify a series of laptop descriptions:

(Lenovo | Sony | Hewlett Packard | Apple) → Brand

In this case, when the text classification model detects any of those words within a text (the ‘if’ portion), it will assign the predefined tag ‘Brand’ to that text (the ‘then’ portion).
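The rule above translates almost directly into a regular expression. In this minimal sketch, each rule pairs a pattern (the ‘if’ part) with a tag (the ‘then’ part). The brand rule mirrors the example, while the ‘Specs’ rule and the `classify` helper are hypothetical additions that show how rules accumulate.

```python
import re

# Each rule pairs a linguistic pattern (the 'if' part) with a tag (the 'then' part).
RULES = [
    (re.compile(r"\b(Lenovo|Sony|Hewlett Packard|Apple)\b", re.IGNORECASE), "Brand"),
    # Hypothetical second rule, to show how a rule set grows.
    (re.compile(r"\b(\d+\s?GB|SSD|RAM)\b", re.IGNORECASE), "Specs"),
]

def classify(text):
    """Return every tag whose pattern matches the text, in rule order."""
    return [tag for pattern, tag in RULES if pattern.search(text)]

print(classify("Apple MacBook Air with 8 GB RAM"))  # ['Brand', 'Specs']
```

Because the match is case-insensitive, a phrase like ‘apple pie’ would also be tagged Brand. Edge cases of this kind are one reason rule sets need constant testing as they grow.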

One of the main advantages of rule-based systems is that they are easy for humans to understand. On the downside, creating complex systems is quite tricky, because you need good knowledge of linguistics and of the topics present in the text you want to analyze. Besides, adding new rules can be tough, as it requires extensive testing, which makes rule-based systems hard to scale.

Machine learning-based systems

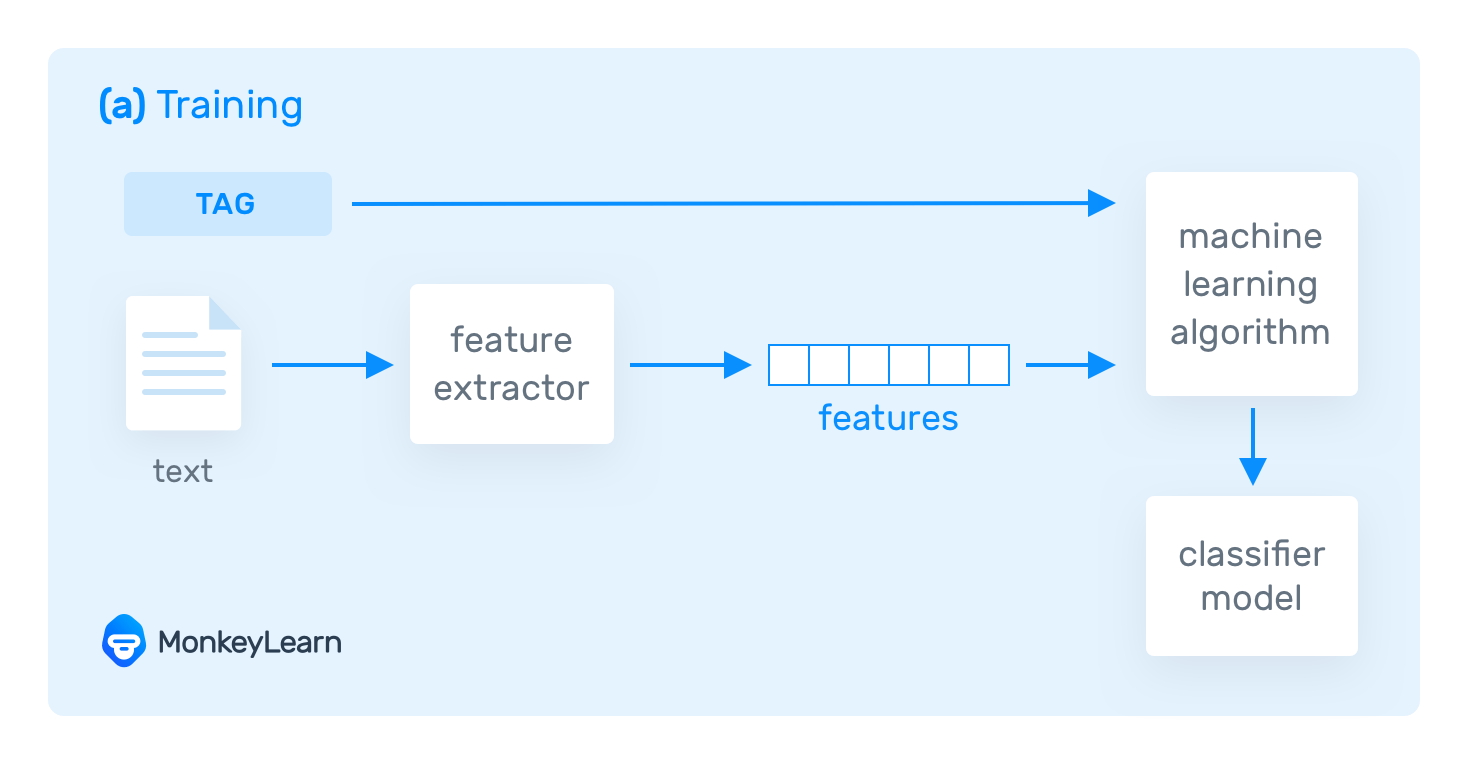

Machine learning-based systems are trained to make predictions based on examples. This means that a person needs to provide representative and consistent samples and assign the expected tags manually so that the system learns to make its own predictions from those past observations. The collection of manually tagged data is called training data.

But how does machine learning actually work?

Suppose you are training a machine learning-based classifier. The system needs to transform the training data into something it can understand: in this case, vectors (arrays of numbers that encode the data). Each vector captures a set of relevant features from the given text, which the algorithm uses to learn and to make predictions on future data.

One of the most common methods for text vectorization is called bag of words and consists of counting how many times a particular word (from a predetermined list of words) appears in the text you want to analyze.
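A minimal bag-of-words sketch follows. It assumes the ‘predetermined list of words’ is simply every distinct token in the training texts, sorted alphabetically. Word order is discarded, and words outside the vocabulary are ignored.

```python
import re

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def build_vocabulary(texts):
    """The predetermined word list: every distinct training token, sorted."""
    return sorted({tok for text in texts for tok in tokenize(text)})

def bag_of_words(text, vocabulary):
    """Count how often each vocabulary word appears in the text."""
    counts = {word: 0 for word in vocabulary}
    for tok in tokenize(text):
        if tok in counts:  # unknown words are ignored
            counts[tok] += 1
    return [counts[word] for word in vocabulary]

training = ["the price is great", "great support, great price"]
vocab = build_vocabulary(training)
print(vocab)                                              # ['great', 'is', 'price', 'support', 'the']
print(bag_of_words("great great price, awful app", vocab))  # [2, 0, 1, 0, 0]
```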

So, the text is transformed into vectors and fed into a machine learning algorithm along with its expected tags, creating a text classification model:

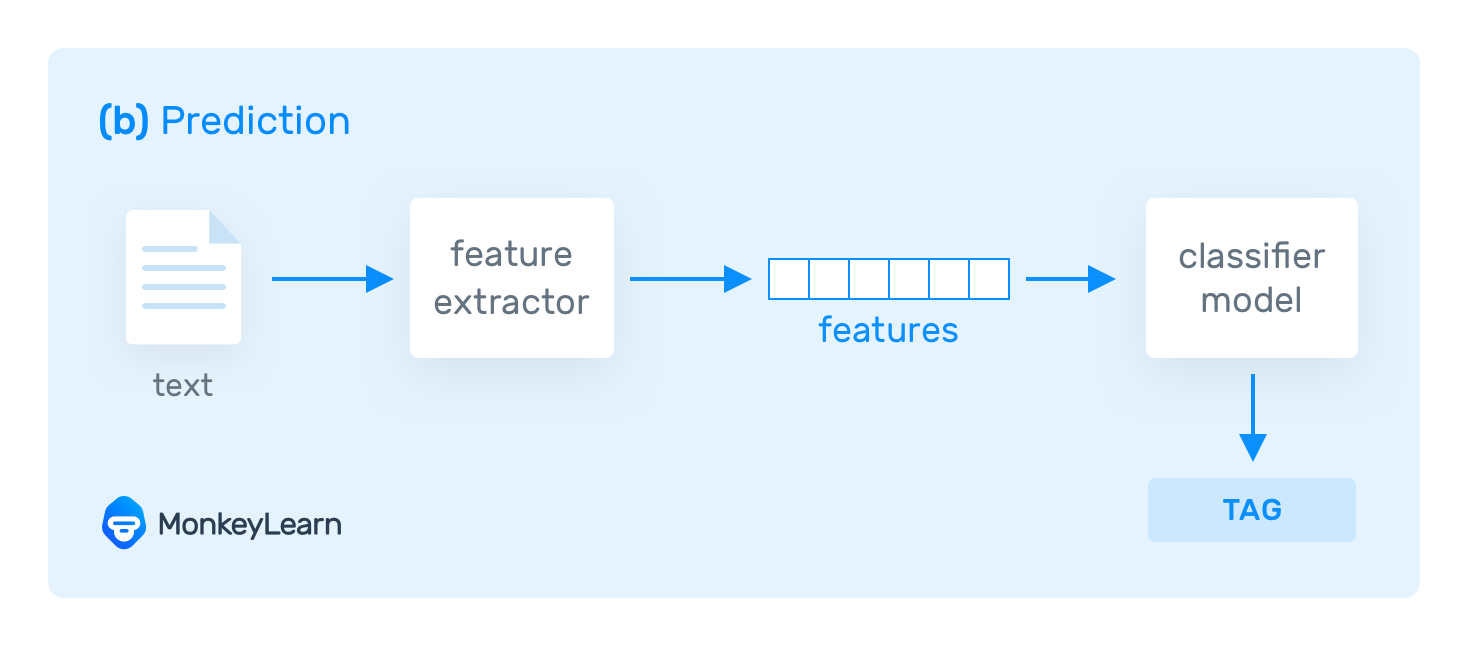

After being trained, the model can make predictions on unseen data.

Machine learning algorithms

The most common algorithms used in text classification are the Naive Bayes family of algorithms (NB), Support Vector Machines (SVM), and deep learning algorithms.

The Naive Bayes family of algorithms (NB) consists of probabilistic algorithms that use Bayes’ theorem to calculate the probability of each tag for a given text. They then return the tag with the highest probability. These algorithms can provide good results even when training data is scarce.
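To show the mechanics, here is a minimal multinomial Naive Bayes classifier written from scratch with add-one (Laplace) smoothing. It works in log space to avoid numeric underflow. The training tickets and tags are made up for illustration. Production systems would normally use a library implementation such as scikit-learn’s `MultinomialNB`.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

class NaiveBayes:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, tags):
        self.tag_counts = Counter(tags)
        self.word_counts = defaultdict(Counter)  # tag -> word -> count
        for text, tag in zip(texts, tags):
            self.word_counts[tag].update(tokenize(text))
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def log_scores(self, text):
        total_docs = sum(self.tag_counts.values())
        scores = {}
        for tag, doc_count in self.tag_counts.items():
            # log P(tag) + sum of log P(word | tag) over the words in the text
            score = math.log(doc_count / total_docs)
            counts = self.word_counts[tag]
            denom = sum(counts.values()) + len(self.vocab)
            for word in tokenize(text):
                if word in self.vocab:  # words never seen in training are skipped
                    score += math.log((counts[word] + 1) / denom)
            scores[tag] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)

train_texts = [
    "I was charged twice on my credit card",
    "refund my payment please",
    "the app crashes when I log in",
    "cannot log in, password reset fails",
]
train_tags = ["Payment Issues", "Payment Issues", "Login Issues", "Login Issues"]
model = NaiveBayes().fit(train_texts, train_tags)
print(model.predict("my card payment was declined"))  # Payment Issues
```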

Support Vector Machines (SVM) is a machine learning algorithm that divides vectors into two groups by finding the boundary (a hyperplane) that separates them with the widest possible margin, usually in a high-dimensional space. In one group, you have vectors that belong to a given tag, and in the other group, vectors that don’t belong to that tag. Using this algorithm requires more coding skills, but the results are usually better than those obtained with Naive Bayes.
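Below is a minimal sketch of a linear SVM for a single tag (+1 = belongs to the tag, -1 = does not), trained with Pegasos-style sub-gradient descent on the regularized hinge loss over bag-of-words counts. To keep it short, it omits the bias term and the optional projection step, and the training texts are made up. Libraries such as scikit-learn (`LinearSVC`) are the practical choice. Multi-tag problems are commonly handled by training one such classifier per tag (one-vs-rest).

```python
import re

def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())

def vectorize(text, vocab):
    """Bag-of-words count vector over a fixed vocabulary (word -> column index)."""
    x = [0.0] * len(vocab)
    for tok in tokenize(text):
        if tok in vocab:
            x[vocab[tok]] += 1.0
    return x

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def train_linear_svm(texts, labels, epochs=50, lam=0.01):
    """Pegasos-style training; labels are +1 (has the tag) or -1 (does not)."""
    vocab = {}
    for text in texts:
        for tok in tokenize(text):
            vocab.setdefault(tok, len(vocab))
    xs = [vectorize(t, vocab) for t in texts]
    w = [0.0] * len(vocab)
    step = 0
    for _ in range(epochs):
        for x, y in zip(xs, labels):
            step += 1
            eta = 1.0 / (lam * step)           # decreasing learning rate
            violated = y * dot(w, x) < 1       # inside the margin or misclassified
            w = [(1 - eta * lam) * wi for wi in w]  # regularization shrinks weights
            if violated:
                w = [wi + eta * y * xi for wi, xi in zip(w, x)]
    return vocab, w

def predict(model, text):
    vocab, w = model
    return 1 if dot(w, vectorize(text, vocab)) > 0 else -1

texts = ["refund my payment", "charged twice on my card",
         "app crashes on login", "password reset fails"]
labels = [1, 1, -1, -1]  # +1 = Payment Issues, -1 = not Payment Issues
model = train_linear_svm(texts, labels)
print(predict(model, "refund please"), predict(model, "password problem"))  # 1 -1
```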

Deep learning algorithms are loosely inspired by the way the human brain works. They generate very rich representations of texts, which can lead to much more accurate predictions than other machine learning algorithms. The downside is that they typically need vast amounts of training data (often millions of examples) to provide accurate results, and they require intensive coding.

Hybrid systems

These systems combine rule-based systems and machine learning-based systems to obtain more accurate predictions.
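Hybrid systems can be wired together in several ways. One common arrangement, sketched below under that assumption, lets hand-written rules decide whenever one fires and defers to a trained model otherwise. The rule, the `hybrid_classifier` helper, and `stand_in_model` (a placeholder for a real model’s predict function) are all hypothetical.

```python
import re
from typing import Callable

def hybrid_classifier(rules, fallback: Callable[[str], str]):
    """Apply hand-written rules first; if none fires, defer to a learned model."""
    def classify(text):
        for pattern, tag in rules:
            if re.search(pattern, text, re.IGNORECASE):
                return tag
        return fallback(text)
    return classify

# Hypothetical high-precision rule.
rules = [(r"\b(refund|chargeback)\b", "Payment Issues")]

def stand_in_model(text):
    """Placeholder for a trained model's predict function."""
    return "Login Issues" if "log" in text.lower() else "Other"

classify = hybrid_classifier(rules, stand_in_model)
print(classify("I want a refund"), classify("Can't log in"))
```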

Evaluation

There are several metrics for evaluating the performance of a text classifier: accuracy, precision, recall, and F1 score.

You can measure how well your text classifier works by comparing its predictions against a fixed testing set (that is, a group of data that already includes its expected tags) or by using cross-validation, a process that splits your training data into several groups, trains the model on some of them, and tests it on the rest.

Let’s go into more detail about each of these metrics:

Accuracy: this is the number of correct predictions that the text classifier makes divided by the total number of predictions. However, accuracy alone is not the best metric for analyzing the performance of a text classifier. When the number of examples per category is imbalanced (for example, when most of the data belongs to one category), you may experience the accuracy paradox: a model with high accuracy that is not necessarily able to make accurate predictions for all tags. In this case, it’s better to look at precision, recall, and F1 score.

Precision: this metric indicates the number of correct predictions for a given tag, divided by the total number of predictions (correct and incorrect) for that tag. A high precision level indicates there were fewer false positives. For some tasks, like sending automated email responses, you will need text classification models with a high level of precision, which only deliver an answer when it’s highly likely that the message belongs to a given tag.

Recall: it shows the number of correct predictions for a given tag over the total number of examples that actually belong to that tag. High recall indicates there were fewer false negatives. If you are routing support tickets, for example, it means that most tickets will reach the right teams instead of being missed.

F1 score: this metric combines precision and recall (it is their harmonic mean) and gives an idea of how well your text classifier is working overall. It allows you to see how accurate your model is across all the tags you’re using.
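The four metrics can be computed in a few lines. This minimal sketch uses a hypothetical `evaluate` helper and follows the common convention of reporting 0.0 when a ratio has a zero denominator. The example reproduces the accuracy paradox described above: a model that never predicts the rare tag still scores 90% accuracy.

```python
def evaluate(true_tags, predicted_tags):
    """Return accuracy plus per-tag precision, recall and F1."""
    if len(true_tags) != len(predicted_tags) or not true_tags:
        raise ValueError("need two non-empty lists of equal length")
    pairs = list(zip(true_tags, predicted_tags))
    report = {"accuracy": sum(t == p for t, p in pairs) / len(pairs)}
    for tag in sorted(set(true_tags) | set(predicted_tags)):
        tp = sum(t == tag and p == tag for t, p in pairs)  # correctly predicted as tag
        fp = sum(t != tag and p == tag for t, p in pairs)  # wrongly predicted as tag
        fn = sum(t == tag and p != tag for t, p in pairs)  # tag missed
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[tag] = {"precision": precision, "recall": recall, "f1": f1}
    return report

# Imbalanced data: 9 'Other' tickets, 1 'Urgent' one, and a model that always says 'Other'.
true = ["Other"] * 9 + ["Urgent"]
pred = ["Other"] * 10
report = evaluate(true, pred)
print(report["accuracy"], report["Urgent"])
# 0.9 {'precision': 0.0, 'recall': 0.0, 'f1': 0.0}
```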

Cross-validation

Cross-validation is a method used to measure the performance of a text classifier model. It consists of randomly splitting the training dataset into a number of equal-sized subsets. For instance, let’s imagine you have four subsets, each containing 25% of your training data.

All of those subsets except one are used to train the text classifier. Then, the classifier is used to make predictions over the remaining subset. After this, you need to compile all the metrics we mentioned before (accuracy, precision, recall, and F1 score), and start the process all over again, until all the subsets have been used for testing. Finally, all the results are compiled to obtain the average performance of each metric.
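The procedure above is k-fold cross-validation, with k = 4 in the example. The minimal sketch below accepts any training function that returns a predict function, plus any metric. The majority-class baseline and the toy data are hypothetical stand-ins for a real classifier and dataset.

```python
import random
from collections import Counter

def k_fold_indices(n_items, k=4, seed=0):
    """Shuffle item indices and split them into k folds of (nearly) equal size."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def cross_validate(texts, tags, train_fn, metric_fn, k=4, seed=0):
    """Train on k-1 folds, score the held-out fold, repeat k times, average the scores."""
    folds = k_fold_indices(len(texts), k, seed)
    scores = []
    for held_out in folds:
        held = set(held_out)
        train_idx = [i for i in range(len(texts)) if i not in held]
        predict = train_fn([texts[i] for i in train_idx], [tags[i] for i in train_idx])
        predictions = [predict(texts[i]) for i in held_out]
        scores.append(metric_fn([tags[i] for i in held_out], predictions))
    return sum(scores) / len(scores)

def train_majority(texts, tags):
    """Hypothetical baseline: always predict the most common training tag."""
    majority = Counter(tags).most_common(1)[0][0]
    return lambda text: majority

def accuracy(true, pred):
    return sum(t == p for t, p in zip(true, pred)) / len(true)

texts = [f"ticket {i}" for i in range(8)]
tags = ["A"] * 8
print(cross_validate(texts, tags, train_majority, accuracy))  # 1.0
```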

Text Extraction

Text extraction is the process of identifying specific pieces of text in unstructured data. This is very useful for a variety of purposes, from extracting company names from a LinkedIn dataset to pulling out prices from product descriptions.

Text extraction allows you to automatically locate the relevant terms or expressions without having to read or scan all the text yourself. This is particularly valuable when you have massive databases, which would otherwise take ages to analyze manually.

There are different approaches to text extraction. Here, we’ll refer to the most commonly used and reliable:

Regular expressions

Regular expressions are similar to rules for text classification models. They can be defined as a series of characters that define a pattern.

Every time the text extractor detects a match with a pattern, it assigns the corresponding tag.

This approach allows you to create text extractors quickly and with good results, as long as you find the right patterns for the data you want to analyze. However, as the patterns grow more complex, they can become hard to manage and scale.
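Here is a minimal regex-based extractor for two common targets, email addresses and prices. Both patterns are simplified assumptions. The email pattern handles everyday addresses but is not a full RFC 5322 validator, and the price pattern assumes US-dollar formatting.

```python
import re

# Simplified email pattern: fine for everyday text, not a full RFC 5322 validator.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Prices like $1,299.99 or $15 (assumes US-dollar formatting).
PRICE = re.compile(r"\$\d{1,3}(?:,\d{3})*(?:\.\d{2})?")

def extract(text):
    """Return every email address and price found in the text."""
    return {"email": EMAIL.findall(text), "price": PRICE.findall(text)}

sample = "Contact sales@example.com or jane.doe@example.org. The Pro plan costs $1,299.99; Basic is $15."
print(extract(sample))
# {'email': ['sales@example.com', 'jane.doe@example.org'], 'price': ['$1,299.99', '$15']}
```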

Conditional Random Fields

Conditional Random Fields (CRF) is a statistical approach used for text extraction with machine learning. It identifies different patterns by assigning a weight to each of the word sequences within a text. CRFs also allow you to create additional features related to the patterns, based on syntactic or semantic information.

This approach creates more complex and richer patterns than regular expressions and can encode a large volume of information. However, if you want to train the text extractor properly, you will need to have in-depth NLP and computing knowledge.

Evaluation

You can use the same performance metrics that we mentioned for text classification (accuracy, precision, recall, and F1 score), although these metrics only consider exact matches as positive results, leaving partial matches aside.

If you want partial matches to be included in the results, you should use a family of metrics called ROUGE (Recall-Oriented Understudy for Gisting Evaluation). These metrics count the overlapping units, such as n-grams or word sequences, between the extraction performed by the model and the expected extraction.

The parameters used to compare these two texts need to be defined manually. You may define ROUGE-N metrics (where N is the length of the n-grams you want to compare) or ROUGE-L metrics (which compare the longest common subsequence).
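The sketch below shows how partial matches earn credit. It implements ROUGE-N as recall with clipped n-gram counts, and ROUGE-L as an F-measure from the longest common subsequence (LCS) using equal weighting of precision and recall, one of several variants in use. It splits on whitespace, which is an assumption, and the example pair is illustrative.

```python
from collections import Counter

def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def rouge_n(candidate, reference, n=1):
    """Share of the reference's n-grams that also appear in the candidate (clipped counts)."""
    cand = ngram_counts(candidate.split(), n)
    ref = ngram_counts(reference.split(), n)
    total = sum(ref.values())
    overlap = sum((cand & ref).values())  # '&' keeps the minimum count per n-gram
    return overlap / total if total else 0.0

def lcs_length(a, b):
    """Length of the longest common subsequence, by dynamic programming."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b):
            curr.append(prev[j] + 1 if x == y else max(prev[j + 1], curr[j]))
        prev = curr
    return prev[-1]

def rouge_l(candidate, reference):
    """ROUGE-L F-measure from LCS-based precision and recall (equal weighting)."""
    c, r = candidate.split(), reference.split()
    lcs = lcs_length(c, r)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(c), lcs / len(r)
    return 2 * precision * recall / (precision + recall)

# The model extracted "Hewlett Packard"; the expected extraction was "Hewlett Packard Enterprise".
print(rouge_n("Hewlett Packard", "Hewlett Packard Enterprise"))
print(rouge_l("Hewlett Packard", "Hewlett Packard Enterprise"))
```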

Use Cases and Applications

Automated textual analysis is the process of obtaining meaningful information from raw data. Considering that unstructured data is estimated to make up around 80% of the existing information in the digital world, it’s easy to understand why this brings outstanding opportunities for businesses, organizations, and academic researchers.

For companies, it is now possible to obtain real-time insights on how their users feel about their products and make better business decisions based on data. Shifting to a data-driven approach is one of the main challenges of businesses today.

Textual analysis has many exciting applications across different areas of a company, like customer service, marketing, product, or sales. By allowing the automation of specific tasks that used to be manual, textual analysis is helping teams become more productive and efficient, and allowing them to focus on areas where they can add real value.

In the academic research field, computer-assisted textual analysis (mainly machine learning-based models) is expanding the horizons of investigation by providing new ways of processing, classifying, and obtaining relevant data.

In this section, we’ll describe the most significant applications related to customer service, customer feedback, and academic research.

Customer Service

It’s not all about having an amazing product or investing a lot of money in advertising. What really tips the balance when it comes to business success is providing high-quality customer service. One frequently cited statistic claims that 70% of the customer journey is defined by how people feel they are being treated.

So, how can textual analysis help companies deliver a better customer service experience?

Automatically tag support tickets

Every time a customer sends a request, comment, or complaint, there’s a new support ticket to be processed. Customer support teams need to categorize every incoming message based on its content, a routine task that can be boring, time-consuming, and inconsistent if done manually.

Textual analysis with machine learning allows you to automatically identify the topic of each support ticket and tag it accordingly. How does it work?

- First, a person defines a set of categories and trains a classifier model by applying the appropriate tags to a number of representative samples.

- The model analyzes the words and expressions used in each new ticket (for example, ‘I’m having problems when paying with my credit card’) and compares them with the examples it learned from.

- Finally, it automatically tags the ticket according to its content. In this case, the ticket would be tagged as Payment Issues.

Automatically route and triage support tickets

Once support tickets are tagged, they need to be routed to the team in charge of that issue. Machine learning enables teams to send a ticket to the right person in real time, based on the ticket’s topic, language, or complexity. For example, a ticket previously tagged as Payment Issues will be automatically routed to the Billing Area.

Detect the urgency of a ticket

A simple task, like being able to prioritize tickets based on their urgency, can have a substantial positive impact on your customer service. By analyzing the content of each ticket, a textual analysis model can let you assess which of them are more critical and prioritize accordingly. For instance, a ticket containing the words or expressions ‘as soon as possible’ or ‘immediately’ would be automatically classified as Urgent.
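Routing and urgency detection can be combined in a small triage step that runs after a ticket has been tagged. This minimal sketch uses the keyword rule described above for urgency and a lookup table for routing. The cue list, the team names other than Billing Area, and the `triage` helper are hypothetical.

```python
import re

# Hypothetical urgency cues and tag-to-team routing table.
URGENT_CUES = re.compile(r"\b(as soon as possible|asap|immediately|urgent)\b", re.IGNORECASE)
ROUTES = {"Payment Issues": "Billing Area", "Login Issues": "Technical Support"}

def triage(ticket_text, tag):
    """Return (team, priority) for a ticket that a classifier has already tagged."""
    team = ROUTES.get(tag, "General Support")  # unknown tags go to a default queue
    priority = "Urgent" if URGENT_CUES.search(ticket_text) else "Normal"
    return team, priority

print(triage("My card was charged twice, please fix this immediately", "Payment Issues"))
# ('Billing Area', 'Urgent')
```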

Get insights from ticket analytics

The performance of customer service teams is usually measured by KPIs, like first response time, average resolution time, and customer satisfaction (CSAT).

Textual analysis algorithms can be used to analyze the different interactions between customers and the customer service area, like chats, support tickets, emails, and customer satisfaction surveys.

You can use aspect-based sentiment analysis to understand the main topics discussed by your customers and how they feel about those topics. For example, you may have a lot of mentions referring to the topic ‘UI/UX’. But are all those customers’ opinions positive, negative, or neutral? This type of analysis can give you a more accurate perspective on what they think about your product and a deeper understanding of overall customer satisfaction.

Customer Feedback

Listening to the Voice of Customer (VoC) is critical to understand the customers’ expectations, experience and opinion about your brand. Two of the most common tools to monitor and examine customer feedback are customer surveys and product reviews.

By analyzing customer feedback data, companies can detect areas for improvement, spot product flaws, get a better understanding of their customers’ needs, and measure their level of satisfaction, among many other things.

But how do you process and analyze tons of reviews or thousands of customer surveys? Here are some ideas of how you can use textual analysis algorithms to analyze different kinds of customer feedback:

Analyze NPS Responses

Net Promoter Score (NPS) is one of the most popular tools for measuring customer satisfaction. The first part of the survey asks customers to give the brand a score from 0 to 10 based on the question: 'How likely is it that you would recommend [brand] to a friend or colleague?' The results allow you to classify your customers as promoters, passives, or detractors.
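The score itself is simple arithmetic. Under the standard NPS definition, 9–10 counts as a promoter, 7–8 as a passive, and 0–6 as a detractor, and the score is the percentage of promoters minus the percentage of detractors. The sketch below applies that definition to made-up responses.

```python
def nps_segment(score):
    """Standard NPS buckets: 9-10 promoters, 7-8 passives, 0-6 detractors."""
    if not 0 <= score <= 10:
        raise ValueError("NPS scores range from 0 to 10")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"

def net_promoter_score(scores):
    """Percentage of promoters minus percentage of detractors (-100 to 100)."""
    segments = [nps_segment(s) for s in scores]
    promoters = segments.count("promoter")
    detractors = segments.count("detractor")
    return 100 * (promoters - detractors) / len(scores)

# 5 promoters, 2 passives, 3 detractors
print(net_promoter_score([10, 9, 9, 8, 7, 6, 3, 10, 5, 9]))  # 20.0
```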

Then, there’s a follow-up question asking about the reasons behind that score. These open-ended responses often provide the most insightful information about your company. At the same time, they are the most complex data to process. Yes, you could read and tag each response manually, but what if there are thousands of them?

Textual analysis with machine learning enables you to detect the main topics that your customers are referring to, and even extract the most relevant keywords related to those topics. To make the most of your data, you could also perform sentiment analysis and find out if your customers are talking about a given topic positively or negatively.

Analyze Customer Surveys

Besides NPS, textual analysis algorithms can help you analyze all sorts of customer surveys. Using a text classification model to tag your responses can save you a lot of valuable time and resources while giving you consistent results.

Analyze Product Reviews

Product reviews are a significant factor when buying a product. Prospective buyers read at least 10 reviews before feeling they can trust a local business, and that’s just one of the (many) reasons why you should keep a close eye on what people are saying about your brand online.

Analyzing product reviews can give you an idea of what people love and hate the most about your product and service. It can provide useful insights and opportunities for improvement. And it can show you what to do to get one step ahead of your competition.

The truth is that going through pages and pages of product reviews is not a very exciting task. Categorizing all those opinions can take teams hours and in the end, it becomes an expensive and unproductive process. That’s why automated textual analysis is a game-changer.

Imagine you want to analyze a set of reviews of your SaaS company on G2 Crowd. A textual analysis model will allow you to tag each review based on topic, like Ease of Use, Price, UI/UX, and Integrations. You could also run sentiment analysis to discover how your customers feel about those topics: do they think the price is fair or too expensive? Do they find the product too complex or easy to use?

Thanks to textual analysis algorithms, you can get powerful information to help you make data-driven decisions, and empower your teams to be more productive by reducing manual tasks to a minimum.

Academic Research

What if you were able to sift through tons of papers and journals, and discover data that is relevant to your research in just seconds? Just imagine if you could easily classify years of news articles and extract meaningful keywords from them, or analyze thousands of tweets after a significant political change.

Even though machine learning applications in business and science seem to be more frequent, social science research is also benefiting from ML to perform tasks related to the academic world.

Social science researchers need to deal with vast volumes of unstructured data. Therefore, one of the major opportunities provided by computer-assisted textual analysis is being able to classify data, extract relevant information, or identify different groups in extensive collections of data.

Another application of textual analysis with machine learning is supporting the coding process. Coding is one of the early steps of any qualitative textual analysis. It involves a detailed examination of the material you want to analyze in order to become familiar with the data. When done manually, this task can be very time-consuming and is often inaccurate or inconsistent. Fortunately, machine learning algorithms (like text classifier models) can help you do this in very little time and allow you to scale up the coding process easily.

Finally, using machine learning algorithms to scan large amounts of papers, databases, and journal articles can lead to new investigation hypotheses.

Final Words

In a world overloaded with data, textual analysis with machine learning is a powerful tool that enables you to make sense of unstructured information and find what’s relevant in just seconds.

With promising use cases across many fields, from marketing to social science research, machine learning algorithms are far from being a niche technology available only to a few. Moreover, they are turning into user-friendly applications that workers with little or no coding skills can master.

Thanks to text analysis models, teams are becoming more productive by being released from manual and routine tasks that used to take valuable time from them. At the same time, companies can make better decisions based on valuable, real-time insights obtained from data.

By now, you probably have an idea of what textual analysis with machine learning is and how you can use it to make your everyday tasks more efficient and straightforward. Ready to get started? MonkeyLearn makes it very simple to take your first steps. Just contact us and get a personalized demo from one of our experts!